数据预处理流程

在搜索语法时,您是否因为破坏数据分析流而感到沮丧?为什么你在第三次查找之后仍然不记得它?这是因为你还没有足够的练习来为它建立肌肉记忆。

现在,想象一下,当您编写代码时,Python语法和函数会根据您的分析思路从指尖飞出。那太棒了!本教程旨在帮助您实现目标。

我建议每天早上练习这个剧本10分钟,并重复一个星期。这就像每天做一些小小的仰卧起坐 - 不是为了你的腹肌,而是为了你的数据科学肌肉。逐渐地,您会注意到重复培训后数据分析编程效率的提高。

从我的“数据科学训练”开始,在本教程中,我们将练习最常用的数据预处理语法作为预热会话;)

目录

0.读取,查看和保存数据

首先,为我们的练习加载库:

# 1.Load libraries #

import pandas as pd

import numpy as np现在我们将从我的GitHub存储库中读取数据。我从Zillow下载了数据。

file_dir = "https://raw.githubusercontent.com/zhendata/Medium_Posts/master/City_Zhvi_1bedroom_2018_05.csv"

# read csv file into a Pandas dataframe

raw_df = pd.read_csv(file_dir)

# check first 5 rows of the file

raw_df.head(5)

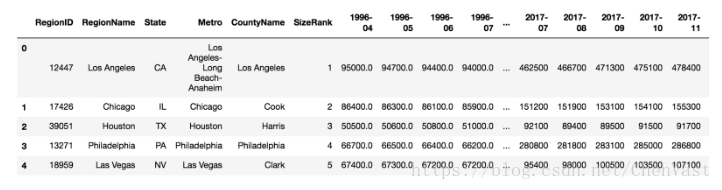

# use raw_df.tail(5) to see last 5 rows of the file结果如下:

保存文件是dataframe.to_csv()。如果您不想保存索引号,请使用dataframe.to_csv(index = False)。

1。表的维度和数据类型

1.1尺寸

这个数据中有多少行和列?

raw_df.shape

# the results is a vector: (# of rows, # of cols)

# Get the number of rows

print(raw_df.shape[0])

# column is raw_df.shape[1]1.2数据类型

您的数据的数据类型是什么,有多少列是数字的?

# Check the data types of the entire table's columns

raw_df.dtypes

# Check the data type of a specific column

raw_df['RegionID'].dtypes

# result: dtype('int64')输出前几列的数据类型:

如果您想更加具体地了解数据,请使用select_dtypes()来包含或排除数据类型。问:如果我只想查看2018的数据,我该如何获得?

2.基本列操作

2.1按列子集数据

按数据类型选择列:

# if you only want to include columns of float data

raw_df.select_dtypes(include=['float64'])

# Or to get numerical columns by excluding objects (non-numeric)

raw_df.select_dtypes(exclude=['object'])

# Get a list of all numerical column names #

num_cols = raw_df.select_dtypes(include=[np.number]).columns.tolist()例如,如果您只想要float和integer列:

按名称选择和删除列:

# select a subset of columns by names

raw_df_info = raw_df[['RegionID', 'RegionName', 'State', 'Metro', 'CountyName']]

# drop columns by names

raw_df_sub = raw_df_info.drop(['RegionID','RegionName'],axis=1)

raw_df_sub.head(5)2.2重命名列

如果我不喜欢它们,如何重命名列?例如,将“State”更改为“state_”; '城市'到'city_':

# Change column names #

raw_df_renamed1 = raw_df.rename(columns= {'State':'state_', 'City':'city_})

# If you need to change a lot of columns: this is easy for you to map the old and new names

old_names = ['State', 'City']

new_names = ['state_', 'city_']

raw_df_renamed2 = raw_df.rename(columns=dict(zip(old_names, new_names))3.空值:查看,删除和估算

3.1有多少行和列有空值?

# 1. For each column, are there any NaN values?

raw_df.isnull().any()

# 2. For each column, how many rows are NaN?

raw_df.isnull().sum()

# the results for 1&2 are shown in the screenshot below this block

# 3. How many columns have NaNs?

raw_df.isnull().sum(axis=0).count()

# the result is 271.

# axis=0 is the default for operation across rows, so raw_df.isnull().sum().count() yields the same result

# 4. Similarly, how many rows have NaNs?

raw_df.isnull().sum(axis=1).count()

# the result is 1324isnull.any()与isnull.sum()的输出:

选择一列中不为空的数据,例如,“Metro”不为空。

raw_df_metro = raw_df[pd.notnull(raw_df['Metro'])]

# If we want to take a look at what cities have null metros

raw_df[pd.isnull(raw_df['Metro'])].head(5)3.2为固定的一组列选择非空行

选择2000之后没有null的数据子集:

如果要在7月份选择数据,则需要找到包含“-07”的列。要查看字符串是否包含子字符串,可以在字符串中使用子字符串,并输出true或false。

# Drop NA rows based on a subset of columns: for example, drop the rows if it doesn't have 'State' and 'RegionName' info

df_regions = raw_df.dropna(subset = ['State', 'RegionName'])

# Get the columns with data available after 2000: use <string>.startwith("string") function #

cols_2000= [x for x in raw_df.columns.tolist() if '2000-' in x]

raw_df.dropna(subset=cols_2000).head(5)3.3 Null值的子集行

选择我们希望拥有至少50个非NA值的行,但不需要特定于列:

# Drop the rows where at least one columns is NAs.

# Method 1:

raw_df.dropna()

#It's the same as df.dropna(axis='columns', how = 'all')

# Method 2:

raw_df[raw_df.notnull()]

# Only drop the rows if at least 50 columns are Nas

not_null_50_df = raw_df.dropna(axis='columns', thresh=50)3.4删除和估算缺失值

填写NA或估算NA:

#fill with 0:

raw_df.fillna(0)

#fill NA with string 'missing':

raw_df['State'].fillna('missing')

#fill with mean or median:

raw_df['2018-01'].fillna((raw_df['2018-01'].mean()),inplace=True)

# inplace=True changes the original dataframe without assigning it to a column or dataframe

# it's the same as raw_df['2018-01']=raw_df['2018-01'].fillna((raw_df['2018-01'].mean()),inplace=False)

使用where函数填充自己的条件:

# fill values with conditional assignment by using np.where

# syntax df['column_name'] = np.where(statement, A, B) #

# the value is A is the statement is True, otherwise it's B #

# axis = 'columns' is the same as axis =1, it's an action across the rows along the column

# axis = 'index' is the same as axis= 0;

raw_df['2018-02'] = np.where(raw_df['2018-02'].notnull(), raw_df['2018-02'], raw_df['2017-02'].mean(), axis='columns')

4.重复数据删除

在汇总数据或加入数据之前,我们需要确保没有重复的行。

我们想看看是否有任何重复的城市/地区。我们需要确定我们想要在分析中使用哪个唯一ID(城市,地区)。

# Check duplicates #

raw_df.duplicated()

# output True/False values for each column

raw_df.duplicated().sum()

# for raw_df it's 0, meaning there's no duplication

# Check if there's any duplicated values by column, output is True/False for each row

raw_df.duplicated('RegionName')

# Select the duplicated rows to see what they look like

# keep = False marks all duplicated values as True so it only leaves the duplicated rows

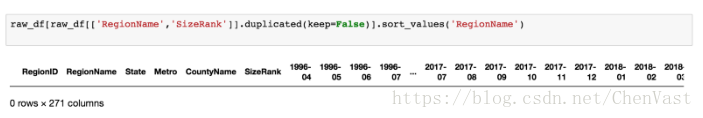

raw_df[raw_df['RegionName'].duplicated(keep=False)].sort_values('RegionName')IF set keep = False:

删除重复的值。

'CountyName'和'SizeRank'组合已经是唯一的。所以我们只使用列来演示drop_duplicated的语法。

# Drop duplicated rows #

# syntax: df.drop_duplicates(subset =[list of columns], keep = 'first', 'last', False)

unique_df = raw_df.drop_duplicates(subset = ['CountyName','SizeRank'], keep='first')这就是我在Python系列中为数据科学构建肌肉记忆系列的第一部分。完整的脚本可以在这里找到。