Goal: train an estimator that scores red wine quality

step 1. Import packages

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split # split the dataset

from sklearn import preprocessing # preprocess the data

from sklearn.ensemble import RandomForestRegressor # random forest regression model

# for cross-validation

from sklearn.pipeline import make_pipeline

from sklearn.model_selection import GridSearchCV

# for evaluation

from sklearn.metrics import mean_squared_error, r2_score

# for saving the model

import joblib # sklearn.externals.joblib was removed in scikit-learn 0.23

step 2. Load the data

# Load red wine data

dataset_url = 'http://mlr.cs.umass.edu/ml/machine-learning-databases/wine-quality/winequality-red.csv'

data = pd.read_csv(dataset_url, sep=';')

Take a look at the loaded data:

print(data.head())

print(data.shape)

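print(data.describe())

The output shows that data is a 1599 x 12 table. Of its 12 columns, quality is the one we want to predict, and the other 11 features are all numeric, which makes later processing convenient. We can also see that the average quality of these wines is about 5.6, that the best wine scores 8.0, and so on.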

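step 3. Split the dataset

X is the data with the quality column removed, and y is the label in the supervised-learning sense.

train_test_split then divides the original data into a training set and a test set, holding out 20% of the data as the test set. Passing the same random_state (random seed) always reproduces the same split; it effectively identifies which pseudo-random sequence is used. stratify=y keeps the distribution of quality scores in both the training set and the test set close to that of the full dataset, so the two sets resemble each other more closely.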
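To make the effect of random_state and stratify=y concrete, here is a minimal sketch on synthetic labels. The 60/30/10 label mix and the helper class_shares are made up for illustration and are not part of the wine data.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced labels (a hypothetical stand-in for quality scores).
y = np.array([5] * 60 + [6] * 30 + [8] * 10)
X = np.arange(len(y)).reshape(-1, 1)


def class_shares(labels):
    """Return the fraction of samples per class, keyed by class label."""
    values, counts = np.unique(labels, return_counts=True)
    return {int(v): float(c) / len(labels) for v, c in zip(values, counts)}


# Same random_state twice: the split is reproduced exactly.
split_a = train_test_split(X, y, test_size=0.2, random_state=123, stratify=y)
split_b = train_test_split(X, y, test_size=0.2, random_state=123, stratify=y)
same_split = np.array_equal(split_a[1], split_b[1])

# With stratify=y the 20-sample test set keeps the 60/30/10 proportions.
test_shares = class_shares(split_a[3])
print(same_split, test_shares)
```

Without stratify=y, a rare score such as 8 could by chance be missing from the test set altogether.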

X = data.drop('quality', axis=1)

y = data.quality

X_train, X_test, y_train, y_test = train_test_split(X, y,
                                                    test_size=0.2, random_state=123, stratify=y)

step 4. Preprocess the data (standardization)

preprocessing has a function called scale(). Let's try it (we will not end up using it):

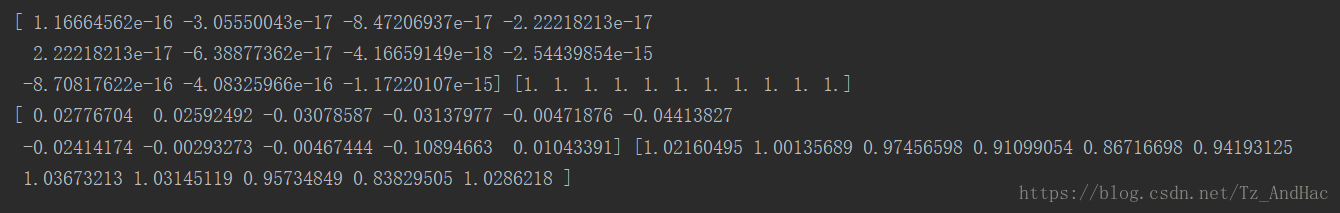

X_train_scaled = preprocessing.scale(X_train)

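print(X_train_scaled.mean(axis=0), X_train_scaled.std(axis=0))

After scaling, every column has mean 0 (values on the order of 1e-16 or 1e-17 are floating-point noise and are treated as 0) and a standard deviation (std) of 1. By now you probably have a feel for what standardization does.

So why can't we standardize with this function?

A: Here we standardized only X_train, so that every feature has mean 0 and std 1. We could of course call scale() again on X_test, but then X_test would also end up with mean 0 and std 1, computed from its own statistics. What we actually need is to apply to X_test exactly the same transformation that was applied to X_train. Scaling X_test on its own amounts to cheating with the test set, pushing it in the direction we would like it to go.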
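"Exactly the same transformation" means subtracting the training means and dividing by the training standard deviations. The sketch below spells that arithmetic out on synthetic data (the arrays and the shifted test mean are assumptions for illustration) and compares it with scaling the test set on its own.

```python
import numpy as np
from sklearn import preprocessing

rng = np.random.default_rng(0)
# Hypothetical data: the test set is drawn with a shifted mean.
X_train = rng.normal(loc=10.0, scale=2.0, size=(200, 3))
X_test = rng.normal(loc=12.0, scale=2.0, size=(50, 3))

# Statistics learned from the training set only.
train_mean = X_train.mean(axis=0)
train_std = X_train.std(axis=0)

# Consistent: apply the training statistics to the test set.
X_test_ok = (X_test - train_mean) / train_std

# Problematic: scale() recomputes mean and std from the test set itself.
X_test_own = preprocessing.scale(X_test)

# A StandardScaler fitted on X_train performs the same arithmetic as X_test_ok.
scaler = preprocessing.StandardScaler().fit(X_train)
matches_scaler = np.allclose(scaler.transform(X_test), X_test_ok)

print(X_test_ok.mean(axis=0))   # clearly non-zero: the shift is preserved
print(X_test_own.mean(axis=0))  # approximately 0: the shift has been erased
print(matches_scaler)
```

Scaling the test set with its own statistics hides the difference between the two sets, which is exactly the information an honest evaluation should keep.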

This is the code we will use instead:

# the scaler object has the saved means and standard deviations for each feature in the training set.

scaler = preprocessing.StandardScaler().fit(X_train)

X_train_scaled = scaler.transform(X_train)

print(X_train_scaled.mean(axis=0), X_train_scaled.std(axis=0))

X_test_scaled = scaler.transform(X_test)

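print(X_test_scaled.mean(axis=0), X_test_scaled.std(axis=0))

This is the result we want: the test set's means and stds will generally not be exactly 0 and 1.

However, standardizing this way means that every batch of data used for training or testing must first be passed through scaler by hand, which is inconvenient.

The usual approach is the following: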

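pipeline = make_pipeline(preprocessing.StandardScaler(),
                         RandomForestRegressor(n_estimators=100))

Here StandardScaler from preprocessing performs the standardization and a random forest model makes the predictions. n_estimators is the number of trees in the forest; its default was 10 in older scikit-learn releases and has been 100 since version 0.22.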
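As a rough illustration of what the pipeline does on fit and predict, and of where parameter names such as randomforestregressor__max_depth come from, here is a small sketch. The synthetic data, n_estimators=20 and random_state=0 are assumptions chosen to keep it fast and repeatable.

```python
import numpy as np
from sklearn import preprocessing
from sklearn.ensemble import RandomForestRegressor
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)
# Hypothetical regression data standing in for the wine features.
X_train = rng.normal(size=(80, 4))
y_train = X_train[:, 0] * 2.0 + rng.normal(scale=0.1, size=80)
X_new = rng.normal(size=(5, 4))

pipeline = make_pipeline(preprocessing.StandardScaler(),
                         RandomForestRegressor(n_estimators=20, random_state=0))

# fit() fits the scaler on X_train, transforms X_train, then fits the forest.
pipeline.fit(X_train, y_train)

# predict() re-applies the already fitted scaler before calling the forest.
predictions = pipeline.predict(X_new)

# make_pipeline names each step after its lowercased class name; these names
# are the prefixes in keys such as 'randomforestregressor__max_depth'.
step_names = list(pipeline.named_steps)
print(step_names, predictions.shape)
```

Because the scaler lives inside the pipeline, cross-validation refits it on each training fold, so statistics from held-out data never reach the model.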

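step 5. Tune hyperparameters

# 1.0 means "use all features"; it replaces 'auto', which recent scikit-learn releases no longer accept

hyperparameters = {'randomforestregressor__max_features': [1.0, 'sqrt', 'log2'],
                   'randomforestregressor__max_depth': [None, 5, 3, 1]}

clf = GridSearchCV(pipeline, hyperparameters, cv=10)

What is a hyperparameter? (Here they are tuned with grid-search cross-validation.)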

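Machine learning involves two kinds of parameters: model parameters (for example, the regression coefficients of a linear model) and hyperparameters. Model parameters are what we obtain by training the model, whereas hyperparameters usually have to be set before training. Examples include the learning rate, the maximum depth and number of trees in a random forest, and the number of hidden layers in a neural network.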
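The distinction can be seen directly on an estimator object. The sketch below uses Ridge, a linear model not used elsewhere in this tutorial, purely as an assumed example: alpha is set by us, while coef_ only appears after training.

```python
import numpy as np
from sklearn.linear_model import Ridge

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 2))
y = 3.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(scale=0.01, size=100)

# alpha is a hyperparameter: we choose it before training.
model = Ridge(alpha=1.0)
hyper_before_fit = model.get_params()["alpha"]
has_coef_before_fit = hasattr(model, "coef_")

# coef_ holds model parameters: they exist only once fit() has learned them.
model.fit(X, y)
learned_coef = model.coef_
print(hyper_before_fit, has_coef_before_fit, learned_coef)
```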

[Figure: illustration of grid-search cross-validation]
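Grid-search cross-validation can be pictured as two nested loops: one over every combination of hyperparameter values, and one over the cross-validation folds. The following sketch writes the outer loop by hand with cross_val_score; the synthetic data, the reduced grid, cv=5 and n_estimators=20 are assumptions to keep it quick, and it is meant as an approximation of the idea rather than a description of GridSearchCV's internals.

```python
from itertools import product

import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import cross_val_score

rng = np.random.default_rng(0)
X = rng.normal(size=(120, 5))
y = X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.1, size=120)

# A deliberately tiny grid so the sketch runs quickly.
grid = {"max_features": ["sqrt", "log2"], "max_depth": [None, 3]}

results = {}
for max_features, max_depth in product(grid["max_features"], grid["max_depth"]):
    model = RandomForestRegressor(n_estimators=20, max_features=max_features,
                                  max_depth=max_depth, random_state=0)
    # cv=5: train on 4 folds, score (R^2 by default) on the held-out fold,
    # and repeat so that each fold is held out once.
    scores = cross_val_score(model, X, y, cv=5)
    results[(max_features, max_depth)] = scores.mean()

# The combination with the best mean validation score wins.
best_combo = max(results, key=results.get)
print(best_combo, results[best_combo])
```

GridSearchCV automates this search and, with its default refit=True, retrains the best combination on the whole training set so the result can be used directly for prediction.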

step 6. Train the model:

clf.fit(X_train, y_train)

step 7. Evaluate:

y_pred = clf.predict(X_test)

print(r2_score(y_test, y_pred))

print(mean_squared_error(y_test, y_pred))

step 8. Save the model:

joblib.dump(clf, 'rf_regressor.pkl')

The complete code:

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn import preprocessing

from sklearn.ensemble import RandomForestRegressor

from sklearn.pipeline import make_pipeline

from sklearn.model_selection import GridSearchCV

from sklearn.metrics import mean_squared_error, r2_score

import joblib # replaces sklearn.externals.joblib, removed in scikit-learn 0.23

dataset_url = 'http://mlr.cs.umass.edu/ml/machine-learning-databases/wine-quality/winequality-red.csv'

data = pd.read_csv(dataset_url, sep=';')

X = data.drop('quality', axis=1)

y = data.quality

X_train, X_test, y_train, y_test = train_test_split(X, y,
                                                    test_size=0.2, random_state=123, stratify=y)

pipeline = make_pipeline(preprocessing.StandardScaler(),
                         RandomForestRegressor(n_estimators=100))

print(pipeline.get_params())

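# 1.0 means "use all features"; it replaces 'auto', which recent scikit-learn releases no longer accept

hyperparameters = {'randomforestregressor__max_features': [1.0, 'sqrt', 'log2'],
                   'randomforestregressor__max_depth': [None, 5, 3, 1]}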

clf = GridSearchCV(pipeline, hyperparameters, cv=10)

clf.fit(X_train, y_train)

y_pred = clf.predict(X_test)

print(r2_score(y_test, y_pred))

print(mean_squared_error(y_test, y_pred))

joblib.dump(clf, 'rf_regressor.pkl')
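A saved model is only useful if it can be loaded again. The sketch below shows a dump and load round trip, assuming the standalone joblib package is installed; the small forest and the temporary directory are stand-ins for the fitted clf and the real file location.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X[:, 0] + rng.normal(scale=0.1, size=50)

# Small stand-in model; in the tutorial this would be the fitted clf.
model = RandomForestRegressor(n_estimators=10, random_state=0).fit(X, y)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "rf_regressor.pkl")
    joblib.dump(model, path)
    # joblib.load returns the estimator ready for predict().
    restored = joblib.load(path)
    same_predictions = np.allclose(model.predict(X), restored.predict(X))

print(same_predictions)
```

Pickled estimators are generally tied to the scikit-learn version that produced them, so it is safest to load them in the same environment they were saved from.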