Copyright notice: please credit the source when reposting. https://blog.csdn.net/sinat_31425585/article/details/83243961

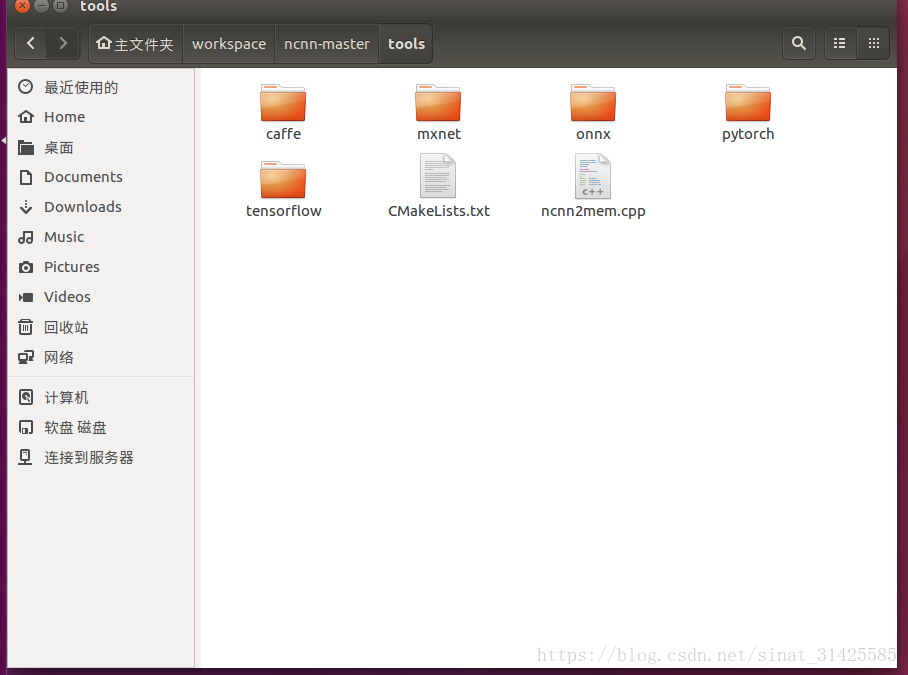

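ncnn is an excellent framework for deploying deep learning models. With ncnn, trained models can easily be deployed to mobile phones (Android or iOS), Linux, and PC. ncnn also ships tools that convert models from other frameworks into a format ncnn can read, as shown in Figure 1.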

Figure 1 Model conversion tools provided by ncnn

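These correspond to Caffe, MXNet, PyTorch, TensorFlow, and ONNX. They convert models trained in those frameworks into the form ncnn reads (a .param file describing the network structure and a .bin file holding the weights), after which the model can be deployed with ncnn.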
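Because the .param file is plain text, a converted model can be sanity-checked before it is ever loaded. The first line of an ncnn .param file is the magic number 7767517 and the second line gives the layer count and the blob count, followed by one line per layer. The sketch below checks only that header; `ParamHeader` and `read_param_header` are hypothetical helpers written for illustration, not part of ncnn.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical helper type (not part of ncnn): counts declared in a .param header.
struct ParamHeader
{
    int layer_count = 0;
    int blob_count = 0;
};

// Reads the first two lines of a .param file's text: the magic number 7767517,
// then "<layer_count> <blob_count>". Returns false if the magic number does not
// match or if the counts are missing or non-positive.
bool read_param_header(const std::string& text, ParamHeader& header)
{
    std::istringstream is(text);
    int magic = 0;
    if (!(is >> magic) || magic != 7767517)
        return false;
    if (!(is >> header.layer_count >> header.blob_count))
        return false;
    return header.layer_count > 0 && header.blob_count > 0;
}
```

To use it, read the whole .param file into a string and pass it in; a `false` result suggests the file is not an ncnn .param file (for example, a .prototxt passed by mistake) or that it was truncated.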

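The examples folder also includes several samples, such as squeezenet, mobilenet-v1, mobilenet-v2, and mobilefacenet. Write-ups for those are already available on GitHub, so here I take notes on yolov2 instead.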

The code is as follows:

```cpp
// Tencent is pleased to support the open source community by making ncnn available.
//
// Copyright (C) 2018 THL A29 Limited, a Tencent company. All rights reserved.
//
// Licensed under the BSD 3-Clause License (the "License"); you may not use this file except
// in compliance with the License. You may obtain a copy of the License at
//
// https://opensource.org/licenses/BSD-3-Clause
//
// Unless required by applicable law or agreed to in writing, software distributed
// under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR
// CONDITIONS OF ANY KIND, either express or implied. See the License for the
// specific language governing permissions and limitations under the License.

#include <stdio.h>
#include <vector>

#include <opencv2/core/core.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>

#include "ncnn/net.h" // or "net.h", depending on how the ncnn include directory is set up

struct Object
{
    cv::Rect_<float> rect; // box in original-image pixels
    int label;             // index into class_names (0 = background)
    float prob;            // confidence score
};

static int detect_yolov2(const cv::Mat& bgr, std::vector<Object>& objects)
{
    // Note: the network is loaded on every call; when processing many images,
    // load it once and reuse it.
    ncnn::Net yolov2;

    // mobilenet_yolo.param / mobilenet_yolo.bin correspond to MobileNet-YOLO:
    // https://github.com/eric612/MobileNet-YOLO
    // https://github.com/eric612/MobileNet-YOLO/blob/master/models/yolov2/mobilenet_yolo_deploy%20.prototxt
    // https://github.com/eric612/MobileNet-YOLO/blob/master/models/yolov2/mobilenet_yolo_deploy_iter_57000.caffemodel
    // load_param() and load_model() return 0 on success.
    if (yolov2.load_param("../model/mobilenet_yolo.param") != 0 ||
        yolov2.load_model("../model/mobilenet_yolo.bin") != 0)
    {
        fprintf(stderr, "failed to load ../model/mobilenet_yolo.param / .bin\n");
        return -1;
    }

    // Alternative: the original pretrained model from https://github.com/eric612/Caffe-YOLOv2-Windows
    // yolov2_deploy.prototxt
    // yolov2_deploy_iter_30000.caffemodel
    // https://drive.google.com/file/d/17w7oZBbTHPI5TMuD9DKQzkPhSVDaTlC9/view?usp=sharing

    const int target_size = 416;

    int img_w = bgr.cols;
    int img_h = bgr.rows;

    // Resize to 416x416 (aspect ratio is not preserved) and convert to ncnn::Mat.
    ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR, bgr.cols, bgr.rows, target_size, target_size);

    // the Caffe-YOLOv2-Windows style
    // X' = X * scale - mean
    // substract_mean_normalize(mean, norm) computes (X - mean) * norm; a null pointer skips that part.
    // 0.007843 ~= 1 / 127.5, so pixels in [0, 255] map to about [-0.5, 1.5] with these values;
    // this must match the preprocessing the model was trained with.
    const float mean_vals[3] = {0.5f, 0.5f, 0.5f};
    const float norm_vals[3] = {0.007843f, 0.007843f, 0.007843f};
    in.substract_mean_normalize(0, norm_vals); // X * scale
    in.substract_mean_normalize(mean_vals, 0); // ... - mean

    ncnn::Extractor ex = yolov2.create_extractor();
    ex.set_num_threads(4);

    ex.input("data", in);

    ncnn::Mat out;
    ex.extract("detection_out", out);

    // printf("%d %d %d\n", out.w, out.h, out.c);

    // Each row of out: label, prob, xmin, ymin, xmax, ymax (coordinates normalized to [0, 1]).
    objects.clear();
    for (int i = 0; i < out.h; i++)
    {
        const float* values = out.row(i);

        Object object;
        object.label = static_cast<int>(values[0]);
        object.prob = values[1];
        object.rect.x = values[2] * img_w;
        object.rect.y = values[3] * img_h;
        object.rect.width = values[4] * img_w - object.rect.x;
        object.rect.height = values[5] * img_h - object.rect.y;

        objects.push_back(object);
    }

    return 0;
}

static void draw_objects(const cv::Mat& bgr, const std::vector<Object>& objects)
{
    // PASCAL VOC class names; index 0 is the background class.
    static const char* class_names[] = {"background",
        "aeroplane", "bicycle", "bird", "boat",
        "bottle", "bus", "car", "cat", "chair",
        "cow", "diningtable", "dog", "horse",
        "motorbike", "person", "pottedplant",
        "sheep", "sofa", "train", "tvmonitor"};
    const int num_classes = (int)(sizeof(class_names) / sizeof(class_names[0]));

    cv::Mat image = bgr.clone();

    for (size_t i = 0; i < objects.size(); i++)
    {
        const Object& obj = objects[i];

        fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,
                obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

        // Skip labels outside class_names instead of reading past the array.
        if (obj.label < 0 || obj.label >= num_classes)
            continue;

        cv::rectangle(image, obj.rect, cv::Scalar(255, 0, 0));

        char text[256];
        snprintf(text, sizeof(text), "%s %.1f%%", class_names[obj.label], obj.prob * 100);

        int baseLine = 0;
        cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

        // Place the label just above the box, kept inside the image.
        int x = (int)obj.rect.x;
        int y = (int)obj.rect.y - label_size.height - baseLine;
        if (y < 0)
            y = 0;
        if (x + label_size.width > image.cols)
            x = image.cols - label_size.width;

        cv::rectangle(image, cv::Rect(cv::Point(x, y),
                                      cv::Size(label_size.width, label_size.height + baseLine)),
                      cv::Scalar(255, 255, 255), cv::FILLED); // CV_FILLED in OpenCV 2.x

        cv::putText(image, text, cv::Point(x, y + label_size.height),
                    cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
    }

    cv::imshow("image", image);
    cv::waitKey(0);
}

int main(int argc, char** argv)
{
    // Image path from the command line, defaulting to test.jpg.
    const char* imagepath = argc > 1 ? argv[1] : "test.jpg";

    cv::Mat m = cv::imread(imagepath, cv::IMREAD_COLOR); // CV_LOAD_IMAGE_COLOR in older OpenCV
    if (m.empty())
    {
        fprintf(stderr, "cv::imread %s failed\n", imagepath);
        return -1;
    }

    std::vector<Object> objects;
    if (detect_yolov2(m, objects) != 0)
        return -1;

    draw_objects(m, objects);

    return 0;
}
```

The result is shown in Figure 2.

Figure 2 YOLOv2 detection result
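The arithmetic around the network in `detect_yolov2` can be checked without ncnn or OpenCV. ncnn's `substract_mean_normalize(mean, norm)` computes `(x - mean) * norm` per channel and skips the part whose pointer is null, so the two calls in the example amount to `x * 0.007843 - 0.5`. Each `detection_out` row holds label, prob, xmin, ymin, xmax, ymax with coordinates normalized to [0, 1]; since `from_pixels_resize` stretches the image to 416x416 independently along each axis, multiplying by the original width and height maps the box back. The sketch below mirrors both steps; `Box`, `normalize_pixel`, `decode_row`, and the clamping to image bounds are hypothetical additions for illustration, not part of ncnn or of the original code.

```cpp
#include <algorithm>
#include <cassert>

// Mirrors the two ncnn calls for a single channel value:
//   substract_mean_normalize(0, norm_vals) -> x * norm
//   substract_mean_normalize(mean_vals, 0) -> (x * norm) - mean
float normalize_pixel(float x, float mean, float norm)
{
    return x * norm - mean;
}

// Hypothetical stand-in for Object / cv::Rect_<float>, in original-image pixels.
struct Box
{
    int label;
    float prob;
    float x, y, width, height;
};

// Clamps a normalized coordinate to [0, 1] (C++11-compatible, no std::clamp).
static float clamp01(float v)
{
    return std::min(std::max(v, 0.f), 1.f);
}

// Decodes one detection_out row {label, prob, xmin, ymin, xmax, ymax}
// into pixels of an img_w x img_h image. Clamping is an added safeguard.
Box decode_row(const float* values, int img_w, int img_h)
{
    assert(values != nullptr && img_w > 0 && img_h > 0);
    const float xmin = clamp01(values[2]) * img_w;
    const float ymin = clamp01(values[3]) * img_h;
    const float xmax = clamp01(values[4]) * img_w;
    const float ymax = clamp01(values[5]) * img_h;

    Box box;
    box.label = static_cast<int>(values[0]);
    box.prob = values[1];
    box.x = xmin;
    box.y = ymin;
    box.width = xmax - xmin;
    box.height = ymax - ymin;
    return box;
}
```

Inside the loop of `detect_yolov2`, `decode_row(out.row(i), img_w, img_h)` would produce the same box as the original code whenever the coordinates already lie in [0, 1]; the clamping only matters for boxes that extend past the image edge.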

Finally, a plug for the model: the model used here can be downloaded from https://download.csdn.net/download/sinat_31425585/10737783

References: