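Setting up the TensorFlow Object Detection API on Windows 10 is less convenient than on Ubuntu, but it can be done. Here is the process I followed.

The official object detection demo imports a large number of .py files, which makes a project harder to deploy, so I merged the code it needs into two files.

## 1. Setting up TensorFlow models on Windows

This assumes the GPU driver, CUDA, and cuDNN are already installed.

(1) Download and install Anaconda3

https://www.anaconda.com/download/

(2) Install tensorflow-gpu (I installed 1.10.0)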

# For CPU

pip install tensorflow

# For GPU

pip install tensorflow-gpu==<version>    (for example: pip install tensorflow-gpu==1.10.0)
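After installing, it can be worth confirming that the package imports and whether it sees the GPU. The following is only a sketch of such a check and was not part of my original setup: `tensorflow_summary` is a hypothetical helper name, and it relies on `tf.test.is_gpu_available()`, which is available in TensorFlow 1.x.

```python
def tensorflow_summary():
    """Return the TensorFlow version and GPU visibility, or None if not installed."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    return {
        "version": tf.__version__,
        # True only if TensorFlow can actually use a CUDA device.
        "gpu_available": bool(tf.test.is_gpu_available()),
    }


if __name__ == "__main__":
    print(tensorflow_summary())
```

If this prints `None`, the package was installed into a different Python environment than the one running the script.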

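(3) Download the models source code from the TensorFlow GitHub repository

git clone https://github.com/tensorflow/models.git

(4) Install the dependencies

The official installation guide: https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/installation.md

# The guide installs protobuf-compiler, python-pil, python-lxml and python-tk with apt-get on Ubuntu; these are not pip package names. With pip, install Pillow and lxml instead (tkinter normally ships with Python on Windows, and protoc is installed in step (7)):

pip install pillow lxml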

pip install Cython

pip install contextlib2

pip install jupyter

pip install matplotlib
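To see at a glance whether the packages above ended up in the active environment, a short check like the one below may help. It is a sketch, not an official tool: `missing_modules` and `REQUIRED` are names I made up for illustration, and the list uses import names (Pillow is imported as `PIL`).

```python
import importlib.util


def missing_modules(names):
    """Return the names from `names` that cannot be imported in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Import names (not pip package names) for the dependencies listed above.
REQUIRED = ["PIL", "lxml", "Cython", "contextlib2", "matplotlib", "tensorflow"]

if __name__ == "__main__":
    print("Missing:", missing_modules(REQUIRED) or "none")
```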

(5) Install the COCO API

# These are the official steps; they do not work directly on Windows

git clone https://github.com/cocodataset/cocoapi.git

cd cocoapi/PythonAPI

make

cp -r pycocotools <path_to_tensorflow>/models/research/

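# To compile on Windows, first download MinGW, which provides mingw32-make

MinGW download page: https://sourceforge.net/projects/mingw/files/latest/download?source=files

# Add the MinGW bin directory to the PATH environment variable

C:\MINGW\bin

# Install the compilers

Run in cmd: mingw-get install gcc g++ mingw32-make

# After installation, copy mingw32-make.exe into ...cocoapi/PythonAPI, then run the following command there to compile

mingw32-make

# The compiled Python files are generated in the pycocotools folder; move the whole folder into the research directory of tensorflow models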
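If `mingw32-make` fails with a "not recognized" style error, the PATH entry is the first thing to check. The snippet below is a small sketch for that; `locate_tools` is a hypothetical helper name, and it only reports what `shutil.which` finds on the current PATH.

```python
import shutil


def locate_tools(names):
    """Map each executable name to its full path on PATH, or None if not found."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    for tool, path in locate_tools(["gcc", "g++", "mingw32-make"]).items():
        print("{:<14} {}".format(tool, path or "NOT FOUND (check PATH)"))
```

Open a new cmd window after editing the environment variable; a window that was already open keeps the old PATH.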

(6) Install research

# Change to the following directory and run the commands

/models/research/

python setup.py build

python setup.py install

(7) Compile protobuf

# Download and install protoc

https://github.com/protocolbuffers/protobuf/releases

# Change to /models/research/

protoc object_detection/protos/*.proto --python_out=.

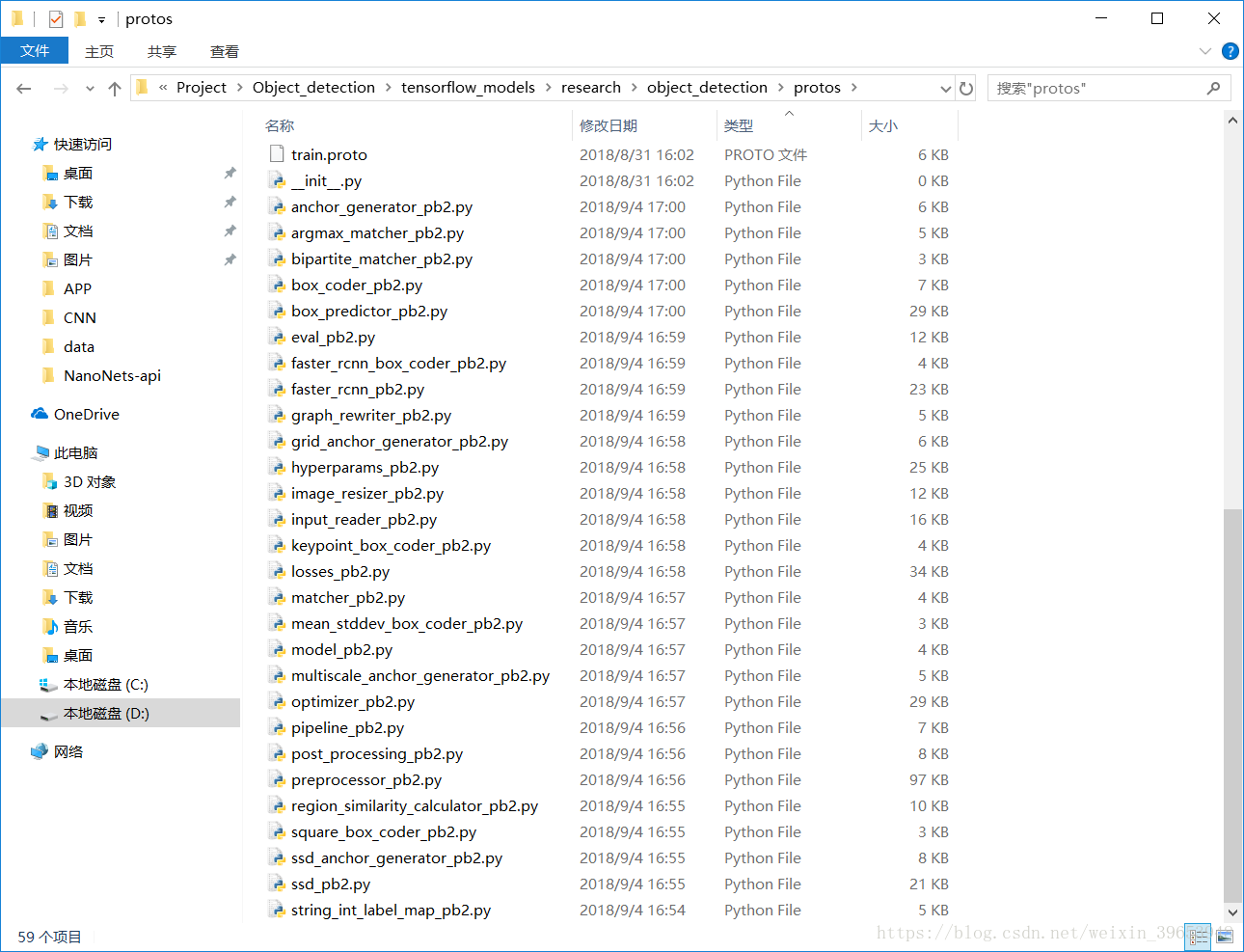

# If this reports an error, compile the .proto files one at a time by file name. Afterwards the following Python files are generated:

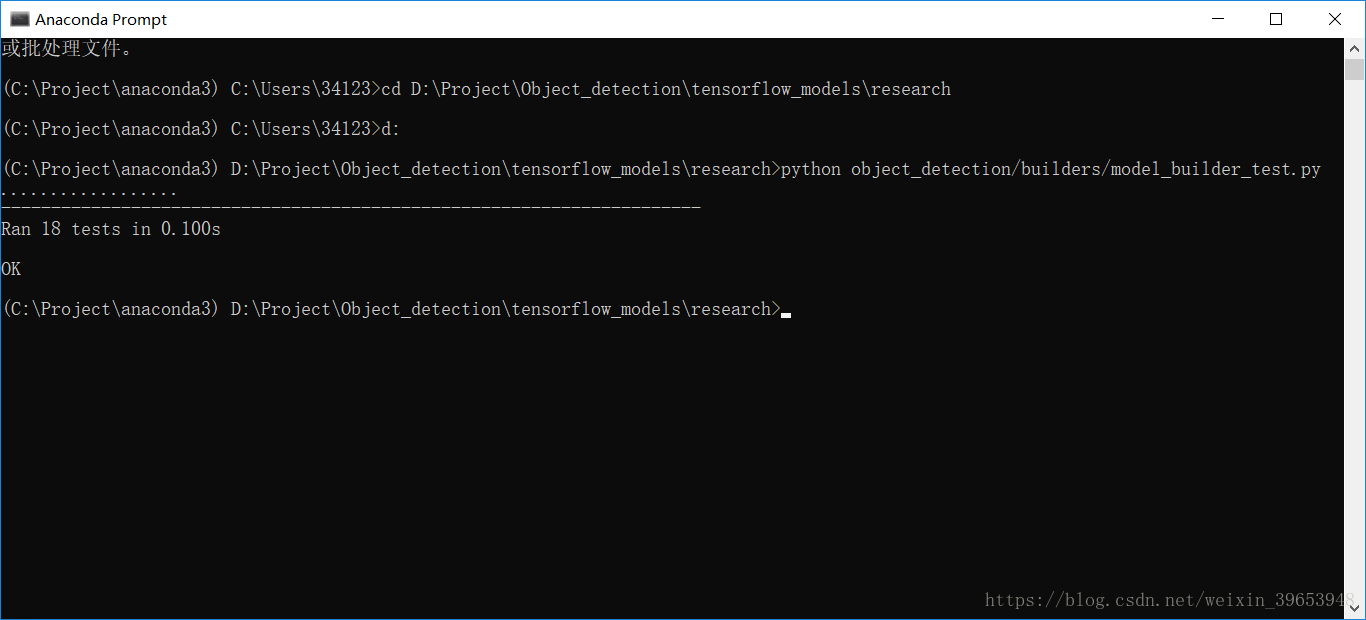
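Compiling the files one by one is tedious by hand. A likely reason the wildcard form fails is that the Windows cmd shell does not expand `*.proto`, so a script can do the expansion instead. The following is a sketch under that assumption; `protoc_commands` and `compile_protos` are hypothetical helper names, and `protoc` is assumed to be on PATH.

```python
import glob
import os
import subprocess


def protoc_commands(research_dir, protoc="protoc"):
    """Build one protoc command per .proto file under object_detection/protos."""
    pattern = os.path.join(research_dir, "object_detection", "protos", "*.proto")
    commands = []
    for proto_path in sorted(glob.glob(pattern)):
        # Paths are given relative to research_dir, matching the command above.
        relative = os.path.relpath(proto_path, research_dir).replace(os.sep, "/")
        commands.append([protoc, relative, "--python_out=."])
    return commands


def compile_protos(research_dir, protoc="protoc"):
    """Run every command from research_dir; raises CalledProcessError on failure."""
    for command in protoc_commands(research_dir, protoc):
        subprocess.check_call(command, cwd=research_dir)


if __name__ == "__main__":
    # Assumes the script is started from models/research, as in the step above.
    if os.path.isdir(os.path.join("object_detection", "protos")):
        compile_protos(".")
```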

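(8) Test that the installation succeeded

python object_detection/builders/model_builder_test.py

# An OK result means the installation succeeded

Note

You may run into a "No module named nets" error. The cause is that slim has not been built and installed; fix it as follows:

cd models/research/slim

python setup.py build

python setup.py install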
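The official installation guide handles the same problem by putting `research` and `research/slim` on `PYTHONPATH`. A script can do the equivalent at start-up; the sketch below shows one way, where `add_research_paths` is a hypothetical helper and the directory you pass in is wherever you cloned the repository.

```python
import os
import sys


def add_research_paths(research_dir):
    """Put models/research and models/research/slim at the front of sys.path."""
    research_dir = os.path.abspath(research_dir)
    added = []
    for path in (research_dir, os.path.join(research_dir, "slim")):
        if path not in sys.path:
            sys.path.insert(0, path)
            added.append(path)
    return added
```

Call it before any `object_detection` or `nets` import, passing the location of your own `models/research` directory. This only affects the running process, so it does not replace the `setup.py install` step for other scripts.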

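## 2. Consolidating the TensorFlow object detection Python scripts

PATH_TO_LABELS = '' must be given as an absolute path, otherwise an error is raised, and the path should preferably not contain Chinese characters. The error looks like this: UnicodeEncodeError: 'utf-8' codec can't encode character '\udcd5' in position 2201: surrogates not allowed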
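Both conditions can be checked before TensorFlow ever touches the file. This is a sketch only: `check_label_path` is a hypothetical helper, and treating every non-ASCII character as a problem is a stricter rule than the error strictly requires.

```python
from pathlib import PureWindowsPath


def check_label_path(path):
    """Return a list of problems found in `path`; an empty list means it looks fine."""
    problems = []
    # PureWindowsPath applies Windows rules (drive letter required) on any OS.
    if not PureWindowsPath(path).is_absolute():
        problems.append("path is not absolute")
    try:
        path.encode("ascii")
    except UnicodeEncodeError:
        problems.append("path contains non-ASCII characters")
    return problems
```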

MODEL_NAME = 'ssd_mobilenet_v2_coco_2018_03_29'

MODEL_FILE = MODEL_NAME + '.tar.gz'

PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb'

The image path also has to be specified:

PATH_TO_TEST_IMAGES_DIR = 'test_images'

TEST_IMAGE_PATHS = [ os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(4, 5) ]
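Note that `range(4, 5)` yields only the number 4, so the list above contains just `image4.jpg`. If you would rather process every image in the folder, a glob-based variant is one option; `list_test_images` below is a hypothetical helper, not something from the official demo.

```python
import glob
import os


def list_test_images(directory, pattern="*.jpg"):
    """Return the sorted paths of all files in `directory` matching `pattern`."""
    return sorted(glob.glob(os.path.join(directory, pattern)))


if __name__ == "__main__":
    print(list_test_images("test_images"))
```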

Download the model from the official model zoo and extract it:

# Model download page

https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/detection_model_zoo.md

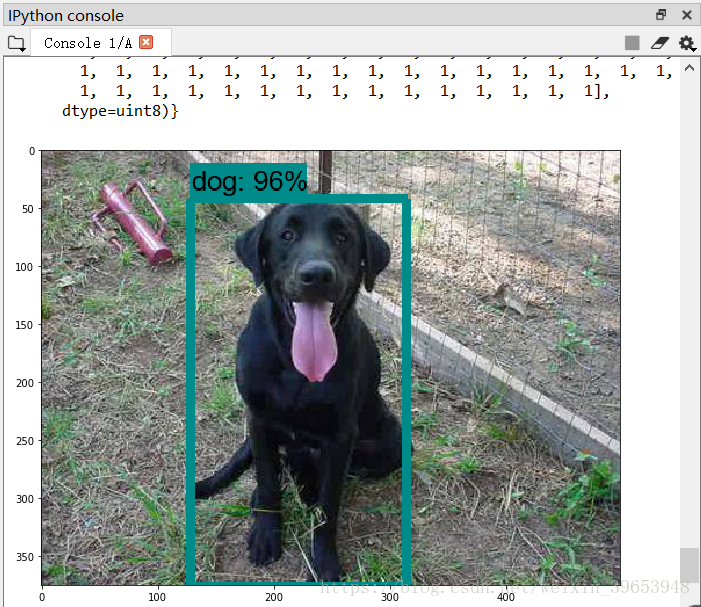
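The download and extraction can also be scripted. The sketch below follows the approach of the official demo notebook, which pulls only `frozen_inference_graph.pb` out of the archive. Treat `DOWNLOAD_BASE` as an assumption: it is the base URL that notebook used and may have changed since. `extract_frozen_graph` and `download_model` are names chosen here for illustration.

```python
import os
import tarfile
import urllib.request

MODEL_NAME = "ssd_mobilenet_v2_coco_2018_03_29"
MODEL_FILE = MODEL_NAME + ".tar.gz"
# Assumption: base URL used by the official demo notebook; verify before relying on it.
DOWNLOAD_BASE = "http://download.tensorflow.org/models/object_detection/"


def extract_frozen_graph(tar_path, dest_dir="."):
    """Extract only frozen_inference_graph.pb from the archive; return its path."""
    with tarfile.open(tar_path) as archive:
        for member in archive.getmembers():
            if os.path.basename(member.name) == "frozen_inference_graph.pb":
                archive.extract(member, dest_dir)
                return os.path.join(dest_dir, member.name)
    raise FileNotFoundError("no frozen_inference_graph.pb in " + tar_path)


def download_model(dest_dir="."):
    """Download the archive if it is missing, then extract the frozen graph."""
    tar_path = os.path.join(dest_dir, MODEL_FILE)
    if not os.path.exists(tar_path):
        urllib.request.urlretrieve(DOWNLOAD_BASE + MODEL_FILE, tar_path)
    return extract_frozen_graph(tar_path, dest_dir)
```

If the archive was downloaded by hand from the model zoo page, calling `extract_frozen_graph` on it directly skips the network step.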

Below is the consolidated object detection source code.

Copy string_int_label_map_pb2.py from models\research\object_detection\protos into the same directory as this script, because the script imports it.

# -*- coding: utf-8 -*-

"""

Created on Tue Sep 4 22:54:32 2018

@author: 34123

"""

import numpy as np

import os

import tensorflow as tf

from matplotlib import pyplot as plt

from PIL import Image

#from object_detection.utils import label_map_util

#from object_detection.utils import visualization_utils as vis_util

MODEL_NAME = 'ssd_mobilenet_v2_coco_2018_03_29'

MODEL_FILE = MODEL_NAME + '.tar.gz'

PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.

#PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')

PATH_TO_LABELS = 'C:\\Users\\34123\\Desktop\\Block Programing\\tensorflow_demo\\ssd_mobilenet_v2_coco_2018_03_29\\mscoco_label_map.pbtxt'

NUM_CLASSES = 90

detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

################################################################################################

import string_int_label_map_pb2

from google.protobuf import text_format

def _validate_label_map(label_map):
    """Checks if a label map is valid.

    Args:
      label_map: StringIntLabelMap to validate.

    Raises:
      ValueError: if label map is invalid.
    """
    for item in label_map.item:
        if item.id < 0:
            raise ValueError('Label map ids should be >= 0.')
        if (item.id == 0 and item.name != 'background' and
                item.display_name != 'background'):
            raise ValueError('Label map id 0 is reserved for the background label')

import logging

def convert_label_map_to_categories(label_map,
                                    max_num_classes,
                                    use_display_name=True):
    """Loads label map proto and returns categories list compatible with eval.

    This function loads a label map and returns a list of dicts, each of which
    has the following keys:
      'id': (required) an integer id uniquely identifying this category.
      'name': (required) string representing category name
        e.g., 'cat', 'dog', 'pizza'.
    We only allow class into the list if its id-label_id_offset is
    between 0 (inclusive) and max_num_classes (exclusive).
    If there are several items mapping to the same id in the label map,
    we will only keep the first one in the categories list.

    Args:
      label_map: a StringIntLabelMapProto or None. If None, a default categories
        list is created with max_num_classes categories.
      max_num_classes: maximum number of (consecutive) label indices to include.
      use_display_name: (boolean) choose whether to load 'display_name' field
        as category name. If False or if the display_name field does not exist,
        uses 'name' field as category names instead.

    Returns:
      categories: a list of dictionaries representing all possible categories.
    """
    categories = []
    list_of_ids_already_added = []
    if not label_map:
        label_id_offset = 1
        for class_id in range(max_num_classes):
            categories.append({
                'id': class_id + label_id_offset,
                'name': 'category_{}'.format(class_id + label_id_offset)
            })
        return categories
    for item in label_map.item:
        if not 0 < item.id <= max_num_classes:
            logging.info('Ignore item %d since it falls outside of requested '
                         'label range.', item.id)
            continue
        if use_display_name and item.HasField('display_name'):
            name = item.display_name
        else:
            name = item.name
        if item.id not in list_of_ids_already_added:
            list_of_ids_already_added.append(item.id)
            categories.append({'id': item.id, 'name': name})
    return categories

def load_labelmap(path):
    """Loads label map proto.

    Args:
      path: path to StringIntLabelMap proto text file.

    Returns:
      a StringIntLabelMapProto
    """
    with tf.gfile.GFile(path, 'r') as fid:
        label_map_string = fid.read()
        label_map = string_int_label_map_pb2.StringIntLabelMap()
        try:
            text_format.Merge(label_map_string, label_map)
        except text_format.ParseError:
            label_map.ParseFromString(label_map_string)
    _validate_label_map(label_map)
    return label_map

def create_category_index(categories):
    """Creates dictionary of COCO compatible categories keyed by category id.

    Args:
      categories: a list of dicts, each of which has the following keys:
        'id': (required) an integer id uniquely identifying this category.
        'name': (required) string representing category name
          e.g., 'cat', 'dog', 'pizza'.

    Returns:
      category_index: a dict containing the same entries as categories, but keyed
        by the 'id' field of each category.
    """
    category_index = {}
    for cat in categories:
        category_index[cat['id']] = cat
    return category_index

###############################################################################################

label_map = load_labelmap(PATH_TO_LABELS)

categories = convert_label_map_to_categories(label_map, max_num_classes=NUM_CLASSES, use_display_name=True)

category_index = create_category_index(categories)

##########################from object_detection.utils import ops as utils_ops###############################

def reframe_box_masks_to_image_masks(box_masks, boxes, image_height,
                                     image_width):
    """Transforms the box masks back to full image masks.

    Embeds masks in bounding boxes of larger masks whose shapes correspond to
    image shape.

    Args:
      box_masks: A tf.float32 tensor of size [num_masks, mask_height, mask_width].
      boxes: A tf.float32 tensor of size [num_masks, 4] containing the box
        corners. Row i contains [ymin, xmin, ymax, xmax] of the box
        corresponding to mask i. Note that the box corners are in
        normalized coordinates.
      image_height: Image height. The output mask will have the same height as
        the image height.
      image_width: Image width. The output mask will have the same width as the
        image width.

    Returns:
      A tf.float32 tensor of size [num_masks, image_height, image_width].
    """
    # TODO(rathodv): Make this a public function.
    def reframe_box_masks_to_image_masks_default():
        """The default function when there are more than 0 box masks."""
        def transform_boxes_relative_to_boxes(boxes, reference_boxes):
            boxes = tf.reshape(boxes, [-1, 2, 2])
            min_corner = tf.expand_dims(reference_boxes[:, 0:2], 1)
            max_corner = tf.expand_dims(reference_boxes[:, 2:4], 1)
            transformed_boxes = (boxes - min_corner) / (max_corner - min_corner)
            return tf.reshape(transformed_boxes, [-1, 4])

        box_masks_expanded = tf.expand_dims(box_masks, axis=3)
        num_boxes = tf.shape(box_masks_expanded)[0]
        unit_boxes = tf.concat(
            [tf.zeros([num_boxes, 2]), tf.ones([num_boxes, 2])], axis=1)
        reverse_boxes = transform_boxes_relative_to_boxes(unit_boxes, boxes)
        return tf.image.crop_and_resize(
            image=box_masks_expanded,
            boxes=reverse_boxes,
            box_ind=tf.range(num_boxes),
            crop_size=[image_height, image_width],
            extrapolation_value=0.0)

    image_masks = tf.cond(
        tf.shape(box_masks)[0] > 0,
        reframe_box_masks_to_image_masks_default,
        lambda: tf.zeros([0, image_height, image_width, 1], dtype=tf.float32))
    return tf.squeeze(image_masks, axis=3)

#######################################################################################################

import collections

import PIL.ImageColor as ImageColor

import PIL.ImageDraw as ImageDraw

import PIL.ImageFont as ImageFont

STANDARD_COLORS = [

'AliceBlue', 'Chartreuse', 'Aqua', 'Aquamarine', 'Azure', 'Beige', 'Bisque',

'BlanchedAlmond', 'BlueViolet', 'BurlyWood', 'CadetBlue', 'AntiqueWhite',

'Chocolate', 'Coral', 'CornflowerBlue', 'Cornsilk', 'Crimson', 'Cyan',

'DarkCyan', 'DarkGoldenRod', 'DarkGrey', 'DarkKhaki', 'DarkOrange',

'DarkOrchid', 'DarkSalmon', 'DarkSeaGreen', 'DarkTurquoise', 'DarkViolet',

'DeepPink', 'DeepSkyBlue', 'DodgerBlue', 'FireBrick', 'FloralWhite',

'ForestGreen', 'Fuchsia', 'Gainsboro', 'GhostWhite', 'Gold', 'GoldenRod',

'Salmon', 'Tan', 'HoneyDew', 'HotPink', 'IndianRed', 'Ivory', 'Khaki',

'Lavender', 'LavenderBlush', 'LawnGreen', 'LemonChiffon', 'LightBlue',

'LightCoral', 'LightCyan', 'LightGoldenRodYellow', 'LightGray', 'LightGrey',

'LightGreen', 'LightPink', 'LightSalmon', 'LightSeaGreen', 'LightSkyBlue',

'LightSlateGray', 'LightSlateGrey', 'LightSteelBlue', 'LightYellow', 'Lime',

'LimeGreen', 'Linen', 'Magenta', 'MediumAquaMarine', 'MediumOrchid',

'MediumPurple', 'MediumSeaGreen', 'MediumSlateBlue', 'MediumSpringGreen',

'MediumTurquoise', 'MediumVioletRed', 'MintCream', 'MistyRose', 'Moccasin',

'NavajoWhite', 'OldLace', 'Olive', 'OliveDrab', 'Orange', 'OrangeRed',

'Orchid', 'PaleGoldenRod', 'PaleGreen', 'PaleTurquoise', 'PaleVioletRed',

'PapayaWhip', 'PeachPuff', 'Peru', 'Pink', 'Plum', 'PowderBlue', 'Purple',

'Red', 'RosyBrown', 'RoyalBlue', 'SaddleBrown', 'Green', 'SandyBrown',

'SeaGreen', 'SeaShell', 'Sienna', 'Silver', 'SkyBlue', 'SlateBlue',

'SlateGray', 'SlateGrey', 'Snow', 'SpringGreen', 'SteelBlue', 'GreenYellow',

'Teal', 'Thistle', 'Tomato', 'Turquoise', 'Violet', 'Wheat', 'White',

'WhiteSmoke', 'Yellow', 'YellowGreen'

]

def draw_bounding_box_on_image(image,
                               ymin,
                               xmin,
                               ymax,
                               xmax,
                               color='red',
                               thickness=4,
                               display_str_list=(),
                               use_normalized_coordinates=True):
    """Adds a bounding box to an image.

    Bounding box coordinates can be specified in either absolute (pixel) or
    normalized coordinates by setting the use_normalized_coordinates argument.

    Each string in display_str_list is displayed on a separate line above the
    bounding box in black text on a rectangle filled with the input 'color'.
    If the top of the bounding box extends to the edge of the image, the strings
    are displayed below the bounding box.

    Args:
      image: a PIL.Image object.
      ymin: ymin of bounding box.
      xmin: xmin of bounding box.
      ymax: ymax of bounding box.
      xmax: xmax of bounding box.
      color: color to draw bounding box. Default is red.
      thickness: line thickness. Default value is 4.
      display_str_list: list of strings to display in box
        (each to be shown on its own line).
      use_normalized_coordinates: If True (default), treat coordinates
        ymin, xmin, ymax, xmax as relative to the image. Otherwise treat
        coordinates as absolute.
    """
    draw = ImageDraw.Draw(image)
    im_width, im_height = image.size
    if use_normalized_coordinates:
        (left, right, top, bottom) = (xmin * im_width, xmax * im_width,
                                      ymin * im_height, ymax * im_height)
    else:
        (left, right, top, bottom) = (xmin, xmax, ymin, ymax)
    draw.line([(left, top), (left, bottom), (right, bottom),
               (right, top), (left, top)], width=thickness, fill=color)
    try:
        font = ImageFont.truetype('arial.ttf', 24)
    except IOError:
        font = ImageFont.load_default()

    # If the total height of the display strings added to the top of the bounding
    # box exceeds the top of the image, stack the strings below the bounding box
    # instead of above.
    display_str_heights = [font.getsize(ds)[1] for ds in display_str_list]
    # Each display_str has a top and bottom margin of 0.05x.
    total_display_str_height = (1 + 2 * 0.05) * sum(display_str_heights)

    if top > total_display_str_height:
        text_bottom = top
    else:
        text_bottom = bottom + total_display_str_height
    # Reverse list and print from bottom to top.
    for display_str in display_str_list[::-1]:
        text_width, text_height = font.getsize(display_str)
        margin = np.ceil(0.05 * text_height)
        draw.rectangle(
            [(left, text_bottom - text_height - 2 * margin), (left + text_width,
                                                              text_bottom)],
            fill=color)
        draw.text(
            (left + margin, text_bottom - text_height - margin),
            display_str,
            fill='black',
            font=font)
        text_bottom -= text_height - 2 * margin

def draw_bounding_boxes_on_image(image,
                                 boxes,
                                 color='red',
                                 thickness=4,
                                 display_str_list_list=()):
    """Draws bounding boxes on image.

    Args:
      image: a PIL.Image object.
      boxes: a 2 dimensional numpy array of [N, 4]: (ymin, xmin, ymax, xmax).
        The coordinates are in normalized format between [0, 1].
      color: color to draw bounding box. Default is red.
      thickness: line thickness. Default value is 4.
      display_str_list_list: list of list of strings.
        a list of strings for each bounding box.
        The reason to pass a list of strings for a
        bounding box is that it might contain
        multiple labels.

    Raises:
      ValueError: if boxes is not a [N, 4] array
    """
    boxes_shape = boxes.shape
    if not boxes_shape:
        return
    if len(boxes_shape) != 2 or boxes_shape[1] != 4:
        raise ValueError('Input must be of size [N, 4]')
    for i in range(boxes_shape[0]):
        display_str_list = ()
        if display_str_list_list:
            display_str_list = display_str_list_list[i]
        draw_bounding_box_on_image(image, boxes[i, 0], boxes[i, 1], boxes[i, 2],
                                   boxes[i, 3], color, thickness, display_str_list)

def draw_bounding_box_on_image_array(image,
                                     ymin,
                                     xmin,
                                     ymax,
                                     xmax,
                                     color='red',
                                     thickness=4,
                                     display_str_list=(),
                                     use_normalized_coordinates=True):
    """Adds a bounding box to an image (numpy array).

    Bounding box coordinates can be specified in either absolute (pixel) or
    normalized coordinates by setting the use_normalized_coordinates argument.

    Args:
      image: a numpy array with shape [height, width, 3].
      ymin: ymin of bounding box.
      xmin: xmin of bounding box.
      ymax: ymax of bounding box.
      xmax: xmax of bounding box.
      color: color to draw bounding box. Default is red.
      thickness: line thickness. Default value is 4.
      display_str_list: list of strings to display in box
        (each to be shown on its own line).
      use_normalized_coordinates: If True (default), treat coordinates
        ymin, xmin, ymax, xmax as relative to the image. Otherwise treat
        coordinates as absolute.
    """
    image_pil = Image.fromarray(np.uint8(image)).convert('RGB')
    draw_bounding_box_on_image(image_pil, ymin, xmin, ymax, xmax, color,
                               thickness, display_str_list,
                               use_normalized_coordinates)
    np.copyto(image, np.array(image_pil))

def draw_mask_on_image_array(image, mask, color='red', alpha=0.4):
    """Draws mask on an image.

    Args:
      image: uint8 numpy array with shape (img_height, img_width, 3)
      mask: a uint8 numpy array of shape (img_height, img_width) with
        values of either 0 or 1.
      color: color to draw the mask with. Default is red.
      alpha: transparency value between 0 and 1. (default: 0.4)

    Raises:
      ValueError: On incorrect data type for image or masks.
    """
    if image.dtype != np.uint8:
        raise ValueError('`image` not of type np.uint8')
    if mask.dtype != np.uint8:
        raise ValueError('`mask` not of type np.uint8')
    if np.any(np.logical_and(mask != 1, mask != 0)):
        raise ValueError('`mask` elements should be in [0, 1]')
    if image.shape[:2] != mask.shape:
        raise ValueError('The image has spatial dimensions %s but the mask has '
                         'dimensions %s' % (image.shape[:2], mask.shape))
    rgb = ImageColor.getrgb(color)
    pil_image = Image.fromarray(image)

    solid_color = np.expand_dims(
        np.ones_like(mask), axis=2) * np.reshape(list(rgb), [1, 1, 3])
    pil_solid_color = Image.fromarray(np.uint8(solid_color)).convert('RGBA')
    pil_mask = Image.fromarray(np.uint8(255.0*alpha*mask)).convert('L')
    pil_image = Image.composite(pil_solid_color, pil_image, pil_mask)
    np.copyto(image, np.array(pil_image.convert('RGB')))

def draw_keypoints_on_image(image,
                            keypoints,
                            color='red',
                            radius=2,
                            use_normalized_coordinates=True):
    """Draws keypoints on an image.

    Args:
      image: a PIL.Image object.
      keypoints: a numpy array with shape [num_keypoints, 2].
      color: color to draw the keypoints with. Default is red.
      radius: keypoint radius. Default value is 2.
      use_normalized_coordinates: if True (default), treat keypoint values as
        relative to the image. Otherwise treat them as absolute.
    """
    draw = ImageDraw.Draw(image)
    im_width, im_height = image.size
    keypoints_x = [k[1] for k in keypoints]
    keypoints_y = [k[0] for k in keypoints]
    if use_normalized_coordinates:
        keypoints_x = tuple([im_width * x for x in keypoints_x])
        keypoints_y = tuple([im_height * y for y in keypoints_y])
    for keypoint_x, keypoint_y in zip(keypoints_x, keypoints_y):
        draw.ellipse([(keypoint_x - radius, keypoint_y - radius),
                      (keypoint_x + radius, keypoint_y + radius)],
                     outline=color, fill=color)

def draw_keypoints_on_image_array(image,
                                  keypoints,
                                  color='red',
                                  radius=2,
                                  use_normalized_coordinates=True):
    """Draws keypoints on an image (numpy array).

    Args:
      image: a numpy array with shape [height, width, 3].
      keypoints: a numpy array with shape [num_keypoints, 2].
      color: color to draw the keypoints with. Default is red.
      radius: keypoint radius. Default value is 2.
      use_normalized_coordinates: if True (default), treat keypoint values as
        relative to the image. Otherwise treat them as absolute.
    """
    image_pil = Image.fromarray(np.uint8(image)).convert('RGB')
    draw_keypoints_on_image(image_pil, keypoints, color, radius,
                            use_normalized_coordinates)
    np.copyto(image, np.array(image_pil))

def visualize_boxes_and_labels_on_image_array(
        image,
        boxes,
        classes,
        scores,
        category_index,
        instance_masks=None,
        instance_boundaries=None,
        keypoints=None,
        use_normalized_coordinates=False,
        max_boxes_to_draw=20,
        min_score_thresh=.5,
        agnostic_mode=False,
        line_thickness=4,
        groundtruth_box_visualization_color='black',
        skip_scores=False,
        skip_labels=False):
    """Overlay labeled boxes on an image with formatted scores and label names.

    This function groups boxes that correspond to the same location
    and creates a display string for each detection and overlays these
    on the image. Note that this function modifies the image in place, and returns
    that same image.

    Args:
      image: uint8 numpy array with shape (img_height, img_width, 3)
      boxes: a numpy array of shape [N, 4]
      classes: a numpy array of shape [N]. Note that class indices are 1-based,
        and match the keys in the label map.
      scores: a numpy array of shape [N] or None. If scores=None, then
        this function assumes that the boxes to be plotted are groundtruth
        boxes and plot all boxes as black with no classes or scores.
      category_index: a dict containing category dictionaries (each holding
        category index `id` and category name `name`) keyed by category indices.
      instance_masks: a numpy array of shape [N, image_height, image_width] with
        values ranging between 0 and 1, can be None.
      instance_boundaries: a numpy array of shape [N, image_height, image_width]
        with values ranging between 0 and 1, can be None.
      keypoints: a numpy array of shape [N, num_keypoints, 2], can
        be None
      use_normalized_coordinates: whether boxes is to be interpreted as
        normalized coordinates or not.
      max_boxes_to_draw: maximum number of boxes to visualize. If None, draw
        all boxes.
      min_score_thresh: minimum score threshold for a box to be visualized
      agnostic_mode: boolean (default: False) controlling whether to evaluate in
        class-agnostic mode or not. This mode will display scores but ignore
        classes.
      line_thickness: integer (default: 4) controlling line width of the boxes.
      groundtruth_box_visualization_color: box color for visualizing groundtruth
        boxes
      skip_scores: whether to skip score when drawing a single detection
      skip_labels: whether to skip label when drawing a single detection

    Returns:
      uint8 numpy array with shape (img_height, img_width, 3) with overlaid boxes.
    """
    # Create a display string (and color) for every box location, group any boxes
    # that correspond to the same location.
    box_to_display_str_map = collections.defaultdict(list)
    box_to_color_map = collections.defaultdict(str)
    box_to_instance_masks_map = {}
    box_to_instance_boundaries_map = {}
    box_to_keypoints_map = collections.defaultdict(list)
    if not max_boxes_to_draw:
        max_boxes_to_draw = boxes.shape[0]
    for i in range(min(max_boxes_to_draw, boxes.shape[0])):
        if scores is None or scores[i] > min_score_thresh:
            box = tuple(boxes[i].tolist())
            if instance_masks is not None:
                box_to_instance_masks_map[box] = instance_masks[i]
            if instance_boundaries is not None:
                box_to_instance_boundaries_map[box] = instance_boundaries[i]
            if keypoints is not None:
                box_to_keypoints_map[box].extend(keypoints[i])
            if scores is None:
                box_to_color_map[box] = groundtruth_box_visualization_color
            else:
                display_str = ''
                if not skip_labels:
                    if not agnostic_mode:
                        if classes[i] in category_index.keys():
                            class_name = category_index[classes[i]]['name']
                        else:
                            class_name = 'N/A'
                        display_str = str(class_name)
                if not skip_scores:
                    if not display_str:
                        display_str = '{}%'.format(int(100*scores[i]))
                    else:
                        display_str = '{}: {}%'.format(display_str, int(100*scores[i]))
                box_to_display_str_map[box].append(display_str)
                if agnostic_mode:
                    box_to_color_map[box] = 'DarkOrange'
                else:
                    box_to_color_map[box] = STANDARD_COLORS[
                        classes[i] % len(STANDARD_COLORS)]

    # Draw all boxes onto image.
    for box, color in box_to_color_map.items():
        ymin, xmin, ymax, xmax = box
        if instance_masks is not None:
            draw_mask_on_image_array(
                image,
                box_to_instance_masks_map[box],
                color=color
            )
        if instance_boundaries is not None:
            draw_mask_on_image_array(
                image,
                box_to_instance_boundaries_map[box],
                color='red',
                alpha=1.0
            )
        draw_bounding_box_on_image_array(
            image,
            ymin,
            xmin,
            ymax,
            xmax,
            color=color,
            thickness=line_thickness,
            display_str_list=box_to_display_str_map[box],
            use_normalized_coordinates=use_normalized_coordinates)
        if keypoints is not None:
            draw_keypoints_on_image_array(
                image,
                box_to_keypoints_map[box],
                color=color,
                radius=line_thickness / 2,
                use_normalized_coordinates=use_normalized_coordinates)

    return image

#######################################################################################################

def load_image_into_numpy_array(image):
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)

# For the sake of simplicity only one image is used here: image6.jpg (range(6, 7) yields just 6).

# If you want to test the code with your images, just add path to the images to the TEST_IMAGE_PATHS.

PATH_TO_TEST_IMAGES_DIR = 'test_images'

TEST_IMAGE_PATHS = [ os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(6, 7) ]

# Size, in inches, of the output images.

IMAGE_SIZE = (12, 8)

#######################################################################################################

def run_inference_for_single_image(image, graph):
    with graph.as_default():
        # Limit GPU memory allocation: start with a small amount and grow it on demand.
        config = tf.ConfigProto(gpu_options=tf.GPUOptions(allow_growth=True))
        with tf.Session(config=config) as sess:
            # Get handles to input and output tensors
            ops = tf.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in [
                    'num_detections', 'detection_boxes', 'detection_scores',
                    'detection_classes', 'detection_masks'
            ]:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(
                        tensor_name)
            if 'detection_masks' in tensor_dict:
                # The following processing is only for single image
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
                detection_masks_reframed = reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.5), tf.uint8)
                # Follow the convention by adding back the batch dimension
                tensor_dict['detection_masks'] = tf.expand_dims(
                    detection_masks_reframed, 0)
            image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

            # Run inference
            output_dict = sess.run(tensor_dict,
                                   feed_dict={image_tensor: np.expand_dims(image, 0)})

            # all outputs are float32 numpy arrays, so convert types as appropriate
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict['detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict

for image_path in TEST_IMAGE_PATHS:
    image = Image.open(image_path)
    # the array based representation of the image will be used later in order to prepare the
    # result image with boxes and labels on it.
    image_np = load_image_into_numpy_array(image)
    # Expand dimensions since the model expects images to have shape: [1, None, None, 3]
    image_np_expanded = np.expand_dims(image_np, axis=0)
    # Actual detection.
    output_dict = run_inference_for_single_image(image_np, detection_graph)
    # Visualization of the results of a detection.
    visualize_boxes_and_labels_on_image_array(
        image_np,
        output_dict['detection_boxes'],
        output_dict['detection_classes'],
        output_dict['detection_scores'],
        category_index,
        instance_masks=output_dict.get('detection_masks'),
        use_normalized_coordinates=True,
        line_thickness=8)
    plt.figure(figsize=IMAGE_SIZE)
    plt.imshow(image_np)
    print(output_dict)
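Printing the raw `output_dict` dumps whole arrays, which is hard to read. For deployment it is often more useful to keep only the confident detections and convert the normalized `[ymin, xmin, ymax, xmax]` boxes to pixels. The sketch below shows one way to do that; `summarize_detections` is a hypothetical helper that is not in the script above, and it reuses the same `> min_score` rule as the visualization function.

```python
def summarize_detections(output_dict, category_index, image_size, min_score=0.5):
    """List detections above `min_score` with pixel boxes as (left, top, right, bottom)."""
    im_width, im_height = image_size
    results = []
    for i in range(output_dict['num_detections']):
        score = float(output_dict['detection_scores'][i])
        if score <= min_score:
            continue
        # Boxes are normalized to [0, 1] in (ymin, xmin, ymax, xmax) order.
        ymin, xmin, ymax, xmax = (float(v) for v in output_dict['detection_boxes'][i])
        class_id = int(output_dict['detection_classes'][i])
        name = category_index.get(class_id, {}).get('name', 'N/A')
        results.append({
            'name': name,
            'score': score,
            'box': (int(xmin * im_width), int(ymin * im_height),
                    int(xmax * im_width), int(ymax * im_height)),
        })
    return results
```

Inside the loop above it could be called as `summarize_detections(output_dict, category_index, image.size)`, since `PIL.Image.size` is `(width, height)`.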

## Test results