前言

上篇文章介绍了k8s使用pv/pvc 的方式使用cephfs,

k8s(十一)、分布式存储Cephfs使用

Ceph存储有三种存储接口,分别是:

对象存储 Ceph Object Gateway

块设备 RBD

文件系统 CEPHFS

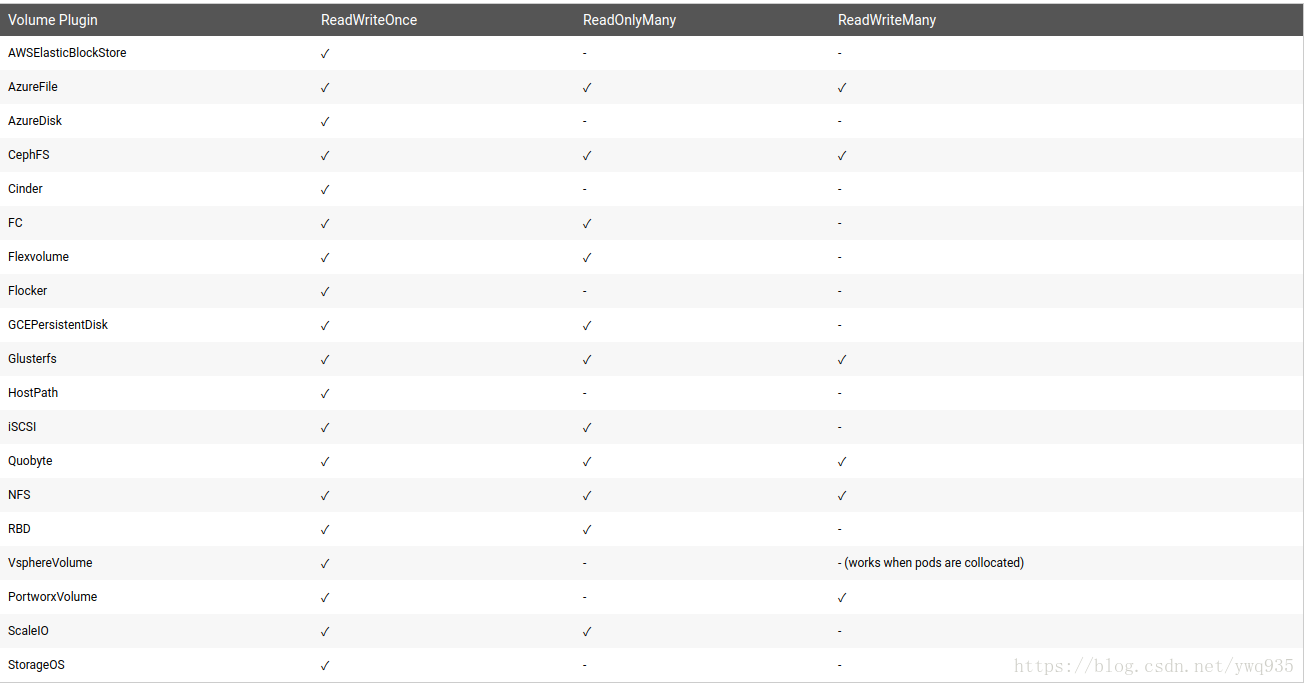

Kubernetes支持后两种存储接口,支持的接入模式如下图:

在本篇将测试使用ceph rbd作持久化存储后端

RBD创建测试

rbd的使用分为3个步骤:

1.服务端/客户端创建块设备image

2.客户端将image映射进linux系统内核,内核识别出该块设备后生成dev下的文件标识

3.格式化块设备并挂载使用

# 创建

[root@yksp020112 ~]# rbd create --size 10240000 rbd/test1 #创建指定大小的node

[root@yksp020112 ~]# rbd info test1

rbd image 'test1':

size 10000 GB in 2560000 objects

order 22 (4096 kB objects)

block_name_prefix: rbd_data.31e3b6b8b4567

format: 2

features: layering, exclusive-lock,object-map, fast-diff, deep-flatten

flags:

#映射进内核操作之前,首先查看内核版本,jw版本的ceph默认format为2,默认开启5种特性,2.x及之前的内核版本需手动调整format为1

,4.x之前要关闭object-map, fast-diff, deep-flatten功能才能成功映射到内核,这里使用的是centos7.4,内核版本3.10

[root@yksp020112 ~]# rbd feature disable test1 object-map fast-diff deep-flatten

# 映射进内核

[root@yksp020112 ~]# rbd map test1

[root@yksp020112 ~]# ls /dev/rbd0

/dev/rbd0

#挂载使用

[root@yksp020112 ~]# mkdir /mnt/cephrbd

[root@yksp020112 ~]# mkfs.ext4 -m0 /dev/rbd0

[root@yksp020112 ~]# mount /dev/rbd0 /mnt/cephrbd/

Kubernetes Dynamic Pv 使用

首先回顾一下上篇cephfs在k8s中的使用方式:

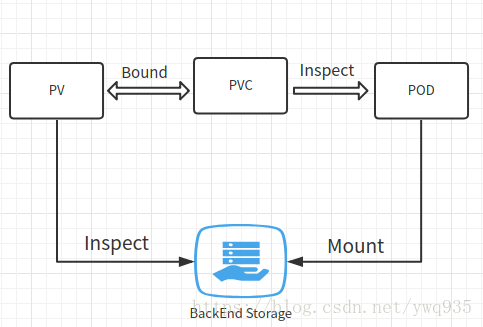

绑定使用流程: pv指定存储类型和接口,pvc绑定pv,pod挂载指定pvc.这种一一对应的方式,即为静态pv

例如cephfs的使用流程:

那么什么是动态pv呢?

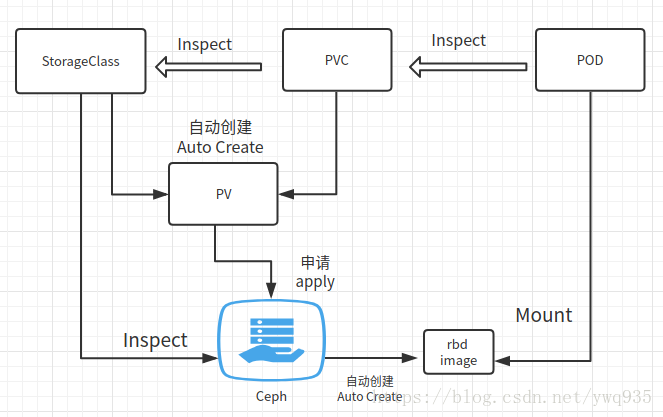

在现有PV不满足PVC的请求时,可以使用存储分类(StorageClass),pvc对接sc后,k8s会动态地创建pv,并且向相应的存储后端发出资源申请.

描述具体过程为:首先创建sc分类,PVC请求已创建的sc的资源,来自动创建pv,这样就达到动态配置的效果。即通过一个叫 Storage Class的对象由存储系统根据PVC的要求自动创建pv并使用存储资源

Kubernetes的pv支持rbd,从第一部分测试使用rbd的过程可以看出,存储实际使用的单位是image,首先必须在ceph端创建image,才能创建pv/pvc,再在pod里面挂载使用.这是一个标准的静态pv的绑定使用流程.但是这样的流程就带来了一个弊端,即一个完整的存储资源挂载使用的操作被分割成了两段,一段在ceph端划分rbd image,一段由k8s端绑定pv,这样使得管理的复杂性提高,不利于自动化运维.

因此,动态pv是一个很好的选择,ceph rbd接口的动态pv的实现流程是这样的:

根据pvc及sc的声明,自动生成pv,且向sc指定的ceph后端发出申请自动创建相应大小的rbd image,最终绑定使用

工作流程图:

下面开始演示

1.创建secret

key获取方式参考上篇文章

~/mytest/ceph/rbd# cat ceph-secret-rbd.yaml

apiVersion: v1

kind: Secret

metadata:

name: ceph-secret-rbd

type: "kubernetes.io/rbd"

data:

key: QVFCL3E1ZGIvWFdxS1JBQTUyV0ZCUkxldnRjQzNidTFHZXlVYnc9PQ==

2.创建sc

~/mytest/ceph/rbd# cat ceph-secret-rbd.yaml

kind: StorageClass

apiVersion: storage.k8s.io/v1

metadata:

name: cephrbd-test2

provisioner: kubernetes.io/rbd

reclaimPolicy: Retain

parameters:

monitors: 192.168.20.112:6789,192.168.20.113:6789,192.168.20.114:6789

adminId: admin

adminSecretName: ceph-secret-rbd

pool: rbd

userId: admin

userSecretName: ceph-secret-rbd

fsType: ext4

imageFormat: "2"

imageFeatures: "layering"

创建pvc

~/mytest/ceph/rbd# cat cephrbd-pvc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: cephrbd-pvc2

spec:

storageClassName: cephrbd-test2

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 20Gi

创建deploy,容器挂载使用创建的pvc

:~/mytest/ceph/rbd# cat deptest13dbdm.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

labels:

app: deptest13dbdm

name: deptest13dbdm

namespace: default

spec:

replicas: 1

selector:

matchLabels:

app: deptest13dbdm

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

labels:

app: deptest13dbdm

spec:

containers:

image: registry.xxx.com:5000/mysql:56v6

imagePullPolicy: Always

name: deptest13dbdm

ports:

- containerPort: 3306

protocol: TCP

resources:

limits:

memory: 16Gi

requests:

memory: 512Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /var/lib/mysql

name: datadir

subPath: deptest13dbdm

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

volumes:

- name: datadir

persistentVolumeClaim:

claimName: cephrbd-pvc2

这里会遇到一个大坑:

~# kubectl describe pvc cephrbd-pvc2

...

Error creating rbd image: executable file not found in $PATH

这是因为kube-controller-manager需要调用rbd客户端api来创建rbd image,集群的每个节点都已经安装ceph-common,但是集群是使用kubeadm部署的,kube-controller-manager组件是以容器方式工作的,内部不包含rbd的执行文件,后面在这个issue里,找到了解决的办法:

Github issue

使用外部的rbd-provisioner,提供给kube-controller-manager以rbd的执行入口,注意,issue内的demo指定的image版本有bug,不能指定rbd挂载后使用的文件系统格式,需要使用最新的版本demo,项目地址:rbd-provisioner

完整demo yaml文件:

root@yksp009027:~/mytest/ceph/rbd/rbd-provisioner# cat rbd-provisioner.yaml

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: rbd-provisioner

rules:

- apiGroups: [""]

resources: ["persistentvolumes"]

verbs: ["get", "list", "watch", "create", "delete"]

- apiGroups: [""]

resources: ["persistentvolumeclaims"]

verbs: ["get", "list", "watch", "update"]

- apiGroups: ["storage.k8s.io"]

resources: ["storageclasses"]

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources: ["events"]

verbs: ["create", "update", "patch"]

- apiGroups: [""]

resources: ["services"]

resourceNames: ["kube-dns","coredns"]

verbs: ["list", "get"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: rbd-provisioner

subjects:

- kind: ServiceAccount

name: rbd-provisioner

namespace: default

roleRef:

kind: ClusterRole

name: rbd-provisioner

apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: rbd-provisioner

rules:

- apiGroups: [""]

resources: ["secrets"]

verbs: ["get"]

- apiGroups: [""]

resources: ["endpoints"]

verbs: ["get", "list", "watch", "create", "update", "patch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: rbd-provisioner

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: rbd-provisioner

subjects:

- kind: ServiceAccount

name: rbd-provisioner

namespace: default

---

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: rbd-provisioner

spec:

replicas: 1

strategy:

type: Recreate

template:

metadata:

labels:

app: rbd-provisioner

spec:

containers:

- name: rbd-provisioner

image: quay.io/external_storage/rbd-provisioner:latest

env:

- name: PROVISIONER_NAME

value: ceph.com/rbd

serviceAccount: rbd-provisioner

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: rbd-provisioner

部署完成后,重新创建上方的sc/pv,这个时候,pod就正常运行起来了,查看pvc,可以看到此pvc触发自动生成了一个pv且绑定了此pv,pv绑定的StorageClass则是刚创建的sc:

# pod

~/mytest/ceph/rbd/rbd-provisioner# kubectl get pods | grep deptest13

deptest13dbdm-c9d5bfb7c-rzzv6 2/2 Running 0 6h

# pvc

~/mytest/ceph/rbd/rbd-provisioner# kubectl get pvc cephrbd-pvc2

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

cephrbd-pvc2 Bound pvc-d976c2bf-c2fb-11e8-878f-141877468256 20Gi RWO cephrbd-test2 23h

# 自动创建的pv

~/mytest/ceph/rbd/rbd-provisioner# kubectl get pv pvc-d976c2bf-c2fb-11e8-878f-141877468256

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-d976c2bf-c2fb-11e8-878f-141877468256 20Gi RWO Retain Bound default/cephrbd-pvc2 cephrbd-test2 23h

# sc

~/mytest/ceph/rbd/rbd-provisioner# kubectl get sc cephrbd-test2

NAME PROVISIONER AGE

cephrbd-test2 ceph.com/rbd 23h

再回到ceph节点上看看是否自动创建了rbd image

~# rbd ls

kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e

test1

~# rbd info kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e

rbd image 'kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e':

size 20480 MB in 5120 objects

order 22 (4096 kB objects)

block_name_prefix: rbd_data.47d0b6b8b4567

format: 2

features: layering

flags:

可以发现,动态创建pv/rbd成功

挂载测试

回顾最上方的kubernetes支持的接入模式截图,rbd是不支持ReadWriteMany的,而k8s的理念是像牲口一样管理你的容器,容器节点有可能随时横向扩展,横向缩减,在节点间漂移,因此,不支持多点挂载的RBD模式,显然不适合做多应用节点之间的共享文件存储,这里进行一次进一步的验证

多点挂载测试

创建一个新的deploy,并挂载同一个pvc:

root@yksp009027:~/mytest/ceph/rbd# cat deptest14dbdm.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "4"

creationTimestamp: 2018-09-26T07:32:41Z

generation: 4

labels:

app: deptest14dbdm

domain: dev.deptest14dbm.kokoerp.com

nginx_server: ""

name: deptest14dbdm

namespace: default

resourceVersion: "26647927"

selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/deptest14dbdm

uid: 5a1a62fe-c15e-11e8-878f-141877468256

spec:

replicas: 1

selector:

matchLabels:

app: deptest14dbdm

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

app: deptest14dbdm

domain: dev.deptest14dbm.kokoerp.com

nginx_server: ""

spec:

containers:

- env:

- name: DB_NAME

value: deptest14dbdm

image: registry.youkeshu.com:5000/mysql:56v6

imagePullPolicy: Always

name: deptest14dbdm

ports:

- containerPort: 3306

protocol: TCP

resources:

limits:

memory: 16Gi

requests:

memory: 512Mi

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

volumeMounts:

- mountPath: /var/lib/mysql

name: datadir

subPath: deptest14dbdm

- env:

- name: DATA_SOURCE_NAME

value: exporter:6qQidF3F5wNhrLC0@(127.0.0.1:3306)/

image: registry.youkeshu.com:5000/mysqld-exporter:v0.10

imagePullPolicy: Always

name: mysql-exporter

ports:

- containerPort: 9104

protocol: TCP

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

nodeSelector:

192.168.20.109: ""

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

volumes:

- name: datadir

persistentVolumeClaim:

claimName: cephrbd-pvc2

使用nodeselector将此deptest13和deptest14限制在同一个node中:

root@yksp009027:~/mytest/ceph/rbd# kubectl get pods -o wide | grep deptest1[3-4]

deptest13dbdm-c9d5bfb7c-rzzv6 2/2 Running 0 6h 172.26.8.82 yksp020109

deptest14dbdm-78b8bb7995-q84dk 2/2 Running 0 2m 172.26.8.84 yksp020109

不难发现在同一个节点中,是可以支持多个pod共享rbd image运行的,在容器的工作节点上查看mount条目也可以证实这一点:

192.168.20.109:~# mount | grep rbd

/dev/rbd0 on /var/lib/kubelet/plugins/kubernetes.io/rbd/rbd/rbd-image-kubernetes-dynamic-pvc-d988cfb1-c2fb-11e8-b2df-0a58ac1a084e type ext4 (rw,relatime,stripe=1024,data=ordered)

/dev/rbd0 on /var/lib/kubelet/pods/68b8e3c5-c38d-11e8-878f-141877468256/volumes/kubernetes.io~rbd/pvc-d976c2bf-c2fb-11e8-878f-141877468256 type ext4 (rw,relatime,stripe=1024,data=ordered)

/dev/rbd0 on /var/lib/kubelet/pods/1e9b04b1-c3c3-11e8-878f-141877468256/volumes/kubernetes.io~rbd/pvc-d976c2bf-c2fb-11e8-878f-141877468256 type ext4 (rw,relatime,stripe=1024,data=ordered)

修改新deploy的nodeselector指向其他的node:

~/mytest/ceph/rbd# kubectl get pods -o wide| grep deptest14db

deptest14dbdm-78b8bb7995-q84dk 0/2 Terminating 0 7m 172.26.8.84 yksp020109

deptest14dbdm-79fd495579-rkl92 0/2 ContainerCreating 0 49s <none> yksp009028

# kubectl describe pod deptest14dbdm-79fd495579-rkl92

...

Warning FailedAttachVolume 1m attachdetach-controller Multi-Attach error for volume "pvc-d976c2bf-c2fb-11e8-878f-141877468256" Volume is already exclusively attached to one node and can't be attached to another

pod将阻塞在ContainerCreating状态,描述报错很清晰地指出控制器不支持多点挂载此pv.

进行下一步测试前先删除此临时新增的deploy

滚动更新测试

在deptest13dbdm的deploy文件中指定的更新方式为滚动更新:

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

这里我们尝试一下,将正在运行的pod,从一个节点调度到另一个节点,rbd 是否能迁移到新的节点上挂载:

# 修改nodeselector

root@yksp009027:~/mytest/ceph/rbd# kubectl edit deploy deptest13dbdm

deployment "deptest13dbdm" edited

# 观察pod调度迁移过程

root@yksp009027:~/mytest/ceph/rbd# kubectl get pods -o wide| grep deptest13

deptest13dbdm-6999f5757c-fk9dp 0/2 ContainerCreating 0 4s <none> yksp009028

deptest13dbdm-c9d5bfb7c-rzzv6 2/2 Terminating 0 6h 172.26.8.82 yksp020109

root@yksp009027:~/mytest/ceph/rbd# kubectl describe pod deptest13dbdm-6999f5757c-fk9dp

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m default-scheduler Successfully assigned deptest13dbdm-6999f5757c-fk9dp to yksp009028

Normal SuccessfulMountVolume 2m kubelet, yksp009028 MountVolume.SetUp succeeded for volume "default-token-7q8pq"

Warning FailedMount 45s kubelet, yksp009028 Unable to mount volumes for pod "deptest13dbdm-6999f5757c-fk9dp_default(28e9cdd6-c3c5-11e8-878f-141877468256)": timeout expired waiting for volumes to attach/mount for pod "default"/"deptest13dbdm-6999f5757c-fk9dp". list of unattached/unmounted volumes=[datadir]

Normal SuccessfulMountVolume 35s (x2 over 36s) kubelet, yksp009028 MountVolume.SetUp succeeded for volume "pvc-d976c2bf-c2fb-11e8-878f-141877468256"

Normal Pulling 27s kubelet, yksp009028 pulling image "registry.youkeshu.com:5000/mysql:56v6"

Normal Pulled 27s kubelet, yksp009028 Successfully pulled image "registry.youkeshu.com:5000/mysql:56v6"

Normal Pulling 27s kubelet, yksp009028 pulling image "registry.youkeshu.com:5000/mysqld-exporter:v0.10"

Normal Pulled 26s kubelet, yksp009028 Successfully pulled image "registry.youkeshu.com:5000/mysqld-exporter:v0.10"

Normal Created 26s kubelet, yksp009028 Created container

Normal Started 26s kubelet, yksp009028 Started container

root@yksp009027:~/mytest/ceph/rbd# kubectl get pods -o wide| grep deptest13

deptest13dbdm-6999f5757c-fk9dp 2/2 Running 0 3m 172.26.0.87 yksp009028

#在原node上查看内核中映射的rbd image块设备描述文件已消失,挂载已取消

root@yksp020109:~/tpcc-mysql# ls /dev/rbd*

ls: cannot access '/dev/rbd*': No such file or directory

root@yksp020109:~/tpcc-mysql# mount | grep rbd

root@yksp020109:~/tpcc-mysql#

可以看出,是可以自动完成在节点间迁移的滚动更新的,整个调度的过程有些漫长,接近3分钟,这是因为其中至少涉及如下几个步骤:

1.原pod终结,释放资源

2.原node取消挂载rbd,释放内核映射

3.新node内核映射rbd image,挂载rbd到文件目录

4.创建pod,挂载指定目录

结论:

ceph rbd不支持多节点挂载,支持滚动更新.

后续

在完成了使用cephfs和ceph rbd这两种k8s分布式存储的对接方式测试之后,下一篇文章将针对这两种方式进行数据库性能测试及适用场景讨论