Author: 李毓 (Li Yu)

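In the previous section we covered how to build a Ceph distributed storage cluster. Now I will briefly explain how to use Ceph storage from a K8S cluster.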

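Anyone who has worked with K8S knows that persistent volumes are provided in basically two ways: statically (an administrator creates the PVs in advance) or dynamically (a StorageClass creates them on demand). The same applies to Ceph storage.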

Today we focus on dynamic provisioning, implemented with the cephfs provisioner. My lab runs K8S 1.18, which does not ship a built-in cephfs provisioner, so we use the community-maintained one.

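Download and install the cephfs provisioner from the community repository (now archived under kubernetes-retired). Note that `git clone` creates a directory named `external-storage`; the `external-storage-master` path used below is what the GitHub ZIP download unpacks to, so adjust the path to match how you fetched it.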

git clone https://github.com/kubernetes-retired/external-storage.git

[root@adm-master ~]# cd external-storage-master/ceph/cephfs/deploy/
[root@adm-master deploy]# ls
non-rbac  rbac  README.md
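The two `sed` commands that follow substitute `$NAMESPACE` into the RBAC manifests and the deployment, but the steps never set it. Because the provisioner is later found in `kube-system`, it was presumably set as below; treat the value as an assumption inferred from that later check.

```shell
# Assumption: the provisioner is deployed into kube-system, matching the
# later `kubectl get pods -n kube-system` check. Set this before running sed.
export NAMESPACE=kube-system
```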

[root@adm-master deploy]# sed -r -i "s/namespace: [^ ]+/namespace: $NAMESPACE/g" ./rbac/*.yaml
[root@adm-master deploy]# sed -i "/PROVISIONER_SECRET_NAMESPACE/{n;s/value:.*/value: $NAMESPACE/;}" rbac/deployment.yaml
[root@adm-master deploy]# kubectl -n $NAMESPACE apply -f ./rbac
clusterrole.rbac.authorization.k8s.io/cephfs-provisioner created
clusterrolebinding.rbac.authorization.k8s.io/cephfs-provisioner created
deployment.apps/cephfs-provisioner created
role.rbac.authorization.k8s.io/cephfs-provisioner created
rolebinding.rbac.authorization.k8s.io/cephfs-provisioner created
serviceaccount/cephfs-provisioner created

Check that the installation succeeded:

[root@adm-master deploy]# kubectl get pods -n kube-system | grep 'cephfs-provisioner'
cephfs-provisioner-6c4dc5f646-5jklv   1/1   Running   0   3m20s

Before creating the other resources we need a Secret that holds the Ceph key, so first fetch the key. On the Ceph admin node, view the admin keyring:

[liyu@admin-node my-cluster]$ cat ceph.client.admin.keyring
[client.admin]
	key = AQBzoT9gbAcpExAAqDOEJNqLXbQfmSNhNnDQuA==
	caps mds = "allow *"
	caps mon = "allow *"
	caps osd = "allow *"
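Instead of copying the key by hand, it can be pulled out of the keyring file with a short awk filter. This is a small sketch that assumes the `key = ...` line layout shown above; `keyring_key` is a hypothetical helper name. On a node with admin access, `ceph auth get-key client.admin` prints the same key directly.

```shell
# Print the value of the "key = ..." line from a Ceph keyring file.
# Assumes one key entry per file, laid out like ceph.client.admin.keyring above.
keyring_key() {
  awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}

# Usage (run in the directory holding the keyring):
#   keyring_key ceph.client.admin.keyring
```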

Base64-encode the key:

[root@adm-master deploy]# echo "AQBzoT9gbAcpExAAqDOEJNqLXbQfmSNhNnDQuA==" | base64
QVFCem9UOWdiQWNwRXhBQXFET0VKTnFMWGJRZm1TTmhObkRRdUE9PQo=
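One detail worth knowing: `echo` appends a newline, so the command above encodes the key plus `\n` (that is what the trailing `Qo=` represents). The rest of this walkthrough still worked with that value, but a newline-free value is the safer choice. A minimal sketch using `printf '%s'`, which adds no newline; `encode_ceph_key` is a hypothetical helper name.

```shell
# Base64-encode a Ceph key for a Secret's data field, without a trailing newline.
# printf '%s' writes the argument verbatim; tr strips any line wrapping base64 adds.
encode_ceph_key() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Usage:
#   encode_ceph_key "AQBzoT9gbAcpExAAqDOEJNqLXbQfmSNhNnDQuA=="
```

Alternatively, `kubectl create secret generic ceph-secret --from-literal=key=<key> -n kube-system` lets kubectl do the encoding itself.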

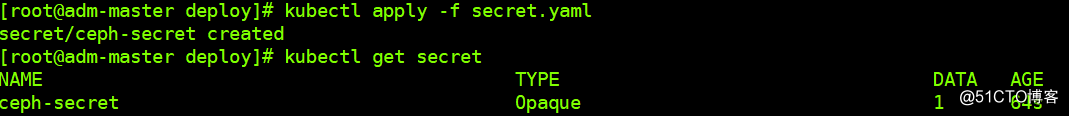

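Create the Secret. Values under `data` in a Secret must be base64-encoded, which is why the key was encoded in the previous step:

[root@adm-master deploy]# cat secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
  namespace: kube-system
data:
  key: QVFCem9UOWdiQWNwRXhBQXFET0VKTnFMWGJRZm1TTmhObkRRdUE9PQo=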

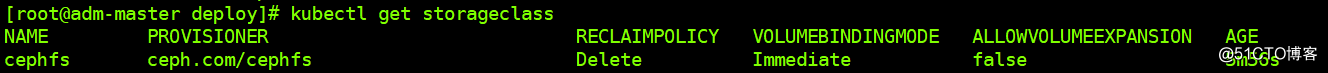

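Create the StorageClass. `provisioner: ceph.com/cephfs` is the provisioner name that the cephfs-provisioner deployment handles; `monitors` lists the Ceph monitor addresses, and `adminId`, `adminSecretName` and `adminSecretNamespace` point the provisioner at the admin key stored in the Secret above:

[root@adm-master deploy]# cat sc.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: cephfs
provisioner: ceph.com/cephfs
parameters:
  monitors: 192.168.0.131:6789,192.168.0.132:6789,192.168.0.133:6789
  adminId: admin
  adminSecretNamespace: "kube-system"
  adminSecretName: ceph-secret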

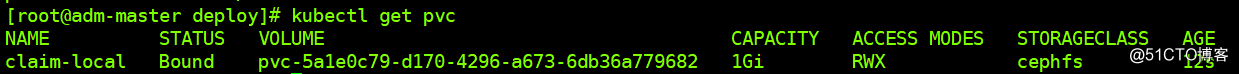

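Create the PVC. `ReadWriteMany` allows the volume to be mounted by several pods at once, which the two-pod test later relies on:

[root@adm-master deploy]# cat pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: claim-local
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: "cephfs"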

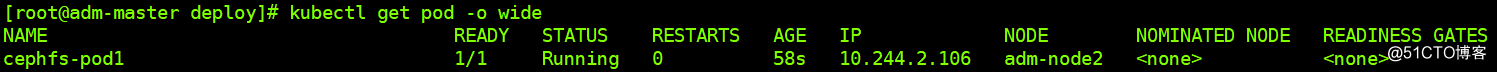
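Dynamic provisioning is asynchronous: the PVC stays `Pending` until cephfs-provisioner has created a matching PV. A small polling helper can wait for `Bound` before the pod is created. This is a sketch: `wait_pvc_bound` is a hypothetical name, the retry count and 2-second interval are arbitrary, and it assumes kubectl's current namespace is the one the PVC was created in.

```shell
# Poll a PVC's status.phase until it reports Bound, or give up after N tries.
# Assumes kubectl's current namespace holds the PVC (claim-local above).
wait_pvc_bound() {
  local pvc="$1" tries="${2:-30}" i=0 phase=""   # default: 30 tries x 2 s
  while [ "$i" -lt "$tries" ]; do
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
    if [ "$phase" = "Bound" ]; then
      echo "$pvc is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "$pvc is still ${phase:-unknown} after $tries tries" >&2
  return 1
}

# Usage:
#   wait_pvc_bound claim-local
```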

Now create a pod that uses this PVC:

[root@adm-master deploy]# cat pod.yaml
kind: Pod
apiVersion: v1
metadata:
  name: cephfs-pod1
spec:
  containers:
  - name: cephfs-busybox1
    image: busybox
    command: ["sleep", "60000"]
    volumeMounts:
    - mountPath: "/mnt/cephfs"
      name: cephfs-vol1
      readOnly: false
  volumes:
  - name: cephfs-vol1
    persistentVolumeClaim:
      claimName: claim-local

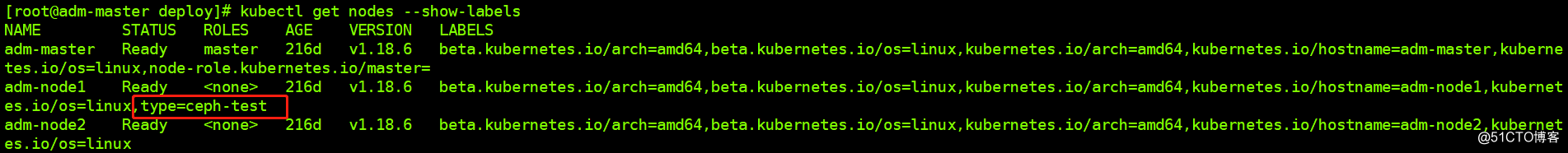

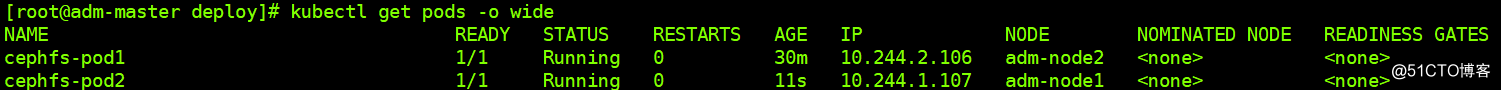

As you can see, the pod was scheduled onto node2. To make the test meaningful, we label node1 and start a second pod, cephfs-pod2, whose nodeSelector pins it to node1.

[root@adm-master deploy]# kubectl label nodes adm-node1 type=ceph-test
node/adm-node1 labeled
[root@adm-master deploy]# kubectl get nodes --show-labels

[root@adm-master deploy]# cat pod2.yaml
kind: Pod
apiVersion: v1
metadata:
  name: cephfs-pod2
spec:
  containers:
  - name: cephfs-busybox1
    image: busybox
    command: ["sleep", "60000"]
    volumeMounts:
    - mountPath: "/mnt/cephfs"
      name: cephfs-vol1
      readOnly: false
  volumes:
  - name: cephfs-vol1
    persistentVolumeClaim:
      claimName: claim-local
  nodeSelector:
    type: ceph-test

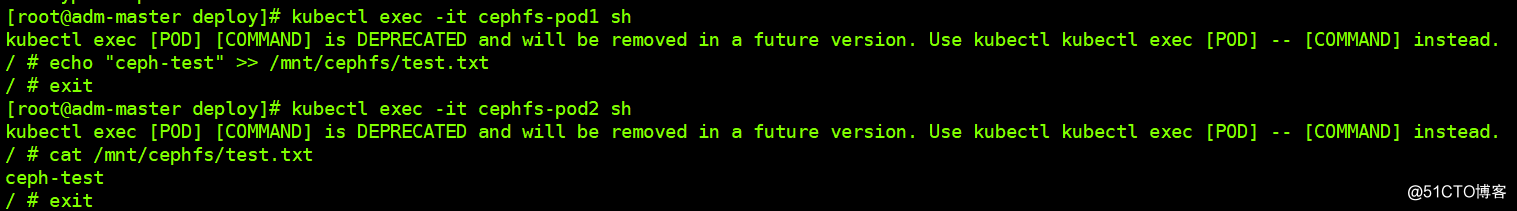
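To confirm the nodeSelector did its job, compare each pod's `spec.nodeName` with the node you expect (`kubectl get pods -o wide` shows the same information in its NODE column). A sketch; `assert_pod_on_node` is a hypothetical helper, and `adm-node2` is an assumed name for the "node2" that cephfs-pod1 landed on, since only `adm-node1` appears in the commands above.

```shell
# Report whether a pod was scheduled onto the expected node; return 1 if not.
assert_pod_on_node() {
  local pod="$1" want="$2" got
  got="$(kubectl get pod "$pod" -o jsonpath='{.spec.nodeName}')"
  if [ "$got" = "$want" ]; then
    echo "$pod -> $got"
  else
    echo "$pod is on ${got:-<unscheduled>}, expected $want" >&2
    return 1
  fi
}

# Usage (adm-node2 is an assumed node name):
#   assert_pod_on_node cephfs-pod1 adm-node2
#   assert_pod_on_node cephfs-pod2 adm-node1
```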

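Both pods are now running. To test, we write data inside pod1 and then read it from pod2.

As we can see, the data written in pod1 is visible in pod2: both pods mount the same CephFS-backed volume through the shared PVC.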
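The write-and-read check described above can be scripted with `kubectl exec`. A minimal sketch: `check_cephfs_shared` and the marker file name `sync-test.txt` are hypothetical choices, and both pods are assumed to live in kubectl's current namespace (the manifests above set none).

```shell
# Write a marker from one pod and read it back from another pod that mounts
# the same PVC. Succeeds only if the reader sees exactly what the writer wrote.
check_cephfs_shared() {
  local writer="$1" reader="$2"
  local path="${3:-/mnt/cephfs/sync-test.txt}"   # hypothetical file name
  local marker="written-by-$writer" got
  kubectl exec "$writer" -- sh -c "echo '$marker' > '$path'" || return 1
  got="$(kubectl exec "$reader" -- cat "$path")" || return 1
  [ "$got" = "$marker" ]
}

# Usage:
#   check_cephfs_shared cephfs-pod1 cephfs-pod2 && echo "pod2 sees pod1's file"
```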