Copyright notice: this is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/Katherine_hsr/article/details/80878431

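The previous article covered how to set up a pyspark environment on Linux; if you need that, see the article on setting up pyspark in a Linux virtual machine. This article explains how to run an already written py file directly in that environment.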

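File sharing

The virtual machine and the host need a shared folder. First install the VirtualBox Guest Additions. Before installing them, run the following commands to install the required packages: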

yum update

yum install gcc

yum install gcc-c++

yum install make

yum install kernel-headers

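yum install kernel-devel

The Guest Additions are packaged in bzip2 format, so install bzip2 beforehand:

yum -y install bzip2*

If any of the commands above fail for lack of permissions, prefix them with sudo.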

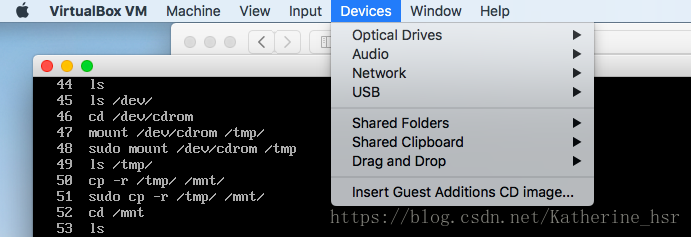
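Before moving on, it may help to confirm that the build tools really are on the PATH. The following is a minimal sketch, not part of the original setup: the function name `missing_tools` and the tool list are assumptions made for illustration, and the kernel header packages cannot be checked this way because they do not install an executable.

```python
import shutil

# Executables provided by the packages installed above
# (gcc, gcc-c++ provides g++, make, bzip2).
REQUIRED_TOOLS = ["gcc", "g++", "make", "bzip2"]


def missing_tools(tools, which=shutil.which):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Still missing:", ", ".join(missing))
    else:
        print("All build tools found on PATH")
```

The `which` parameter exists only so the lookup can be replaced in a test; in normal use call `missing_tools(REQUIRED_TOOLS)`.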

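Next, install the Guest Additions: click Devices, then choose Insert Guest Additions CD image.

If a mount-related error appears, eject the previous ISO first by choosing Devices -> Optical Drives -> Remove disk from virtual drive.

Then repeat the previous step.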

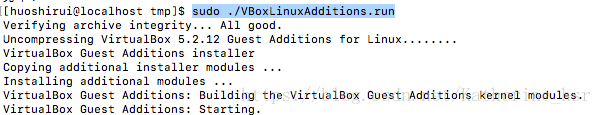

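After clicking, SSH into the virtual machine and change to the /mnt/tmp directory (this assumes the Guest Additions CD image is mounted at /mnt/tmp), then run the installer: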

cd /mnt/tmp

sudo ./VBoxLinuxAdditions.run

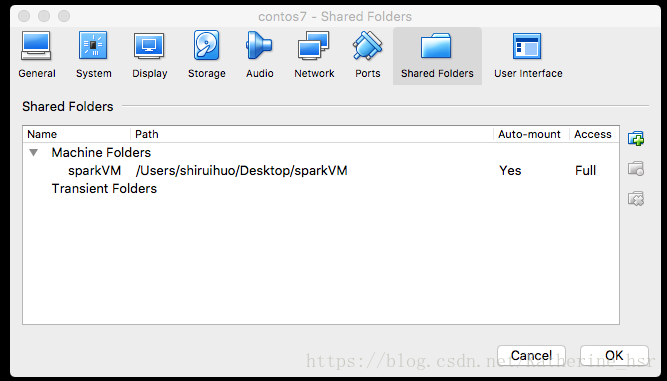

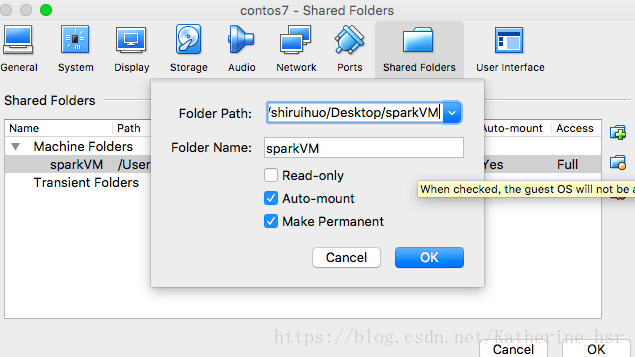
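One way to check that the installer succeeded is to look for the Guest Additions kernel modules among the loaded modules. The sketch below is an illustration under assumptions: the helper name `loaded_vbox_modules` is hypothetical, and it only inspects the text of /proc/modules. The modules may not appear until the VM has been rebooted.

```python
import os

# Kernel modules shipped with the Guest Additions; vboxsf is the one
# that implements shared folders.
VBOX_MODULES = ("vboxguest", "vboxsf")


def loaded_vbox_modules(proc_modules_text):
    """Return the Guest Additions modules named in /proc/modules content."""
    loaded = {
        line.split()[0]
        for line in proc_modules_text.splitlines()
        if line.strip()
    }
    return [name for name in VBOX_MODULES if name in loaded]


if __name__ == "__main__":
    # /proc/modules only exists on Linux, so guard the read.
    if os.path.exists("/proc/modules"):
        with open("/proc/modules") as f:
            print(loaded_vbox_modules(f.read()))
```

If `vboxsf` is missing from the result, shared folders are unlikely to work yet.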

Once the installation succeeds, you can configure the shared folder. The setting is under Devices -> Shared Folders -> Shared Folders Settings.

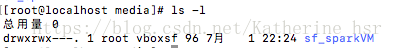

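After configuration, the shared path appears under /media/ (in this article it is /media/sf_sparkVM).

Files copied into the corresponding folder on the host now show up under the shared path in the virtual machine. The shared folder is now fully configured.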
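To confirm from inside the VM that the share is visible, you can list the directories under /media whose names start with `sf_`, the prefix VirtualBox uses for auto-mounted shared folders. This is a minimal sketch; the function name `shared_folders` is an assumption introduced here.

```python
import os


def shared_folders(media_root="/media"):
    """Return sorted paths of directories under media_root named 'sf_*'."""
    if not os.path.isdir(media_root):
        return []
    return sorted(
        os.path.join(media_root, name)
        for name in os.listdir(media_root)
        if name.startswith("sf_")
        and os.path.isdir(os.path.join(media_root, name))
    )


if __name__ == "__main__":
    for path in shared_folders():
        print(path)
```

On the setup described in this article, the list should include /media/sf_sparkVM. Reading the folder's contents may require root or membership in the vboxsf group.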

Running the py file

Create a new py file and add the following code:

import pyspark
from pyspark.sql import SparkSession

spark = SparkSession.builder \
    .master("local") \
    .appName("Word Count") \
    .config("spark.some.config.option", "some-value") \
    .getOrCreate()

l = [('Alice', 1)]

spark.createDataFrame(l).collect()
data = spark.createDataFrame(l, ['name', 'age']).collect()
print(data)
print('hello spark')

Then run it from the command line:

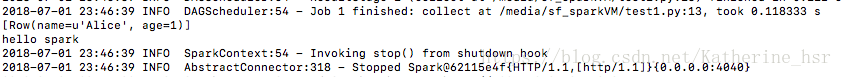

spark-submit test1.py

This produces the following output:

[root@localhost sf_sparkVM]# spark-submit test1.py

2018-07-01 23:46:28 WARN Utils:66 - Your hostname, localhost.localdomain resolves to a loopback address: 127.0.0.1; using 192.168.0.104 instead (on interface enp0s3)

2018-07-01 23:46:28 WARN Utils:66 - Set SPARK_LOCAL_IP if you need to bind to another address

2018-07-01 23:46:29 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-07-01 23:46:29 INFO SparkContext:54 - Running Spark version 2.3.1

2018-07-01 23:46:29 INFO SparkContext:54 - Submitted application: Word Count

2018-07-01 23:46:29 INFO SecurityManager:54 - Changing view acls to: root

2018-07-01 23:46:29 INFO SecurityManager:54 - Changing modify acls to: root

2018-07-01 23:46:29 INFO SecurityManager:54 - Changing view acls groups to:

2018-07-01 23:46:29 INFO SecurityManager:54 - Changing modify acls groups to:

2018-07-01 23:46:29 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

2018-07-01 23:46:30 INFO Utils:54 - Successfully started service 'sparkDriver' on port 35293.

2018-07-01 23:46:30 INFO SparkEnv:54 - Registering MapOutputTracker

2018-07-01 23:46:30 INFO SparkEnv:54 - Registering BlockManagerMaster

2018-07-01 23:46:30 INFO BlockManagerMasterEndpoint:54 - Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2018-07-01 23:46:30 INFO BlockManagerMasterEndpoint:54 - BlockManagerMasterEndpoint up

2018-07-01 23:46:30 INFO DiskBlockManager:54 - Created local directory at /tmp/blockmgr-84bec309-d89d-48f6-88a3-1a0b880a71d7

2018-07-01 23:46:30 INFO MemoryStore:54 - MemoryStore started with capacity 413.9 MB

2018-07-01 23:46:30 INFO SparkEnv:54 - Registering OutputCommitCoordinator

2018-07-01 23:46:30 INFO log:192 - Logging initialized @3342ms

2018-07-01 23:46:30 INFO Server:346 - jetty-9.3.z-SNAPSHOT

2018-07-01 23:46:30 INFO Server:414 - Started @3525ms

2018-07-01 23:46:30 INFO AbstractConnector:278 - Started ServerConnector@62115e4f{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2018-07-01 23:46:30 INFO Utils:54 - Successfully started service 'SparkUI' on port 4040.

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@33ea787{/jobs,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3c9c83e0{/jobs/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1e28e221{/jobs/job,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1ceeb7f6{/jobs/job/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4435b408{/stages,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1dd56f45{/stages/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1c41d7d9{/stages/stage,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2a9c1671{/stages/stage/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3871e3e4{/stages/pool,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1a41ac8{/stages/pool/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@327d057a{/storage,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7818a2da{/storage/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4f4110fe{/storage/rdd,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6e122184{/storage/rdd/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@341ebfe9{/environment,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@92dd5c2{/environment/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4e2ce2f9{/executors,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@314df4b9{/executors/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@70ede48e{/executors/threadDump,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1789ac94{/executors/threadDump/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3e9bafb6{/static,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7aaeebb4{/,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6ebdd8f4{/api,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@14720c7d{/jobs/job/kill,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@22a87a97{/stages/stage/kill,null,AVAILABLE,@Spark}

2018-07-01 23:46:30 INFO SparkUI:54 - Bound SparkUI to 0.0.0.0, and started at http://192.168.0.104:4040

2018-07-01 23:46:31 INFO SparkContext:54 - Added file file:/media/sf_sparkVM/test1.py at file:/media/sf_sparkVM/test1.py with timestamp 1530459991513

2018-07-01 23:46:31 INFO Utils:54 - Copying /media/sf_sparkVM/test1.py to /tmp/spark-58fef0f2-cf8a-4f34-8dda-50a85a624215/userFiles-56448a23-d6c7-498c-b561-404288c33940/test1.py

2018-07-01 23:46:31 INFO Executor:54 - Starting executor ID driver on host localhost

2018-07-01 23:46:31 INFO Utils:54 - Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 40465.

2018-07-01 23:46:31 INFO NettyBlockTransferService:54 - Server created on 192.168.0.104:40465

2018-07-01 23:46:31 INFO BlockManager:54 - Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2018-07-01 23:46:31 INFO BlockManagerMaster:54 - Registering BlockManager BlockManagerId(driver, 192.168.0.104, 40465, None)

2018-07-01 23:46:31 INFO BlockManagerMasterEndpoint:54 - Registering block manager 192.168.0.104:40465 with 413.9 MB RAM, BlockManagerId(driver, 192.168.0.104, 40465, None)

2018-07-01 23:46:31 INFO BlockManagerMaster:54 - Registered BlockManager BlockManagerId(driver, 192.168.0.104, 40465, None)

2018-07-01 23:46:31 INFO BlockManager:54 - Initialized BlockManager: BlockManagerId(driver, 192.168.0.104, 40465, None)

2018-07-01 23:46:31 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@43aebf50{/metrics/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:32 INFO SharedState:54 - Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/media/sf_sparkVM/spark-warehouse/').

2018-07-01 23:46:32 INFO SharedState:54 - Warehouse path is 'file:/media/sf_sparkVM/spark-warehouse/'.

2018-07-01 23:46:32 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@18c42a11{/SQL,null,AVAILABLE,@Spark}

2018-07-01 23:46:32 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1929ba0a{/SQL/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:32 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@27e3b2a7{/SQL/execution,null,AVAILABLE,@Spark}

2018-07-01 23:46:32 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4ab7ef06{/SQL/execution/json,null,AVAILABLE,@Spark}

2018-07-01 23:46:32 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6d239ab7{/static/sql,null,AVAILABLE,@Spark}

2018-07-01 23:46:33 INFO StateStoreCoordinatorRef:54 - Registered StateStoreCoordinator endpoint

2018-07-01 23:46:37 INFO SparkContext:54 - Starting job: collect at /media/sf_sparkVM/test1.py:12

2018-07-01 23:46:37 INFO DAGScheduler:54 - Got job 0 (collect at /media/sf_sparkVM/test1.py:12) with 1 output partitions

2018-07-01 23:46:37 INFO DAGScheduler:54 - Final stage: ResultStage 0 (collect at /media/sf_sparkVM/test1.py:12)

2018-07-01 23:46:37 INFO DAGScheduler:54 - Parents of final stage: List()

2018-07-01 23:46:37 INFO DAGScheduler:54 - Missing parents: List()

2018-07-01 23:46:37 INFO DAGScheduler:54 - Submitting ResultStage 0 (MapPartitionsRDD[6] at collect at /media/sf_sparkVM/test1.py:12), which has no missing parents

2018-07-01 23:46:37 INFO MemoryStore:54 - Block broadcast_0 stored as values in memory (estimated size 8.1 KB, free 413.9 MB)

2018-07-01 23:46:37 INFO MemoryStore:54 - Block broadcast_0_piece0 stored as bytes in memory (estimated size 4.5 KB, free 413.9 MB)

2018-07-01 23:46:37 INFO BlockManagerInfo:54 - Added broadcast_0_piece0 in memory on 192.168.0.104:40465 (size: 4.5 KB, free: 413.9 MB)

2018-07-01 23:46:37 INFO SparkContext:54 - Created broadcast 0 from broadcast at DAGScheduler.scala:1039

2018-07-01 23:46:37 INFO DAGScheduler:54 - Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[6] at collect at /media/sf_sparkVM/test1.py:12) (first 15 tasks are for partitions Vector(0))

2018-07-01 23:46:37 INFO TaskSchedulerImpl:54 - Adding task set 0.0 with 1 tasks

2018-07-01 23:46:37 INFO TaskSetManager:54 - Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7870 bytes)

2018-07-01 23:46:37 INFO Executor:54 - Running task 0.0 in stage 0.0 (TID 0)

2018-07-01 23:46:37 INFO Executor:54 - Fetching file:/media/sf_sparkVM/test1.py with timestamp 1530459991513

2018-07-01 23:46:37 INFO Utils:54 - /media/sf_sparkVM/test1.py has been previously copied to /tmp/spark-58fef0f2-cf8a-4f34-8dda-50a85a624215/userFiles-56448a23-d6c7-498c-b561-404288c33940/test1.py

2018-07-01 23:46:38 INFO CodeGenerator:54 - Code generated in 400.40251 ms

2018-07-01 23:46:38 INFO PythonRunner:54 - Times: total = 407, boot = 358, init = 48, finish = 1

2018-07-01 23:46:38 INFO Executor:54 - Finished task 0.0 in stage 0.0 (TID 0). 1823 bytes result sent to driver

2018-07-01 23:46:38 INFO TaskSetManager:54 - Finished task 0.0 in stage 0.0 (TID 0) in 1302 ms on localhost (executor driver) (1/1)

2018-07-01 23:46:38 INFO TaskSchedulerImpl:54 - Removed TaskSet 0.0, whose tasks have all completed, from pool

2018-07-01 23:46:38 INFO DAGScheduler:54 - ResultStage 0 (collect at /media/sf_sparkVM/test1.py:12) finished in 1.667 s

2018-07-01 23:46:38 INFO DAGScheduler:54 - Job 0 finished: collect at /media/sf_sparkVM/test1.py:12, took 1.790226 s

2018-07-01 23:46:39 INFO SparkContext:54 - Starting job: collect at /media/sf_sparkVM/test1.py:13

2018-07-01 23:46:39 INFO DAGScheduler:54 - Got job 1 (collect at /media/sf_sparkVM/test1.py:13) with 1 output partitions

2018-07-01 23:46:39 INFO DAGScheduler:54 - Final stage: ResultStage 1 (collect at /media/sf_sparkVM/test1.py:13)

2018-07-01 23:46:39 INFO DAGScheduler:54 - Parents of final stage: List()

2018-07-01 23:46:39 INFO DAGScheduler:54 - Missing parents: List()

2018-07-01 23:46:39 INFO DAGScheduler:54 - Submitting ResultStage 1 (MapPartitionsRDD[13] at collect at /media/sf_sparkVM/test1.py:13), which has no missing parents

2018-07-01 23:46:39 INFO MemoryStore:54 - Block broadcast_1 stored as values in memory (estimated size 8.1 KB, free 413.9 MB)

2018-07-01 23:46:39 INFO MemoryStore:54 - Block broadcast_1_piece0 stored as bytes in memory (estimated size 4.5 KB, free 413.9 MB)

2018-07-01 23:46:39 INFO BlockManagerInfo:54 - Added broadcast_1_piece0 in memory on 192.168.0.104:40465 (size: 4.5 KB, free: 413.9 MB)

2018-07-01 23:46:39 INFO SparkContext:54 - Created broadcast 1 from broadcast at DAGScheduler.scala:1039

2018-07-01 23:46:39 INFO DAGScheduler:54 - Submitting 1 missing tasks from ResultStage 1 (MapPartitionsRDD[13] at collect at /media/sf_sparkVM/test1.py:13) (first 15 tasks are for partitions Vector(0))

2018-07-01 23:46:39 INFO TaskSchedulerImpl:54 - Adding task set 1.0 with 1 tasks

2018-07-01 23:46:39 INFO TaskSetManager:54 - Starting task 0.0 in stage 1.0 (TID 1, localhost, executor driver, partition 0, PROCESS_LOCAL, 7870 bytes)

2018-07-01 23:46:39 INFO Executor:54 - Running task 0.0 in stage 1.0 (TID 1)

2018-07-01 23:46:39 INFO PythonRunner:54 - Times: total = 53, boot = -893, init = 946, finish = 0

2018-07-01 23:46:39 INFO Executor:54 - Finished task 0.0 in stage 1.0 (TID 1). 1737 bytes result sent to driver

2018-07-01 23:46:39 INFO TaskSetManager:54 - Finished task 0.0 in stage 1.0 (TID 1) in 76 ms on localhost (executor driver) (1/1)

2018-07-01 23:46:39 INFO TaskSchedulerImpl:54 - Removed TaskSet 1.0, whose tasks have all completed, from pool

2018-07-01 23:46:39 INFO DAGScheduler:54 - ResultStage 1 (collect at /media/sf_sparkVM/test1.py:13) finished in 0.112 s

2018-07-01 23:46:39 INFO DAGScheduler:54 - Job 1 finished: collect at /media/sf_sparkVM/test1.py:13, took 0.118333 s

[Row(name=u'Alice', age=1)]

hello spark

2018-07-01 23:46:39 INFO SparkContext:54 - Invoking stop() from shutdown hook

2018-07-01 23:46:39 INFO AbstractConnector:318 - Stopped Spark@62115e4f{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2018-07-01 23:46:39 INFO SparkUI:54 - Stopped Spark web UI at http://192.168.0.104:4040

2018-07-01 23:46:39 INFO MapOutputTrackerMasterEndpoint:54 - MapOutputTrackerMasterEndpoint stopped!

2018-07-01 23:46:39 INFO MemoryStore:54 - MemoryStore cleared

2018-07-01 23:46:39 INFO BlockManager:54 - BlockManager stopped

2018-07-01 23:46:39 INFO BlockManagerMaster:54 - BlockManagerMaster stopped

2018-07-01 23:46:39 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint:54 - OutputCommitCoordinator stopped!

2018-07-01 23:46:39 INFO SparkContext:54 - Successfully stopped SparkContext

2018-07-01 23:46:39 INFO ShutdownHookManager:54 - Shutdown hook called

2018-07-01 23:46:39 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-bc446cdd-6bde-48ba-91d4-33f784aa33e0

2018-07-01 23:46:39 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-58fef0f2-cf8a-4f34-8dda-50a85a624215

2018-07-01 23:46:39 INFO ShutdownHookManager:54 - Deleting directory /tmp/spark-58fef0f2-cf8a-4f34-8dda-50a85a624215/pyspark-0c194939-eabe-4312-bc76-b766b82fb77b

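[root@localhost sf_sparkVM]#

Among the log lines you can see the content printed by the program: [Row(name=u'Alice', age=1)] followed by hello spark.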
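Because the program's own output is buried among Spark's log lines, a small filter can make it easier to find. The sketch below assumes the log format seen in the output above (a timestamp followed by a level such as INFO or WARN); the name `program_output` is hypothetical, and the shell prompt check is specific to this session.

```python
import re

# Spark log lines in the output above start with
# "YYYY-MM-DD HH:MM:SS LEVEL ".
LOG_LINE = re.compile(
    r"^\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2} (INFO|WARN|ERROR|DEBUG) "
)


def program_output(lines):
    """Keep non-empty lines that are neither Spark logs nor shell prompts."""
    kept = []
    for line in lines:
        text = line.rstrip("\n")
        if not text.strip():
            continue
        if LOG_LINE.match(text) or text.startswith("[root@"):
            continue
        kept.append(text)
    return kept


if __name__ == "__main__":
    sample = [
        "2018-07-01 23:46:39 INFO DAGScheduler:54 - Job 1 finished",
        "[Row(name=u'Alice', age=1)]",
        "hello spark",
        "2018-07-01 23:46:39 INFO SparkContext:54 - Invoking stop() from shutdown hook",
    ]
    for text in program_output(sample):
        print(text)
```

Applied to the full log above, this filter leaves only the two lines printed by test1.py.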

That completes running a py file in the Linux virtual machine. In summary, there are two steps:

- Configure file sharing

- Run the spark-submit filePath command
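If you would rather launch the script from Python than type the command by hand, the second step can be sketched as below. This is only an illustration: the helper names `spark_submit_command` and `run_script` are assumptions, `--master` is the standard spark-submit option for choosing the master URL, and spark-submit must already be on the PATH.

```python
import subprocess


def spark_submit_command(script_path, master=None):
    """Build the argument list: spark-submit [--master MASTER] script_path."""
    command = ["spark-submit"]
    if master is not None:
        command += ["--master", master]
    command.append(script_path)
    return command


def run_script(script_path, master=None):
    """Run the script through spark-submit and return its exit code."""
    return subprocess.call(spark_submit_command(script_path, master))
```

For the example in this article, `run_script("/media/sf_sparkVM/test1.py")` would correspond to the command shown earlier.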