版权声明:本文为博主原创文章,未经博主允许不得转载。 https://blog.csdn.net/QFire/article/details/82558780

RNN

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

DATA_DIR = '/tmp/data/mnist'

NUM_STEPS = 1000

MINIBATCH_SIZE = 100

mnist = input_data.read_data_sets(DATA_DIR, one_hot=True)

element_size = 28

time_steps = 28

num_classes = 10

batch_size = 128

hidden_layer_size = 128

LOG_DIR = 'logs/RNN_with_summaries'

_inputs = tf.placeholder(tf.float32, shape=[None, time_steps, element_size], name='inputs')

y = tf.placeholder(tf.float32, shape=[None, num_classes], name='labels')

batch_x, batch_y = mnist.train.next_batch(batch_size)

batch_x = batch_x.reshape(batch_size, time_steps, element_size)

def variable_summaries(var):

with tf.name_scope('summaries'):

mean = tf.reduce_mean(var)

tf.summary.scalar('mean', mean)

with tf.name_scope('stddev'):

stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))

tf.summary.scalar('stddev', stddev)

tf.summary.scalar('max', tf.reduce_max(var))

tf.summary.scalar('min', tf.reduce_min(var))

tf.summary.histogram('histogram', var)

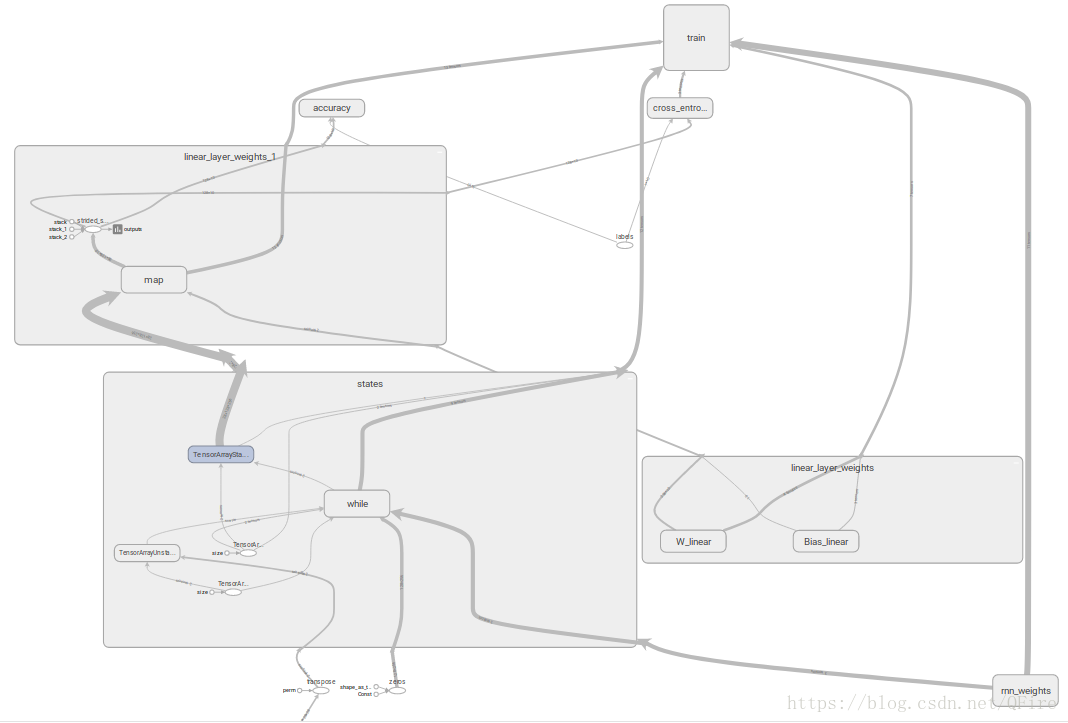

with tf.name_scope('rnn_weights'):

with tf.name_scope('W_x'):

Wx = tf.Variable(tf.zeros([element_size, hidden_layer_size]))

variable_summaries(Wx)

with tf.name_scope('W_h'):

Wh = tf.Variable(tf.zeros([hidden_layer_size, hidden_layer_size]))

variable_summaries(Wh)

with tf.name_scope('Bias'):

b_rnn = tf.Variable(tf.zeros([hidden_layer_size]))

variable_summaries(b_rnn)

def rnn_step(previous_hidden_state, x):

current_hidden_state = tf.tanh(tf.matmul(previous_hidden_state, Wh) + tf.matmul(x, Wx) + b_rnn)

return current_hidden_state

processed_input = tf.transpose(_inputs, perm=[1, 0, 2])

initial_hidden = tf.zeros([batch_size, hidden_layer_size])

all_hidden_states = tf.scan(rnn_step, processed_input, initializer=initial_hidden, name='states')

with tf.name_scope('linear_layer_weights') as scope:

with tf.name_scope('W_linear'):

Wl = tf.Variable(tf.truncated_normal([hidden_layer_size, num_classes], mean=0, stddev=.01))

variable_summaries(Wl)

with tf.name_scope('Bias_linear'):

bl = tf.Variable(tf.truncated_normal([num_classes], mean=0, stddev=.01))

variable_summaries(bl)

def get_linear_layer(hidden_state):

return tf.matmul(hidden_state, Wl) + bl

with tf.name_scope('linear_layer_weights') as scope:

all_outputs = tf.map_fn(get_linear_layer, all_hidden_states)

output = all_outputs[-1]

tf.summary.histogram('outputs', output)

with tf.name_scope('cross_entropy'):

cross_entropy = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=output, labels=y))

tf.summary.scalar('cross_entropy', cross_entropy)

with tf.name_scope('train'):

train_step = tf.train.RMSPropOptimizer(0.001, 0.9).minimize(cross_entropy)

with tf.name_scope('accuracy'):

correct_prediction = tf.equal(tf.argmax(y,1), tf.argmax(output, 1))

accuracy = (tf.reduce_mean(tf.cast(correct_prediction, tf.float32)))*100

tf.summary.scalar('accuracy', accuracy)

merged = tf.summary.merge_all()

test_data = mnist.test.images[:batch_size].reshape((-1, time_steps, element_size))

test_label = mnist.test.labels[:batch_size]

with tf.Session() as sess:

train_writer = tf.summary.FileWriter(LOG_DIR+'/train', graph=tf.get_default_graph())

test_writer = tf.summary.FileWriter(LOG_DIR+'/test', graph=tf.get_default_graph())

sess.run(tf.global_variables_initializer())

for i in range(10000):

batch_x, batch_y = mnist.train.next_batch(batch_size)

batch_x = batch_x.reshape((batch_size, time_steps, element_size))

summary, _ = sess.run([merged, train_step], feed_dict={_inputs:batch_x, y:batch_y})

train_writer.add_summary(summary, i)

if i % 1000 == 0:

acc, loss, = sess.run([accuracy, cross_entropy], feed_dict={_inputs:batch_x, y:batch_y})

print("Iter " + str(i) + ", Minibatch Loss= " + "{:.6f}".format(loss) +

", Training Accuracy= " + "{:.5f}".format(acc))

if i % 10:

summary, acc = sess.run([merged, accuracy], feed_dict={_inputs:test_data, y:test_label})

test_writer.add_summary(summary, i)

test_acc = sess.run(accuracy, feed_dict={_inputs:test_data, y:test_label})

print("Test Accuracy: ", test_acc)tensorflow中RNN

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

DATA_DIR = '/tmp/data/mnist'

NUM_STEPS = 1000

MINIBATCH_SIZE = 100

mnist = input_data.read_data_sets(DATA_DIR, one_hot=True)

element_size = 28

time_steps = 28

num_classes = 10

batch_size = 128

hidden_layer_size = 128

LOG_DIR = 'logs/RNN_with_summaries'

_inputs = tf.placeholder(tf.float32, shape=[None, time_steps, element_size], name='inputs')

y = tf.placeholder(tf.float32, shape=[None, num_classes], name='labels')

rnn_cell = tf.contrib.rnn.BasicRNNCell(hidden_layer_size)

outputs, _ = tf.nn.dynamic_rnn(rnn_cell, _inputs, dtype=tf.float32)

Wl = tf.variable(tf.truncated_normal([hidden_layer_size, num_classes], mean=0, stddev=0.01))

bl = tf.Variable(tf.truncated_normal([num_classes], mean=0, stddev=.01))

def get_linear_layer(vector):

return tf.matmul(vector, Wl) + bl

last_rnn_output = outputs[:, -1, :]

final_output = get_linear_layer(last_rnn_output)

softmax = tf.nn.softmax_cross_entropy_with_logits(logits=final_output, labels=y)

cross_entropy = tf.reduce_mean(softmax)

train_step = tf.train.RMSPropOptimizer(0.001, 0.9).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(final_output, 1))

accuracy = (tf.reduce_mean(tf.cast(correct_prediction, tf.float32)))*100

sess = tf.InteractiveSession()

sess.run(tf.global_variables_initializer())

test_data = mnist.test.images[:batch_size].reshape((-1, time_steps, element_size))

test_label = mnist.test.labels[:batch_size]

for i in range(3001):

batch_x, batch_y = mnist.train.next_batch(batch_size)

batch_x = batch_x.reshape((batch_size, time_steps, element_size))

summary = sess.run(train_step, feed_dict={_inputs:batch_x, y:batch_y})

if i % 1000 == 0:

acc, loss, = sess.run([accuracy, cross_entropy], feed_dict={_inputs:batch_x, y:batch_y})

print("Iter " + str(i) + ", Minibatch Loss= " + "{:.6f}".format(loss) +

", Training Accuracy= " + "{:.5f}".format(acc))

test_acc = sess.run(accuracy, feed_dict={_inputs:test_data, y:test_label})

print("Test Accuracy: ", test_acc)处理RNN的文本序列

import numpy as np

import tensorflow as tf

batch_size = 128;embedding_dimension=64;num_classes=2

hidden_layer_size=32;times_steps=6; element_size=1

digit_to_word_map = {1:"One",2:"Two",3:"Three",4:"Four",5:"Five",6:"Six",7:"Seven",8:"Eight",9:"Nine"}

digit_to_word_map[0] = 'PAD'

even_sentences = []

odd_sentences = []

seqlens = []

for i in range(10000):

rand_seq_len = np.random.choice(range(3,7))

seqlens.append(rand_seq_len)

rand_odd_ints = np.random.choice(range(1,10,2), rand_seq_len)

rand_event_ints = np.random.choice(range(2,10,2), rand_seq_len)

# Padding

if rand_seq_len<6:

rand_odd_ints = np.append(rand_odd_ints, [0]*(6-rand_seq_len))

rand_event_ints = np.append(rand_event_ints, [0]*(6-rand_seq_len))

even_sentences.append(" ".join([digit_to_word_map[r] for r in rand_odd_ints]))

odd_sentences.append(" ".join([digit_to_word_map[r] for r in rand_event_ints]))

data = even_sentences + odd_sentences

seqlens *= 2

print(seqlens[0:6])

print(even_sentences[0:6])

print(odd_sentences[0:6])

word2index_map = {}

index = 0

for sent in data:

for word in sent.lower().split():

if word not in word2index_map:

word2index_map[word] = index

index += 1

print(word2index_map)

index2word_map = {index: word for word, index in word2index_map.items()}

vocabulary_size = len(index2word_map)

print(vocabulary_size)

labels = [1]*10000 + [0]*10000

for i in range(len(labels)):

label = labels[i]

one_hot_encoding = [0]*2

one_hot_encoding[label] = 1

labels[i] = one_hot_encoding

data_indices = list(range(len(data)))

np.random.shuffle(data_indices)

data = np.array(data)[data_indices]

print(labels[:10])

labels = np.array(labels)[data_indices]

seqlens = np.array(seqlens)[data_indices]

train_x = data[:10000]

train_y = labels[:10000]

train_seqlens = seqlens[:10000]

test_x = data[10000:]

test_y = labels[10000:]

test_seqlens = seqlens[10000:]

def get_sentence_batch(batch_size, data_x, data_y, data_seqlens):

instance_indices = list(range(len(data_x)))

np.random.shuffle(instance_indices)

batch = instance_indices[:batch_size]

x = [[word2index_map[word] for word in data_x[i].lower().split()] for i in batch]

y = [data_y[i] for i in batch]

seqlens = [data_seqlens[i] for i in batch]

return x, y, seqlens

_inputs = tf.placeholder(tf.int32, shape=[batch_size, times_steps])

_labels = tf.placeholder(tf.float32, shape=[batch_size, num_classes])

_seqlens = tf.placeholder(tf.int32, shape=[batch_size])

with tf.name_scope('embeddings'):

embeddings = tf.Variable(tf.random_uniform([vocabulary_size, embedding_dimension], -1.0, 1.0), name="embedding")

embed = tf.nn.embedding_lookup(embeddings, _inputs)

with tf.variable_scope('lstm'):

lstm_cell = tf.contrib.rnn.BasicLSTMCell(hidden_layer_size, forget_bias=1.0)

outputs, states = tf.nn.dynamic_rnn(lstm_cell, embed, sequence_length=_seqlens, dtype=tf.float32)

weights = {

'linear_layer' : tf.Variable(tf.truncated_normal([hidden_layer_size, num_classes], mean=0, stddev=.01))

}

biases = {

'linear_layer' : tf.Variable(tf.truncated_normal([num_classes], mean=0, stddev=.01))

}

final_output = tf.matmul(states[1], weights["linear_layer"]) + biases["linear_layer"]

softmax = tf.nn.softmax_cross_entropy_with_logits(logits=final_output, labels=_labels)

cross_entropy = tf.reduce_mean(softmax)

train_step = tf.train.RMSPropOptimizer(0.001, 0.9).minimize(cross_entropy)

correct_prediction = tf.equal(tf.argmax(_labels, 1), tf.argmax(final_output, 1))

accuracy = (tf.reduce_mean(tf.cast(correct_prediction, tf.float32)))*100

with tf.Session() as sess:

sess.run(tf.global_variables_initializer())

for step in range(1000):

x_batch, y_batch, seqlen_batch = get_sentence_batch(batch_size, train_x, train_y, train_seqlens)

sess.run(train_step, feed_dict={_inputs:x_batch, _labels:y_batch, _seqlens:seqlen_batch})

if step % 100 == 0:

acc = sess.run(accuracy, feed_dict={_inputs:x_batch, _labels:y_batch, _seqlens:seqlen_batch})

print("Accuracy at %d: %.5f" % (step, acc))

for test_batch in range(5):

x_test, y_test, seqlen_test = get_sentence_batch(batch_size, test_x, test_y, test_seqlens)

batch_pred, batch_acc = sess.run([tf.argmax(final_output,1),accuracy], feed_dict={_inputs:x_test, _labels:y_test, _seqlens:seqlen_test})

print("Test batch accuracy at %d: %.5f" % (test_batch, batch_acc))

output_examples = sess.run([outputs], feed_dict={_inputs:x_test, _labels:y_test, _seqlens:seqlen_test})

states_examples = sess.run([states[1]], feed_dict={_inputs:x_test, _labels:y_test, _seqlens:seqlen_test})