Maoyan has some basic anti-scraping checks, so we set a request header to get around them.

# The User-Agent is one field of the request header; varying it is a simple way to get past basic anti-scraping checks.
# (The main script imports this module as UA.)

def UserAgent():
    # Named user_agents rather than list, to avoid shadowing the built-in list type
    user_agents = [
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.95 Safari/537.36 OPR/26.0.1656.60',
        'Mozilla/5.0 (Windows NT 5.1; U; en; rv:1.8.1) Gecko/20061208 Firefox/2.0.0 Opera 9.50',
        'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; en) Opera 9.50',
        'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0',
        'Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/39.0.2171.71 Safari/537.36',
        'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
        'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.16 (KHTML, like Gecko) Chrome/10.0.648.133 Safari/534.16',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/30.0.1599.101 Safari/537.36',
        'Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.11 TaoBrowser/2.0 Safari/536.11',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER',
        'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; LBBROWSER)',
        'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E; LBBROWSER)',
        'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; QQBrowser/7.0.3698.400)',
        'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; QQDownload 732; .NET4.0C; .NET4.0E)',
        'Mozilla/5.0 (Windows NT 5.1) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.84 Safari/535.11 SE 2.X MetaSr 1.0',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Maxthon/4.4.3.4000 Chrome/30.0.1599.101 Safari/537.36',
        'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/38.0.2125.122 UBrowser/4.0.3214.0 Safari/537.36',
        'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36',
    ]
    return user_agents
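As a minimal sketch of how such a list might be used, the snippet below picks one entry at random and places it under the `User-Agent` header key. The helper name `random_headers` and the shortened two-entry list are assumptions made for illustration; they are not part of the original script.

```python
import random

# Shortened stand-in for the list returned by UserAgent() (illustration only).
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36',
]


def random_headers(user_agents):
    """Hypothetical helper: build a headers dict with a randomly chosen User-Agent."""
    # The HTTP header name is 'User-Agent', with a hyphen.
    return {'User-Agent': random.choice(user_agents)}


headers = random_headers(USER_AGENTS)
print(headers)
```

The resulting dict can be passed as `headers=` to `requests.get`, so each run (or each request, if the helper is called per request) presents a different browser string.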

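The script uses a process pool to fetch the pages, extracts the data with a regular expression, and saves it to a file.

One thing to watch out for: the encoding of Chinese characters.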
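The encoding issue shows up in two places: `json.dumps` escapes non-ASCII characters by default, and `open` uses a platform-dependent encoding unless one is given. The sketch below illustrates both, using a made-up record and a temporary file (both are assumptions for the example, not data from the site).

```python
import json
import os
import tempfile

record = {'title': '示例电影'}  # made-up sample record

escaped = json.dumps(record)                       # non-ASCII becomes \uXXXX escapes
readable = json.dumps(record, ensure_ascii=False)  # Chinese characters are kept as-is

print(escaped)
print(readable)

# Write with an explicit encoding so the result does not depend on the platform default.
path = os.path.join(tempfile.mkdtemp(), 'demo.txt')
with open(path, 'a', encoding='utf-8') as f:
    f.write(readable + '\n')

# Reading back with the same encoding recovers the original record.
with open(path, encoding='utf-8') as f:
    round_trip = json.loads(f.readline())
```

Both forms are valid JSON and decode to the same dict; `ensure_ascii=False` only matters if the file should be readable by a person.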

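'''
Steps:
1. Fetch a single page: request the target site with requests, get the page's HTML, and return it.
2. Parse the HTML with a regular expression to extract each movie's title, cast, release date, score, poster image, and so on.
3. Save the information to a file.
4. Use multiple processes to speed up the crawl.
'''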

import json
import random
import re
from multiprocessing import Pool

import requests
from requests.exceptions import RequestException

from UA import UserAgent

# Pick a random User-Agent for the request header
# UA = UserAgent()
# headers = {'User-Agent': random.choice(UA)}
# print(headers)

# Most of the User-Agent strings in the list above are for Windows browsers. I am on Linux, so I copied one from my own browser instead.
headers = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36'}

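def get_one_page(url):
    # Fetch the HTML of one page; return None if the request fails or the status is not 200
    try:
        # The timeout keeps a stalled connection from hanging the worker process
        response = requests.get(url, headers=headers, timeout=10)
        if response.status_code == 200:
            return response.text
        return None
    except RequestException:
        return None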

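def parse_one_page(html):
    # Parse the page with a regular expression.
    # Raw string so that \s and \S are not treated as string escapes; the releasetime
    # group stops before </p> so the closing tag is not captured with the date.
    pattern = re.compile(r'<dd>[\s\S]+?board-index[\s\S]+?>([\s\S]+?)</i>[\s\S]+?data-src="([\s\S]+?)"[\s\S]+?name"><a[\s\S]+?>([\s\S]+?)</a>[\s\S]+?star">([\s\S]+?)</p>[\s\S]+?releasetime">([\s\S]+?)</p>[\s\S]+?integer">([\s\S]+?)</i>[\s\S]+?fraction">([\s\S]+?)</i>[\s\S]+?</dd>')
    items = re.findall(pattern, html)
    # print(items)
    # Yield one dict per movie (generator)
    for item in items:
        yield {
            'index': item[0],
            'pic': item[1],
            'title': item[2],
            'star': item[3].strip()[3:],  # drop the 3-character "主演:" prefix
            'time': item[4].strip()[5:],  # drop the 5-character "上映时间:" prefix
            'score': item[5] + item[6],   # integer part + fractional part
        }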

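def write_2_file(item):
    # Append one movie record to the output file
    # Serialize the dict to a JSON string; ensure_ascii=False keeps Chinese characters readable instead of \uXXXX escapes
    content = json.dumps(item, ensure_ascii=False)
    # encoding='utf-8' is also needed here so the Chinese text is written correctly
    with open('/home/xiaohaozi/进阶之路/爬虫/Top100.txt', 'a', encoding='utf-8') as f:
        f.write(content + '\n')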

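def main(offset):
    url = 'http://maoyan.com/board/4?offset=' + str(offset)
    html = get_one_page(url)
    # print(html)
    if html is None:
        # The request failed; skip this page instead of passing None to the parser
        return
    for item in parse_one_page(html):
        # print(item)
        write_2_file(item)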

if __name__ == '__main__':
    # Multiprocessing: one task per page, with offsets 0, 10, ..., 90
    with Pool() as pool:
        pool.map(main, [i * 10 for i in range(10)])
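To see what the regular expression in `parse_one_page` captures without hitting the site, it can be run against a small hand-written fragment. The HTML below is a hypothetical approximation of the `<dd>` structure the pattern expects, with made-up values; the real page markup may differ. In this sketch the `releasetime` group stops before `</p>`, so the closing tag is not captured along with the date.

```python
import re

# Hypothetical fragment with made-up values; not copied from the real page.
SAMPLE_HTML = '''
<dd>
  <i class="board-index board-index-1">1</i>
  <a href="/films/0" class="image-link">
    <img data-src="https://example.com/poster.jpg" class="board-img" />
  </a>
  <p class="name"><a href="/films/0">示例电影</a></p>
  <p class="star">
    主演：演员甲,演员乙
  </p>
  <p class="releasetime">上映时间：2000-01-01</p>
  <p class="score"><i class="integer">9.</i><i class="fraction">5</i></p>
</dd>
'''

# Same pattern as in the script, split across lines for readability.
PATTERN = re.compile(
    r'<dd>[\s\S]+?board-index[\s\S]+?>([\s\S]+?)</i>'
    r'[\s\S]+?data-src="([\s\S]+?)"'
    r'[\s\S]+?name"><a[\s\S]+?>([\s\S]+?)</a>'
    r'[\s\S]+?star">([\s\S]+?)</p>'
    r'[\s\S]+?releasetime">([\s\S]+?)</p>'
    r'[\s\S]+?integer">([\s\S]+?)</i>'
    r'[\s\S]+?fraction">([\s\S]+?)</i>'
    r'[\s\S]+?</dd>'
)


def parse_sample(html):
    """Turn each 7-group match into a dict, mirroring parse_one_page."""
    for item in PATTERN.findall(html):
        yield {
            'index': item[0],
            'pic': item[1],
            'title': item[2],
            'star': item[3].strip()[3:],  # drop the 3-character cast prefix
            'time': item[4].strip()[5:],  # drop the 5-character release-date prefix
            'score': item[5] + item[6],
        }


items = list(parse_sample(SAMPLE_HTML))
print(items)
```

`[\s\S]+?` matches any character, newlines included, as few times as possible, which is why the pattern works across line breaks without the `re.S` flag.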

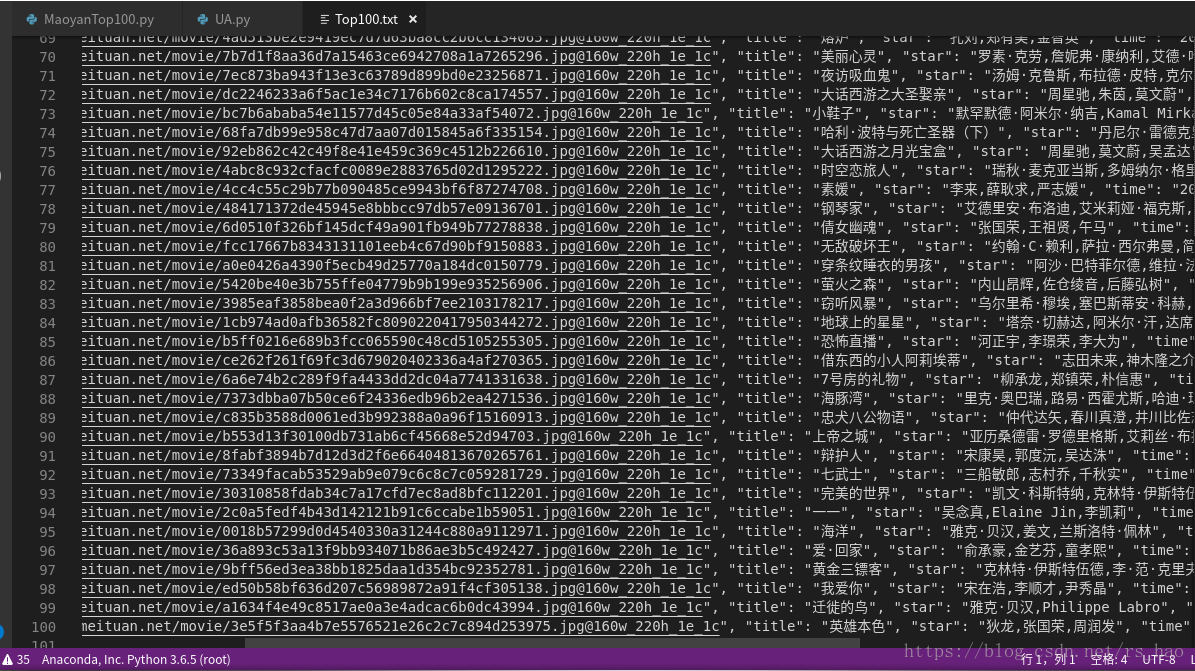

In the end we get the information for the Maoyan Top 100 movies.

I won't post the remaining screenshots one by one.
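Since each record is written as one JSON object per line, the output file can be loaded back line by line. The following is a minimal sketch under stated assumptions: the helper name `load_movies`, the temporary file standing in for `Top100.txt`, and the two sample records are all made up for illustration.

```python
import json
import os
import tempfile


def load_movies(path):
    """Hypothetical helper: read one JSON object per line into a list of dicts."""
    movies = []
    with open(path, encoding='utf-8') as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                movies.append(json.loads(line))
    return movies


# Temporary file standing in for Top100.txt, filled with made-up records
# written out of rank order on purpose.
demo_path = os.path.join(tempfile.mkdtemp(), 'Top100_demo.txt')
with open(demo_path, 'a', encoding='utf-8') as f:
    f.write(json.dumps({'index': '2', 'title': '示例电影二'}, ensure_ascii=False) + '\n')
    f.write(json.dumps({'index': '1', 'title': '示例电影一'}, ensure_ascii=False) + '\n')

movies = load_movies(demo_path)
# Worker processes append to the file in whatever order they finish,
# so sort by rank after loading.
movies.sort(key=lambda m: int(m['index']))
print([m['title'] for m in movies])
```

Sorting after loading is a design choice that keeps the crawler itself simple: the pages can be fetched in parallel and written in any order.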