麦克风阵列仿真环境的搭建

1. 引言

之前,我在语音增强一文中,提到了有关麦克风阵列语音增强的介绍,当然,麦克风阵列能做的东西远远不只是在语音降噪上的应用,它还可以用来做声源定位、声源估计、波束形成、回声抑制等。个人认为,麦克风阵列在声源定位和波束形成(多指抑制干扰语音方面)的优势是单通道麦克风算法无法比拟的。因为,利用多麦克风以后,就会将空间信息考虑到算法中,这样就特别适合解决一些与空间相关性很强的语音处理问题。

然而,在做一些麦克风阵列相关的算法研究的时候,最先遇到的问题就是:实验环境的搭建。很多做麦克风阵列的爱好者并没有实际的硬件实验环境,这也就成了很多人进行麦克风阵列入门的难题。这里,我要分享的是爱丁堡大学语音实验室开源的基于MATLAB的麦克风阵列实验仿真环境。利用该仿真环境,我们就可以随意的设置房间的大小,混响程度,声源方向以及噪声等基本参数,然后得到我们想要的音频文件去测试你自己相应的麦克风阵列算法。

2. 代码介绍

原始的代码被我加以修改,也是为了更好的运行,如果有兴趣的话,大家还可以参考爱丁堡大学最初的源码,并且我也上传到我的CSDN码云上了,链接是:https://gitee.com/wind_hit/Microphone-Array-Simulation-Environment。 这套MATLAB代码的主函数是multichannelSignalGenerator(),具体如下:

function [mcSignals,setup] = multichannelSignalGenerator(setup)

%-----------------------------------------------------------------------

% Producing the multi_noisy_signals for Mic array Beamforming.

%

% Usage: multichannelSignalGenerator(setup)

%

% setup.nRirLength : The length of Room Impulse Response Filter

% setup.hpFilterFlag : use 'false' to disable high-pass filter, the high-%pass filter is enabled by default

% setup.reflectionOrder : reflection order, default is -1, i.e. maximum order.

% setup.micType : [omnidirectional, subcardioid, cardioid, hypercardioid, bidirectional], default is omnidirectional.

%

% setup.nSensors : The numbers of the Mic

% setup.sensorDistance : The distance between the adjacent Mics (m)

% setup.reverbTime : The reverberation time of room

% setup.speedOfSound : sound velocity (m/s)

%

% setup.noiseField : Two kinds of Typical noise field, 'spherical' and 'cylindrical'

% setup.sdnr : The target mixing snr for diffuse noise and clean siganl.

% setup.ssnr : The approxiated mixing snr for sensor noise and clean siganl.

%

% setup.roomDim : 1 x 3 array specifying the (x,y,z) coordinates of the room (m).

% setup.micPoints : 3 x M array, the rows specifying the (x,y,z) coordinates of the mic postions (m).

% setup.srcPoint : 3 x M array, the rows specifying the (x,y,z) coordinates of the audio source postion (m).

%

% srcHeight : The height of target audio source

% arrayHeight : The height of mic array

%

% arrayCenter : The Center Postion of mic array

%

% arrayToSrcDistInt :The distance between the array and audio source on the xy axis

%

%

%

%

%

%

% How To Use : JUST RUN

%

%

%

% Code From: Audio analysis Lab of Aalborg University (Website: https://audio.create.aau.dk/),

% slightly modified by Wind at Harbin Institute of Technology, Shenzhen, in 2018.3.24

%

% Copyright (C) 1989, 1991 Free Software Foundation, Inc.

%-------------------------------------------------------------------------

addpath([cd,'\..\rirGen\']);

%-----------------------------------------------initial parameters-----------------------------------

setup.nRirLength = 2048;

setup.hpFilterFlag = 1;

setup.reflectionOrder = -1;

setup.micType = 'omnidirectional';

setup.nSensors = 4;

setup.sensorDistance = 0.05;

setup.reverbTime = 0.1;

setup.speedOfSound = 340;

setup.noiseField = 'spherical';

setup.sdnr = 20;

setup.ssnr = 25;

setup.roomDim = [3;4;3];

srcHeight = 1;

arrayHeight = 1;

arrayCenter = [setup.roomDim(1:2)/2;1];

arrayToSrcDistInt = [1,1];

setup.srcPoint = [1.5;1;1];

setup.micPoints = generateUlaCoords(arrayCenter,setup.nSensors,setup.sensorDistance,0,arrayHeight);

[cleanSignal,setup.sampFreq] = audioread('..\data\twoMaleTwoFemale20Seconds.wav');

%---------------------------------------------------initial end----------------------------------------

%-------------------------------algorithm processing--------------------------------------------------

if setup.reverbTime == 0,

setup.reverbTime = 0.2;

reflectionOrder = 0;

else

reflectionOrder = -1;

end

rirMatrix = rir_generator(setup.speedOfSound,setup.sampFreq,setup.micPoints',setup.srcPoint',setup.roomDim',...

setup.reverbTime,setup.nRirLength,setup.micType,setup.reflectionOrder,[],[],setup.hpFilterFlag);

for iSens = 1:setup.nSensors,

tmpCleanSignal(:,iSens) = fftfilt(rirMatrix(iSens,:)',cleanSignal);

end

mcSignals.clean = tmpCleanSignal(setup.nRirLength:end,:);

setup.nSamples = length(mcSignals.clean);

mcSignals.clean = mcSignals.clean - ones(setup.nSamples,1)*mean(mcSignals.clean);

%-------produce the microphone recieved clean signals---------------------------------------------

mic_clean1=10*mcSignals.clean(:,1); %Because of the attenuation of the recievd signals,Amplify the signals recieved by Mics with tenfold

mic_clean2=10*mcSignals.clean(:,2);

mic_clean3=10*mcSignals.clean(:,3);

mic_clean4=10*mcSignals.clean(:,4);

audiowrite('mic_clean1.wav' ,mic_clean1,setup.sampFreq);

audiowrite('mic_clean2.wav' ,mic_clean2,setup.sampFreq);

audiowrite('mic_clean3.wav' ,mic_clean3,setup.sampFreq);

audiowrite('mic_clean4.wav' ,mic_clean4,setup.sampFreq);

%----------------------------------end--------------------------------------------------

addpath([cd,'\..\nonstationaryMultichanNoiseGenerator\']);

cleanSignalPowerMeas = var(mcSignals.clean);

mcSignals.diffNoise = generateMultichanBabbleNoise(setup.nSamples,setup.nSensors,setup.sensorDistance,...

setup.speedOfSound,setup.noiseField);

diffNoisePowerMeas = var(mcSignals.diffNoise);

diffNoisePowerTrue = cleanSignalPowerMeas/10^(setup.sdnr/10);

mcSignals.diffNoise = mcSignals.diffNoise*...

diag(sqrt(diffNoisePowerTrue)./sqrt(diffNoisePowerMeas));

mcSignals.sensNoise = randn(setup.nSamples,setup.nSensors);

sensNoisePowerMeas = var(mcSignals.sensNoise);

sensNoisePowerTrue = cleanSignalPowerMeas/10^(setup.ssnr/10);

mcSignals.sensNoise = mcSignals.sensNoise*...

diag(sqrt(sensNoisePowerTrue)./sqrt(sensNoisePowerMeas));

mcSignals.noise = mcSignals.diffNoise + mcSignals.sensNoise;

mcSignals.observed = mcSignals.clean + mcSignals.noise;

%------------------------------processing end-----------------------------------------------------------

%----------------produce the noisy speech of MIc in the specific ervironment sets------------------------

noisy_mix1=10*mcSignals.observed(:,1); %Amplify the signals recieved by Mics with tenfold

noisy_mix2=10*mcSignals.observed(:,2);

noisy_mix3=10*mcSignals.observed(:,3);

noisy_mix4=10*mcSignals.observed(:,4);

l1=size(noisy_mix1);

l2=size(noisy_mix2);

l3=size(noisy_mix3);

l4=size(noisy_mix4);

audiowrite('diffused_babble_noise1_20dB.wav' ,noisy_mix1,setup.sampFreq);

audiowrite('diffused_babble_noise2_20dB.wav' ,noisy_mix2,setup.sampFreq);

audiowrite('diffused_babble_noise3_20dB.wav' ,noisy_mix3,setup.sampFreq);

audiowrite('diffused_babble_noise4_20dB.wav' ,noisy_mix4,setup.sampFreq);

%-----------------------------end-------------------------------------------------------------------------

这个是主函数,直接运行尽可以得到想要的音频文件,但是你需要先给出你的纯净音频文件和噪声音频,分别对应着:multichannelSignalGenerator()函数中的语句:[cleanSignal,setup.sampFreq] = audioread('..\data\twoMaleTwoFemale20Seconds.wav'),和generateMultichanBabbleNoise()函数中的语句:[singleChannelData,samplingFreq] = audioread('babble_8kHz.wav') 。

直接把它们替换成你想要处理的音频文件即可。

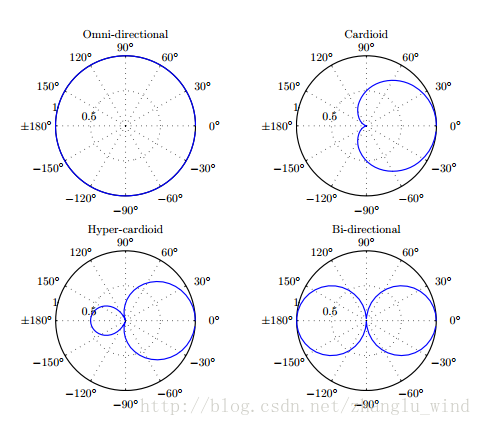

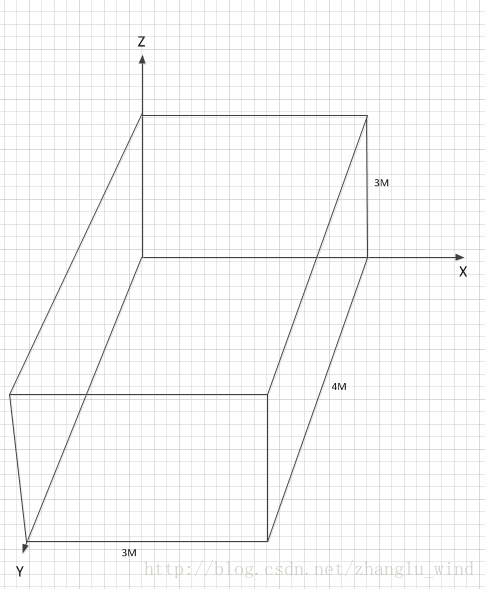

除此之外,还有一些基本实验环境参数设置,包括:麦克风的形状为线性麦克风阵列(该代码只能对线性阵列进行仿真建模,并且还是均匀线性阵列,这个不需要设置);麦克风的类型(micType),有全指向型(omnidirectional),心型指向(cardioid),亚心型指向(subcardioid,不知道咋翻译,请见谅) , 超心型(hypercardioid), 双向型(bidirectional),一般默认是全指向型,如下图1所示;麦克风的数量(nSensors);各麦克风之间的间距(sensorDistance);麦克风阵列的中心位置(arrayCenter),用(x,y,z)坐标来表示;麦克风阵列的高度(arrayHeight),感觉和前面的arrayCenter有所重复,不知道为什么还要设置这么一个参数;目标声源的位置(srcPoint),也是用(x,y,z)坐标来表示;目标声源的高度(srcHeight);麦克风阵列距离目标声源的距离(arrayToSrcDistInt),是在xy平面上的投影距离;房间的大小(roomDim),另外房间的(x,y,z)坐标系如图2所示;房间的混响时间(reverbTime);散漫噪声场的类型(noiseField),分为球形场(spherical)和圆柱形场(cylindrical)。

图1 麦克风类型图

图二 房间的坐标系

以上便是整个仿真实验环境的参数配置,虽然只能对均匀线性的麦克风阵列进行实验测试,但是这对满足我们进行线阵阵列算法的测试是有很大的帮助。说到底,这种麦克风阵列环境的音频数据产生方法还是基于数学模型的仿真,并不可能取代实际的硬件实验环境测试,所以要想在工程上实现麦克风阵列的一些算法,仍然避免不了在实际的环境中进行测试。最后,希望分享的这套代码对大家进行麦克风阵列算法的入门提供帮助。