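This post covers the main HDFS file operations through the Java API: viewing, creating, deleting, uploading, and downloading files. The complete source code is at the end.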

For setting up the environment, see: https://blog.csdn.net/qq_25948717/article/details/82015131

Maven is used to manage the dependency packages and keep their versions consistent.

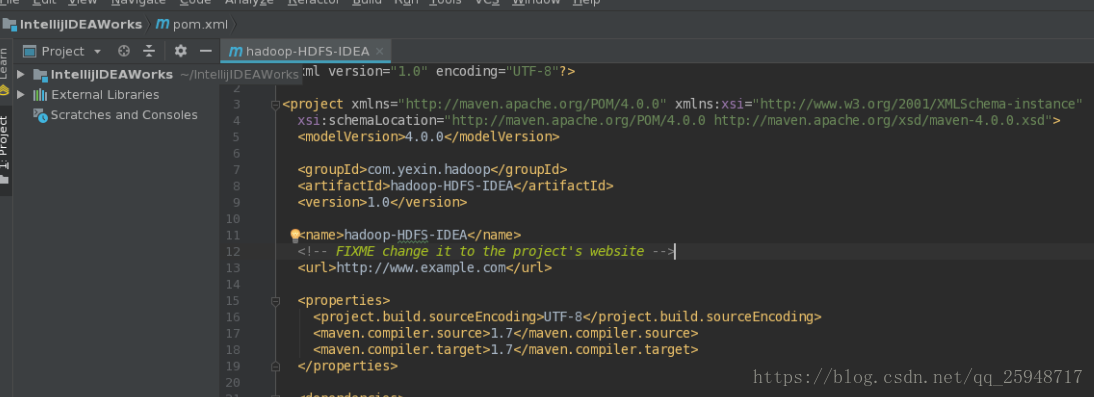

Once set up, the project looks like this:

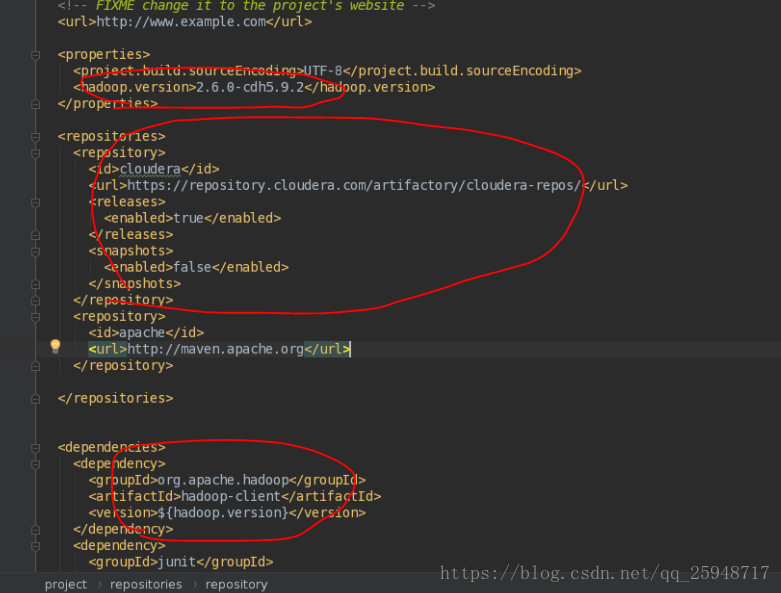

Configuration:

The lower-right corner shows that the dependencies are being downloaded. The first download takes a long time.

====================================================================================

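Development: every operation is written as a unit test. First delete the generated AppTest under test, then create a new package and a Java test class.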

Creating a directory:

```java
package com.yexin.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

import java.net.URI;

/**
 * @Company: Huazhong University of science and technology
 * @version: V1.0
 * @author: YEXIN
 * @software: IntelliJ IDEA
 * @file: HDFSApp
 * @time: 8/24/18 2:11 PM
 * @Desc: Hadoop HDFS Java API Operation
 **/
public class HDFSApp {

    public static final String HDFS_PATH = "hdfs://node40:9000";

    FileSystem fileSystem = null; // the main class for operating on HDFS files
    Configuration configuration = null;

    /**
     * Create an HDFS directory (mkdirs also creates missing parents, like mkdir -p)
     */
    @Test
    public void mkdir() throws Exception {
        fileSystem.mkdirs(new Path("/hdfsapi/test"));
    }

    // Prepare the environment before each JUnit test
    @Before
    public void setUp() throws Exception {
        System.out.println("HDFSApp.setUp");
        configuration = new Configuration();
        // The third argument is the user name used to access HDFS
        fileSystem = FileSystem.get(new URI(HDFS_PATH), configuration, "test");
    }

    // Release resources after each test
    @After
    public void tearDown() throws Exception {
        if (fileSystem != null) {
            fileSystem.close(); // close the connection instead of only dropping the reference
        }
        configuration = null;
        fileSystem = null;
        System.out.println("HDFSApp.tearDown");
    }
}
```

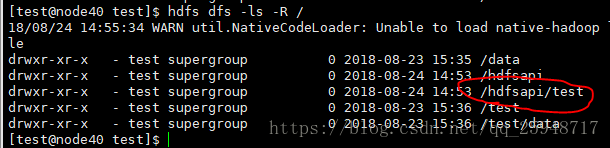

Select the mkdir() method, right-click it, and choose Run 'mkdir()'.

Checking from the console shows that the directory was created:

Checking in the web UI:

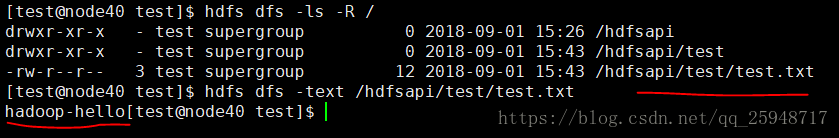
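The result can also be checked from Java instead of the console or the web UI. The sketch below is a hypothetical helper; `DirCheck` is not part of the original project. It assumes the same hadoop-client dependency as the Maven project. Its `main` uses `FileSystem.getLocal` so it runs without the cluster. Passing the `fileSystem` from `setUp()` would check `/hdfsapi/test` on node40 instead.

```java
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical helper class, not part of the tutorial's HDFSApp.
public class DirCheck {

    // mkdirs() works like `mkdir -p`: it creates missing parents and also returns
    // true when the directory already exists, so the result is confirmed explicitly.
    public static boolean ensureDirectory(FileSystem fs, Path dir) throws IOException {
        if (!fs.mkdirs(dir)) {
            return false;
        }
        return fs.exists(dir) && fs.getFileStatus(dir).isDirectory();
    }

    public static void main(String[] args) throws IOException {
        // Assumption: the local file system stands in for HDFS so no cluster is needed.
        FileSystem fs = FileSystem.getLocal(new Configuration());
        Path dir = new Path(System.getProperty("java.io.tmpdir"), "hdfsapi/test");
        System.out.println("directory ready: " + ensureDirectory(fs, dir));
    }
}
```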

Create a file and write content to it (run it the same way):

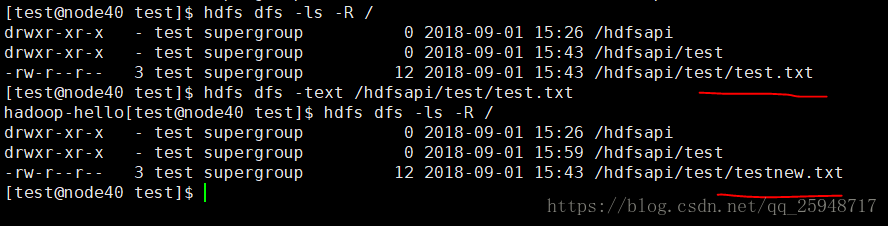
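`createFile()` uses `writeBytes()`, which keeps only the low 8 bits of each `char`, so it is safe only for ASCII text. Below is a minimal sketch of a UTF-8 variant that uses try-with-resources. The `WriteText` class is hypothetical, and in `main` the local file system again stands in for HDFS.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical helper class, not part of the tutorial's HDFSApp.
public class WriteText {

    // Encodes the text as UTF-8 before writing, so non-ASCII text survives.
    public static void writeUtf8(FileSystem fs, Path file, String text) throws IOException {
        // overwrite = true replaces an existing file; missing parent directories are created
        try (FSDataOutputStream out = fs.create(file, true)) {
            out.write(text.getBytes(StandardCharsets.UTF_8));
        } // try-with-resources calls close(), which flushes and finalizes the file
    }

    public static void main(String[] args) throws IOException {
        // Assumption: local file system instead of the cluster
        FileSystem fs = FileSystem.getLocal(new Configuration());
        Path file = new Path(System.getProperty("java.io.tmpdir"), "hdfsapi/test/test.txt");
        writeUtf8(fs, file, "hadoop-hello \u4f60\u597d"); // "hadoop-hello" plus two Chinese characters
        System.out.println("bytes written: " + fs.getFileStatus(file).getLen());
    }
}
```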

Checking from the terminal:
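Besides the terminal (for example `hdfs dfs -cat /hdfsapi/test/test.txt`), the file can be read back from Java, which is what `cat()` in the complete source does. The hypothetical `ReadText` helper below returns the content as a String, which is handy for assertions in a unit test. It is a sketch that assumes small files and the hadoop-client dependency.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

// Hypothetical helper class, not part of the tutorial's HDFSApp.
public class ReadText {

    // Reads the whole file into memory, so it is only suitable for small files.
    public static String readUtf8(FileSystem fs, Path file) throws IOException {
        try (FSDataInputStream in = fs.open(file);
             ByteArrayOutputStream buf = new ByteArrayOutputStream()) {
            // close = false: try-with-resources closes both streams instead
            IOUtils.copyBytes(in, buf, 4096, false);
            return new String(buf.toByteArray(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumption: local file system instead of the cluster
        FileSystem fs = FileSystem.getLocal(new Configuration());
        Path file = new Path(System.getProperty("java.io.tmpdir"), "hdfsapi/test/test.txt");
        try (FSDataOutputStream out = fs.create(file, true)) {
            out.writeBytes("hadoop-hello");
        }
        System.out.println(readUtf8(fs, file)); // prints hadoop-hello
    }
}
```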

Renaming a file:

Result:

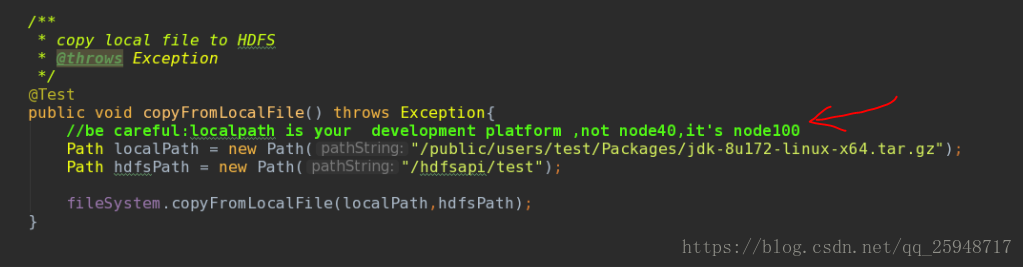

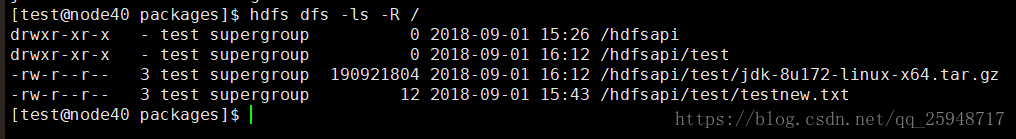
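`rename()` in the test ignores the return value of `FileSystem.rename`. On HDFS, a failed rename (missing source, existing destination file, missing destination parent) is typically reported by returning `false` rather than by throwing an exception. The hedged sketch below checks the obvious preconditions and turns `false` into an exception. `SafeRename` is a hypothetical name. Also note that this sketch rejects an existing destination directory, whereas HDFS itself would move the source into that directory.

```java
import java.io.FileNotFoundException;
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical helper class, not part of the tutorial's HDFSApp.
public class SafeRename {

    // Fails loudly instead of silently ignoring a false return value.
    public static void renameOrFail(FileSystem fs, Path src, Path dst) throws IOException {
        if (!fs.exists(src)) {
            throw new FileNotFoundException("source does not exist: " + src);
        }
        if (fs.exists(dst)) {
            throw new IOException("destination already exists: " + dst);
        }
        if (!fs.rename(src, dst)) {
            throw new IOException("rename returned false: " + src + " -> " + dst);
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumption: local file system instead of the cluster
        FileSystem fs = FileSystem.getLocal(new Configuration());
        Path dir = new Path(System.getProperty("java.io.tmpdir"), "hdfsapi/test");
        Path src = new Path(dir, "test.txt");
        Path dst = new Path(dir, "testnew.txt");
        fs.delete(dst, false);          // start clean on reruns
        fs.create(src, true).close();   // empty placeholder file
        renameOrFail(fs, src, dst);
        System.out.println("renamed: " + fs.exists(dst));
    }
}
```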

Uploading a file from the local file system to HDFS:

Result:

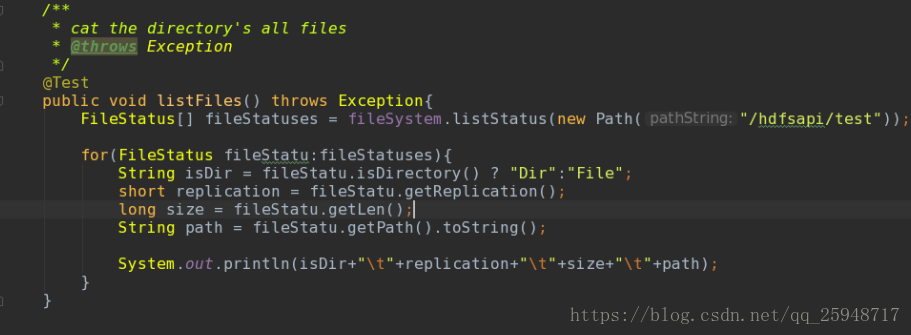

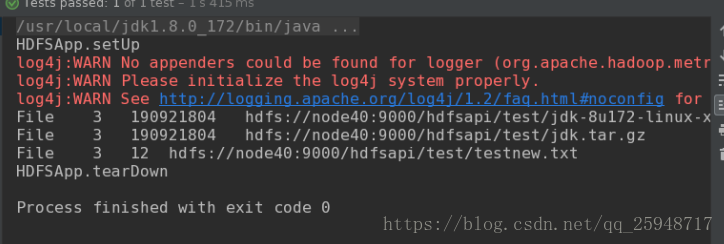

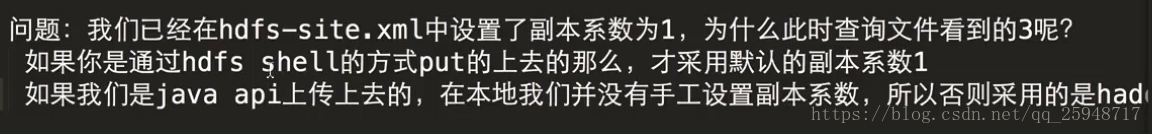
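`copyFromLocalFile(src, dst)` has an overload with explicit `delSrc` and `overwrite` flags. The progress upload can also be written so that both streams are always closed. The sketch below is hypothetical (`Upload` is not in the original code) and assumes hadoop-client on the classpath. `Progressable` has a single `progress()` method, so a lambda can replace the anonymous class.

```java
import java.io.BufferedInputStream;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;

import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

// Hypothetical helper class, not part of the tutorial's HDFSApp.
public class Upload {

    // copyFromLocalFile(delSrc, overwrite, src, dst): keep the local copy
    // (delSrc = false) and replace an existing target (overwrite = true).
    public static void upload(FileSystem fs, Path localFile, Path target) throws IOException {
        fs.copyFromLocalFile(false, true, localFile, target);
    }

    // Stream-based upload with a progress callback that prints a dot each time it is invoked.
    public static void uploadWithProgress(FileSystem fs, String localFile, Path target)
            throws IOException {
        try (InputStream in = new BufferedInputStream(new FileInputStream(localFile));
             FSDataOutputStream out = fs.create(target, () -> System.out.print("."))) {
            // close = false: try-with-resources closes both streams, even on failure
            IOUtils.copyBytes(in, out, 4096, false);
        }
    }
}
```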

Listing all files in a directory:

Result:

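Interpreting the result: each line shows the entry type (Dir or File), its replication factor, its size in bytes, and its full HDFS path. Directories report a replication factor of 0 and a size of 0.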
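`listStatus()` only returns the direct children of `/hdfsapi/test`. For a recursive listing, `FileSystem.listFiles(path, true)` walks all subdirectories but returns files only, never directories. Below is a minimal sketch. `ListRecursive` is a hypothetical helper, and its `main` lists a directory on the local file system rather than on node40.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;

// Hypothetical helper class, not part of the tutorial's HDFSApp.
public class ListRecursive {

    // Returns one "replication \t size \t path" line per file below root.
    public static List<String> listAllFiles(FileSystem fs, Path root) throws IOException {
        List<String> lines = new ArrayList<>();
        RemoteIterator<LocatedFileStatus> it = fs.listFiles(root, true); // true = recursive
        while (it.hasNext()) {
            LocatedFileStatus status = it.next();
            // same columns as listFiles() in the tutorial, minus the Dir/File flag
            lines.add(status.getReplication() + "\t" + status.getLen() + "\t" + status.getPath());
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        // Assumption: local file system instead of the cluster
        FileSystem fs = FileSystem.getLocal(new Configuration());
        Path root = new Path(System.getProperty("java.io.tmpdir"), "hdfsapi");
        fs.mkdirs(root); // listFiles() throws FileNotFoundException for a missing root
        for (String line : listAllFiles(fs, root)) {
            System.out.println(line);
        }
    }
}
```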

Complete source code:

```java
package com.yexin.hadoop.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.util.Progressable;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

import java.io.BufferedInputStream;
import java.io.File;
import java.io.FileInputStream;
import java.io.InputStream;
import java.net.URI;

/**
 * Company: Huazhong University of science and technology
 * version: V1.0
 * author: YEXIN
 * software: IntelliJ IDEA
 * file: HDFSApp
 * time: 8/24/18 2:11 PM
 * Desc: Hadoop HDFS Java API Operation
 **/
public class HDFSApp {

    public static final String HDFS_PATH = "hdfs://node40:9000";

    FileSystem fileSystem = null; // the main class for operating on HDFS files
    Configuration configuration = null;

    /** Create an HDFS directory */
    @Test
    public void mkdir() throws Exception {
        fileSystem.mkdirs(new Path("/hdfsapi/test"));
    }

    /** Create a file and write some content to it */
    @Test
    public void createFile() throws Exception {
        // fileSystem.create() returns an output stream that we write the contents to
        FSDataOutputStream output = fileSystem.create(new Path("/hdfsapi/test/test.txt"));
        output.writeBytes("hadoop-hello");
        // flush and close
        output.flush();
        output.close();
    }

    /** Print a file's contents */
    @Test
    public void cat() throws Exception {
        FSDataInputStream input = fileSystem.open(new Path("/hdfsapi/test/test.txt"));
        // copy the contents to the console
        IOUtils.copyBytes(input, System.out, 1024);
        input.close();
    }

    /** Rename a file */
    @Test
    public void rename() throws Exception {
        Path oldPath = new Path("/hdfsapi/test/test.txt");
        Path newPath = new Path("/hdfsapi/test/testnew.txt");
        fileSystem.rename(oldPath, newPath);
    }

    /** Copy a local file to HDFS */
    @Test
    public void copyFromLocalFile() throws Exception {
        // Note: localPath is on the development machine (node100), not on node40
        Path localPath = new Path("/public/users/test/Packages/jdk-8u172-linux-x64.tar.gz");
        Path hdfsPath = new Path("/hdfsapi/test");
        fileSystem.copyFromLocalFile(localPath, hdfsPath);
    }

    /** Copy a local file to HDFS while showing progress */
    @Test
    public void copyFromLocalFilewithProgress() throws Exception {
        // Note: the local file is on the development machine (node100), not on node40
        InputStream input = new BufferedInputStream(
                new FileInputStream(
                        new File("/public/users/test/Packages/jdk-8u172-linux-x64.tar.gz")));
        FSDataOutputStream output = fileSystem.create(new Path("/hdfsapi/test/jdk.tar.gz"),
                new Progressable() {
                    @Override
                    public void progress() {
                        System.out.println("==="); // show progress
                    }
                });
        // close = true: copyBytes closes both streams when it finishes
        IOUtils.copyBytes(input, output, 1024, true);
    }

    /** Download an HDFS file to the local file system */
    @Test
    public void copyToLocal() throws Exception {
        Path localPath = new Path("/public/users/test/Packages");
        Path hdfsPath = new Path("/hdfsapi/test/jdk.tar.gz");
        fileSystem.copyToLocalFile(hdfsPath, localPath);
    }

    /** List all entries in a directory */
    @Test
    public void listFiles() throws Exception {
        // listStatus() returns an array with one entry per direct child
        FileStatus[] fileStatuses = fileSystem.listStatus(new Path("/hdfsapi/test"));
        for (FileStatus fileStatus : fileStatuses) {
            String isDir = fileStatus.isDirectory() ? "Dir" : "File";
            short replication = fileStatus.getReplication();
            long size = fileStatus.getLen();
            String path = fileStatus.getPath().toString();
            System.out.println(isDir + "\t" + replication + "\t" + size + "\t" + path);
        }
    }

    /** Delete a file (true = also delete recursively if the path is a directory) */
    @Test
    public void delete() throws Exception {
        fileSystem.delete(new Path("/hdfsapi/test/jdk.tar.gz"), true);
    }

    // Prepare the environment before each JUnit test
    @Before
    public void setUp() throws Exception {
        System.out.println("HDFSApp.setUp");
        configuration = new Configuration();
        // The third argument is the user name used to access HDFS
        fileSystem = FileSystem.get(new URI(HDFS_PATH), configuration, "test");
    }

    // Release resources after each test
    @After
    public void tearDown() throws Exception {
        if (fileSystem != null) {
            fileSystem.close(); // close the connection instead of only dropping the reference
        }
        configuration = null;
        fileSystem = null;
        System.out.println("HDFSApp.tearDown");
    }
}
```
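The download and delete tests can be tightened in the same spirit. In the hypothetical `DownloadAndDelete` sketch below, `copyToLocalFile` is called with `useRawLocalFileSystem = true`, so no `.crc` checksum file is written next to the download. The delete is non-recursive, so a path that unexpectedly points at a directory is not wiped out. The sketch assumes hadoop-client on the classpath.

```java
import java.io.IOException;

import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

// Hypothetical helper class, not part of the tutorial's HDFSApp.
public class DownloadAndDelete {

    // copyToLocalFile(delSrc, src, dst, useRawLocalFileSystem): keep the remote
    // file and write the local copy without a checksum side file.
    public static void download(FileSystem fs, Path remote, Path localDir) throws IOException {
        fs.copyToLocalFile(false, remote, localDir, true);
    }

    // delete() returns false when the path does not exist. recursive = false means
    // a non-empty directory would raise an IOException; directories are refused up front.
    public static boolean deleteFile(FileSystem fs, Path file) throws IOException {
        if (fs.exists(file) && fs.getFileStatus(file).isDirectory()) {
            throw new IOException("refusing to delete a directory: " + file);
        }
        return fs.delete(file, false);
    }
}
```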