Writing a Custom Receiver Class for Spark Streaming

1. Defining the custom Receiver

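    import java.io.{BufferedReader, InputStreamReader}
    import java.net.Socket
    import java.nio.charset.StandardCharsets

    import org.apache.spark.api.java.StorageLevels
    // Spark 2.x and later; in Spark 1.x the trait is org.apache.spark.Logging
    import org.apache.spark.internal.Logging
    import org.apache.spark.streaming.receiver.Receiver

    class CustomReveicer(host: String, port: Int)
      extends Receiver[String](StorageLevels.MEMORY_AND_DISK_2) with Logging {

      override def onStart(): Unit = {
        // Receive data on a separate thread so that onStart() returns immediately
        new Thread("Custom Receive") {
          override def run(): Unit = {
            receive()
          }
        }.start()
      }

      override def onStop(): Unit = {
        // Nothing to do: the receiving thread exits on its own once isStopped() returns true
      }

      /**
       * Connects to host:port and stores every line it reads until the receiver is stopped
       */
      private def receive(): Unit = {
        var socket: Socket = null
        var reader: BufferedReader = null
        try {
          logInfo(s"Connecting to $host : $port")
          // Open a client socket connected to host:port
          socket = new Socket(host, port)
          logInfo(s"Connected to $host : $port")
          // Wrap the socket's input stream in a BufferedReader to read it line by line
          reader = new BufferedReader(new InputStreamReader(socket.getInputStream, StandardCharsets.UTF_8))
          var line = reader.readLine()
          // Keep reading until the receiver is stopped or the stream ends
          while (!isStopped() && line != null) {
            // Hand the line to Spark, which groups stored records into blocks
            store(line)
            line = reader.readLine()
          }
          // Close the streams
          reader.close()
          socket.close()
          logInfo("Stopped receiving")
          // The server closed the connection: restart so the receiver reconnects
          restart("Trying to connect again")
        } catch {
          case e: java.net.ConnectException => restart(s"Error connecting to $host : $port", e)
          case t: Throwable => restart("Error receiving data", t)
        }
      }
    }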
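The read loop inside `receive()` can be tried out without a Spark cluster. The following is only a minimal sketch: `ReadLoopSketch` and `forwardLines` are hypothetical names that are not part of the original code, and the receiver's `isStopped()` and `store()` are passed in as plain functions so that the loop can run against an in-memory reader.

```scala
import java.io.{BufferedReader, StringReader}
import scala.collection.mutable.ArrayBuffer

object ReadLoopSketch {
  // Hypothetical helper: the same loop shape as receive(), with the
  // receiver's isStopped() and store() supplied as function arguments.
  // Returns the number of lines that were forwarded to `store`.
  def forwardLines(reader: BufferedReader,
                   isStopped: () => Boolean,
                   store: String => Unit): Int = {
    var count = 0
    var line = reader.readLine()
    // Stop when asked to, or when the stream ends (readLine returns null)
    while (!isStopped() && line != null) {
      store(line)
      count += 1
      line = reader.readLine()
    }
    count
  }

  def main(args: Array[String]): Unit = {
    val stored = ArrayBuffer.empty[String]
    // An in-memory reader stands in for the socket's input stream
    val reader = new BufferedReader(new StringReader("hello spark\nhello streaming\n"))
    val n = forwardLines(reader, () => false, (l: String) => { stored += l; () })
    println(s"stored $n lines: ${stored.mkString(" | ")}")
    // prints: stored 2 lines: hello spark | hello streaming
  }
}
```

Because the loop only checks `isStopped()` between lines, a receiver written this way notices a stop request after the next line arrives or the stream closes.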

2. Receiving data with the custom Receiver

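    import org.apache.spark.SparkConf
    import org.apache.spark.streaming.{Seconds, StreamingContext}

    object CustomReveicer {
      def main(args: Array[String]): Unit = {
        if (args.length != 2) {
          System.err.println("Usage <host> <port>")
          System.exit(1)
        }
        // Set the Spark Streaming log level to WARN
        StreamingLogger.setLoggerLevel()
        // Configure the application; local[2] leaves one thread for the receiver and one for processing
        val sparkConf = new SparkConf().setAppName("CustomReveicer").setMaster("local[2]")
        // Create the StreamingContext with a 2-second batch interval
        val ssc = new StreamingContext(sparkConf, Seconds(2))
        // Create an input stream backed by the custom receiver
        val line = ssc.receiverStream(new CustomReveicer(host = args(0), port = args(1).toInt))
        // Process the data: split lines into words and count each word per batch
        val word = line.flatMap(_.split(" "))
        val wordCount = word.map((_, 1))
        val wordCounts = wordCount.reduceByKey(_ + _)
        // Print the result of each batch
        wordCounts.print()
        // Start the streaming computation and wait for it to terminate
        ssc.start()
        ssc.awaitTermination()
      }
    }

3. StreamingLogger: a helper that sets the logger level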

object StreamingLogger extends Logging {

def setLoggerLevel(): Unit = {

val elements = Logger.getRootLogger.getAllAppenders.hasMoreElements

if (!elements) {

logInfo("set logger level warn")

Logger.getRootLogger.setLevel(Level.WARN)

}

}

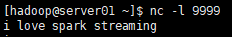

}4.启动nc命令发送数据,并执行Spark Streaming程序

4.1 Start nc

nc -l 9999

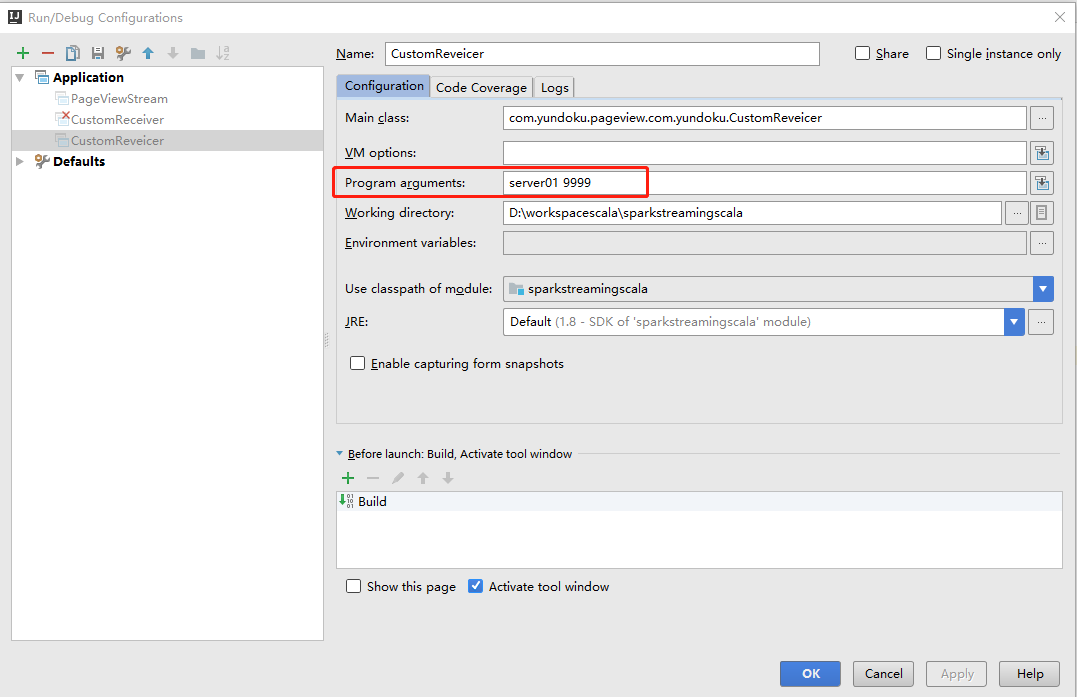
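If nc is not available, a tiny line server can play the same role. The following is only a sketch under assumptions: `LineServerSketch` and `serveOnce` are hypothetical names, the port 9999 simply mirrors the nc command above, and the server handles a single client before exiting.

```scala
import java.io.{OutputStreamWriter, PrintWriter}
import java.net.ServerSocket
import java.nio.charset.StandardCharsets

object LineServerSketch {
  // Accepts one client on `server`, sends each line, then closes the connection.
  def serveOnce(server: ServerSocket, lines: Seq[String]): Unit = {
    val client = server.accept()
    try {
      // autoFlush = true: every println is pushed to the client immediately
      val out = new PrintWriter(
        new OutputStreamWriter(client.getOutputStream, StandardCharsets.UTF_8), true)
      lines.foreach(l => out.println(l))
    } finally {
      client.close()
    }
  }

  def main(args: Array[String]): Unit = {
    // Assumed port, matching `nc -l 9999`
    val server = new ServerSocket(9999)
    try serveOnce(server, Seq("hello spark", "hello streaming"))
    finally server.close()
  }
}
```

Once this server closes the connection, `readLine()` in the receiver returns null and the receiver calls `restart`, so it keeps trying to reconnect until a server is listening again.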

4.2 Add the program arguments and run the Spark Streaming program

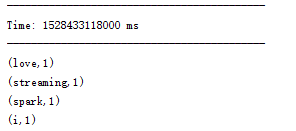

4.3 Output
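To get a feel for what a single batch produces, the `flatMap` / `map` / `reduceByKey` steps can be mimicked on an ordinary Scala collection. This is only an illustration, not Spark code: `WordCountSketch` and `countWords` are hypothetical names, the input lines are made up, and `groupBy` plus a sum stands in for `reduceByKey`.

```scala
object WordCountSketch {
  // Plain-collection stand-in for flatMap -> map -> reduceByKey on one batch
  def countWords(batch: Seq[String]): Map[String, Int] =
    batch
      .flatMap(_.split(" "))   // split each line into words
      .map((_, 1))             // pair each word with a count of 1
      .groupBy(_._1)           // group the pairs by word
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) } // sum the counts per word

  def main(args: Array[String]): Unit = {
    // Made-up lines, as if they had been typed into nc within one batch interval
    val counts = countWords(Seq("hello spark", "hello streaming"))
    counts.toSeq.sortBy(_._1).foreach(println)
    // prints:
    // (hello,2)
    // (spark,1)
    // (streaming,1)
  }
}
```

In the real program, `wordCounts.print()` writes the same kind of `(word,count)` pairs for each 2-second batch, preceded by a header line showing the batch time, and the order of the pairs is not guaranteed.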