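word2vec comes in two flavors: the CBOW model and the Skip-Gram model.

CBOW predicts the center word from its surrounding context. Skip-Gram does the exact opposite and predicts the context words from the center word.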
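To make the difference concrete, here is a minimal sketch of the training pairs each model would see for one sentence. The function names skipgram_pairs and cbow_pairs are made up for this illustration and are not part of the demo.

```python
def skipgram_pairs(tokens, window):
    """(center, context) pairs: the center word is the input, each context word a label."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_pairs(tokens, window):
    """(context list, center) pairs: the whole context is the input, the center word the label."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        pairs.append((context, center))
    return pairs


tokens = 'i love tensorflow very much'.split()
sg = skipgram_pairs(tokens, window=1)
cb = cbow_pairs(tokens, window=1)
print(sg[:4])
print(cb[2])
```

With a window of 1, Skip-Gram turns "tensorflow" into two separate examples (one per neighbor), while CBOW produces a single example whose input is both neighbors.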

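This article first walks through the Skip-Gram model, based on a demo provided officially by TensorFlow. The second major part covers a lightly modified CBOW model, mainly following: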

https://www.cnblogs.com/pinard/p/7160330.html

The two parts are separated by a line of ###########################.

All right, let's get started!

###################################################################################################

The official TensorFlow demo:

https://github.com/tensorflow/tensorflow/tree/master/tensorflow/examples/tutorials/word2vec

(I) First, import the packages. Nothing much to say here.

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import collections

import math

import os

import sys

import argparse

import random

from tempfile import gettempdir

import zipfile

import numpy as np

from six.moves import urllib

from six.moves import xrange # pylint: disable=redefined-builtin

import tensorflow as tf

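from tensorflow.contrib.tensorboard.plugins import projector

(II) Next, get the current path and create the log directory (used later for TensorBoard visualization). By default the log directory sits under the current directory: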

current_path = os.path.dirname(os.path.realpath(sys.argv[0]))

parser = argparse.ArgumentParser()

parser.add_argument(

'--log_dir',

type=str,

default=os.path.join(current_path, 'log'),

help='The log directory for TensorBoard summaries.')

FLAGS, unparsed = parser.parse_known_args()

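# Create the directory for TensorBoard variables if there is not.
if not os.path.exists(FLAGS.log_dir):
    os.makedirs(FLAGS.log_dir)

sys.argv is the bridge for passing arguments into the program from outside. sys.argv[0] is the first element of that list, namely the path of the script being run.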

For more on its usage, see: https://www.cnblogs.com/aland-1415/p/6613449.html

os.path.realpath returns the absolute path of the script, with any symbolic links resolved.

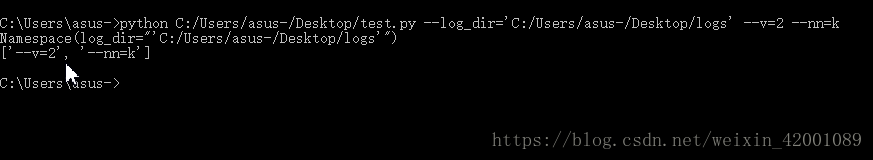

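parser.parse_known_args() parses the command line without failing on arguments it does not recognize. It returns two values: the first is a namespace holding the arguments that were defined, the second is a list of the leftover arguments that were not defined.

As a concrete example, write a test.py: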

import argparse

import os

import sys

current_path = os.path.dirname(os.path.realpath(sys.argv[0]))

parser = argparse.ArgumentParser()

parser.add_argument(

'--log_dir',

type=str,

default=os.path.join(current_path, 'log'),

help='The log directory for TensorBoard summaries.')

FLAGS, unparsed = parser.parse_known_args()

print(FLAGS)

print(unparsed)
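To see the two return values without touching the real command line, the sketch below passes an explicit argument list to parse_known_args. The --epochs flag and the /tmp/w2v_log path are made up for illustration; only --log_dir is defined on the parser.

```python
import argparse

parser = argparse.ArgumentParser()
parser.add_argument(
    '--log_dir',
    type=str,
    default='log',
    help='The log directory for TensorBoard summaries.')

# Pass an explicit list instead of letting argparse read sys.argv[1:].
FLAGS, unparsed = parser.parse_known_args(
    ['--log_dir', '/tmp/w2v_log', '--epochs', '5'])

print(FLAGS.log_dir)  # the defined argument lands in the namespace
print(unparsed)       # everything the parser did not recognize
```

Here FLAGS.log_dir is '/tmp/w2v_log' and unparsed is ['--epochs', '5']. With parse_args instead, the unknown flag would make the script exit with an error.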

(III) Next, download the dataset (slightly modified here):

# Base URL of the text8 corpus, as used in the official demo.
url = 'http://mattmahoney.net/dc/'

def maybe_download(filename, expected_bytes):
    """Download a file if not present, and make sure it's the right size."""
    if not os.path.exists(filename):
        filename, _ = urllib.request.urlretrieve(url + filename, filename)
    # Get the file's attributes.
    statinfo = os.stat(filename)
    # Check that the file size is correct.
    if statinfo.st_size == expected_bytes:
        print('Found and verified', filename)
    else:
        print(statinfo.st_size)
        raise Exception(
            'Failed to verify ' + filename + '. Can you get to it with a browser?')
    return filename

filename = maybe_download('text8.zip', 31344016)

Once the download finishes, a zip archive named text8.zip appears in the current folder.

(IV) Build the word list

# Read the data into a list of strings.
def read_data(filename):
    """Extract the first file enclosed in a zip file as a list of words."""
    with zipfile.ZipFile(filename) as f:
        data = tf.compat.as_str(f.read(f.namelist()[0])).split()
    return data

vocabulary = read_data(filename)
print('Data size', len(vocabulary))

f.namelist()[0] is the first file in the archive (there is only one file in it anyway). Its contents are split on whitespace, so vocabulary ends up as the list of all words in the corpus, in order. The last line prints how many words there are.

(V) Build a dictionary of 50000 words; words not in the dictionary are represented as UNK

vocabulary_size = 50000

def build_dataset(words, n_words):
    """Process raw inputs into a dataset."""
    count = [['UNK', -1]]
    count.extend(collections.Counter(words).most_common(n_words - 1))
    dictionary = dict()
    for word, _ in count:
        dictionary[word] = len(dictionary)
    data = list()
    unk_count = 0
    for word in words:
        index = dictionary.get(word, 0)
        if index == 0:  # dictionary['UNK']
            unk_count += 1
        data.append(index)
    count[0][1] = unk_count
    reversed_dictionary = dict(zip(dictionary.values(), dictionary.keys()))
    return data, count, dictionary, reversed_dictionary

data, count, dictionary, reverse_dictionary = build_dataset(
    vocabulary, vocabulary_size)
del vocabulary  # Hint to reduce memory.
print('Most common words (+UNK)', count[:5])
print('Sample data', data[:10], [reverse_dictionary[i] for i in data[:10]])

The line below counts the frequency of every word and keeps the most frequent ones as dictionary entries. Because it takes n_words - 1 words and UNK occupies one slot, the dictionary holds the 49999 most frequent words plus UNK, 50000 entries in total. extend appends the elements of one list to another.

count.extend(collections.Counter(words).most_common(n_words - 1))

data is the dataset with every word replaced by its ID. Words not in the dictionary get the ID of UNK (0).

Like this:

i love tensorflow very much .........

2 23 UNK 3 45 .........

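count records the frequency of each word, for example: [['UNK', -1], ('a', 200), ('i', 150), ...]. The -1 is only a placeholder; it is overwritten with the actual number of unknown words at the end of build_dataset.

dictionary is a dict mapping each word to its ID, i.e. key: word, value: ID (the smaller the ID, the higher the frequency, except that the first entry is always UNK).

reversed_dictionary is a dict mapping each ID back to its word, i.e. key: ID, value: word (again, the smaller the ID, the higher the frequency, except that the first entry is always UNK).

The first entry is always UNK because count starts out as [['UNK', -1]] and extend only appends after it. What varies is the appended list, so UNK always receives ID 0.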
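The four return values are easier to picture on a tiny corpus. The sketch below is a condensed rewrite of build_dataset (same logic, shorter form) run on a made-up nine-word sentence with n_words=3, so only the two most frequent words get their own IDs.

```python
import collections


def build_dataset(words, n_words):
    """Condensed version of the demo's build_dataset, for illustration only."""
    count = [['UNK', -1]]
    count.extend(collections.Counter(words).most_common(n_words - 1))
    # IDs follow the order of count, so UNK is 0 and frequent words get small IDs.
    dictionary = {word: idx for idx, (word, _) in enumerate(count)}
    data = [dictionary.get(word, 0) for word in words]
    count[0][1] = data.count(0)  # number of words that fell back to UNK
    reversed_dictionary = dict(zip(dictionary.values(), dictionary.keys()))
    return data, count, dictionary, reversed_dictionary


words = 'the cat sat on the mat the cat ran'.split()
data, count, dictionary, reversed_dictionary = build_dataset(words, n_words=3)

print(dictionary)           # {'UNK': 0, 'the': 1, 'cat': 2}
print(data)                 # [1, 2, 0, 0, 1, 0, 1, 2, 0]
print(count)                # [['UNK', 4], ('the', 3), ('cat', 2)]
print(reversed_dictionary)  # {0: 'UNK', 1: 'the', 2: 'cat'}
```

"sat", "on", "mat" and "ran" each occur once and do not make the cut, so they all map to 0 and the UNK count becomes 4.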

(VI) Generate the batches and their labels

data_index = 0

def generate_batch(batch_size, num_skips, skip_window):
    global data_index
    assert batch_size % num_skips == 0
    assert num_skips <= 2 * skip_window
    batch = np.ndarray(shape=(batch_size), dtype=np.int32)
    labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)
    span = 2 * skip_window + 1  # [ skip_window target skip_window ]
    buffer = collections.deque(maxlen=span)  # pylint: disable=redefined-builtin
    if data_index + span > len(data):
        data_index = 0
    buffer.extend(data[data_index:data_index + span])
    data_index += span
    for i in range(batch_size // num_skips):
        context_words = [w for w in range(span) if w != skip_window]
        words_to_use = random.sample(context_words, num_skips)
        for j, context_word in enumerate(words_to_use):
            batch[i * num_skips + j] = buffer[skip_window]
            labels[i * num_skips + j, 0] = buffer[context_word]
        if data_index == len(data):
            buffer.extend(data[0:span])
            data_index = span
        else:
            buffer.append(data[data_index])
            data_index += 1
    # Backtrack a little bit to avoid skipping words in the end of a batch
    data_index = (data_index + len(data) - span) % len(data)
    return batch, labels

batch, labels = generate_batch(batch_size=8, num_skips=2, skip_window=1)
for i in range(8):
    print(batch[i], reverse_dictionary[batch[i]], '->', labels[i, 0],
          reverse_dictionary[labels[i, 0]])

batch_size: the batch size.

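num_skips: how many times one word is reused as the input. For example, with num_skips=2 and the sentence: i love tensorflow very much ..........

when tensorflow is picked as the target word, it is used twice when producing labels:

tensorflow ---> love    tensorflow ---> very

skip_window: how many context words to consider on each side. With skip_window=1, one word on the left and one on the right are considered.

With skip_window=2, two words on the left and two on the right are considered.

span: during batching you can picture a fixed-size box (commonly called a sliding window) moving along the data. The size of this box is span, and as you can see span = 2 * skip_window + 1.

buffer = collections.deque(maxlen=span): allocates a buffer (the fixed-size window, 3 here), so the queue holds at most span words at any time. Appending a new word pushes the oldest one out.

So the process goes like this. Take batch_size=6, num_skips=2, skip_window=1, so batch_size // num_skips = 3 and the loop runs 3 times. The data is: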

( I am looking for the missing glass-shoes who has picked it up .............)

2 23 56 3 45 84 123 45 23 12 1 14 ...............

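i=0: 2, 23, 56 are loaded into buffer first. context_words = [w for w in range(span) if w != skip_window] picks the positions in the window other than the target word, i.e. the context. Then batch[i * num_skips + j] = buffer[skip_window] takes the middle of the window as the target word (skip_window=1), so batch gets 23, and labels[i * num_skips + j, 0] = buffer[context_word] takes its context as labels, i.e. 2 and 56.

So at this point batch=[23,23] labels=[2,56] (it could also be [56,2], because random.sample may pick the right side first). After the initial load, data_index=3, the position of the word for.

i=1: data[data_index] enters the queue, so buffer is 23,56,3 and the result is batch=[23,23,56,56] labels=[2,56,23,3] (the order within a pair may again be swapped).

data_index is now 4, the word the.

i=2: data[data_index] enters the queue, so buffer is 56,3,45 and the result is batch=[23,23,56,56,3,3] labels=[2,56,23,3,56,45] (the order within a pair may again be swapped). data_index is now 5, the word missing.

The loop ends here, having produced one batch of size 6 as required:

batch=[23,23,56,56,3,3] labels=[2,56,23,3,56,45]

Note that the last iteration still appends data[5] to the buffer and advances data_index to 6. Then data_index = (data_index + len(data) - span) % len(data) backtracks by span=3 and lands on 3, the word for.

Because of global data_index, this position persists across calls. When the next batch is requested, buffer is reloaded starting at for (for, the, missing), so its first target word is the, right after the last target word of this batch. In other words, batch and labels continue from where the previous batch left off.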
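The walkthrough can be checked by running the same logic on the twelve toy IDs. This is a sketch under two simplifications that are not in the demo: plain Python lists replace the NumPy arrays, and index_after_first is a helper variable added only to record data_index between the two calls.

```python
import collections
import random

# IDs of: I am looking for the missing glass-shoes who has picked it up
data = [2, 23, 56, 3, 45, 84, 123, 45, 23, 12, 1, 14]
data_index = 0


def generate_batch(batch_size, num_skips, skip_window):
    """Same control flow as the demo's generate_batch, returning plain lists."""
    global data_index
    assert batch_size % num_skips == 0
    assert num_skips <= 2 * skip_window
    batch, labels = [], []
    span = 2 * skip_window + 1
    buffer = collections.deque(maxlen=span)
    if data_index + span > len(data):
        data_index = 0
    buffer.extend(data[data_index:data_index + span])
    data_index += span
    for _ in range(batch_size // num_skips):
        context_words = [w for w in range(span) if w != skip_window]
        for context_word in random.sample(context_words, num_skips):
            batch.append(buffer[skip_window])
            labels.append(buffer[context_word])
        if data_index == len(data):
            buffer.extend(data[0:span])
            data_index = span
        else:
            buffer.append(data[data_index])
            data_index += 1
    # Backtrack so the next call starts right after the last target word.
    data_index = (data_index + len(data) - span) % len(data)
    return batch, labels


batch, labels = generate_batch(batch_size=6, num_skips=2, skip_window=1)
index_after_first = data_index
next_batch, next_labels = generate_batch(batch_size=6, num_skips=2, skip_window=1)

print(batch)              # [23, 23, 56, 56, 3, 3]
print(labels)             # e.g. [2, 56, 23, 3, 56, 45]; order within each pair is random
print(index_after_first)  # 3, the position of "for"
print(next_batch)         # [45, 45, 84, 84, 123, 123]
```

The second batch starts with target word 45 ("the"), the word right after the last target of the first batch, which is exactly what the backtracking line is for.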

(VII) Define the hyperparameters:

batch_size = 128

embedding_size = 128 # Dimension of the embedding vector.

skip_window = 1 # How many words to consider left and right.

num_skips = 2 # How many times to reuse an input to generate a label.

num_sampled = 64 # Number of negative examples to sample.

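graph = tf.Graph()

This mainly sets the values of the parameters discussed above. Note that the graph in the next step also reads valid_examples (the word IDs used for the similarity check), which the official demo defines at this point; it is not shown in the listing here.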

(VIII) The neural network graph (the model):

with graph.as_default():

    # Input data.
    with tf.name_scope('inputs'):
        train_inputs = tf.placeholder(tf.int32, shape=[batch_size])
        train_labels = tf.placeholder(tf.int32, shape=[batch_size, 1])
        valid_dataset = tf.constant(valid_examples, dtype=tf.int32)

    # Ops and variables pinned to the CPU because of missing GPU implementation
    with tf.device('/cpu:0'):
        # Look up embeddings for inputs.
        with tf.name_scope('embeddings'):
            embeddings = tf.Variable(
                tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))
            embed = tf.nn.embedding_lookup(embeddings, train_inputs)

        # Construct the variables for the NCE loss
        with tf.name_scope('weights'):
            nce_weights = tf.Variable(
                tf.truncated_normal(
                    [vocabulary_size, embedding_size],
                    stddev=1.0 / math.sqrt(embedding_size)))
        with tf.name_scope('biases'):
            nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

    # Compute the average NCE loss for the batch.
    # tf.nce_loss automatically draws a new sample of the negative labels each
    # time we evaluate the loss.
    # Explanation of the meaning of NCE loss:
    #   http://mccormickml.com/2016/04/19/word2vec-tutorial-the-skip-gram-model/
    with tf.name_scope('loss'):
        loss = tf.reduce_mean(
            tf.nn.nce_loss(
                weights=nce_weights,
                biases=nce_biases,
                labels=train_labels,
                inputs=embed,
                num_sampled=num_sampled,
                num_classes=vocabulary_size))

    # Add the loss value as a scalar to summary.
    tf.summary.scalar('loss', loss)

    # Construct the SGD optimizer using a learning rate of 1.0.
    with tf.name_scope('optimizer'):
        optimizer = tf.train.GradientDescentOptimizer(1.0).minimize(loss)

    # Compute the cosine similarity between minibatch examples and all embeddings.
    norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1, keepdims=True))
    normalized_embeddings = embeddings / norm
    valid_embeddings = tf.nn.embedding_lookup(normalized_embeddings,
                                              valid_dataset)
    similarity = tf.matmul(
        valid_embeddings, normalized_embeddings, transpose_b=True)

    # Merge all summaries.
    merged = tf.summary.merge_all()

    # Add variable initializer.
    init = tf.global_variables_initializer()

    # Create a saver.
    saver = tf.train.Saver()

This can be viewed in two parts: one trains the word vectors of the Skip-Gram model, the other computes cosine similarity. We discuss them separately below.

First, look at the API description of tf.nn.embedding_lookup:

https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/ops/embedding_ops.py

def embedding_lookup(

params,

ids,

partition_strategy="mod",

name=None,

validate_indices=True, # pylint: disable=unused-argument

max_norm=None):

"""Looks up `ids` in a list of embedding tensors.

This function is used to perform parallel lookups on the list of

tensors in `params`. It is a generalization of

@{tf.gather}, where `params` is

interpreted as a partitioning of a large embedding tensor. `params` may be

a `PartitionedVariable` as returned by using `tf.get_variable()` with a

partitioner.

If `len(params) > 1`, each element `id` of `ids` is partitioned between

the elements of `params` according to the `partition_strategy`.

In all strategies, if the id space does not evenly divide the number of

partitions, each of the first `(max_id + 1) % len(params)` partitions will

be assigned one more id.

If `partition_strategy` is `"mod"`, we assign each id to partition

`p = id % len(params)`. For instance,

13 ids are split across 5 partitions as:

`[[0, 5, 10], [1, 6, 11], [2, 7, 12], [3, 8], [4, 9]]`

If `partition_strategy` is `"div"`, we assign ids to partitions in a

contiguous manner. In this case, 13 ids are split across 5 partitions as:

`[[0, 1, 2], [3, 4, 5], [6, 7, 8], [9, 10], [11, 12]]`

The results of the lookup are concatenated into a dense

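tensor. The returned tensor has shape `shape(ids) + shape(params)[1:]`.

Note the sentence: The results of the lookup are concatenated into a dense tensor. The returned tensor has shape `shape(ids) + shape(params)[1:]`. That is, if params is 100*28 and ids is [2,56,3], the result is 3*28, corresponding to the 3rd, 57th and 4th rows of params (the IDs are 0-based).

Reading further down the source, you will find that it mainly calls the _embedding_lookup_and_transform function.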
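For the single-partition case, the lookup boils down to selecting rows. The sketch below imitates it with nested Python lists; the local embedding_lookup function is a stand-in written for this illustration, not the TensorFlow implementation, and it ignores partitioning and max_norm.

```python
def embedding_lookup(params, ids):
    """Pick the rows of params listed in ids (single partition, no max_norm)."""
    return [params[i] for i in ids]


# params has shape 100 x 28; row r is filled with the value r so rows are easy to identify.
params = [[float(r)] * 28 for r in range(100)]
ids = [2, 56, 3]

embed = embedding_lookup(params, ids)
print(len(embed), len(embed[0]))  # 3 28, i.e. shape(ids) + shape(params)[1:]
print([row[0] for row in embed])  # [2.0, 56.0, 3.0]
```

In the demo, params is the 50000 x 128 embeddings matrix and ids is train_inputs with shape [batch_size], so embed has shape [batch_size, 128].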

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Now let's take a close look at the source of tf.nn.nce_loss (the core of this demo):

Source: https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/ops/nn_impl.py

def nce_loss(weights,

biases,

labels,

inputs,

num_sampled,

num_classes,

num_true=1,

sampled_values=None,

remove_accidental_hits=False,

partition_strategy="mod",

name="nce_loss"):

"""Computes and returns the noise-contrastive estimation training loss.

See [Noise-contrastive estimation: A new estimation principle for

unnormalized statistical

models](http://www.jmlr.org/proceedings/papers/v9/gutmann10a/gutmann10a.pdf).

Also see our [Candidate Sampling Algorithms

Reference](https://www.tensorflow.org/extras/candidate_sampling.pdf)

A common use case is to use this method for training, and calculate the full

sigmoid loss for evaluation or inference. In this case, you must set

`partition_strategy="div"` for the two losses to be consistent, as in the

following example:

```python

if mode == "train":

loss = tf.nn.nce_loss(

weights=weights,

biases=biases,

labels=labels,

inputs=inputs,

...,

partition_strategy="div")

elif mode == "eval":

logits = tf.matmul(inputs, tf.transpose(weights))

logits = tf.nn.bias_add(logits, biases)

labels_one_hot = tf.one_hot(labels, n_classes)

loss = tf.nn.sigmoid_cross_entropy_with_logits(

labels=labels_one_hot,

logits=logits)

loss = tf.reduce_sum(loss, axis=1)

```

Note: By default this uses a log-uniform (Zipfian) distribution for sampling,

so your labels must be sorted in order of decreasing frequency to achieve

good results. For more details, see

@{tf.nn.log_uniform_candidate_sampler}.

Note: In the case where `num_true` > 1, we assign to each target class

the target probability 1 / `num_true` so that the target probabilities

sum to 1 per-example.

Note: It would be useful to allow a variable number of target classes per

example. We hope to provide this functionality in a future release.

For now, if you have a variable number of target classes, you can pad them

out to a constant number by either repeating them or by padding

with an otherwise unused class.

Args:

weights: A `Tensor` of shape `[num_classes, dim]`, or a list of `Tensor`

objects whose concatenation along dimension 0 has shape

[num_classes, dim]. The (possibly-partitioned) class embeddings.

biases: A `Tensor` of shape `[num_classes]`. The class biases.

labels: A `Tensor` of type `int64` and shape `[batch_size,

num_true]`. The target classes.

inputs: A `Tensor` of shape `[batch_size, dim]`. The forward

activations of the input network.

num_sampled: An `int`. The number of classes to randomly sample per batch.

num_classes: An `int`. The number of possible classes.

num_true: An `int`. The number of target classes per training example.

sampled_values: a tuple of (`sampled_candidates`, `true_expected_count`,

`sampled_expected_count`) returned by a `*_candidate_sampler` function.

(if None, we default to `log_uniform_candidate_sampler`)

remove_accidental_hits: A `bool`. Whether to remove "accidental hits"

where a sampled class equals one of the target classes. If set to

`True`, this is a "Sampled Logistic" loss instead of NCE, and we are

learning to generate log-odds instead of log probabilities. See

our [Candidate Sampling Algorithms Reference]

(https://www.tensorflow.org/extras/candidate_sampling.pdf).

Default is False.

partition_strategy: A string specifying the partitioning strategy, relevant

if `len(weights) > 1`. Currently `"div"` and `"mod"` are supported.

Default is `"mod"`. See `tf.nn.embedding_lookup` for more details.

name: A name for the operation (optional).

Returns:

A `batch_size` 1-D tensor of per-example NCE losses.

"""

logits, labels = _compute_sampled_logits(

weights=weights,

biases=biases,

labels=labels,

inputs=inputs,

num_sampled=num_sampled,

num_classes=num_classes,

num_true=num_true,

sampled_values=sampled_values,

subtract_log_q=True,

remove_accidental_hits=remove_accidental_hits,

partition_strategy=partition_strategy,

name=name)

sampled_losses = sigmoid_cross_entropy_with_logits(

labels=labels, logits=logits, name="sampled_losses")

# sampled_losses is batch_size x {true_loss, sampled_losses...}

# We sum out true and sampled losses.

return _sum_rows(sampled_losses)

First, look at the API:

def nce_loss(weights,

biases,

labels,

inputs,

num_sampled,

num_classes,

num_true=1,

sampled_values=None,

remove_accidental_hits=False,

partition_strategy="mod",

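name="nce_loss"):

Suppose the class weight matrix is M*N (in this demo M=50000, the number of words in the dictionary, and N=128, the number of word2vec features).

Then:

weights: M*N

biases: M (one bias per class, shape [num_classes], not N)

labels: batch_size * num_true (num_true is the number of positive samples per example, 1 in this demo)

inputs: batch_size * N

num_sampled: the number of negative samples to draw

num_classes: M

sampled_values: lets you plug in the output of a different sampler, i.e. a tuple (`sampled_candidates`, `true_expected_count`, `sampled_expected_count`)

If it is None, log_uniform_candidate_sampler is used.

remove_accidental_hits: whether to discard a negative sample that accidentally turns out to be the target.

partition_strategy: how IDs are assigned to shards when weights is split into several partitions.

Now the return value:

the NCE loss of every example within a batch of size batch_size.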

Now the implementation, which consists of three parts:

_compute_sampled_logits: sampling

sigmoid_cross_entropy_with_logits: logistic regression

_sum_rows: summation

(1) First, _compute_sampled_logits

def _compute_sampled_logits(weights,

biases,

labels,

inputs,

num_sampled,

num_classes,

num_true=1,

sampled_values=None,

subtract_log_q=True,

remove_accidental_hits=False,

partition_strategy="mod",

name=None,

seed=None):

"""Helper function for nce_loss and sampled_softmax_loss functions.

Computes sampled output training logits and labels suitable for implementing

e.g. noise-contrastive estimation (see nce_loss) or sampled softmax (see

sampled_softmax_loss).

Note: In the case where num_true > 1, we assign to each target class

the target probability 1 / num_true so that the target probabilities

sum to 1 per-example.

Args:

weights: A `Tensor` of shape `[num_classes, dim]`, or a list of `Tensor`

objects whose concatenation along dimension 0 has shape

`[num_classes, dim]`. The (possibly-partitioned) class embeddings.

biases: A `Tensor` of shape `[num_classes]`. The (possibly-partitioned)

class biases.

labels: A `Tensor` of type `int64` and shape `[batch_size,

num_true]`. The target classes. Note that this format differs from

the `labels` argument of `nn.softmax_cross_entropy_with_logits_v2`.

inputs: A `Tensor` of shape `[batch_size, dim]`. The forward

activations of the input network.

num_sampled: An `int`. The number of classes to randomly sample per batch.

num_classes: An `int`. The number of possible classes.

num_true: An `int`. The number of target classes per training example.

sampled_values: a tuple of (`sampled_candidates`, `true_expected_count`,

`sampled_expected_count`) returned by a `*_candidate_sampler` function.

(if None, we default to `log_uniform_candidate_sampler`)

subtract_log_q: A `bool`. whether to subtract the log expected count of

the labels in the sample to get the logits of the true labels.

Default is True. Turn off for Negative Sampling.

remove_accidental_hits: A `bool`. whether to remove "accidental hits"

where a sampled class equals one of the target classes. Default is

False.

partition_strategy: A string specifying the partitioning strategy, relevant

if `len(weights) > 1`. Currently `"div"` and `"mod"` are supported.

Default is `"mod"`. See `tf.nn.embedding_lookup` for more details.

name: A name for the operation (optional).

seed: random seed for candidate sampling. Default to None, which doesn't set

the op-level random seed for candidate sampling.

Returns:

out_logits: `Tensor` object with shape

`[batch_size, num_true + num_sampled]`, for passing to either

`nn.sigmoid_cross_entropy_with_logits` (NCE) or

`nn.softmax_cross_entropy_with_logits_v2` (sampled softmax).

out_labels: A Tensor object with the same shape as `out_logits`.

"""

if isinstance(weights, variables.PartitionedVariable):

weights = list(weights)

if not isinstance(weights, list):

weights = [weights]

with ops.name_scope(name, "compute_sampled_logits",

weights + [biases, inputs, labels]):

if labels.dtype != dtypes.int64:

labels = math_ops.cast(labels, dtypes.int64)

labels_flat = array_ops.reshape(labels, [-1])

# Sample the negative labels.

# sampled shape: [num_sampled] tensor

# true_expected_count shape = [batch_size, 1] tensor

# sampled_expected_count shape = [num_sampled] tensor

if sampled_values is None:

sampled_values = candidate_sampling_ops.log_uniform_candidate_sampler(

true_classes=labels,

num_true=num_true,

num_sampled=num_sampled,

unique=True,

range_max=num_classes,

seed=seed)

# NOTE: pylint cannot tell that 'sampled_values' is a sequence

# pylint: disable=unpacking-non-sequence

sampled, true_expected_count, sampled_expected_count = (

array_ops.stop_gradient(s) for s in sampled_values)

# pylint: enable=unpacking-non-sequence

sampled = math_ops.cast(sampled, dtypes.int64)

# labels_flat is a [batch_size * num_true] tensor

# sampled is a [num_sampled] int tensor

all_ids = array_ops.concat([labels_flat, sampled], 0)

# Retrieve the true weights and the logits of the sampled weights.

# weights shape is [num_classes, dim]

all_w = embedding_ops.embedding_lookup(

weights, all_ids, partition_strategy=partition_strategy)

# true_w shape is [batch_size * num_true, dim]

true_w = array_ops.slice(all_w, [0, 0],

array_ops.stack(

[array_ops.shape(labels_flat)[0], -1]))

sampled_w = array_ops.slice(

all_w, array_ops.stack([array_ops.shape(labels_flat)[0], 0]), [-1, -1])

# inputs has shape [batch_size, dim]

# sampled_w has shape [num_sampled, dim]

# Apply X*W', which yields [batch_size, num_sampled]

sampled_logits = math_ops.matmul(inputs, sampled_w, transpose_b=True)

# Retrieve the true and sampled biases, compute the true logits, and

# add the biases to the true and sampled logits.

all_b = embedding_ops.embedding_lookup(

biases, all_ids, partition_strategy=partition_strategy)

# true_b is a [batch_size * num_true] tensor

# sampled_b is a [num_sampled] float tensor

true_b = array_ops.slice(all_b, [0], array_ops.shape(labels_flat))

sampled_b = array_ops.slice(all_b, array_ops.shape(labels_flat), [-1])

# inputs shape is [batch_size, dim]

# true_w shape is [batch_size * num_true, dim]

# row_wise_dots is [batch_size, num_true, dim]

dim = array_ops.shape(true_w)[1:2]

new_true_w_shape = array_ops.concat([[-1, num_true], dim], 0)

row_wise_dots = math_ops.multiply(

array_ops.expand_dims(inputs, 1),

array_ops.reshape(true_w, new_true_w_shape))

# We want the row-wise dot plus biases which yields a

# [batch_size, num_true] tensor of true_logits.

dots_as_matrix = array_ops.reshape(row_wise_dots,

array_ops.concat([[-1], dim], 0))

true_logits = array_ops.reshape(_sum_rows(dots_as_matrix), [-1, num_true])

true_b = array_ops.reshape(true_b, [-1, num_true])

true_logits += true_b

sampled_logits += sampled_b

if remove_accidental_hits:

acc_hits = candidate_sampling_ops.compute_accidental_hits(

labels, sampled, num_true=num_true)

acc_indices, acc_ids, acc_weights = acc_hits

# This is how SparseToDense expects the indices.

acc_indices_2d = array_ops.reshape(acc_indices, [-1, 1])

acc_ids_2d_int32 = array_ops.reshape(

math_ops.cast(acc_ids, dtypes.int32), [-1, 1])

sparse_indices = array_ops.concat([acc_indices_2d, acc_ids_2d_int32], 1,

"sparse_indices")

# Create sampled_logits_shape = [batch_size, num_sampled]

sampled_logits_shape = array_ops.concat(

[array_ops.shape(labels)[:1],

array_ops.expand_dims(num_sampled, 0)], 0)

if sampled_logits.dtype != acc_weights.dtype:

acc_weights = math_ops.cast(acc_weights, sampled_logits.dtype)

sampled_logits += sparse_ops.sparse_to_dense(

sparse_indices,

sampled_logits_shape,

acc_weights,

default_value=0.0,

validate_indices=False)

if subtract_log_q:

# Subtract log of Q(l), prior probability that l appears in sampled.

true_logits -= math_ops.log(true_expected_count)

sampled_logits -= math_ops.log(sampled_expected_count)

# Construct output logits and labels. The true labels/logits start at col 0.

out_logits = array_ops.concat([true_logits, sampled_logits], 1)

# true_logits is a float tensor, ones_like(true_logits) is a float

# tensor of ones. We then divide by num_true to ensure the per-example

# labels sum to 1.0, i.e. form a proper probability distribution.

out_labels = array_ops.concat([

array_ops.ones_like(true_logits) / num_true,

array_ops.zeros_like(sampled_logits)

], 1)

return out_logits, out_labels

First look at the return shapes described in the docstring at the top:

Returns:

out_logits: `Tensor` object with shape

`[batch_size, num_true + num_sampled]`, for passing to either

`nn.sigmoid_cross_entropy_with_logits` (NCE) or

`nn.softmax_cross_entropy_with_logits_v2` (sampled softmax).

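out_labels: A Tensor object with the same shape as `out_logits`.

That is, the returned out_logits and out_labels both have shape [batch_size, num_true + num_sampled], where num_true + num_sampled is the number of positive samples plus the number of negative samples.

Now look at the end of the function: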

out_labels = array_ops.concat([

array_ops.ones_like(true_logits) / num_true,

array_ops.zeros_like(sampled_logits)

], 1)

Here array_ops.ones_like and array_ops.zeros_like create tensors filled with ones and with zeros, as the source below also shows:

https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/ops/array_ops.py

@tf_export("ones_like")

def ones_like(tensor, dtype=None, name=None, optimize=True):

"""Creates a tensor with all elements set to 1.

Given a single tensor (`tensor`), this operation returns a tensor of the same

type and shape as `tensor` with all elements set to 1. Optionally, you can

specify a new type (`dtype`) for the returned tensor.

For example:

```python

tensor = tf.constant([[1, 2, 3], [4, 5, 6]])

tf.ones_like(tensor) # [[1, 1, 1], [1, 1, 1]]

```

Args:

tensor: A `Tensor`.

dtype: A type for the returned `Tensor`. Must be `float32`, `float64`,

`int8`, `uint8`, `int16`, `uint16`, `int32`, `int64`,

`complex64`, `complex128` or `bool`.

name: A name for the operation (optional).

optimize: if true, attempt to statically determine the shape of 'tensor'

and encode it as a constant.

Returns:

A `Tensor` with all elements set to 1.

"""

with ops.name_scope(name, "ones_like", [tensor]) as name:

tensor = ops.convert_to_tensor(tensor, name="tensor")

ones_shape = shape_internal(tensor, optimize=optimize)

if dtype is None:

dtype = tensor.dtype

ret = ones(ones_shape, dtype=dtype, name=name)

if not context.executing_eagerly():

ret.set_shape(tensor.get_shape())

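return ret

To summarize:

out_logits holds the logits (scores) of the candidate classes for each example: the first num_true columns belong to the true classes, the remaining num_sampled columns to the sampled negatives.

out_labels holds the matching targets for positives + negatives: positives are marked 1 / num_true (1 in this demo), negatives are marked 0.

This is the essence of negative sampling. Each candidate has only two possible outcomes, so a binary classification per candidate is enough. It replaces a prediction over the whole dictionary (all 50000 outcomes in this example), which greatly reduces the computation.

Both have shape [batch_size, num_true + num_sampled].

Also, since sampled_values=None in this demo,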

if sampled_values is None:

sampled_values = candidate_sampling_ops.log_uniform_candidate_sampler(

true_classes=labels,

num_true=num_true,

num_sampled=num_sampled,

unique=True,

range_max=num_classes,

seed=seed)

the sampler used is candidate_sampling_ops.log_uniform_candidate_sampler:

https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/ops/candidate_sampling_ops.py

def log_uniform_candidate_sampler(true_classes, num_true, num_sampled, unique,

range_max, seed=None, name=None):

"""Samples a set of classes using a log-uniform (Zipfian) base distribution.

This operation randomly samples a tensor of sampled classes

(`sampled_candidates`) from the range of integers `[0, range_max)`.

The elements of `sampled_candidates` are drawn without replacement

(if `unique=True`) or with replacement (if `unique=False`) from

the base distribution.

The base distribution for this operation is an approximately log-uniform

or Zipfian distribution:

`P(class) = (log(class + 2) - log(class + 1)) / log(range_max + 1)`

This sampler is useful when the target classes approximately follow such

a distribution - for example, if the classes represent words in a lexicon

sorted in decreasing order of frequency. If your classes are not ordered by

decreasing frequency, do not use this op.

In addition, this operation returns tensors `true_expected_count`

and `sampled_expected_count` representing the number of times each

of the target classes (`true_classes`) and the sampled

classes (`sampled_candidates`) is expected to occur in an average

tensor of sampled classes. These values correspond to `Q(y|x)`

defined in [this

document](http://www.tensorflow.org/extras/candidate_sampling.pdf).

If `unique=True`, then these are post-rejection probabilities and we

compute them approximately.

Args:

true_classes: A `Tensor` of type `int64` and shape `[batch_size,

num_true]`. The target classes.

num_true: An `int`. The number of target classes per training example.

num_sampled: An `int`. The number of classes to randomly sample.

unique: A `bool`. Determines whether all sampled classes in a batch are

unique.

range_max: An `int`. The number of possible classes.

seed: An `int`. An operation-specific seed. Default is 0.

name: A name for the operation (optional).

Returns:

sampled_candidates: A tensor of type `int64` and shape `[num_sampled]`.

The sampled classes.

true_expected_count: A tensor of type `float`. Same shape as

`true_classes`. The expected counts under the sampling distribution

of each of `true_classes`.

sampled_expected_count: A tensor of type `float`. Same shape as

`sampled_candidates`. The expected counts under the sampling distribution

of each of `sampled_candidates`.

"""

seed1, seed2 = random_seed.get_seed(seed)

return gen_candidate_sampling_ops.log_uniform_candidate_sampler(

true_classes, num_true, num_sampled, unique, range_max, seed=seed1,

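seed2=seed2, name=name)

As you can see, negative samples are drawn according to the probability given in the docstring. Raw word frequency is not used directly as the sampling probability, because then the negatives would almost all be high-frequency words such as and, of, i, which is clearly not good. The other extreme is to use the inverse of the frequency, but that is not representative of English text either. According to a paper by Mikolov, the value found empirically is the word frequency raised to the 3/4 power:

P(w_i) = f(w_i)^(3/4) / sum_j f(w_j)^(3/4)

The sampler here does not use that formula, but it still lands between the two extremes. P(class) is a decreasing function: the smaller class is, the larger P(class). Here class is the ID of a word, and from the dictionary-building step we know that the higher the frequency, the smaller the ID (UNK aside), so high-frequency words are still more likely to be drawn as negatives!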

P(class) = (log(class + 2) - log(class + 1)) / log(range_max + 1)
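A quick numeric check of this formula: the sketch below evaluates P(class) for every ID with range_max=50000 (the demo's vocabulary size) and confirms that the values form a decreasing probability distribution. log_uniform_prob is a helper name chosen for this illustration.

```python
import math


def log_uniform_prob(cls, range_max):
    """P(class) of the log-uniform (Zipfian) base distribution from the docstring."""
    return (math.log(cls + 2) - math.log(cls + 1)) / math.log(range_max + 1)


range_max = 50000
probs = [log_uniform_prob(c, range_max) for c in range(range_max)]

total = math.fsum(probs)
is_decreasing = all(a > b for a, b in zip(probs, probs[1:]))

print(abs(total - 1.0) < 1e-9)  # the probabilities sum to 1 (the logs telescope)
print(is_decreasing)            # smaller ID (more frequent word) means larger probability
print(probs[0], probs[99], probs[49999])
```

The sum is 1 because the numerators telescope to log(range_max + 1) - log(1). This is also why the IDs must be sorted by decreasing frequency: the sampler only ever sees the ID, never the actual word counts.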

(2) Next, the sigmoid_cross_entropy_with_logits function

def sigmoid_cross_entropy_with_logits( # pylint: disable=invalid-name

_sentinel=None,

labels=None,

logits=None,

name=None):

"""Computes sigmoid cross entropy given `logits`.

Measures the probability error in discrete classification tasks in which each

class is independent and not mutually exclusive. For instance, one could

perform multilabel classification where a picture can contain both an elephant

and a dog at the same time.

For brevity, let `x = logits`, `z = labels`. The logistic loss is

z * -log(sigmoid(x)) + (1 - z) * -log(1 - sigmoid(x))

= z * -log(1 / (1 + exp(-x))) + (1 - z) * -log(exp(-x) / (1 + exp(-x)))

= z * log(1 + exp(-x)) + (1 - z) * (-log(exp(-x)) + log(1 + exp(-x)))

= z * log(1 + exp(-x)) + (1 - z) * (x + log(1 + exp(-x))

= (1 - z) * x + log(1 + exp(-x))

= x - x * z + log(1 + exp(-x))

For x < 0, to avoid overflow in exp(-x), we reformulate the above

x - x * z + log(1 + exp(-x))

= log(exp(x)) - x * z + log(1 + exp(-x))

= - x * z + log(1 + exp(x))

Hence, to ensure stability and avoid overflow, the implementation uses this

equivalent formulation

max(x, 0) - x * z + log(1 + exp(-abs(x)))

`logits` and `labels` must have the same type and shape.

Args:

_sentinel: Used to prevent positional parameters. Internal, do not use.

labels: A `Tensor` of the same type and shape as `logits`.

logits: A `Tensor` of type `float32` or `float64`.

name: A name for the operation (optional).

Returns:

A `Tensor` of the same shape as `logits` with the componentwise

logistic losses.

Raises:

ValueError: If `logits` and `labels` do not have the same shape.

"""

# pylint: disable=protected-access

nn_ops._ensure_xent_args("sigmoid_cross_entropy_with_logits", _sentinel,

labels, logits)

# pylint: enable=protected-access

with ops.name_scope(name, "logistic_loss", [logits, labels]) as name:

logits = ops.convert_to_tensor(logits, name="logits")

labels = ops.convert_to_tensor(labels, name="labels")

try:

labels.get_shape().merge_with(logits.get_shape())

except ValueError:

raise ValueError("logits and labels must have the same shape (%s vs %s)" %

(logits.get_shape(), labels.get_shape()))

# The logistic loss formula from above is

# x - x * z + log(1 + exp(-x))

# For x < 0, a more numerically stable formula is

# -x * z + log(1 + exp(x))

# Note that these two expressions can be combined into the following:

# max(x, 0) - x * z + log(1 + exp(-abs(x)))

# To allow computing gradients at zero, we define custom versions of max and

# abs functions.

zeros = array_ops.zeros_like(logits, dtype=logits.dtype)

cond = (logits >= zeros)

relu_logits = array_ops.where(cond, logits, zeros)

neg_abs_logits = array_ops.where(cond, -logits, logits)

return math_ops.add(

relu_logits - logits * labels,

math_ops.log1p(math_ops.exp(neg_abs_logits)),

name=name)

As you can see, the key part is really the following formula:

z * -log(sigmoid(x)) + (1 - z) * -log(1 - sigmoid(x))

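In fact, z * -log(x) + (1 - z) * -log(1 - x) is exactly the cross entropy. That's right: this function simply applies a sigmoid to the input and then computes the cross entropy.

As shown above, the expression simplifies to: x - x * z + log(1 + exp(-x))

When x < 0, exp(-x) may overflow, so for x < 0 the equivalent form - x * z + log(1 + exp(x)) is used instead.

Combining the two cases gives: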

max(x, 0) - x * z + log(1 + exp(-abs(x)))
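A minimal NumPy sketch (not TensorFlow's own code) can confirm that the stable form agrees with the textbook definition and stays finite for logits of very large magnitude. The names `naive_loss` and `stable_loss` are made up for this illustration.

```python
import numpy as np


def naive_loss(x, z):
    """Textbook form: z * -log(sigmoid(x)) + (1 - z) * -log(1 - sigmoid(x))."""
    s = 1.0 / (1.0 + np.exp(-x))
    return z * -np.log(s) + (1.0 - z) * -np.log(1.0 - s)


def stable_loss(x, z):
    """Stable form: max(x, 0) - x * z + log(1 + exp(-|x|))."""
    return np.maximum(x, 0.0) - x * z + np.log1p(np.exp(-np.abs(x)))


x = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
z = np.array([0.0, 1.0, 1.0, 0.0, 1.0])

# For moderate logits the two forms give the same values.
agree = np.allclose(naive_loss(x, z), stable_loss(x, z))
print(agree)

# For extreme logits the stable form stays finite, because exp(-|x|)
# underflows harmlessly to 0 instead of overflowing.
big = stable_loss(np.array([-1000.0, 1000.0]), np.array([1.0, 0.0]))
print(big)
```

The stable form only ever exponentiates a non-positive number, which is why it cannot overflow.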

For background on cross entropy, see:

https://blog.csdn.net/rtygbwwwerr/article/details/50778098

(3) Finally, let's look at _sum_rows

def _sum_rows(x):
    """Returns a vector summing up each row of the matrix x."""
    # _sum_rows(x) is equivalent to math_ops.reduce_sum(x, 1) when x is
    # a matrix. The gradient of _sum_rows(x) is more efficient than
    # reduce_sum(x, 1)'s gradient in today's implementation. Therefore,
    # we use _sum_rows(x) in the nce_loss() computation since the loss
    # is mostly used for training.
    cols = array_ops.shape(x)[1]
    ones_shape = array_ops.stack([cols, 1])
    ones = array_ops.ones(ones_shape, x.dtype)
    return array_ops.reshape(math_ops.matmul(x, ones), [-1])

This one is quite simple: it sums each row of the matrix. Given the [batch_size, num_true + num_sampled] shape from above, it adds the true loss and the sampled loss together, i.e. it yields the total loss of each example in the batch.
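The trick of summing rows by multiplying with a column of ones can be checked in plain NumPy. This is only an illustrative sketch: `sum_rows` and the sample `losses` matrix are hypothetical, not part of TensorFlow.

```python
import numpy as np


def sum_rows(x):
    """Sum each row of a 2-D array by multiplying with a column of ones."""
    cols = x.shape[1]
    ones = np.ones((cols, 1), dtype=x.dtype)
    return (x @ ones).reshape(-1)


# Hypothetical per-class losses: 2 examples, 1 true class + 2 sampled classes.
losses = np.array([[0.5, 1.0, 1.5],
                   [2.0, 0.25, 0.75]])
row_totals = sum_rows(losses)
print(row_totals)  # [3. 3.]
```

The result matches `losses.sum(axis=1)`, which is the NumPy counterpart of `reduce_sum(x, 1)`.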

That completes tf.nn.nce_loss, which is the most important part.

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

As the code shows, the optimizer chosen here is GradientDescentOptimizer, and the word2vec embedding matrix is then normalized via normalized_embeddings = embeddings / norm, so that every row has unit L2 norm.
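To see why this normalization matters, here is a small NumPy sketch under toy assumptions (6 words, 4 dimensions, random values). It repeats the same arithmetic as the graph and checks that a dot product between two normalized rows equals their cosine similarity.

```python
import numpy as np

rng = np.random.default_rng(0)
embeddings = rng.uniform(-1.0, 1.0, size=(6, 4))  # toy: 6 words, 4 dimensions

# Same arithmetic as the graph: divide each row by its L2 norm.
norm = np.sqrt(np.sum(np.square(embeddings), axis=1, keepdims=True))
normalized = embeddings / norm

# Every row now has length 1 ...
row_lengths = np.linalg.norm(normalized, axis=1)

# ... so a plain dot product between two rows is their cosine similarity.
dot = normalized[0] @ normalized[1]
cosine = (embeddings[0] @ embeddings[1]) / (
    np.linalg.norm(embeddings[0]) * np.linalg.norm(embeddings[1]))
print(row_lengths, dot, cosine)
```

This is why a single matrix multiplication over the normalized matrix is enough to obtain all pairwise cosine similarities.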

Continuing from above, let's look at the part that computes cosine similarity:

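valid_embeddings = tf.nn.embedding_lookup(normalized_embeddings,
                                          valid_dataset)
similarity = tf.matmul(
    valid_embeddings, normalized_embeddings, transpose_b=True)

This is straightforward: it looks up the word vectors for the ids in valid_dataset and computes their cosine similarity against every word vector. Cosine similarity measures how alike two vectors are by the cosine of the angle between them. When the angle is 0 the two vectors point in the same direction and are most similar, and the cosine reaches its maximum of 1. In this demo, 16 words are picked at random from the 100 most frequent words:

valid_size = 16  # Random set of words to evaluate similarity on.
valid_window = 100  # Only pick dev samples in the head of the distribution.
valid_examples = np.random.choice(valid_window, valid_size, replace=False)

(9) Create the session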

num_steps = 100001

with tf.Session(graph=graph) as session:
    # Open a writer to write summaries.
    writer = tf.summary.FileWriter(FLAGS.log_dir, session.graph)

    # We must initialize all variables before we use them.
    init.run()
    print('Initialized')

    average_loss = 0
    for step in xrange(num_steps):
        batch_inputs, batch_labels = generate_batch(batch_size, num_skips,
                                                    skip_window)
        feed_dict = {train_inputs: batch_inputs, train_labels: batch_labels}

        # Define metadata variable.
        run_metadata = tf.RunMetadata()

        # We perform one update step by evaluating the optimizer op (including it
        # in the list of returned values for session.run()
        # Also, evaluate the merged op to get all summaries from the returned "summary" variable.
        # Feed metadata variable to session for visualizing the graph in TensorBoard.
        _, summary, loss_val = session.run(
            [optimizer, merged, loss],
            feed_dict=feed_dict,
            run_metadata=run_metadata)
        average_loss += loss_val

        # Add returned summaries to writer in each step.
        writer.add_summary(summary, step)
        # Add metadata to visualize the graph for the last run.
        if step == (num_steps - 1):
            writer.add_run_metadata(run_metadata, 'step%d' % step)

        if step % 2000 == 0:
            if step > 0:
                average_loss /= 2000
            # The average loss is an estimate of the loss over the last 2000 batches.
            print('Average loss at step ', step, ': ', average_loss)
            average_loss = 0

        # Note that this is expensive (~20% slowdown if computed every 500 steps)
        if step % 10000 == 0:
            sim = similarity.eval()
            for i in xrange(valid_size):
                valid_word = reverse_dictionary[valid_examples[i]]
                top_k = 8  # number of nearest neighbors
                nearest = (-sim[i, :]).argsort()[1:top_k + 1]
                log_str = 'Nearest to %s:' % valid_word
                for k in xrange(top_k):
                    close_word = reverse_dictionary[nearest[k]]
                    log_str = '%s %s,' % (log_str, close_word)
                print(log_str)
    final_embeddings = normalized_embeddings.eval()

    # Write corresponding labels for the embeddings.
    with open(FLAGS.log_dir + '/metadata.tsv', 'w') as f:
        for i in xrange(vocabulary_size):
            f.write(reverse_dictionary[i] + '\n')

    # Save the model for checkpoints.
    saver.save(session, os.path.join(FLAGS.log_dir, 'model.ckpt'))

    # Create a configuration for visualizing embeddings with the labels in TensorBoard.
    config = projector.ProjectorConfig()
    embedding_conf = config.embeddings.add()
    embedding_conf.tensor_name = embeddings.name
    embedding_conf.metadata_path = os.path.join(FLAGS.log_dir, 'metadata.tsv')
    projector.visualize_embeddings(writer, config)

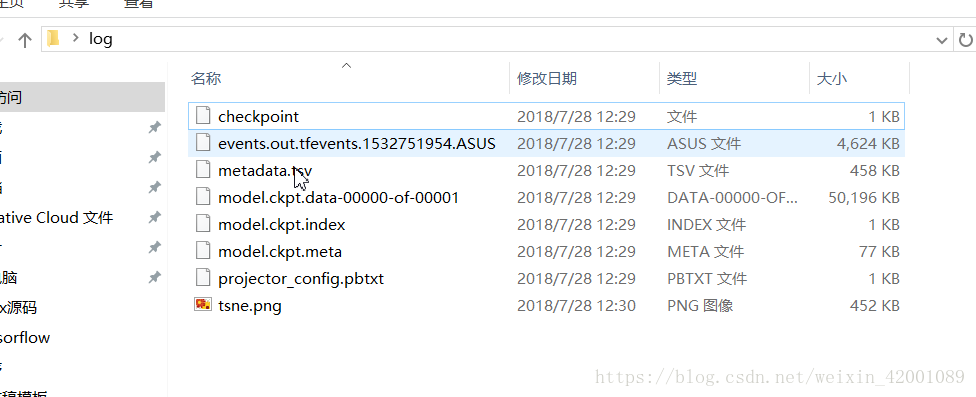

writer.close()这部分源码简单易懂主要依次做了以下几件事:

(1)训练模型

(2)在训练最后一次,保存模型用以后续可视化

(3)每2000次,计算一次平均loss

(4)每10000次,打印(八)中随机选取16个词各自对应的与其最相近的8个词

(5)保存训练好的embeddings矩阵为.tsv格式用于在tensorboard通过降维来可视化

(6)保存模型为.ckpt
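The nearest-neighbour lookup in step (4) relies on the expression `(-sim[i, :]).argsort()[1:top_k + 1]`. The following NumPy sketch shows what it does on a hypothetical 5-word vocabulary with hand-picked 2-D vectors; the words and values are invented for illustration only.

```python
import numpy as np

# Hypothetical 5-word vocabulary with hand-picked 2-D embeddings.
words = ['four', 'five', 'six', 'from', 'into']
embeddings = np.array([[1.0, 0.1],
                       [1.0, 0.0],
                       [1.0, -0.2],
                       [0.0, 1.0],
                       [0.3, 1.0]])
normalized = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)

valid_examples = np.array([1, 3])  # query words: 'five' and 'from'
sim = normalized[valid_examples] @ normalized.T  # shape (2, 5)

top_k = 2
neighbours = {}
for i, word_id in enumerate(valid_examples):
    # Negating turns argsort's ascending order into "most similar first".
    # Position 0 is the query word itself (similarity 1), so it is skipped.
    nearest = (-sim[i, :]).argsort()[1:top_k + 1]
    neighbours[words[word_id]] = [words[k] for k in nearest]
print(neighbours)
```

Skipping index 0 works because a normalized vector always has the highest similarity with itself.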

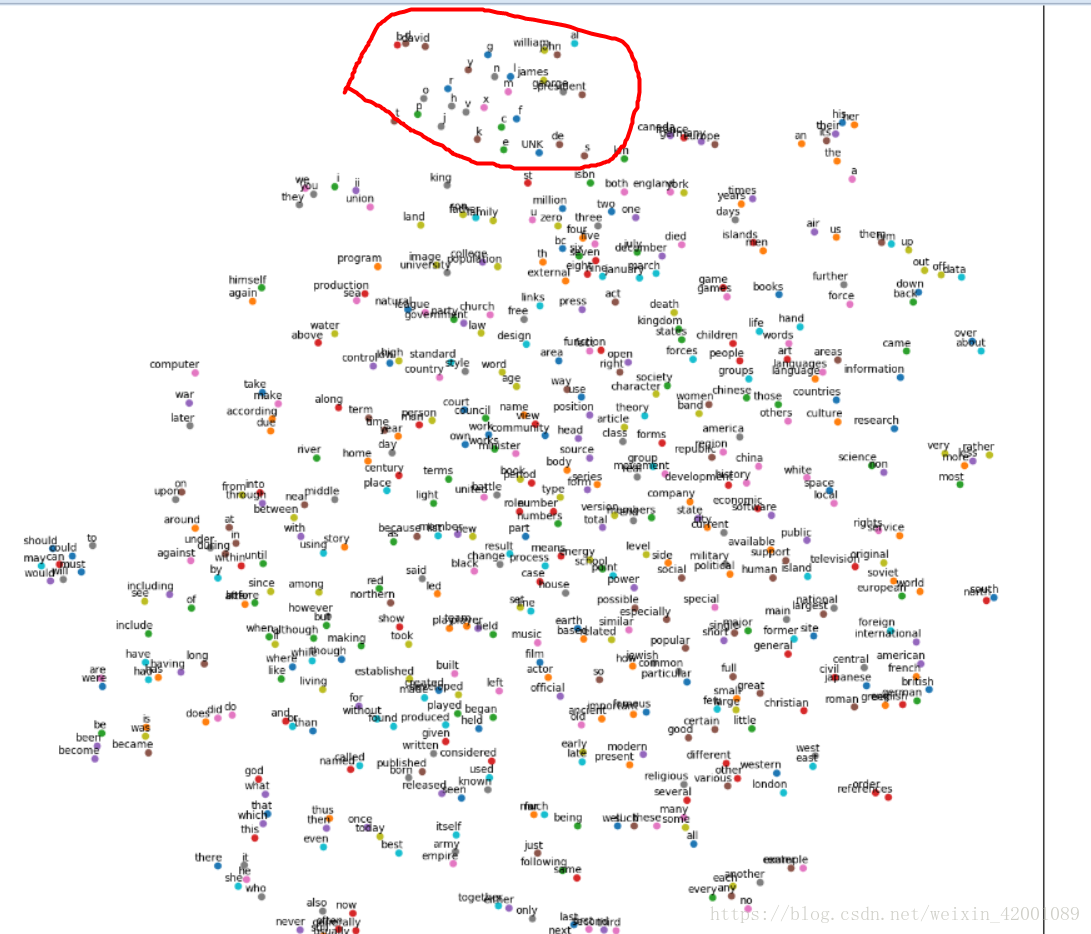

(十)二维可视化

def plot_with_labels(low_dim_embs, labels, filename):
    assert low_dim_embs.shape[0] >= len(labels), 'More labels than embeddings'
    plt.figure(figsize=(18, 18))  # in inches
    for i, label in enumerate(labels):
        x, y = low_dim_embs[i, :]
        plt.scatter(x, y)
        plt.annotate(
            label,
            xy=(x, y),
            xytext=(5, 2),
            textcoords='offset points',
            ha='right',
            va='bottom')

    plt.savefig(filename)


try:
    # pylint: disable=g-import-not-at-top
    from sklearn.manifold import TSNE
    import matplotlib.pyplot as plt

    tsne = TSNE(
        perplexity=30, n_components=2, init='pca', n_iter=5000, method='exact')
    plot_only = 500
    low_dim_embs = tsne.fit_transform(final_embeddings[:plot_only, :])
    labels = [reverse_dictionary[i] for i in xrange(plot_only)]
    # Save the figure as tsne.png inside the log directory.
    plot_with_labels(low_dim_embs, labels, os.path.join(FLAGS.log_dir, 'tsne.png'))

except ImportError as ex:
    print('Please install sklearn, matplotlib, and scipy to show embeddings.')
    print(ex)

In short, this step does one thing: it reduces the trained embeddings to 2 dimensions and saves the result as an image for visualization.

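Only the first 500 embeddings are used for the visualization. The key is the TSNE class, which performs the dimensionality reduction.

A brief note on the parameters:

perplexity: float, optional (default: 30). Larger datasets usually require a larger perplexity.

n_components: the number of dimensions to reduce to, default 2.

init: optional initialization of the embedding, default "random". pca is chosen here because it is usually more globally stable than random initialization. Note, however, that pca initialization cannot be used with precomputed distances.

n_iter: the maximum number of iterations for the optimization.

method: by default, the gradient calculation uses the Barnes-Hut approximation, which runs in O(N log N) time. method='exact' runs the slower but exact algorithm in O(N^2) time. The exact algorithm should be used when nearest-neighbor errors need to be better than 3%. However, the exact method cannot scale to millions of examples. New in version 0.17: the approximate optimization method via Barnes-Hut.

For more parameters, see: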

https://blog.csdn.net/qq_23534759/article/details/80457557

That concludes the walkthrough of the source code. Below are some screenshots of the run results:

The most frequent words and their counts are:

Most common words (+UNK) [['UNK', 418391], ('the', 1061396), ('of', 593677), ('and', 416629), ('one', 411764)]

Next come the first ten words of the text and their corresponding ids:

Sample data [5234, 3081, 12, 6, 195, 2, 3134, 46, 59, 156] ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against']

Then one batch of size 8 is taken, and its target words and context words are printed:

3081 originated -> 5234 anarchism

3081 originated -> 12 as

12 as -> 6 a

12 as -> 3081 originated

6 a -> 195 term

6 a -> 12 as

195 term -> 2 of

195 term -> 6 a

This also shows that each target word is used twice.
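The pairing can be reproduced with a few lines of plain Python. This is a simplified sketch: the demo's `generate_batch` samples the context words in random order, whereas the hypothetical `skipgram_pairs` helper below simply enumerates every neighbour inside the window.

```python
def skipgram_pairs(tokens, skip_window=1):
    """Return (center, context) pairs for every word that has a full window."""
    pairs = []
    for center in range(skip_window, len(tokens) - skip_window):
        for offset in range(-skip_window, skip_window + 1):
            if offset != 0:
                pairs.append((tokens[center], tokens[center + offset]))
    return pairs


tokens = ['anarchism', 'originated', 'as', 'a', 'term', 'of']
pairs = skipgram_pairs(tokens)
for center, context in pairs:
    print(center, '->', context)
```

With a window of 1 every center word has exactly two neighbours, which is why each target word appears twice in the batch.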

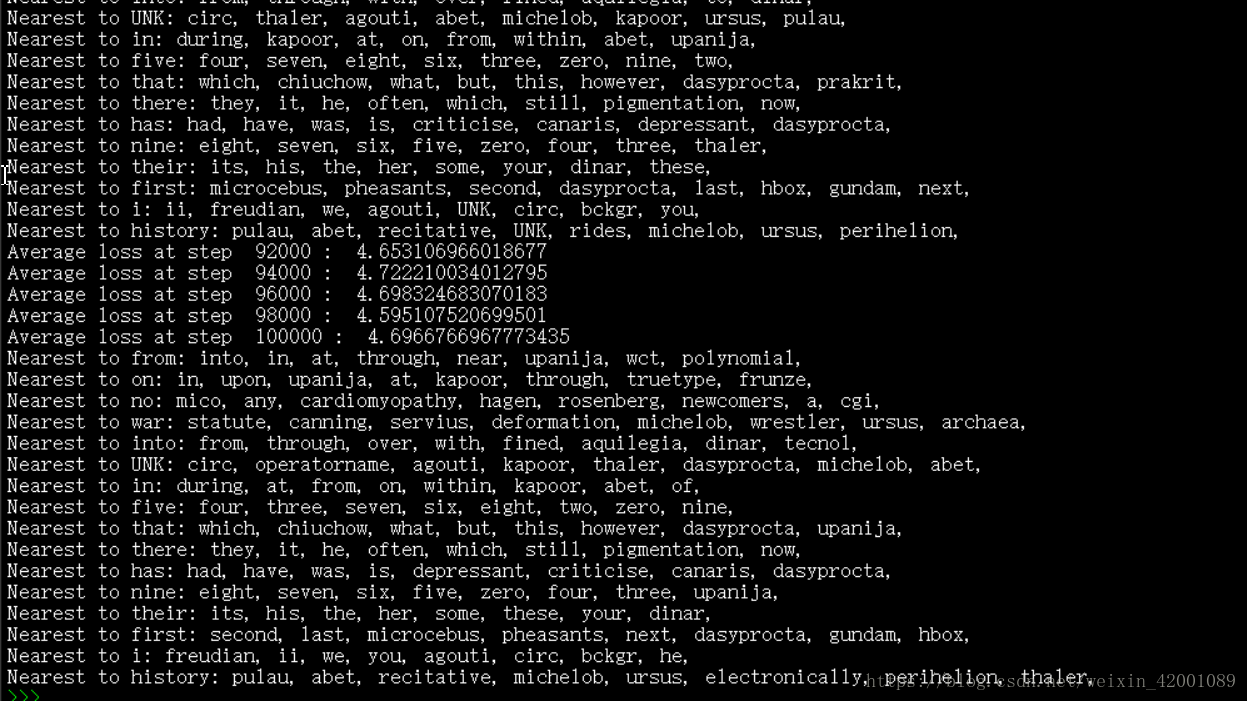

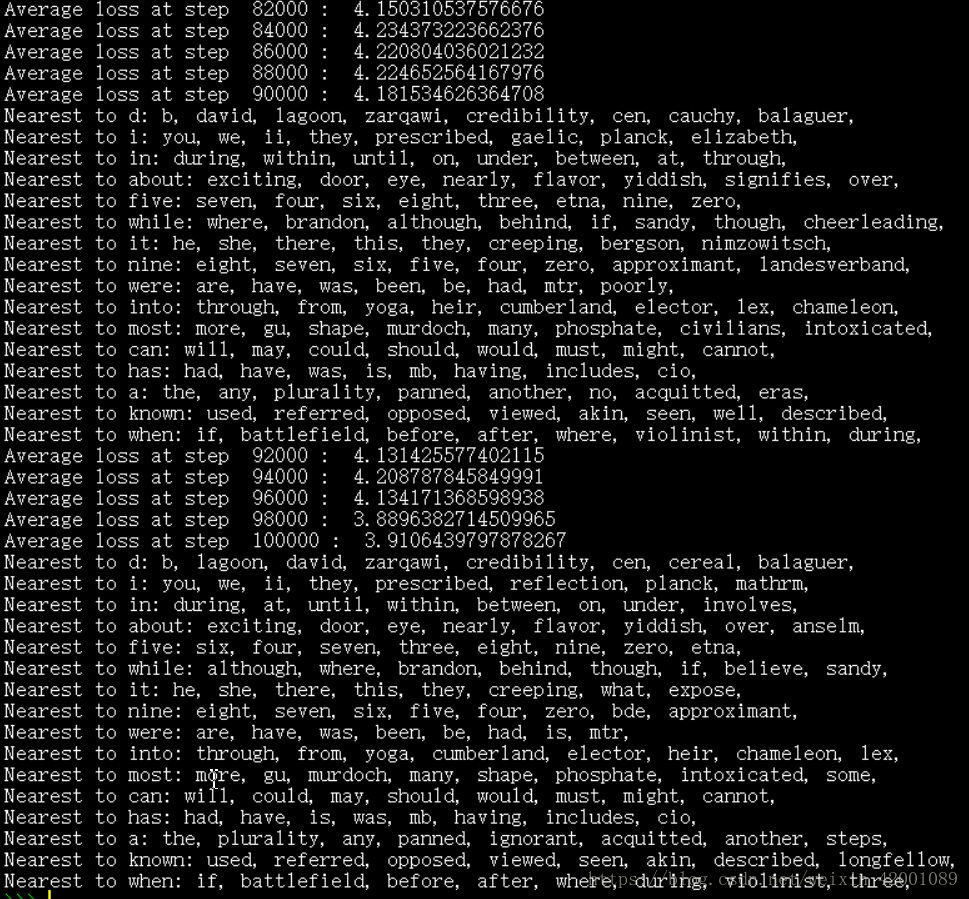

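Then the iterations proceed one after another. Let's look at the last one:

The words closest to from are into, in, at, through, near, upanija, wct, polynomial

The words closest to five are four, three, seven, six, eight, two, zero, nine, and so on

Overall, the results are fairly good.

Looking at the 2-D visualization in the figure above, the words inside the red circle, for example, are all auxiliary verbs.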

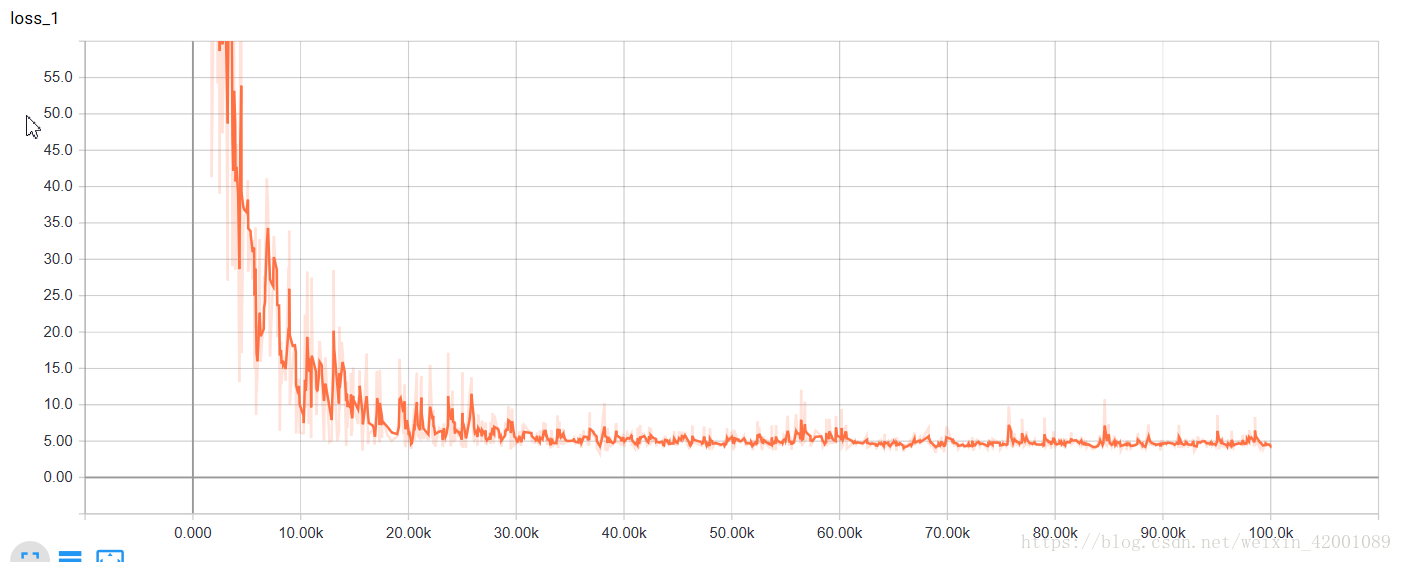

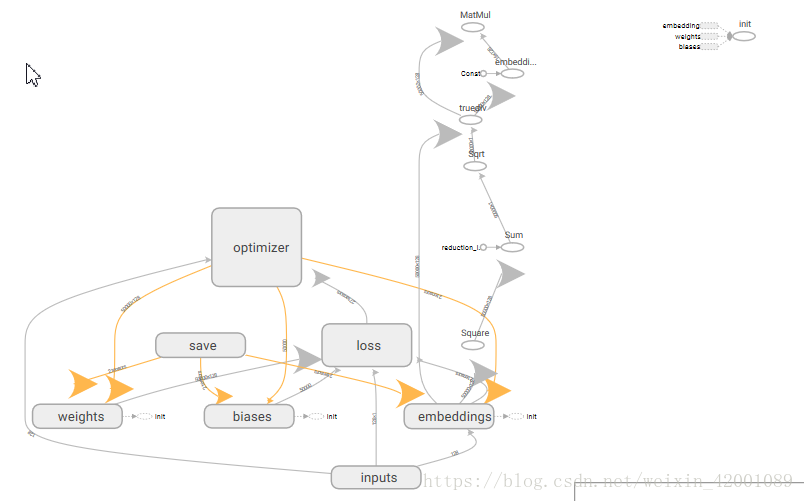

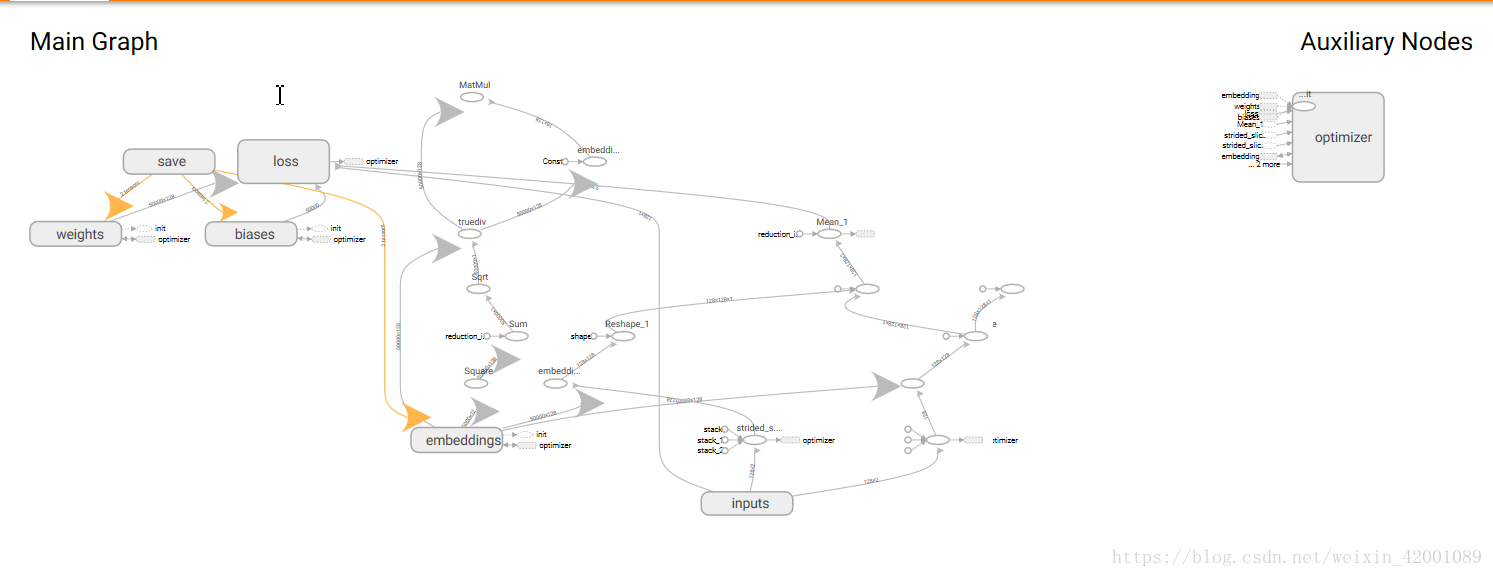

Now let's look at the visualization in TensorBoard.

###################################################################################################

The above is the Skip-Gram model of word2vec. CBOW is introduced next.

In contrast to the Skip-Gram model above:

When cbow_window=1:

I am looking for the missing glass-shoes who has picked it up ...

batch: ['i', 'looking'], ['am', 'for'], ['looking', 'the'], ['for', 'missing'], ...

labels: ['am', 'looking', 'for', 'the']
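The pairing above can be reproduced with a short plain-Python sketch. The helper name `cbow_examples` is hypothetical, and it works on raw words rather than on the integer ids used by the real training code.

```python
def cbow_examples(tokens, cbow_window=1):
    """Return (context_words, target) for every word that has a full window."""
    examples = []
    for center in range(cbow_window, len(tokens) - cbow_window):
        left = tokens[center - cbow_window:center]
        right = tokens[center + 1:center + cbow_window + 1]
        examples.append((left + right, tokens[center]))
    return examples


tokens = 'i am looking for the missing'.split()
examples = cbow_examples(tokens)
for context, target in examples:
    print(context, '->', target)
```

Each example now has 2 * cbow_window input words and a single label, which is the shape difference the rest of this section has to deal with.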

So the first change is to replace parts (6) and (8) above with:

def generate_batch(batch_size, cbow_window):
    global data_index
    assert cbow_window % 2 == 1
    span = 2 * cbow_window + 1
    # Drop the center word: span - 1 context words per example
    batch = np.ndarray(shape=(batch_size, span - 1), dtype=np.int32)
    labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)

    buffer = collections.deque(maxlen=span)
    for _ in range(span):
        buffer.append(data[data_index])
        # Cycle through data, wrapping around to the start at the end
        data_index = (data_index + 1) % len(data)

    for i in range(batch_size):
        # target at the center of span
        target = cbow_window
        # Only the context words are needed as input, not the center word
        target_to_avoid = [cbow_window]

        col_idx = 0
        for j in range(span):
            # Skip the center word
            if j == span // 2:
                continue
            batch[i, col_idx] = buffer[j]
            col_idx += 1
        labels[i, 0] = buffer[target]
        # Slide the window: update the buffer
        buffer.append(data[data_index])
        data_index = (data_index + 1) % len(data)

    return batch, labels


batch, labels = generate_batch(batch_size=8, cbow_window=1)
for i in range(8):
    print(reverse_dictionary[batch[i, 0]], 'and', reverse_dictionary[batch[i, 1]], '->',
          reverse_dictionary[labels[i, 0]])

with graph.as_default():

    # Input data.
    with tf.name_scope('inputs'):
        train_dataset = tf.placeholder(tf.int32, shape=[batch_size, 2 * cbow_window])
        train_labels = tf.placeholder(tf.int32, shape=[batch_size, 1])
        valid_dataset = tf.constant(valid_examples, dtype=tf.int32)

    # Ops and variables pinned to the CPU because of missing GPU implementation
    with tf.device('/cpu:0'):
        # Look up embeddings for inputs.
        with tf.name_scope('embeddings'):
            embeddings = tf.Variable(
                tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))

        # Construct the variables for the NCE loss
        with tf.name_scope('weights'):
            nce_weights = tf.Variable(
                tf.truncated_normal(
                    [vocabulary_size, embedding_size],
                    stddev=1.0 / math.sqrt(embedding_size)))
        with tf.name_scope('biases'):
            nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

        embeds = None
        for i in range(2 * cbow_window):
            embedding_i = tf.nn.embedding_lookup(embeddings, train_dataset[:, i])
            print('embedding %d shape: %s' % (i, embedding_i.get_shape().as_list()))
            emb_x, emb_y = embedding_i.get_shape().as_list()
            if embeds is None:
                embeds = tf.reshape(embedding_i, [emb_x, emb_y, 1])
            else:
                embeds = tf.concat([embeds, tf.reshape(embedding_i, [emb_x, emb_y, 1])], 2)

        print("Concat embedding size: %s" % embeds.get_shape().as_list())
        avg_embed = tf.reduce_mean(embeds, 2, keep_dims=False)
        print("Avg embedding size: %s" % avg_embed.get_shape().as_list())
        print('--------------------------------------------------------------------------------------------')
        print(avg_embed.shape)
        print(train_labels.shape)
        print('--------------------------------------------------------------------------------------------')

    # Compute the average NCE loss for the batch.
    # tf.nce_loss automatically draws a new sample of the negative labels each
    # time we evaluate the loss.
    # Explanation of the meaning of NCE loss:
    #   http://mccormickml.com/2016/04/19/word2vec-tutorial-the-skip-gram-model/
    with tf.name_scope('loss'):
        loss = tf.reduce_mean(
            tf.nn.nce_loss(
                weights=nce_weights,
                biases=nce_biases,
                labels=train_labels,
                inputs=avg_embed,
                num_sampled=num_sampled,
                num_classes=vocabulary_size))

    # Add the loss value as a scalar to summary.
    tf.summary.scalar('loss', loss)

    # Construct the SGD optimizer using a learning rate of 1.0.
    with tf.name_scope('optimizer'):
        optimizer = tf.train.GradientDescentOptimizer(1.0).minimize(loss)

    # Compute the cosine similarity between minibatch examples and all embeddings.
    norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1, keepdims=True))
    normalized_embeddings = embeddings / norm
    valid_embeddings = tf.nn.embedding_lookup(normalized_embeddings,
                                              valid_dataset)
    similarity = tf.matmul(
        valid_embeddings, normalized_embeddings, transpose_b=True)

    # Merge all summaries.
    merged = tf.summary.merge_all()

    # Add variable initializer.
    init = tf.global_variables_initializer()

    # Create a saver.
    saver = tf.train.Saver()

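The tricky part here is making the dimensions of batch and labels line up.

In what follows, the embedding size is assumed to be 128.

For the Skip-Gram model, generate_batch returns:

batch: batch_size

labels: batch_size × 1

Then, after embed = tf.nn.embedding_lookup(embeddings, train_inputs) in (8) (with train_inputs = batch):

embed: batch_size × 128

labels: batch_size × 1

These two tensors are what get passed to tf.nn.nce_loss for training.

For the CBOW model, generate_batch returns:

batch: batch_size × (span - 1), i.e. batch_size × 2

labels: batch_size × 1

If we kept the Skip-Gram approach and ran embed = tf.nn.embedding_lookup(embeddings, train_inputs) from (8) (with train_inputs = batch), the result would have shape batch_size × 2 × 128, which does not match the [batch_size, dim] input that tf.nn.nce_loss expects, so an error is raised. The underlying difference is that Skip-Gram feeds one input word per example, whereas CBOW feeds two context words per example.

For this reason, the program builds the input embedding as follows:

First, take the first context word (i=0) via embedding_i = tf.nn.embedding_lookup(embeddings, train_dataset[:,i])

At this point embedding_i has shape batch_size × 128, and it is reshaped to batch_size × 128 × 1

Then embedding_i is assigned to embeds

Next, take the remaining context word (i=1) via embedding_i = tf.nn.embedding_lookup(embeddings, train_dataset[:,i])

Again embedding_i has shape batch_size × 128 and is reshaped to batch_size × 128 × 1

Then embedding_i is concatenated onto embeds (along axis 2), so embeds now has shape batch_size × 128 × 2

After avg_embed = tf.reduce_mean(embeds, 2, keep_dims=False), avg_embed has shape batch_size × 128

Finally,

avg_embed: batch_size × 128

labels: batch_size × 1

are what get passed to tf.nn.nce_loss for training.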

This is not very intuitive, so here is an example (batch_size=8).

Suppose batch is [[1, 2], [3, 4], [5, 6], [7, 8], [9, 10], [11, 12], [13, 14], [15, 16]]

and embeddings is

[[1.1, 1.2, 1.3, ..., 1.128]
 [2.1, 2.2, 2.3, ..., 2.128]
 [3.1, 3.2, 3.3, ..., 3.128]
 ...
 [50000.1, 50000.2, 50000.3, ..., 50000.128]]

Then embeds is

[[[1.1, 2.1], [1.2, 2.2], [1.3, 2.3], ..., [1.128, 2.128]]
 [[3.1, 4.1], [3.2, 4.2], [3.3, 4.3], ..., [3.128, 4.128]]
 [[5.1, 6.1], [5.2, 6.2], [5.3, 6.3], ..., [5.128, 6.128]]
 [[7.1, 8.1], [7.2, 8.2], [7.3, 8.3], ..., [7.128, 8.128]]
 [[9.1, 10.1], [9.2, 10.2], [9.3, 10.3], ..., [9.128, 10.128]]
 [[11.1, 12.1], [11.2, 12.2], [11.3, 12.3], ..., [11.128, 12.128]]
 [[13.1, 14.1], [13.2, 14.2], [13.3, 14.3], ..., [13.128, 14.128]]
 [[15.1, 16.1], [15.2, 16.2], [15.3, 16.3], ..., [15.128, 16.128]]]

and avg_embed, the element-wise mean of each pair, is

[[1.6, 1.7, 1.8, ..., 1.628]
 [3.6, 3.7, 3.8, ..., 3.628]
 [5.6, 5.7, 5.8, ..., 5.628]
 ...
 [15.6, 15.7, 15.8, ..., 15.628]]

As this shows, the CBOW model simply averages the 128 features of the two context words element-wise and feeds the result in as a single input.
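The shape bookkeeping can be verified with a NumPy sketch under toy assumptions (20 words, 4 features, 3 examples, random values). It mirrors the column-by-column lookup and compares it with a shorter equivalent, a single lookup followed by a mean over the context axis.

```python
import numpy as np

vocabulary_size, embedding_size = 20, 4  # toy sizes, not the real ones
rng = np.random.default_rng(0)
embeddings = rng.uniform(-1.0, 1.0, size=(vocabulary_size, embedding_size))
batch = np.array([[1, 2], [3, 4], [5, 6]])  # two context ids per example

# Column-by-column lookup, stacked on a new last axis, as in the graph code.
embeds = np.stack([embeddings[batch[:, i]] for i in range(batch.shape[1])],
                  axis=2)                    # (batch_size, embedding_size, 2)
avg_embed = embeds.mean(axis=2)              # (batch_size, embedding_size)

# Equivalent shortcut: look up the whole batch, then average the context axis.
shortcut = embeddings[batch].mean(axis=1)    # (batch_size, embedding_size)
same = np.allclose(avg_embed, shortcut)
print(embeds.shape, avg_embed.shape, same)
```

Either route ends with one vector per example, which is the input shape that the loss needs; the loop-and-concat form is simply the one the original code uses.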

The rest of the code is essentially the same as in the Skip-Gram model.

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

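Below are some screenshots of the run results:

The loss at the final step is 3.91, whereas the Skip-Gram loss at the final step was 4.69, so the CBOW model trains to a somewhat better result here. This is likely because CBOW uses two words together to predict one word, whereas Skip-Gram uses one word to predict its context.

The visualization shows the result as well.

Visualization in TensorBoard:

Remember to click the part marked in red to see the 3-D distribution.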

###################################################################################################

Full code: https://github.com/Mryangkaitong/tensorflow/tree/master/word2vec

For visualization, you can use TensorBoard's built-in embedding tool; see:

https://blog.csdn.net/szj_huhu/article/details/75308970

http://www.360doc.com/content/17/0706/14/10408243_669327270.shtml

and so on.

Alternatively, turn the dataset into a .csv file and load it directly in the standalone embedding tool:

Address: http://projector.tensorflow.org/

https://blog.csdn.net/u010099080/article/details/53560426?fps=1&locationNum=11

For how to create a .csv file, see:

https://jingyan.baidu.com/article/9c69d48ff3123d13c9024e06.html