I. Software Environment

- Oracle VM VirtualBox

- Oracle Linux 6.5

- p13390677_112040_Linux-x86-64

- Hostnames: node1, node2

- Xmanager Enterprise 5

II. Host Setup

1. Network configuration

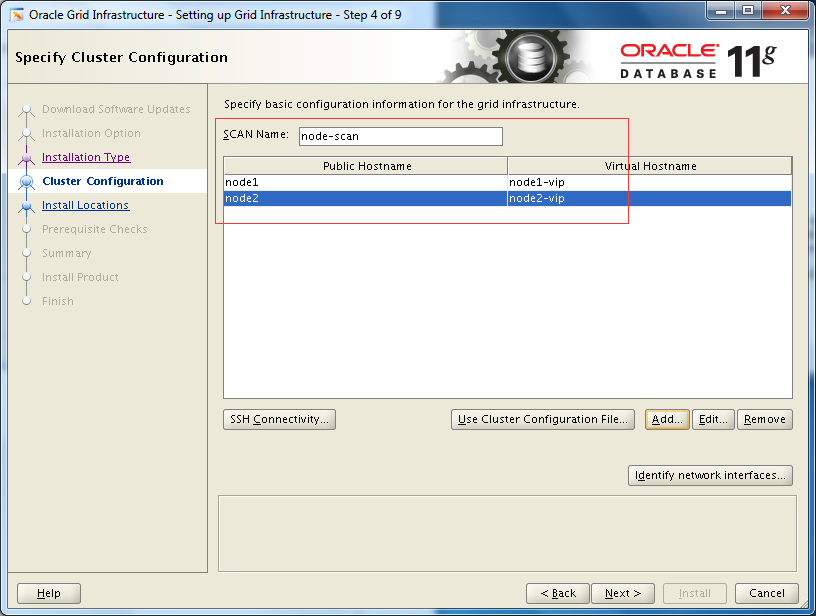

Configure the following on both node1 and node2:

    [root@node1 ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    #public ip
    192.168.1.117 node1
    192.168.1.118 node2
    #private ip
    10.10.10.11 node1-priv
    10.10.10.12 node2-priv
    #virtual ip
    192.168.1.120 node1-vip
    192.168.1.121 node2-vip
    #scan ip
    192.168.1.123 node-scan
    192.168.1.124 node-scan
    192.168.1.125 node-scan
    [root@node1 ~]#
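
Since every later step depends on these names resolving identically on both nodes, a quick check of the hosts file can save time. The following is a minimal sketch, not part of the original procedure: `missing_hosts` is a hypothetical helper name, and it only confirms that each name appears in some non-comment line of the file.

```shell
# Report which of the given hostnames have no entry in a hosts-format file.
# Usage: missing_hosts FILE NAME...
# Prints each missing name on its own line; returns 1 if any are missing.
# (missing_hosts is an illustrative helper, not an Oracle or OS tool.)
missing_hosts() (
    file=$1
    shift
    status=0
    for name in "$@"; do
        # Strip comments, then look for the name in any column after the address.
        if ! sed 's/#.*//' "$file" |
            awk -v n="$name" '
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit (found ? 0 : 1) }'
        then
            echo "$name"
            status=1
        fi
    done
    exit "$status"
)
```

For this setup it could be called as `missing_hosts /etc/hosts node1 node2 node1-priv node2-priv node1-vip node2-vip node-scan` on each node; no output means every name is present.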

2. User and group configuration

Configure the following on both node1 and node2:

    [root@node1 ~]# groupadd oinstall
    [root@node1 ~]# groupadd -g 1002 dba
    [root@node1 ~]# groupadd -g 1003 oper
    [root@node1 ~]# groupadd -g 1004 asmadmin
    [root@node1 ~]# groupadd -g 1005 asmdba
    [root@node1 ~]# groupadd -g 1006 asmoper
    [root@node1 ~]#
    [root@node1 ~]# useradd -u 1001 -g oinstall -G dba,asmdba,oper oracle
    [root@node1 ~]# passwd oracle
    Changing password for user oracle.
    New password:
    BAD PASSWORD: The password is shorter than 8 characters
    Retype new password:
    passwd: all authentication tokens updated successfully.
    [root@node1 ~]# useradd -u 1002 -g oinstall -G asmadmin,asmdba,asmoper,oper,dba grid
    [root@node1 ~]# passwd grid
    Changing password for user grid.
    New password:
    BAD PASSWORD: The password is shorter than 8 characters
    Retype new password:
    passwd: all authentication tokens updated successfully.
    [root@node1 ~]#
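
A missing secondary group is easy to overlook and tends to surface only later in the installer. As a rough sketch (the helper name `missing_groups` is made up for illustration), the group list printed by `id -Gn` can be compared with the groups assigned above:

```shell
# Print every required group that is absent from a space-separated group list.
# Usage: missing_groups "GROUP LIST" REQUIRED...
# Returns 1 if at least one required group is missing.
# (missing_groups is an illustrative helper, not a system command.)
missing_groups() (
    have=" $1 "
    shift
    status=0
    for g in "$@"; do
        case "$have" in
            *" $g "*) ;;                    # whole-word match, so "dba" != "asmdba"
            *) echo "$g"; status=1 ;;
        esac
    done
    exit "$status"
)
```

On each node this might be run as `missing_groups "$(id -Gn grid)" oinstall asmadmin asmdba asmoper oper dba` and `missing_groups "$(id -Gn oracle)" oinstall dba asmdba oper`.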

3. Directory configuration

Configure the following on both node1 and node2:

    [root@node1 ~]# mkdir -p /u01/app/grid
    [root@node1 ~]# chown -R grid:oinstall /u01/app/
    [root@node1 ~]# chmod -R 775 /u01/app/grid/
    [root@node1 ~]#
    [root@node1 ~]# mkdir -p /u01/app/oracle
    [root@node1 ~]# chown -R oracle:oinstall /u01/app/oracle/
    [root@node1 ~]# chmod -R 775 /u01/app/oracle/
    [root@node1 ~]#
    [root@node1 ~]# ll /u01/app/
    total 8
    drwxrwxr-x. 2 grid   oinstall 4096 Apr 13 22:25 grid
    drwxrwxr-x. 2 oracle oinstall 4096 Apr 13 22:26 oracle
    [root@node1 ~]# ll /u01/
    total 20
    drwxr-xr-x. 4 grid oinstall  4096 Apr 13 22:26 app
    drwx------. 2 root root     16384 Apr 12 23:30 lost+found
    [root@node1 ~]#

4. Install RPM packages

Install the following on both node1 and node2:

    [root@node1 ~]# yum -y install binutils compat-libcap1 compat-libstdc* gcc gcc-c++* glibc glibc-devel ksh libgcc libstdc++ libstdc++-devel libaio libaio-devel make elfutils-libelf-devel sysstat
    [root@node1 ~]# rpm -ivh pdksh-5.2.14-37.el5_8.1.x86_64.rpm --nodeps

5. Kernel parameter configuration

Configure the following on both node1 and node2. The lines after each vim command are the entries to add to that file:

    [root@node1 ~]# vim /etc/sysctl.conf
    fs.aio-max-nr = 1048576
    fs.file-max = 6815744
    kernel.shmmni = 4096
    kernel.sem = 250 32000 100 128
    net.ipv4.ip_local_port_range = 9000 65500
    net.core.rmem_default = 262144
    net.core.rmem_max = 4194304
    net.core.wmem_default = 262144
    net.core.wmem_max = 1048576
    [root@node1 ~]# sysctl -p
    [root@node1 ~]# vim /etc/security/limits.conf
    grid soft nproc 2047
    grid hard nproc 16384
    grid soft nofile 1024
    grid hard nofile 65536
    oracle soft nproc 2047
    oracle hard nproc 16384
    oracle soft nofile 1024
    oracle hard nofile 65536
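
To confirm that both nodes ended up with the same settings, the file can be checked key by key. This is only a sketch: `check_sysctl_min` is a hypothetical helper, and treating the values listed above as minimums is an assumption of this example, not something the original steps state.

```shell
# Check that KEY in a sysctl-style FILE has a first value of at least MIN.
# Usage: check_sysctl_min FILE KEY MIN
# Prints "KEY ok", "KEY low" or "KEY missing"; returns non-zero unless ok.
# (check_sysctl_min is an illustrative helper; MIN values are the caller's choice.)
check_sysctl_min() (
    file=$1
    key=$2
    min=$3
    # Use the last uncommented assignment of the key, since later lines win.
    value=$(sed 's/#.*//' "$file" |
        awk -F '=' -v k="$key" '
            { name = $1; gsub(/[ \t]/, "", name) }
            name == k { v = $2 }
            END { split(v, parts, " "); print parts[1] }')
    if [ -z "$value" ]; then
        echo "$key missing"
        exit 2
    elif [ "$value" -lt "$min" ]; then
        echo "$key low ($value < $min)"
        exit 1
    fi
    echo "$key ok ($value)"
)
```

For example, `check_sysctl_min /etc/sysctl.conf fs.file-max 6815744` reports whether the entry is present and large enough. For multi-value keys such as `kernel.sem`, only the first number is compared.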

6. NTP (Network Time Protocol) and firewall configuration

With ntpd stopped and /etc/ntp.conf moved out of the way, Oracle's Cluster Time Synchronization Service (CTSS) takes over time synchronization between the nodes.

    -- Stop and disable NTP
    [root@node1 ~]# /sbin/service ntpd stop
    Shutting down ntpd: [ OK ]
    [root@node1 ~]# chkconfig ntpd off
    [root@node1 ~]# mv /etc/ntp.conf /etc/ntp.conf.del    -- or simply delete the file
    [root@node1 ~]# service ntpd status
    ntpd is stopped
    -- Disable the firewall
    [root@node1 ~]# service iptables stop
    iptables: Setting chains to policy ACCEPT: filter [ OK ]
    iptables: Flushing firewall rules: [ OK ]
    iptables: Unloading modules: [ OK ]
    [root@node1 ~]# chkconfig iptables off
    [root@node1 ~]#
    -- Disable SELinux
    [root@node1 ~]# vim /etc/selinux/config
    SELINUX=disabled
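
Note that the `SELINUX=disabled` setting in the config file only takes effect at the next boot. A small sketch for reading back what the file currently says (the function name `selinux_config_mode` is invented for this example):

```shell
# Print the SELINUX= mode configured in an SELinux config file.
# Usage: selinux_config_mode FILE
# Comment lines and the SELINUXTYPE= entry are ignored because their
# first "="-separated field is not exactly "SELINUX".
# (selinux_config_mode is an illustrative helper, not a system command.)
selinux_config_mode() {
    awk -F '=' '$1 == "SELINUX" { mode = $2 } END { print mode }' "$1"
}
```

Running `selinux_config_mode /etc/selinux/config` next to `getenforce` shows the configured mode and the mode that is active right now.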

7. ASM configuration

For detailed steps, see: Configuring ASM with UDEV SCSI rules

Using udev, apply the following on node1 and node2 respectively:

    [root@node1 ~]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules
    KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBb5d5b05d-340e9bd0", NAME="asm-disk1", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBd53cfb55-f69e8ef1", NAME="asm-disk2", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBbca0854b-b445b128", NAME="asm-disk3", OWNER="grid", GROUP="asmadmin", MODE="0660"
    KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VB205f2eb0-8b04bf62", NAME="asm-disk4", OWNER="grid", GROUP="asmadmin", MODE="0660"
    [root@node1 ~]#
    [root@node1 ~]# partprobe /dev/sdb1
    [root@node1 ~]# partprobe /dev/sdc1
    [root@node1 ~]# partprobe /dev/sdd1
    [root@node1 ~]# partprobe /dev/sde1
    [root@node1 ~]# ll /dev/asm*
    brw-rw----. 1 grid asmadmin 8, 17 Apr 16 21:35 /dev/asm-disk1
    brw-rw----. 1 grid asmadmin 8, 33 Apr 16 21:35 /dev/asm-disk2
    brw-rw----. 1 grid asmadmin 8, 49 Apr 16 21:35 /dev/asm-disk3
    brw-rw----. 1 grid asmadmin 8, 65 Apr 16 21:35 /dev/asm-disk4
    [root@node1 ~]#
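
The four rules differ only in the SCSI ID and the disk number, so typing them by hand invites copy-paste mistakes. A minimal sketch of a generator, assuming the same rule layout as the file above (`asm_udev_rule` is a hypothetical helper name):

```shell
# Print one udev rule in the same shape as the rules file shown above.
# Usage: asm_udev_rule SCSI_ID DISK_NUMBER
# The format string is single-quoted so that $parent stays literal for udev.
# (asm_udev_rule is an illustrative helper; owner, group and mode are
# hard-coded to the values used in this walkthrough.)
asm_udev_rule() {
    printf 'KERNEL=="sd?1", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -d /dev/$parent", RESULT=="%s", NAME="asm-disk%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$1" "$2"
}
```

A rule for the first disk could then be produced with `asm_udev_rule "$(/sbin/scsi_id -g -u -d /dev/sdb)" 1` and appended to the rules file after review.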

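8. SSH equivalence configuration

For detailed steps, see: Manually configuring passwordless SSH access on all cluster nodes

Configure on both node1 and node2, then confirm that each node can reach the other without a password prompt:

    [grid@node1 .ssh]$ ssh node1 date
    Sat Apr 14 00:25:23 CST 2018
    [grid@node1 .ssh]$ ssh node2 date
    Sat Apr 14 00:25:27 CST 2018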

9. Environment variable settings

Configure on both node1 and node2. On node2, set ORACLE_SID to +ASM2.

    [grid@node1 ~]$ cat .bash_profile
    # .bash_profile
    # Get the aliases and functions
    if [ -f ~/.bashrc ]; then
        . ~/.bashrc
    fi
    # User specific environment and startup programs
    PATH=$PATH:$HOME/bin
    export PATH
    ORACLE_BASE=/u01/app/grid
    export ORACLE_BASE
    ORACLE_SID=+ASM1
    export ORACLE_SID

10. Install the cvuqdisk package

Install on both node1 and node2:

    [root@node1 ~]# export CVUQDISK_GRP=oinstall
    [root@node1 ~]# cd /u01/soft/grid/rpm/
    [root@node1 rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm
    Preparing...                ########################################### [100%]
       1:cvuqdisk               ########################################### [100%]
    [root@node1 rpm]#

11. Verification

    [grid@node1 ~]$ cd /u01/soft/grid/
    [grid@node1 grid]$ ./runcluvfy.sh stage -post hwos -n node1,node2 -verbose
    Performing post-checks for hardware and operating system setup
    Checking node reachability...
    Check: Node reachability from node "node1"
      Destination Node                      Reachable?
      ------------------------------------  ------------------------
      node1                                 yes
      node2                                 yes
    Result: Node reachability check passed from node "node1"
    Checking user equivalence...
    Check: User equivalence for user "grid"
      Node Name                             Status
      ------------------------------------  ------------------------
      node2                                 passed
      node1                                 passed
    Result: User equivalence check passed for user "grid"
    Checking node connectivity...
    Checking hosts config file...
      Node Name                             Status
      ------------------------------------  ------------------------
      node2                                 passed
      node1                                 passed
    Verification of the hosts config file successful
    Interface information for node "node2"
     Name   IP Address      Subnet          Gateway         Def. Gateway    HW Address        MTU
     ------ --------------- --------------- --------------- --------------- ----------------- ------
     eth0   192.168.1.118   192.168.1.0     0.0.0.0         192.168.1.1     08:00:27:92:B5:44 1500
     eth1   10.10.10.12     10.0.0.0        0.0.0.0         192.168.1.1     08:00:27:BE:EA:49 1500
    Interface information for node "node1"
     Name   IP Address      Subnet          Gateway         Def. Gateway    HW Address        MTU
     ------ --------------- --------------- --------------- --------------- ----------------- ------
     eth0   192.168.1.117   192.168.1.0     0.0.0.0         192.168.1.1     08:00:27:8B:BA:2C 1500
     eth1   10.10.10.11     10.0.0.0        0.0.0.0         192.168.1.1     08:00:27:38:49:CF 1500
    Check: Node connectivity of subnet "192.168.1.0"
      Source                          Destination                     Connected?
      ------------------------------  ------------------------------  ----------------
      node2[192.168.1.118]            node1[192.168.1.117]            yes
    Result: Node connectivity passed for subnet "192.168.1.0" with node(s) node2,node1
    Check: TCP connectivity of subnet "192.168.1.0"
      Source                          Destination                     Connected?
      ------------------------------  ------------------------------  ----------------
      node1:192.168.1.117             node2:192.168.1.118             passed
    Result: TCP connectivity check passed for subnet "192.168.1.0"
    Check: Node connectivity of subnet "10.0.0.0"
      Source                          Destination                     Connected?
      ------------------------------  ------------------------------  ----------------
      node2[10.10.10.12]              node1[10.10.10.11]              yes
    Result: Node connectivity passed for subnet "10.0.0.0" with node(s) node2,node1
    Check: TCP connectivity of subnet "10.0.0.0"
      Source                          Destination                     Connected?
      ------------------------------  ------------------------------  ----------------
      node1:10.10.10.11               node2:10.10.10.12               passed
    Result: TCP connectivity check passed for subnet "10.0.0.0"
    Interfaces found on subnet "192.168.1.0" that are likely candidates for VIP are:
    node2 eth0:192.168.1.118
    node1 eth0:192.168.1.117
    Interfaces found on subnet "10.0.0.0" that are likely candidates for a private interconnect are:
    node2 eth1:10.10.10.12
    node1 eth1:10.10.10.11
    Checking subnet mask consistency...
    Subnet mask consistency check passed for subnet "192.168.1.0".
    Subnet mask consistency check passed for subnet "10.0.0.0".
    Subnet mask consistency check passed.
    Result: Node connectivity check passed
    Checking multicast communication...
    Checking subnet "192.168.1.0" for multicast communication with multicast group "230.0.1.0"...
    Check of subnet "192.168.1.0" for multicast communication with multicast group "230.0.1.0" passed.
    Checking subnet "10.0.0.0" for multicast communication with multicast group "230.0.1.0"...
    Check of subnet "10.0.0.0" for multicast communication with multicast group "230.0.1.0" passed.
    Check of multicast communication passed.
    Checking for multiple users with UID value 0
    Result: Check for multiple users with UID value 0 passed
    Check: Time zone consistency
    Result: Time zone consistency check passed
    Checking shared storage accessibility...
      Disk                                  Sharing Nodes (2 in count)
      ------------------------------------  ------------------------
      /dev/sdb                              node2 node1
      Disk                                  Sharing Nodes (2 in count)
      ------------------------------------  ------------------------
      /dev/sdc                              node2 node1
      Disk                                  Sharing Nodes (2 in count)
      ------------------------------------  ------------------------
      /dev/sdd                              node2 node1
      Disk                                  Sharing Nodes (2 in count)
      ------------------------------------  ------------------------
      /dev/sde                              node2 node1
    Shared storage check was successful on nodes "node2,node1"
    Checking integrity of name service switch configuration file "/etc/nsswitch.conf" ...
    Checking if "hosts" entry in file "/etc/nsswitch.conf" is consistent across nodes...
    Checking file "/etc/nsswitch.conf" to make sure that only one "hosts" entry is defined
    More than one "hosts" entry does not exist in any "/etc/nsswitch.conf" file
    All nodes have same "hosts" entry defined in file "/etc/nsswitch.conf"
    Check for integrity of name service switch configuration file "/etc/nsswitch.conf" passed
    Post-check for hardware and operating system setup was successful.
    [grid@node1 grid]$
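
The verbose output is long, and a single failed check is easy to miss when scrolling. One possible way to condense it is to count the `Result:` lines. This sketch assumes that any `Result:` line without the word "passed" should be treated as a failure, which is a simplification, and `cluvfy_summary` is a name chosen for this example.

```shell
# Summarize cluvfy "Result:" lines read from standard input.
# Prints "passed=N failed=M"; returns non-zero if any result did not pass.
# Assumption: a Result line that lacks the word "passed" counts as failed.
# (cluvfy_summary is an illustrative helper, not part of the Oracle tools.)
cluvfy_summary() {
    awk '/^[ \t]*Result:/ { if ($0 ~ /passed/) p++; else f++ }
         END { printf "passed=%d failed=%d\n", p, f; exit (f > 0) }'
}
```

It can be attached to the command used above: `./runcluvfy.sh stage -post hwos -n node1,node2 -verbose | cluvfy_summary`.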

III. Cluster Software Installation

1. Change to the installation directory and start the installer

    [grid@node1 ~]$ cd /u01/soft/grid/
    [grid@node1 grid]$ ls
    install  readme.html  response  rpm  runcluvfy.sh  runInstaller  sshsetup  stage  welcome.html
    [grid@node1 grid]$ ./runInstaller

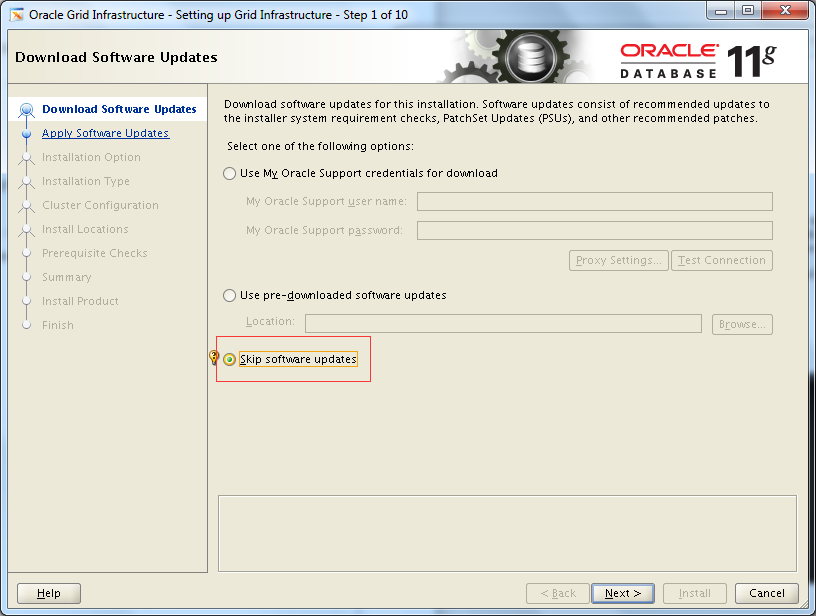

2. Download software updates

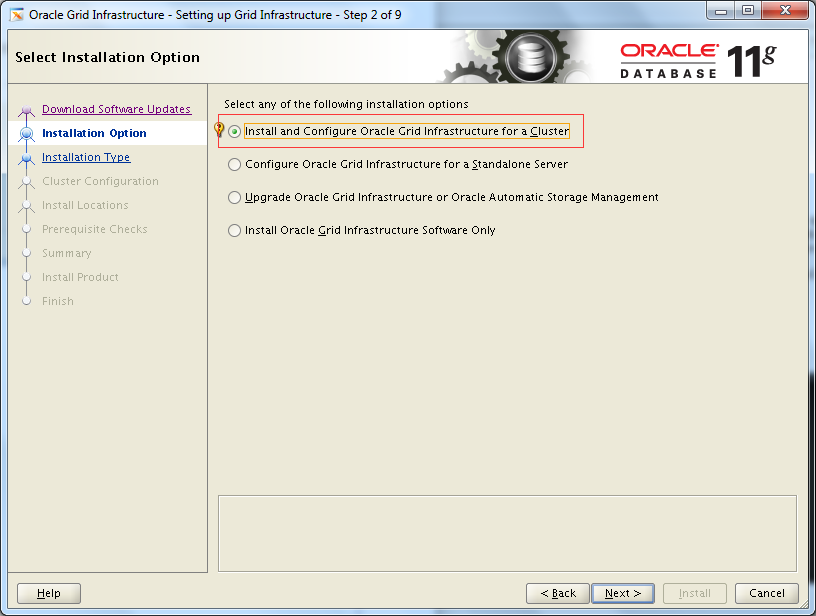

3. Select the installation option

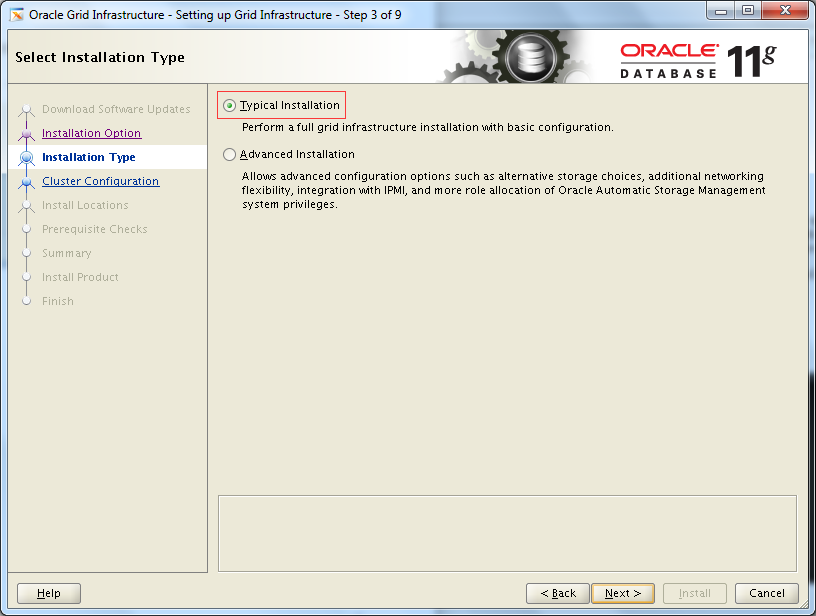

4. Select the installation type

5. Specify the cluster configuration

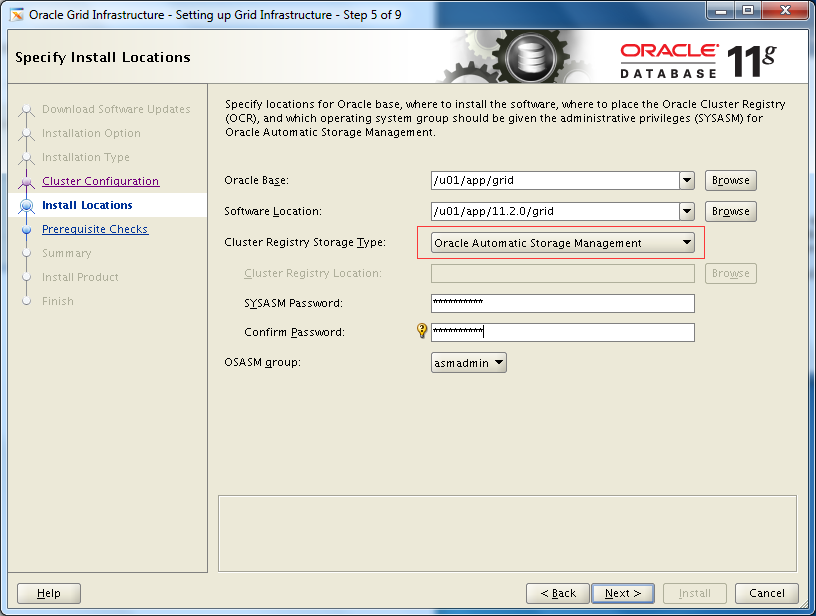

6. Specify the installation location

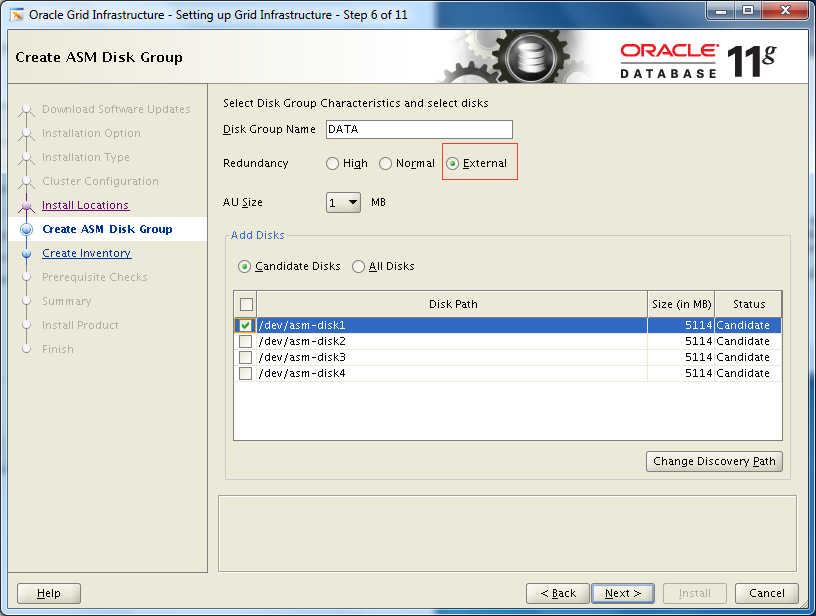

7. Create the ASM disk group

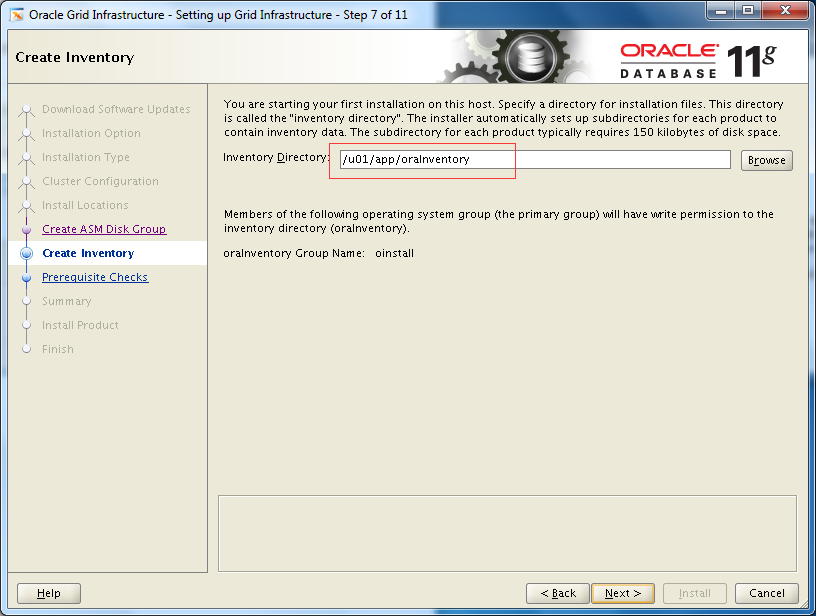

8. Create the inventory directory

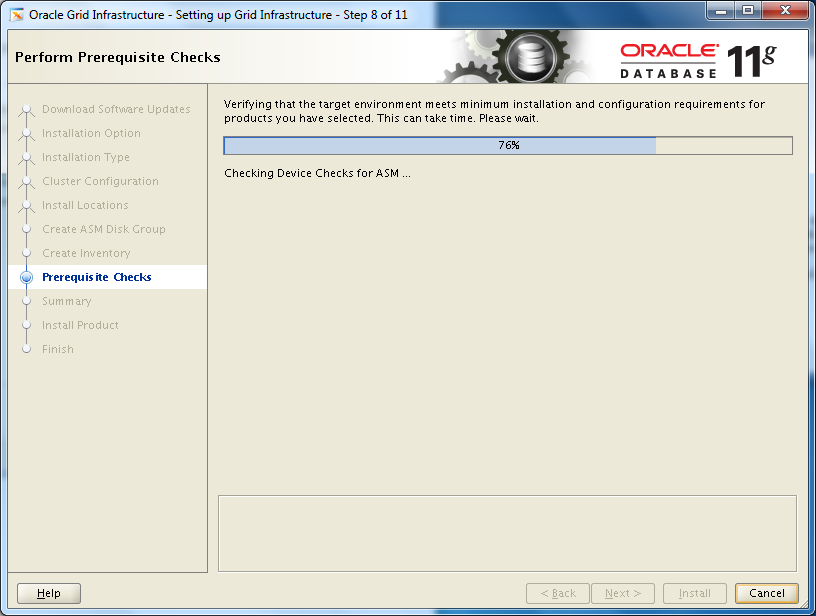

9. Perform the prerequisite checks

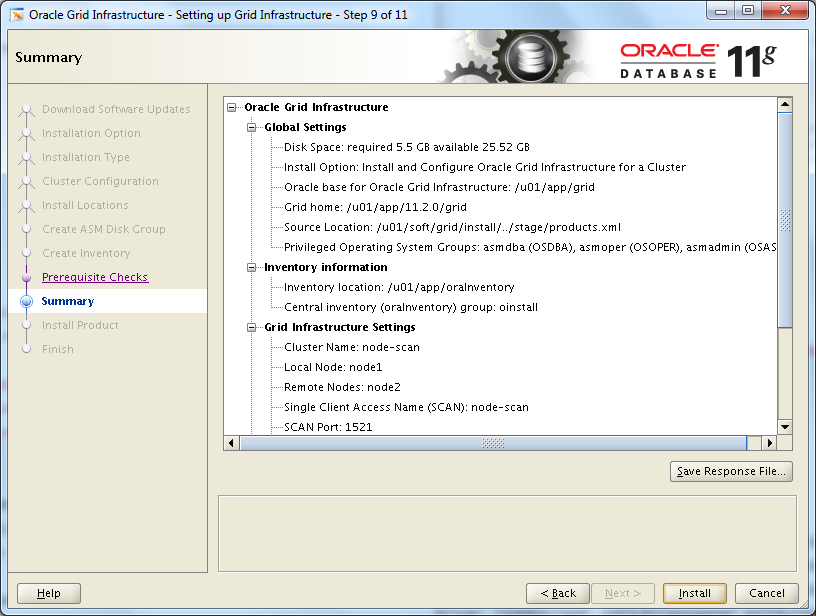

10. The summary page appears once the checks pass

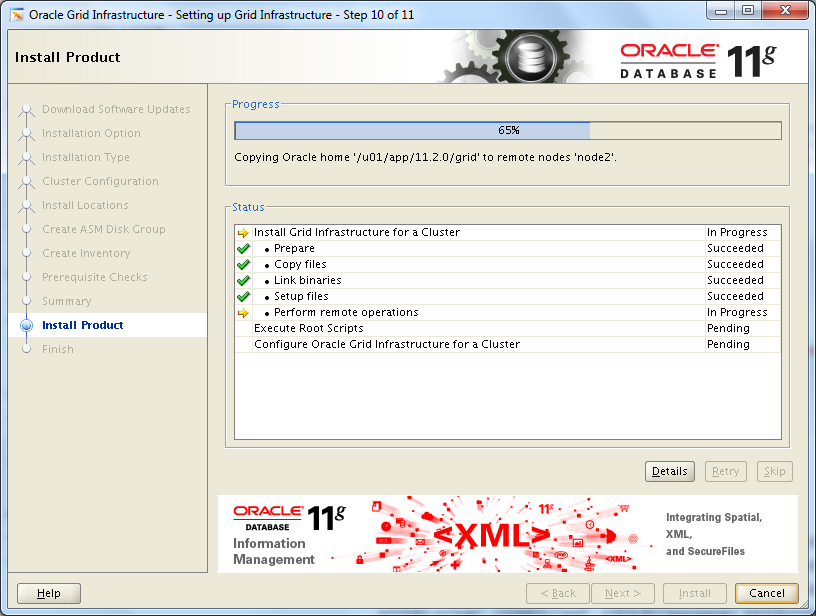

11. Install the product

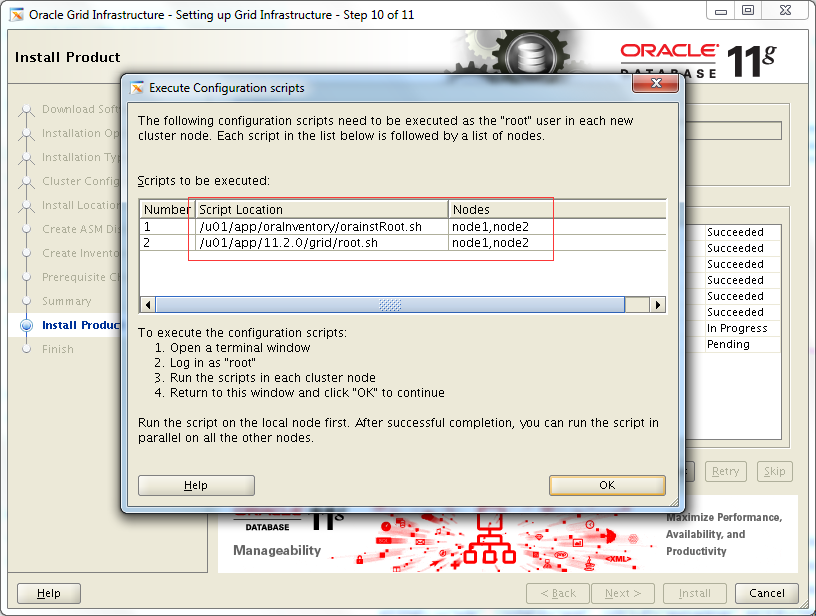

12. Run the root scripts

-- Run on node1

[root@node1 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@node1 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to upstart

CRS-2672: Attempting to start 'ora.mdnsd' on 'node1'

CRS-2676: Start of 'ora.mdnsd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on 'node1'

CRS-2676: Start of 'ora.gpnpd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on 'node1'

CRS-2672: Attempting to start 'ora.gipcd' on 'node1'

CRS-2676: Start of 'ora.cssdmonitor' on 'node1' succeeded

CRS-2676: Start of 'ora.gipcd' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on 'node1'

CRS-2672: Attempting to start 'ora.diskmon' on 'node1'

CRS-2676: Start of 'ora.diskmon' on 'node1' succeeded

CRS-2676: Start of 'ora.cssd' on 'node1' succeeded

ASM created and started successfully.

Disk Group DATA created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 04baecfa77fa4ff0bff4f26ac277ffe7.

Successfully replaced voting disk group with +DATA.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

    ##  STATE    File Universal Id                File Name Disk group
    --  -----    -----------------                --------- ---------
     1. ONLINE   04baecfa77fa4ff0bff4f26ac277ffe7 (/dev/asm-disk1) [DATA]

Located 1 voting disk(s).

CRS-2672: Attempting to start 'ora.asm' on 'node1'

CRS-2676: Start of 'ora.asm' on 'node1' succeeded

CRS-2672: Attempting to start 'ora.DATA.dg' on 'node1'

CRS-2676: Start of 'ora.DATA.dg' on 'node1' succeeded

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@node1 ~]#

-- Run on node2

[root@node2 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@node2 ~]# /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

Adding Clusterware entries to upstart

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node node1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@node2 ~]#

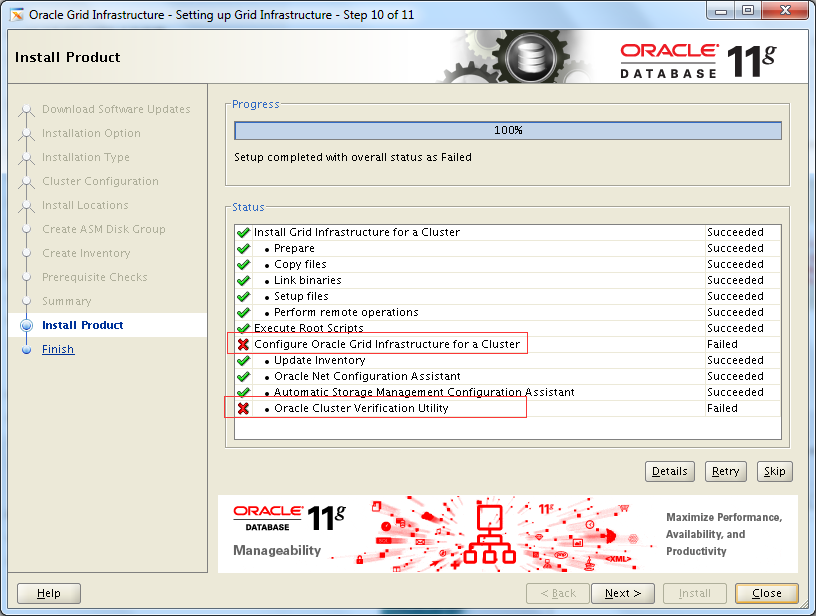

13. Check the status after the installation completes

View the error log. The SCAN name resolution errors below are expected in this setup, because node-scan is defined in /etc/hosts rather than in DNS; in a test environment like this one they can generally be ignored.

    [root@node1 ~]# tail -n 100 /u01/app/oraInventory/logs/installActions2018-04-16_09-55-13PM.log
    ... (output omitted) ...
    INFO: Checking existence of VIP node application (required)
    INFO: VIP node application check passed
    INFO: Checking existence of NETWORK node application (required)
    INFO: NETWORK node application check passed
    INFO: Checking existence of GSD node application (optional)
    INFO: GSD node application is offline on nodes "node2,node1"
    INFO: Checking existence of ONS node application (optional)
    INFO: ONS node application check passed
    INFO: Checking Single Client Access Name (SCAN)...
    INFO: Checking TCP connectivity to SCAN Listeners...
    INFO: TCP connectivity to SCAN Listeners exists on all cluster nodes
    INFO: Checking name resolution setup for "node-scan"...
    INFO: Checking integrity of name service switch configuration file "/etc/nsswitch.conf" ...
    INFO: All nodes have same "hosts" entry defined in file "/etc/nsswitch.conf"
    INFO: Check for integrity of name service switch configuration file "/etc/nsswitch.conf" passed
    INFO: ERROR:
    INFO: PRVG-1101 : SCAN name "node-scan" failed to resolve
    INFO: ERROR:
    INFO: PRVF-4657 : Name resolution setup check for "node-scan" (IP address: 192.168.1.123) failed
    INFO: ERROR:
    INFO: PRVF-4657 : Name resolution setup check for "node-scan" (IP address: 192.168.1.124) failed
    INFO: ERROR:
    INFO: PRVF-4657 : Name resolution setup check for "node-scan" (IP address: 192.168.1.125) failed
    INFO: ERROR:
    INFO: PRVF-4664 : Found inconsistent name resolution entries for SCAN name "node-scan"
    INFO: Verification of SCAN VIP and Listener setup failed
    INFO: Checking OLR integrity...
    INFO: Checking OLR config file...
    INFO: OLR config file check successful
    INFO: Checking OLR file attributes...
    INFO: OLR file check successful
    INFO: WARNING:
    INFO: This check does not verify the integrity of the OLR contents. Execute 'ocrcheck -local' as a privileged user to verify the contents of OLR.
    INFO: OLR integrity check passed
    INFO: User "grid" is not part of "root" group. Check passed
    INFO: Checking if Clusterware is installed on all nodes...
    INFO: Check of Clusterware install passed
    INFO: Checking if CTSS Resource is running on all nodes...
    INFO: CTSS resource check passed
    INFO: Querying CTSS for time offset on all nodes...
    INFO: Query of CTSS for time offset passed
    INFO: Check CTSS state started...
    ... (output omitted) ...

Check the cluster status:

    [grid@node1 grid]$ cd /u01/app/11.2.0/grid
    [grid@node1 grid]$ cd bin/
    [grid@node1 bin]$ ./crs_stat -t -v
    Name           Type           R/RA   F/FT   Target    State     Host
    ----------------------------------------------------------------------
    ora.DATA.dg    ora....up.type 0/5    0/     ONLINE    ONLINE    node1
    ora....N1.lsnr ora....er.type 0/5    0/0    ONLINE    ONLINE    node2
    ora....N2.lsnr ora....er.type 0/5    0/0    ONLINE    ONLINE    node1
    ora....N3.lsnr ora....er.type 0/5    0/0    ONLINE    ONLINE    node1
    ora.asm        ora.asm.type   0/5    0/     ONLINE    ONLINE    node1
    ora.cvu        ora.cvu.type   0/5    0/0    ONLINE    ONLINE    node1
    ora.gsd        ora.gsd.type   0/5    0/     OFFLINE   OFFLINE
    ora....network ora....rk.type 0/5    0/     ONLINE    ONLINE    node1
    ora....SM1.asm application    0/5    0/0    ONLINE    ONLINE    node1
    ora.node1.gsd  application    0/5    0/0    OFFLINE   OFFLINE
    ora.node1.ons  application    0/3    0/0    ONLINE    ONLINE    node1
    ora.node1.vip  ora....t1.type 0/0    0/0    ONLINE    ONLINE    node1
    ora....SM2.asm application    0/5    0/0    ONLINE    ONLINE    node2
    ora.node2.gsd  application    0/5    0/0    OFFLINE   OFFLINE
    ora.node2.ons  application    0/3    0/0    ONLINE    ONLINE    node2
    ora.node2.vip  ora....t1.type 0/0    0/0    ONLINE    ONLINE    node2
    ora.oc4j       ora.oc4j.type  0/1    0/2    ONLINE    ONLINE    node1
    ora.ons        ora.ons.type   0/3    0/     ONLINE    ONLINE    node1
    ora.scan1.vip  ora....ip.type 0/0    0/0    ONLINE    ONLINE    node2
    ora.scan2.vip  ora....ip.type 0/0    0/0    ONLINE    ONLINE    node1
    ora.scan3.vip  ora....ip.type 0/0    0/0    ONLINE    ONLINE    node1
    [grid@node1 bin]$
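
In the status table, a resource is only a concern when its Target is ONLINE but its State is not; the gsd entries are OFFLINE by design. A small filter along those lines might look like the sketch below. It assumes the `crs_stat -t -v` column layout shown above (Target in the fifth column, State in the sixth), and `crs_not_online` is a made-up helper name. `crs_stat` is deprecated in 11.2 in favor of `crsctl stat res -t`, whose layout differs, so this filter would not apply to that output as-is.

```shell
# List resources whose Target is ONLINE but whose State is something else.
# Reads "crs_stat -t -v" style output on standard input.
# Returns 1 if at least one such resource is found, 0 otherwise.
# (crs_not_online is an illustrative helper, not part of Clusterware.)
crs_not_online() {
    awk 'NF >= 6 && $5 == "ONLINE" && $6 != "ONLINE" { print $1; bad = 1 }
         END { exit (bad ? 1 : 0) }'
}
```

Usage would be `./crs_stat -t -v | crs_not_online`; with the output shown above it should print nothing.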

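14. Complete the environment variable configuration

Configure on both node1 and node2:

    [grid@node1 ~]$ vim .bash_profile    -- add the following lines
    ORACLE_HOME=/u01/app/11.2.0/grid
    export ORACLE_HOME
    PATH=$ORACLE_HOME/bin:$PATH
    export PATH
    [grid@node1 ~]$ . .bash_profile
    [grid@node1 ~]$ echo $ORACLE_HOME
    /u01/app/11.2.0/grid

At this point, the RAC cluster software installation is complete. The next part will demonstrate installing the database software and creating a database with DBCA.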
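
One side note on the profile change in step 14: each time `.bash_profile` is sourced again in the same session, `$ORACLE_HOME/bin` is prepended to PATH once more. This is harmless but clutters the variable. A guarded prepend is one way around it; the sketch below uses a hypothetical helper named `path_prepend` and is not part of the original steps.

```shell
# Prepend DIR to a colon-separated path value unless it is already present.
# Usage: PATH=$(path_prepend "$ORACLE_HOME/bin" "$PATH")
# (path_prepend is an illustrative helper, not a standard shell function.)
path_prepend() {
    case ":$2:" in
        *":$1:"*) printf '%s\n' "$2" ;;            # already there, keep as is
        *) printf '%s\n' "$1${2:+:$2}" ;;          # add in front, no stray colon
    esac
}
```

In the profile, the line `PATH=$ORACLE_HOME/bin:$PATH` could then be replaced by `PATH=$(path_prepend "$ORACLE_HOME/bin" "$PATH")`, with the function defined earlier in the same file.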