urllib is Python's built-in HTTP request library. It can be used for simple web scraping.

urllib has four modules:

urllib.request: the request module

urllib.error: the exception handling module

urllib.parse: the URL parsing module

urllib.robotparser: the robots.txt parsing module
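As a quick orientation, the sketch below touches each of the four modules once without sending any request. The example.com URLs and the robots.txt rules are made up for illustration.

```python
from urllib import error, parse, request, robotparser

# urllib.parse: turn a dict into a URL-encoded query string.
query = parse.urlencode({"word": "hello"})

# urllib.request: build a Request object (nothing is sent yet).
req = request.Request("http://httpbin.org/get?" + query)

# urllib.error: HTTPError is a subclass of URLError.
http_error_is_url_error = issubclass(error.HTTPError, error.URLError)

# urllib.robotparser: parse robots.txt rules supplied as lines of text.
# The rules and the example.com URLs are illustrative assumptions.
rules = robotparser.RobotFileParser()
rules.parse(["User-agent: *", "Disallow: /private/"])
index_allowed = rules.can_fetch("*", "http://example.com/index.html")
private_allowed = rules.can_fetch("*", "http://example.com/private/data")

print(query)
print(req.full_url)
print(http_error_is_url_error, index_allowed, private_allowed)
```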

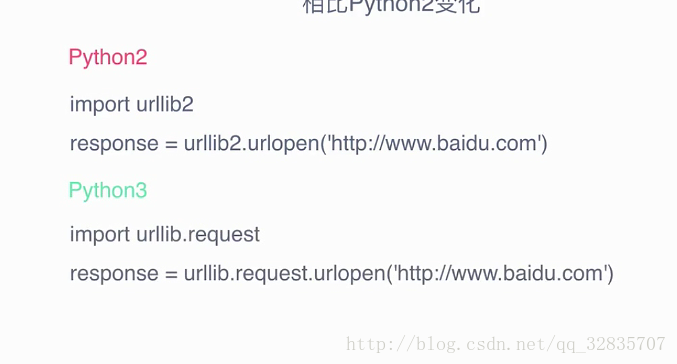

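These notes use Python 3, so it is worth noting what changed from Python 2: the functionality of Python 2's urllib and urllib2 was reorganized into the urllib package, so urllib2.urlopen() became urllib.request.urlopen().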

urlopen usage

import urllib.request

response = urllib.request.urlopen('http://www.baidu.com')

print(response.read().decode('utf-8'))

import urllib.parse

import urllib.request

data = bytes(urllib.parse.urlencode({'word': 'hello'}), encoding='utf8')

response = urllib.request.urlopen('http://httpbin.org/post', data=data)

print(response.read())

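httpbin.org is a site for testing HTTP requests. Passing data makes urlopen send a POST request instead of a GET, and the site answers with a JSON string.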
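To see how the data argument changes the request, the following sketch builds a Request object and inspects it without sending anything. Inspecting the object instead of calling urlopen is a choice made here so that the example runs offline.

```python
import urllib.parse
import urllib.request

# urlencode() returns a str; the data argument must be bytes, so encode it.
form = {"word": "hello"}
data = urllib.parse.urlencode(form).encode("utf-8")

# Without data the request method is GET; with data it becomes POST.
get_request = urllib.request.Request("http://httpbin.org/get")
post_request = urllib.request.Request("http://httpbin.org/post", data=data)

print(data)
print(get_request.get_method())
print(post_request.get_method())
```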

Timeout settings

import urllib.request

response = urllib.request.urlopen('http://httpbin.org/get', timeout=1)  # timeout is in seconds

print(response.read())

import socket

import urllib.request

import urllib.error

try:

    response = urllib.request.urlopen('http://httpbin.org/get', timeout=0.1)

except urllib.error.URLError as e:

    if isinstance(e.reason, socket.timeout):  # the request exceeded the 0.1 s timeout

        print('TIME OUT')
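The timeout check above can be wrapped in a small helper. This is a minimal sketch: fetch_or_none and its opener parameter are hypothetical names introduced here, and the extra except socket.timeout branch assumes that a timeout while reading the body may be raised directly rather than wrapped in URLError.

```python
import socket
import urllib.error
import urllib.request


def fetch_or_none(url, timeout=1.0, opener=urllib.request.urlopen):
    """Return the response body as bytes, or None if the request timed out.

    `opener` is any callable with the same (url, timeout=...) shape as
    urlopen; it is a parameter only so the helper can be tried offline.
    """
    try:
        with opener(url, timeout=timeout) as response:
            return response.read()
    except urllib.error.URLError as e:
        # A connection timeout arrives wrapped in URLError.reason.
        if isinstance(e.reason, socket.timeout):
            return None
        raise  # any other URL error is not a timeout, so re-raise it
    except socket.timeout:
        # A timeout while reading may surface unwrapped.
        return None


if __name__ == "__main__":
    body = fetch_or_none("http://httpbin.org/get", timeout=0.1)
    print("TIME OUT" if body is None else body)
```

Returning None keeps the caller's code short, at the cost of hiding which phase timed out.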

Response

Response type

import urllib.request

response = urllib.request.urlopen('https://www.python.org')

print(type(response))

<class 'http.client.HTTPResponse'>

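Status code and response headers

import urllib.request

response = urllib.request.urlopen('https://www.python.org')

print(response.status)  # HTTP status code, e.g. 200

print(response.getheaders())  # all headers as a list of (name, value) tuples

print(response.getheader('Server'))  # a single header, looked up by name

response.read().decode('utf-8')  # read() returns the response body as bytes, so decode() is needed to get a str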
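To experiment with status, getheader() and read() without depending on an external site, one option is to serve a tiny page from a local thread. Everything about the server below (HelloHandler, the "hello" body, the port chosen by the OS) is an assumption made for this sketch, and the empty ProxyHandler is there only so that proxy environment variables do not intercept the loopback request.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler that answers every GET with a short text body."""

    def do_GET(self):
        body = "hello".encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example output quiet


# Port 0 asks the OS for any free port.
server = HTTPServer(("127.0.0.1", 0), HelloHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = "http://127.0.0.1:%d/" % server.server_port

# An opener with no proxies, so the request goes straight to the local server.
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
with opener.open(url, timeout=5) as response:
    status = response.status
    content_type = response.getheader("Content-Type")
    # Use the charset declared in the headers, falling back to UTF-8.
    charset = response.headers.get_content_charset() or "utf-8"
    text = response.read().decode(charset)

server.shutdown()
server.server_close()

print(status, content_type, text)
```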

Sending a Request object:

import urllib.request

request = urllib.request.Request('https://python.org')

response = urllib.request.urlopen(request)

print(response.read().decode('utf-8'))

Constructing a Request object lets you add headers and extra data:

from urllib import request, parse

url = 'http://httpbin.org/post'

headers = {

    'User-Agent': '',

    'Host': 'httpbin.org'

}

form_data = {

    'name': 'Germey'

}

data = bytes(parse.urlencode(form_data), encoding='utf8')

req = request.Request(url=url, data=data, headers=headers, method='POST')

response = request.urlopen(req)

print(response.read().decode('utf-8'))

You can also call the Request object's add_header() method repeatedly to add headers one at a time.
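A minimal sketch of the add_header() approach follows. The User-Agent value is a made-up placeholder, and the request is only built and inspected, not sent. One detail to be aware of: in CPython's implementation, Request stores header names using str.capitalize(), so "User-Agent" is looked up as "User-agent".

```python
from urllib import parse, request

data = parse.urlencode({"name": "Germey"}).encode("utf-8")
req = request.Request("http://httpbin.org/post", data=data, method="POST")

# Add headers one at a time. The User-Agent string is a hypothetical placeholder.
req.add_header("User-Agent", "example-client/0.1")
req.add_header("Host", "httpbin.org")

# Header names are stored capitalized, so query them as "User-agent".
user_agent = req.get_header("User-agent")
print(user_agent)
print(req.header_items())
```

Passing req to request.urlopen() would then send the POST with these headers.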

Handler

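Handlers are auxiliary tools that perform extra operations for us, such as FTP, proxies, and cookies.

Proxy: routing requests through a proxy hides your real IP address, which helps avoid being blocked.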

import urllib.request

proxy_handler = urllib.request.ProxyHandler({

    'http': 'http://127.0.0.1:9743',

    'https': 'https://127.0.0.1:9743'

})

opener = urllib.request.build_opener(proxy_handler)

response = opener.open('http://www.baidu.com')  # requires a proxy listening on 127.0.0.1:9743

print(response.read())

Cookies: used to maintain login state

import http.cookiejar, urllib.request

cookie = http.cookiejar.CookieJar()

handler = urllib.request.HTTPCookieProcessor(cookie)

opener = urllib.request.build_opener(handler)

response = opener.open('http://www.baidu.com')

for item in cookie:

    print(item.name + "=" + item.value)

import http.cookiejar,urllib.request

filename="cookie.txt"

cookie=http.cookiejar.MozillaCookieJar(filename)

handler=urllib.request.HTTPCookieProcessor(cookie)

opener=urllib.request.build_opener(handler)

response=opener.open('http://www.baidu.com')

cookie.save(ignore_discard=True,ignore_expires=True)
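A saved cookie file can be read back with load(). The sketch below is self-contained: instead of relying on a cookie.txt produced by a real request, it writes a stand-in file in the Netscape format that MozillaCookieJar reads. The domain, cookie name, and value are invented for the example.

```python
import http.cookiejar
import os
import tempfile

# Stand-in for a saved cookie.txt: one session cookie in Netscape format.
# Fields are tab-separated: domain, subdomain flag, path, secure flag,
# expiry (empty means a session cookie), name, value.
cookie_file_text = (
    "# Netscape HTTP Cookie File\n"
    ".example.com\tTRUE\t/\tFALSE\t\tsession\tabc123\n"
)

with tempfile.TemporaryDirectory() as tmp_dir:
    filename = os.path.join(tmp_dir, "cookie.txt")
    with open(filename, "w") as f:
        f.write(cookie_file_text)

    jar = http.cookiejar.MozillaCookieJar()
    # Session cookies are skipped on load unless ignore_discard=True.
    jar.load(filename, ignore_discard=True, ignore_expires=True)

loaded = {item.name: item.value for item in jar}
print(loaded)
```

The loaded jar can then be passed to urllib.request.HTTPCookieProcessor and build_opener, exactly as in the snippets above, so that later requests send the stored cookies.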

Exception handling

from urllib import request, error

try:

    response = request.urlopen('http://www.baidu.com/dd')

except error.URLError as e:

    print(e.reason)

from urllib import request, error

try:

    response = request.urlopen('http://www.baidu.com/dd')

except error.HTTPError as e:  # HTTPError is a subclass of URLError, so catch it first

    print(e.reason, e.code, e.headers, sep='\n')

except error.URLError as e:

    print(e.reason)

else:

    print('Request Successfully')
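The order of the except clauses matters because HTTPError is a subclass of URLError. The sketch below shows this offline by raising hand-built exceptions; describe is a hypothetical helper, and the 404 and "no route to host" values are made up.

```python
from urllib import error


def describe(exc):
    """Classify an exception using the same clause order as a real request handler."""
    try:
        raise exc
    except error.HTTPError as e:
        # The server answered, but with an error status code.
        return "HTTP error %d: %s" % (e.code, e.reason)
    except error.URLError as e:
        # No HTTP response at all (DNS failure, refused connection, ...).
        return "URL error: %s" % e.reason


# Hand-built exceptions stand in for failures of a real request.
not_found = error.HTTPError("http://www.baidu.com/dd", 404, "Not Found", None, None)
unreachable = error.URLError("no route to host")

print(describe(not_found))
print(describe(unreachable))
```

If the URLError clause came first, it would also catch the HTTPError and the status code branch would never run.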

import socket

import urllib.request

import urllib.error

try:

    response = urllib.request.urlopen('http://www.baidu.com', timeout=0.01)

except urllib.error.URLError as e:

    print(type(e.reason))

    if isinstance(e.reason, socket.timeout):

        print('TIME OUT')

URL parsing

from urllib.parse import urlparse

result=urlparse('http://www.baidu.com/index.html;uesr?idk=5#comment')

print(type(result),result)

<class 'urllib.parse.ParseResult'> ParseResult(scheme='http', netloc='www.baidu.com', path='/index.html', params='uesr', query='idk=5', fragment='comment')
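The ParseResult shown above is a named tuple, so its parts can be read by attribute or by index. The following sketch uses the same URL and adds two related helpers from urllib.parse, parse_qs and urlunparse, which are not covered in the text above.

```python
from urllib.parse import parse_qs, urlparse, urlunparse

url = "http://www.baidu.com/index.html;uesr?idk=5#comment"
result = urlparse(url)

# Fields are available by attribute name or by tuple index.
scheme_by_name = result.scheme
scheme_by_index = result[0]

# parse_qs turns the query string into a dict of lists.
query = parse_qs(result.query)

# urlunparse reassembles the six parts into a URL string.
rebuilt = urlunparse(result)

print(scheme_by_name, result.netloc, result.path)
print(query)
print(rebuilt == url)
```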