Straight to the code. All the functions come from the lecture slides, so there isn't much explanation; see the comments for details.

```python
from sklearn import datasets
from sklearn import model_selection
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC
from sklearn.ensemble import RandomForestClassifier
from sklearn import metrics
import matplotlib.pyplot as plt

# Old API (the sklearn.cross_validation module was removed in scikit-learn 0.20)
# from sklearn import cross_validation

# Per-fold scores for each algorithm
NB_accuracy = []
NB_f1_score = []
NB_auc_roc = []
SVM_accuracy = []
SVM_f1_score = []
SVM_auc_roc = []
RAMF_accuracy = []
RAMF_f1_score = []
RAMF_auc_roc = []


def evaluate(y_test, pred):
    # Compute and print accuracy, F1 score and AUC ROC for one fold
    acc = metrics.accuracy_score(y_test, pred)
    print('\tAccuracy:', acc)
    f1 = metrics.f1_score(y_test, pred)
    print('\tF1-score:', f1)
    auc = metrics.roc_auc_score(y_test, pred)
    print('\tAUC ROC:', auc)
    return acc, f1, auc


# Train the algorithm

# Naive Bayes
def NaiveBayesTrain(X_train, y_train, X_test):
    clf = GaussianNB()
    clf.fit(X_train, y_train)
    pred = clf.predict(X_test)
    return pred


# SVM
def SVMTrain(X_train, y_train, X_test):
    clf = SVC(C=1e-01, kernel='rbf', gamma=0.1)
    clf.fit(X_train, y_train)
    pred = clf.predict(X_test)
    return pred


# Random Forest
def RandomForestTrain(X_train, y_train, X_test):
    clf = RandomForestClassifier(n_estimators=10)
    clf.fit(X_train, y_train)
    pred = clf.predict(X_test)
    return pred


# Create a classification dataset (n samples >= 1000, n features >= 10)
dataset = datasets.make_classification(
    n_samples=1000, n_features=10, n_informative=2, n_redundant=2,
    n_repeated=0, n_classes=2)
X = dataset[0]
y = dataset[1]

# Split the dataset using 10-fold cross validation
# New API
index = 1
kf = model_selection.KFold(n_splits=10)
for train_index, test_index in kf.split(X):
    X_train, X_test = X[train_index], X[test_index]
    y_train, y_test = y[train_index], y[test_index]

    # Evaluate the cross-validated performance
    print('train', index)
    index += 1

    print('Naive Bayes:')
    acc, f1, auc = evaluate(y_test, NaiveBayesTrain(X_train, y_train, X_test))
    NB_accuracy.append(acc)
    NB_f1_score.append(f1)
    NB_auc_roc.append(auc)

    print('SVM:')
    acc, f1, auc = evaluate(y_test, SVMTrain(X_train, y_train, X_test))
    SVM_accuracy.append(acc)
    SVM_f1_score.append(f1)
    SVM_auc_roc.append(auc)

    print('Random Forest:')
    acc, f1, auc = evaluate(
        y_test, RandomForestTrain(X_train, y_train, X_test))
    RAMF_accuracy.append(acc)
    RAMF_f1_score.append(f1)
    RAMF_auc_roc.append(auc)

    print()

# Old API
# kf = cross_validation.KFold(len(X), n_folds=10)
# for train_index, test_index in kf:
#     X_train, X_test = X[train_index], X[test_index]
#     y_train, y_test = y[train_index], y[test_index]
# NB_accuracy = []
# NB_f1_score = []
# NB_auc_roc = []
# SVM_accuracy = []
# SVM_f1_score = []
# SVM_auc_roc = []
# RAMF_accuracy = []
# RAMF_f1_score = []
# RAMF_auc_roc = []
```
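`evaluate` passes the hard 0/1 labels from `predict` to `metrics.roc_auc_score`. With only two distinct prediction values, the ROC curve has a single threshold. The resulting area is (TPR + TNR) / 2, which is balanced accuracy, so the AUC values printed here stay very close to the accuracy. AUC is more commonly computed from a continuous score. The sketch below compares both on a single train/test split. The split, `random_state=0` and the `results` dictionary are illustrative assumptions and are not part of the original program.

```python
from sklearn import datasets, metrics, model_selection
from sklearn.ensemble import RandomForestClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Same dataset settings as the original; random_state is an added assumption
X, y = datasets.make_classification(
    n_samples=1000, n_features=10, n_informative=2, n_redundant=2,
    n_repeated=0, n_classes=2, random_state=0)
X_train, X_test, y_train, y_test = model_selection.train_test_split(
    X, y, test_size=0.1, random_state=0)

models = {
    'Naive Bayes': GaussianNB(),
    'SVM': SVC(C=1e-01, kernel='rbf', gamma=0.1),
    'Random Forest': RandomForestClassifier(n_estimators=10, random_state=0),
}

results = {}
for name, clf in models.items():
    clf.fit(X_train, y_train)
    pred = clf.predict(X_test)
    # Continuous score for class 1: SVC without probability=True only
    # offers decision_function; the other two models offer predict_proba
    if isinstance(clf, SVC):
        scores = clf.decision_function(X_test)
    else:
        scores = clf.predict_proba(X_test)[:, 1]
    results[name] = (
        metrics.roc_auc_score(y_test, pred),            # AUC from hard labels
        metrics.roc_auc_score(y_test, scores),          # AUC from scores
        metrics.balanced_accuracy_score(y_test, pred),  # same value as the first
    )
    print(name, 'AUC(labels)=%.4f  AUC(scores)=%.4f  balanced acc=%.4f'
          % results[name])
```

To report a score-based AUC in the original loop, the training functions would have to return these scores alongside the predicted labels.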

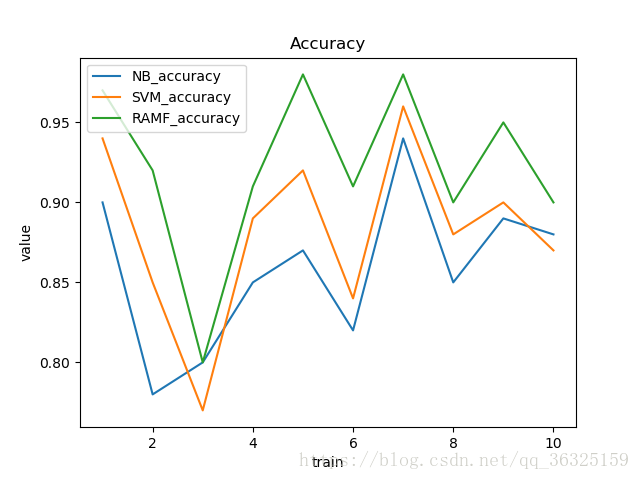

x = [i + 1 for i in range(len(NB_accuracy))]

plt.figure()

plt.plot(x, NB_accuracy, label='NB_accuracy')

plt.plot(x, SVM_accuracy, label='SVM_accuracy')

plt.plot(x, RAMF_accuracy, label='RAMF_accuracy')

plt.xlabel('train')

plt.ylabel('value')

plt.title('Accuracy')

plt.legend(loc='upper left')

plt.savefig('Accuracy.png')

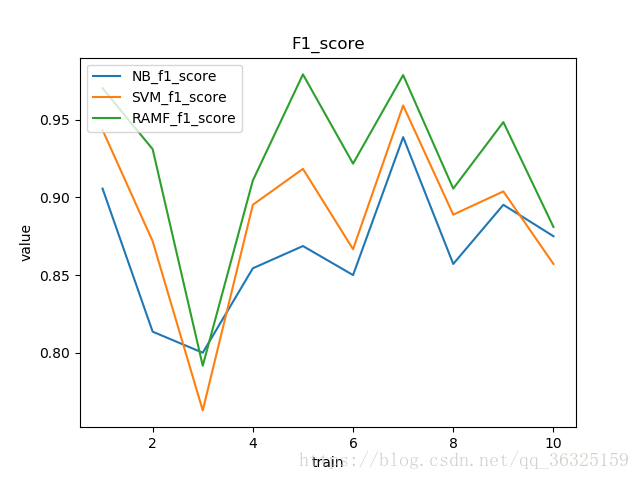

plt.figure()

plt.plot(x, NB_f1_score, label='NB_f1_score')

plt.plot(x, SVM_f1_score, label='SVM_f1_score')

plt.plot(x, RAMF_f1_score, label='RAMF_f1_score')

plt.xlabel('train')

plt.ylabel('value')

plt.title('F1_score')

plt.legend(loc='upper left')

plt.savefig('F1_score.png')

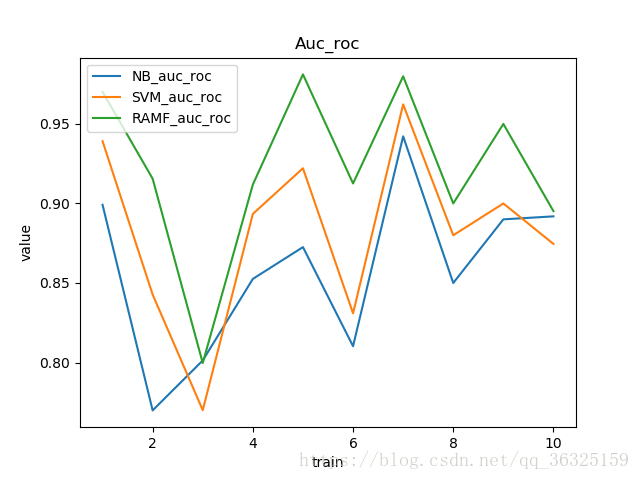

plt.figure()

plt.plot(x, NB_auc_roc, label='NB_auc_roc')

plt.plot(x, SVM_auc_roc, label='SVM_auc_roc')

plt.plot(x, RAMF_auc_roc, label='RAMF_auc_roc')

plt.xlabel('train')

plt.ylabel('value')

plt.title('Auc_roc')

plt.legend(loc='upper left')

plt.savefig('Auc_roc.png')注意我的程序会打印出每次训练后每个算法的accuracy,f1 score,auc roc,并且最后会画图作比较并且图片自动保存在当前目录,结果如下:

train 1

Naive Bayes:

Accuracy: 0.9

F1-score: 0.9056603773584905

AUC ROC: 0.8991596638655462

SVM:

Accuracy: 0.94

F1-score: 0.9433962264150944

AUC ROC: 0.9391756702681072

Random Forest:

Accuracy: 0.97

F1-score: 0.9702970297029702

AUC ROC: 0.9701880752300921

train 2

Naive Bayes:

Accuracy: 0.78

F1-score: 0.8135593220338982

AUC ROC: 0.7698898408812729

SVM:

Accuracy: 0.85

F1-score: 0.8717948717948718

AUC ROC: 0.8427172582619339

Random Forest:

Accuracy: 0.92

F1-score: 0.9310344827586206

AUC ROC: 0.9155446756425948

train 3

Naive Bayes:

Accuracy: 0.8

F1-score: 0.8

AUC ROC: 0.8012820512820513

SVM:

Accuracy: 0.77

F1-score: 0.7628865979381444

AUC ROC: 0.7700320512820513

Random Forest:

Accuracy: 0.8

F1-score: 0.7916666666666666

AUC ROC: 0.7996794871794872

train 4

Naive Bayes:

Accuracy: 0.85

F1-score: 0.854368932038835

AUC ROC: 0.8525641025641024

SVM:

Accuracy: 0.89

F1-score: 0.8952380952380952

AUC ROC: 0.8934294871794872

Random Forest:

Accuracy: 0.91

F1-score: 0.910891089108911

AUC ROC: 0.9118589743589745

train 5

Naive Bayes:

Accuracy: 0.87

F1-score: 0.8686868686868686

AUC ROC: 0.8725411481332798

SVM:

Accuracy: 0.92

F1-score: 0.9183673469387754

AUC ROC: 0.9221196306704136

Random Forest:

Accuracy: 0.98

F1-score: 0.9791666666666666

AUC ROC: 0.9811320754716981

train 6

Naive Bayes:

Accuracy: 0.82

F1-score: 0.85

AUC ROC: 0.8102521703183133

SVM:

Accuracy: 0.84

F1-score: 0.8666666666666666

AUC ROC: 0.8309218685407193

Random Forest:

Accuracy: 0.91

F1-score: 0.9217391304347826

AUC ROC: 0.9125671765192229

train 7

Naive Bayes:

Accuracy: 0.94

F1-score: 0.9387755102040817

AUC ROC: 0.9421918908069049

SVM:

Accuracy: 0.96

F1-score: 0.9591836734693878

AUC ROC: 0.9622641509433962

Random Forest:

Accuracy: 0.98

F1-score: 0.9787234042553191

AUC ROC: 0.9799277398635086

train 8

Naive Bayes:

Accuracy: 0.85

F1-score: 0.8571428571428572

AUC ROC: 0.85

SVM:

Accuracy: 0.88

F1-score: 0.888888888888889

AUC ROC: 0.88

Random Forest:

Accuracy: 0.9

F1-score: 0.9056603773584904

AUC ROC: 0.8999999999999999

train 9

Naive Bayes:

Accuracy: 0.89

F1-score: 0.8952380952380952

AUC ROC: 0.89

SVM:

Accuracy: 0.9

F1-score: 0.9038461538461539

AUC ROC: 0.8999999999999999

Random Forest:

Accuracy: 0.95

F1-score: 0.9484536082474226

AUC ROC: 0.95

train 10

Naive Bayes:

Accuracy: 0.88

F1-score: 0.875

AUC ROC: 0.8918808649530804

SVM:

Accuracy: 0.87

F1-score: 0.8571428571428572

AUC ROC: 0.8745410036719705

Random Forest:

Accuracy: 0.9

F1-score: 0.8809523809523809

AUC ROC: 0.8951448388412893

[Finished in 2.6s]对结果画图:

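Judging from the three figures above, Random Forest performs best on all three metrics (accuracy, F1 score and AUC ROC), followed by SVM and then Naive Bayes. This ordering holds in most folds but not in all of them. In fold 3, for example, Naive Bayes matches Random Forest on accuracy and slightly beats it on F1 score and AUC ROC. In fold 10, Naive Bayes beats SVM on all three metrics.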
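The comparison above is made by reading per-fold curves. A more compact summary is the mean and standard deviation of each metric over the 10 folds, which also shows how large the gaps are relative to the fold-to-fold spread. The sketch below assumes `model_selection.cross_validate` with the built-in `'accuracy'`, `'f1'` and `'roc_auc'` scorers. Note that the `'roc_auc'` scorer uses continuous scores (`decision_function` or `predict_proba`) rather than hard labels, so its AUC will differ from what `evaluate` prints. `random_state=0` and the `summary` dictionary are added assumptions, not part of the original program.

```python
from sklearn import datasets, model_selection
from sklearn.ensemble import RandomForestClassifier
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Same dataset settings as the original; random_state is an added assumption
X, y = datasets.make_classification(
    n_samples=1000, n_features=10, n_informative=2, n_redundant=2,
    n_repeated=0, n_classes=2, random_state=0)

models = {
    'Naive Bayes': GaussianNB(),
    'SVM': SVC(C=1e-01, kernel='rbf', gamma=0.1),
    'Random Forest': RandomForestClassifier(n_estimators=10, random_state=0),
}
metric_names = ('accuracy', 'f1', 'roc_auc')
kf = model_selection.KFold(n_splits=10)  # same 10-fold split as the original

summary = {}
for name, clf in models.items():
    # cross_validate fits a fresh clone per fold and returns arrays of
    # per-fold scores under keys such as 'test_accuracy'
    cv = model_selection.cross_validate(clf, X, y, cv=kf,
                                        scoring=list(metric_names))
    summary[name] = {m: (cv['test_' + m].mean(), cv['test_' + m].std())
                     for m in metric_names}
    print(name)
    for m, (mean, std) in summary[name].items():
        print('\t%s: %.3f +/- %.3f' % (m, mean, std))
```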