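In my four years in analytics, more than 80% of the models I have built have been classification models, and only 15-20% regression models. These ratios vary, but they hold fairly well across the industry. Classification models dominate because most analytical problems involve making a decision: will a customer churn, should we target a customer with a digital campaign, does a customer have high potential, and so on. These analyses are highly insightful and tie directly to an implementation roadmap. In this article, we will discuss another widely used classification technique, k-nearest neighbors (KNN). The focus is on how the algorithm works and how its input parameters affect the output/prediction.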

Table of Contents

- When do we use the KNN algorithm?
- How does the KNN algorithm work?
- How do we choose the factor K?
- Breaking it down: pseudocode of KNN
- Python implementation from scratch
- Comparison with scikit-learn

When do we use the KNN algorithm?

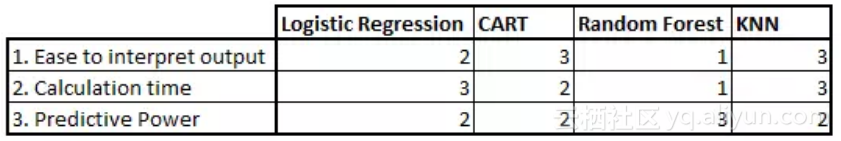

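The KNN algorithm can be used for both classification and regression problems. In industry, however, it is mostly used for classification. To evaluate any technique, we generally look at three aspects:

- Ease of interpreting the output
- Calculation time
- Predictive power

Let us take a few examples to see where KNN stands:

KNN performs fairly evenly across all three aspects. It is commonly used because it is easy to interpret and has a low calculation time.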

How does the KNN algorithm work?

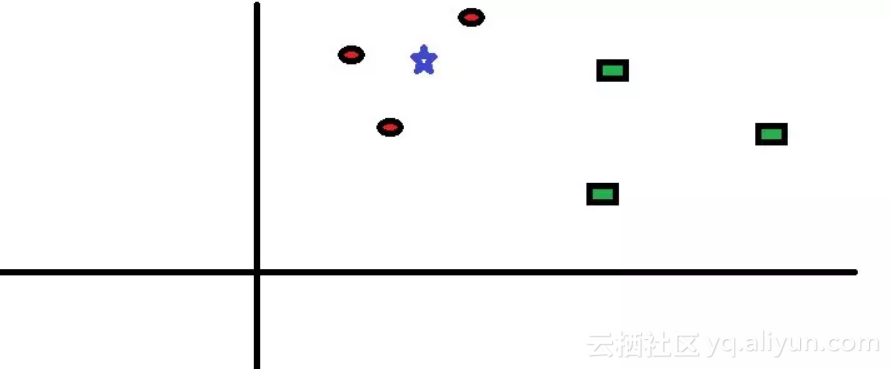

Let us take a simple case to understand the algorithm. The figure below shows a spread of red circles and green squares:

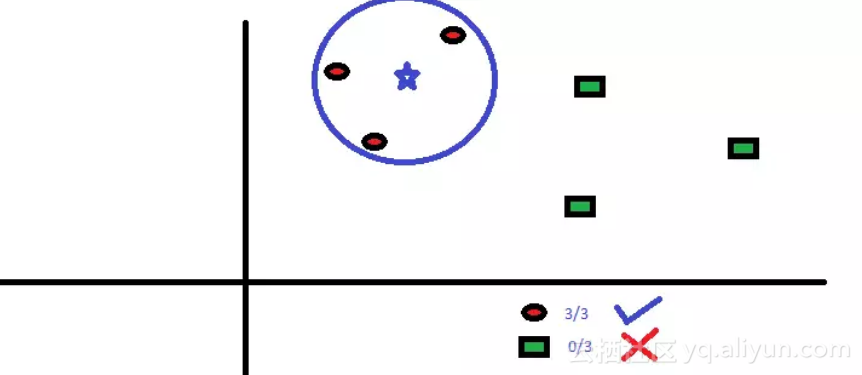

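Now we want to predict the class of the blue star. KNN always assigns one of the existing classes, so the blue star will be labeled either a red circle or a green square. The "K" in KNN is the number of nearest neighbors we take a vote from. Say K = 3. We then draw a circle centered on the blue star that is just big enough to enclose exactly three data points on the plane. See the following figure:

The three points closest to the blue star are all red circles. Hence, we can say with a good level of confidence that the blue star belongs to the red-circle class. The choice of the parameter K is critical in this algorithm. Next, we look at which factors to consider in finding the best K.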
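The K = 3 vote described above can be sketched in a few lines of Python. The coordinates, labels, and the helper name `classify` are made up for illustration; they are not taken from the figure.

```python
import math
from collections import Counter

# Hypothetical 2-D training points: (x, y, label)
training_points = [
    (1.0, 1.0, "red circle"),
    (1.5, 2.0, "red circle"),
    (2.0, 1.5, "red circle"),
    (5.0, 5.0, "green square"),
    (5.5, 4.5, "green square"),
    (6.0, 5.5, "green square"),
]
blue_star = (2.0, 2.0)  # the point we want to classify


def classify(query, points, k):
    """Return the majority label among the k points closest to query."""
    # Sort all training points by Euclidean distance to the query point
    by_distance = sorted(points, key=lambda p: math.dist(query, p[:2]))
    # Count the labels of the k nearest points and pick the most common one
    votes = Counter(label for _, _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


prediction = classify(blue_star, training_points, k=3)
print(prediction)
```

With these made-up points, the three nearest neighbors of the blue star are all red circles, so the vote is unanimous.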

How do we choose the factor K?

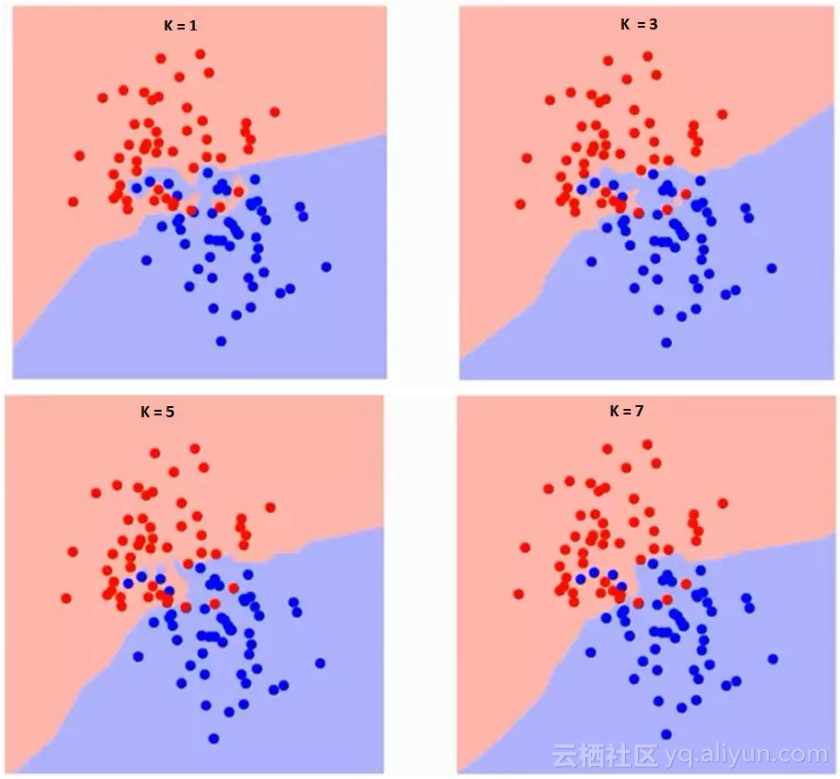

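First, let us understand what exactly K influences in the algorithm. In the previous example, suppose there are only six training observations in total; for a given K, we can draw the boundary between the two classes. Let us look at how the boundaries separating the two classes differ for different values of K.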

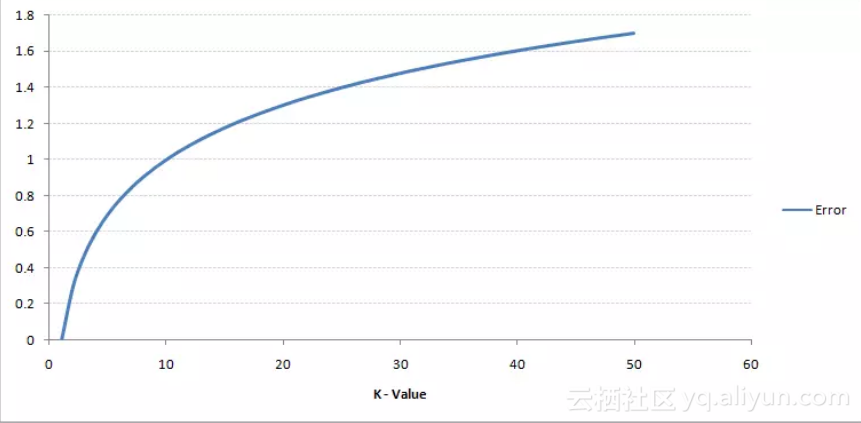

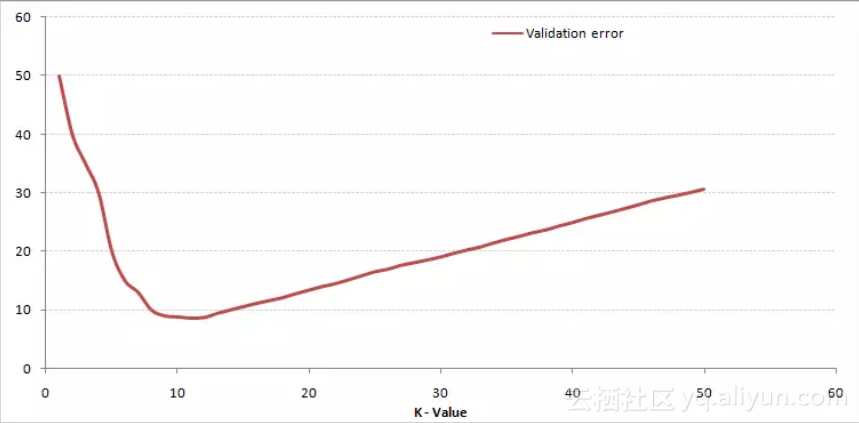

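Looking carefully, you can see that the boundary becomes smoother as K increases. As K grows toward infinity, the whole region finally becomes all blue or all red, depending on which class is in the majority. The training error rate and the validation error rate are the two quantities we need to obtain for different values of K. The curve of the training error rate against K is as follows:

As the curve shows, the error rate at K = 1 is always zero for the training sample. This is because the point closest to any training data point is the point itself, so the prediction at K = 1 is always accurate (as long as no two identical points carry different labels). If the validation error curve had the same shape, we would simply choose K = 1. The validation error curve against K is as follows:

Clearly, at K = 1 we were overfitting the boundaries. The validation error therefore decreases at first, reaches a minimum, and then rises again as K increases. To get the optimal value of K, split the initial dataset into a training set and a validation set, plot the validation error curve, and use the K at its minimum for all predictions.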
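As a rough illustration of this procedure, the sketch below computes both error rates for a range of K values and picks the K with the lowest validation error. Everything in it is an assumption made for the example: the synthetic two-class data, the 80/40 split, and the helper names `make_points`, `predict`, and `error_rate`.

```python
import math
import random
from collections import Counter

random.seed(0)  # fixed seed so the sketch is reproducible


def make_points(n, center, label):
    """Draw n noisy 2-D points around center, each tagged with label."""
    return [((random.gauss(center[0], 1.5), random.gauss(center[1], 1.5)), label)
            for _ in range(n)]


# Two overlapping synthetic classes (hypothetical data)
data = make_points(60, (0.0, 0.0), "red") + make_points(60, (2.5, 2.5), "blue")
random.shuffle(data)
train, valid = data[:80], data[80:]  # training set and validation set


def predict(query, train, k):
    """Majority vote among the k training points nearest to query."""
    nearest = sorted(train, key=lambda item: math.dist(query, item[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def error_rate(points, train, k):
    """Fraction of points whose predicted label differs from the true label."""
    wrong = sum(predict(x, train, k) != y for x, y in points)
    return wrong / len(points)


candidate_ks = range(1, 32, 2)  # odd values avoid ties between two classes
train_error = {k: error_rate(train, train, k) for k in candidate_ks}
valid_error = {k: error_rate(valid, train, k) for k in candidate_ks}
best_k = min(candidate_ks, key=lambda k: valid_error[k])

for k in candidate_ks:
    print(f"K={k:2d}  train error={train_error[k]:.3f}  "
          f"validation error={valid_error[k]:.3f}")
print("K with the lowest validation error:", best_k)
```

On real data you would replace the synthetic points with your own split; plotting the two dictionaries against K gives the curves discussed above.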

Breaking it down: pseudocode of KNN

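We can implement a KNN model by following the steps below:

1. Load the data.
2. Initialise the value of K.
3. To get the predicted class, iterate over the training data points. STEPS:
   - 3.1 Calculate the distance between the test data and each row of the training data. Here we use Euclidean distance, the most popular metric. Other metrics include Chebyshev distance and cosine similarity.
   - 3.2 Sort the calculated distances in ascending order.
   - 3.3 Take the top k rows from the sorted array.
   - 3.4 Get the most frequent class among these rows.
   - 3.5 Return the predicted class.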
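To make the metrics named in step 3.1 concrete, here is a minimal sketch of the three of them in plain Python. The function names are illustrative, and `cosine_distance` is defined here as one minus the cosine similarity so that, like the other two, smaller values mean "closer".

```python
import math


def euclidean(a, b):
    """Straight-line distance: square root of the summed squared differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def chebyshev(a, b):
    """Largest absolute difference along any single coordinate."""
    return max(abs(x - y) for x, y in zip(a, b))


def cosine_distance(a, b):
    """One minus the cosine of the angle between the two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Two hypothetical points on perpendicular axes
p, q = (0.0, 3.0), (4.0, 0.0)
print(euclidean(p, q), chebyshev(p, q), cosine_distance(p, q))
```

Swapping the metric changes which points count as "nearest", so the same K can give different predictions under different metrics.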

Python implementation from scratch

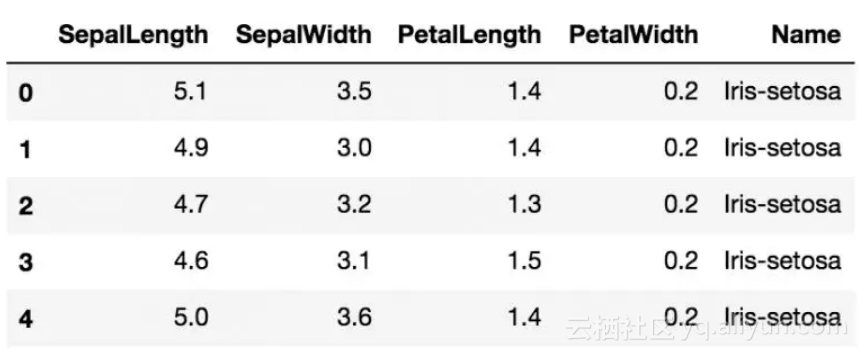

We will use the popular Iris dataset to build our KNN model. You can download it from here (dataset link: https://gist.githubusercontent.com/gurchetan1000/ec90a0a8004927e57c24b20a6f8c8d35/raw/fcd83b35021a4c1d7f1f1d5dc83c07c8ffc0d3e2/iris.csv).

<pre><code class="language-python"># Importing libraries
import operator

import pandas as pd
import numpy as np

#### Start of STEP 1
# Importing data
data = pd.read_csv("iris.csv")
#### End of STEP 1

data.head()</code></pre>

<pre><code class="language-python"># Defining a function which calculates euclidean distance between two data points
def euclideanDistance(data1, data2, length):
    distance = 0
    for x in range(length):
        distance += np.square(data1[x] - data2[x])
    return np.sqrt(distance)

# Defining our KNN model
def knn(trainingSet, testInstance, k):
    distances = {}
    # Feature values of the single test row, and the number of features
    testRow = testInstance.iloc[0].to_numpy(dtype=float)
    length = testInstance.shape[1]

    #### Start of STEP 3
    # Calculating euclidean distance between each row of training data and test data
    for x in range(len(trainingSet)):
        #### Start of STEP 3.1
        # The first `length` columns are the features; the last column is the class
        trainRow = trainingSet.iloc[x, :length].to_numpy(dtype=float)
        distances[x] = euclideanDistance(testRow, trainRow, length)
        #### End of STEP 3.1

    #### Start of STEP 3.2
    # Sorting them on the basis of distance
    sorted_d = sorted(distances.items(), key=operator.itemgetter(1))
    #### End of STEP 3.2

    neighbors = []

    #### Start of STEP 3.3
    # Extracting top k neighbors
    for x in range(k):
        neighbors.append(sorted_d[x][0])
    #### End of STEP 3.3

    classVotes = {}

    #### Start of STEP 3.4
    # Calculating the most freq class in the neighbors
    for x in range(len(neighbors)):
        # Positional access to the class label in the last column
        response = trainingSet.iloc[neighbors[x], -1]
        if response in classVotes:
            classVotes[response] += 1
        else:
            classVotes[response] = 1
    #### End of STEP 3.4

    #### Start of STEP 3.5
    sortedVotes = sorted(classVotes.items(), key=operator.itemgetter(1), reverse=True)
    return (sortedVotes[0][0], neighbors)
    #### End of STEP 3.5

# Creating a dummy testset
testSet = [[7.2, 3.6, 5.1, 2.5]]
test = pd.DataFrame(testSet)

#### Start of STEP 2
# Setting number of neighbors = 1
k = 1
#### End of STEP 2

# Running KNN model
result, neigh = knn(data, test, k)

# Predicted class
print(result)
# -> Iris-virginica

# Nearest neighbor
print(neigh)
# -> [141]</code></pre>

Now let us change the value of k and observe how the prediction changes:

<pre><code class="language-python"># Setting number of neighbors = 3
k = 3

# Running KNN model
result, neigh = knn(data, test, k)

# Predicted class
print(result)
# -> Iris-virginica

# 3 nearest neighbors
print(neigh)
# -> [141, 139, 120]

# Setting number of neighbors = 5
k = 5

# Running KNN model
result, neigh = knn(data, test, k)

# Predicted class
print(result)
# -> Iris-virginica

# 5 nearest neighbors
print(neigh)
# -> [141, 139, 120, 145, 144]</code></pre>

Comparison with scikit-learn
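The scikit-learn side of the comparison might look like the sketch below. It rests on a few assumptions: it uses the copy of Iris bundled with scikit-learn (`load_iris`) rather than the `iris.csv` file, so class names appear as `virginica` instead of `Iris-virginica`, and it uses `KNeighborsClassifier` with its default Euclidean metric and k = 3.

```python
from sklearn.datasets import load_iris
from sklearn.neighbors import KNeighborsClassifier

# Assumption: the bundled Iris data stands in for iris.csv
iris = load_iris()
X, y = iris.data, iris.target

# Fit a 3-nearest-neighbor classifier on all 150 rows
model = KNeighborsClassifier(n_neighbors=3)
model.fit(X, y)

# Same dummy test point as in the from-scratch code
test_point = [[7.2, 3.6, 5.1, 2.5]]
predicted_label = iris.target_names[model.predict(test_point)[0]]

# kneighbors returns (distances, indices); indices are row positions in X
_, neighbor_idx = model.kneighbors(test_point)

print(predicted_label)
print(neighbor_idx[0].tolist())
```

The printed label and row indices can then be checked against the output of the `knn` function from the previous section.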

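Running scikit-learn's KNN model on the same data with k = 3, we can see that both models predict the same class ("Iris-virginica") and the same nearest neighbors ([141, 139, 120]). Hence we can conclude that our from-scratch model runs as expected.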

End Notes

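KNN is one of the simplest classification algorithms. Even with such simplicity, it can give highly competitive results. KNN can also be used for regression problems, in which case it uses the average of the nearest neighbors' values rather than a vote on their classes. In R, KNN can be run with a single line of code, but I have yet to explore how to use KNN in SAS.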
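A minimal sketch of the regression variant follows; the one-feature training data and the helper name `knn_regress` are hypothetical. The only change from classification is the last step, where the neighbors' numeric targets are averaged instead of voted on.

```python
import math

# Hypothetical training data: (feature vector, numeric target)
train = [((1.0,), 10.0), ((2.0,), 12.0), ((3.0,), 14.0), ((10.0,), 50.0)]


def knn_regress(query, train, k):
    """Average the targets of the k training points nearest to query."""
    nearest = sorted(train, key=lambda item: math.dist(query, item[0]))[:k]
    return sum(target for _, target in nearest) / k


estimate = knn_regress((2.2,), train, k=3)
print(estimate)
```

Here the three nearest points have targets 12, 14, and 10, so the estimate is their mean; the distant point with target 50 has no influence.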

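Did you find this article useful? Have you used any other machine learning tool recently? Do you plan to use KNN in any of your business problems? If so, share with us how you plan to go about it.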