1. Preparing the environment

Ubuntu 12.04

DPDK version 16.04

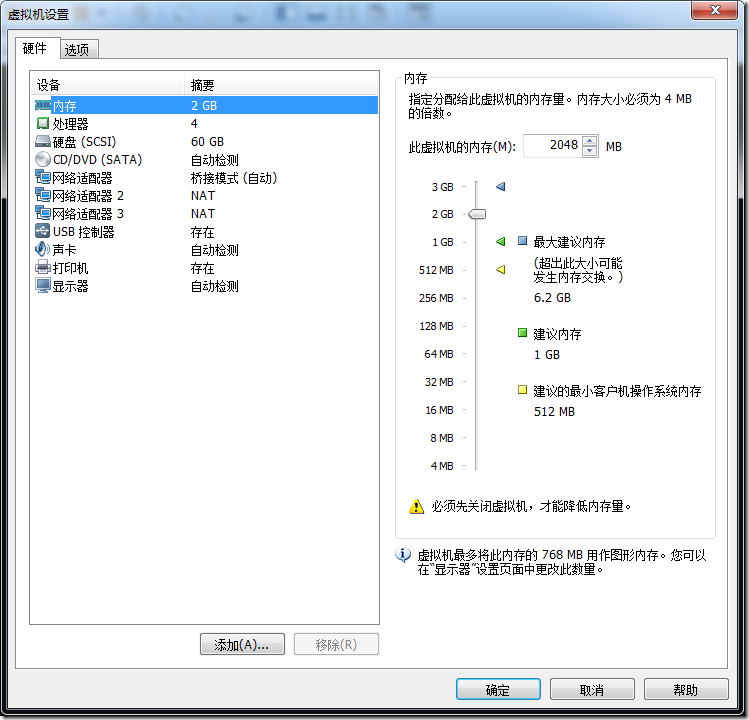

2. VMware Workstation settings

3. Setting up the DPDK environment

3.1. Setting environment variables

export RTE_SDK=/home/username/Downloads/dpdk-16.04   # set RTE_SDK to the path of the DPDK source tree

export RTE_TARGET=x86_64-native-linuxapp-gcc   # 64-bit system
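A wrong RTE_SDK path only shows up later as a confusing build failure, so it can help to check it right after exporting. The following is a minimal sketch; `check_dpdk_env` is a hypothetical helper name, and the default path assumes the source tree was unpacked under `$HOME/Downloads`.

```shell
# Assumed defaults; adjust to wherever the DPDK 16.04 source tree was unpacked.
export RTE_SDK="${RTE_SDK:-$HOME/Downloads/dpdk-16.04}"
export RTE_TARGET="${RTE_TARGET:-x86_64-native-linuxapp-gcc}"

# check_dpdk_env (hypothetical helper): succeed only if RTE_SDK is an existing directory.
check_dpdk_env() {
    if [ -d "$RTE_SDK" ]; then
        echo "RTE_SDK ok: $RTE_SDK (target $RTE_TARGET)"
    else
        echo "RTE_SDK does not exist: $RTE_SDK" >&2
        return 1
    fi
}
```

Calling `check_dpdk_env` before running the setup script or `make` gives an early, readable error if the path is mistyped.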

3.2. Build and test

There are two ways to build and test.

3.2.1. Script approach

./tools/setup.sh

    root@ubuntu:/home/zhaojie/Downloads/dpdk-16.04# ./tools/setup.sh
    ------------------------------------------------------------------------------
     RTE_SDK exported as /home/zhaojie/Downloads/dpdk-16.04
    ------------------------------------------------------------------------------
    ----------------------------------------------------------
     Step 1: Select the DPDK environment to build
    ----------------------------------------------------------
    [1] arm64-armv8a-linuxapp-gcc
    [2] arm64-thunderx-linuxapp-gcc
    [3] arm64-xgene1-linuxapp-gcc
    [4] arm-armv7a-linuxapp-gcc
    [5] i686-native-linuxapp-gcc
    [6] i686-native-linuxapp-icc
    [7] ppc_64-power8-linuxapp-gcc
    [8] tile-tilegx-linuxapp-gcc
    [9] x86_64-ivshmem-linuxapp-gcc
    [10] x86_64-ivshmem-linuxapp-icc
    [11] x86_64-native-bsdapp-clang
    [12] x86_64-native-bsdapp-gcc
    [13] x86_64-native-linuxapp-clang
    [14] x86_64-native-linuxapp-gcc
    [15] x86_64-native-linuxapp-icc
    [16] x86_x32-native-linuxapp-gcc

    ----------------------------------------------------------
     Step 2: Setup linuxapp environment
    ----------------------------------------------------------
    [17] Insert IGB UIO module
    [18] Insert VFIO module
    [19] Insert KNI module
    [20] Setup hugepage mappings for non-NUMA systems
    [21] Setup hugepage mappings for NUMA systems
    [22] Display current Ethernet device settings
    [23] Bind Ethernet device to IGB UIO module
    [24] Bind Ethernet device to VFIO module
    [25] Setup VFIO permissions

    ----------------------------------------------------------
     Step 3: Run test application for linuxapp environment
    ----------------------------------------------------------
    [26] Run test application ($RTE_TARGET/app/test)
    [27] Run testpmd application in interactive mode ($RTE_TARGET/app/testpmd)

    ----------------------------------------------------------
     Step 4: Other tools
    ----------------------------------------------------------
    [28] List hugepage info from /proc/meminfo

    ----------------------------------------------------------
     Step 5: Uninstall and system cleanup
    ----------------------------------------------------------
    [29] Unbind NICs from IGB UIO or VFIO driver
    [30] Remove IGB UIO module
    [31] Remove VFIO module
    [32] Remove KNI module
    [33] Remove hugepage mappings

    [34] Exit Script

    Option:

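1) First select the build environment. My Ubuntu virtual machine is 12.04, 64-bit, so I chose x86_64-native-linuxapp-gcc (14).

This step may fail; if it does, look up a solution based on the error message.

2) Load the UIO module: Insert IGB UIO module (17)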

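UIO (Userspace I/O) is an I/O technique that runs in user space. On Linux, device drivers normally run in kernel space and user-space applications simply call into them. With UIO, only a small part of the driver runs in kernel space, and the vast majority of the driver's functionality is implemented in user space.

Using UIO avoids having to update the device driver every time the kernel is updated.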
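Whether the kernel-side part of UIO is actually loaded can be checked from `/proc/modules`. The sketch below assumes the module names `uio` and `igb_uio` used elsewhere in this post; `module_loaded` is a hypothetical helper, and its optional second argument (a file to read instead of `/proc/modules`) exists only to make it easy to try out.

```shell
# module_loaded NAME [FILE]: succeed if NAME is listed in /proc/modules (or FILE).
# Each line of /proc/modules starts with the module name followed by a space.
module_loaded() {
    grep -q "^$1 " "${2:-/proc/modules}" 2>/dev/null
}

for m in uio igb_uio; do
    if module_loaded "$m"; then
        echo "$m: loaded"
    else
        echo "$m: not loaded"
    fi
done
```

Before step 2) both modules are normally reported as not loaded; after option 17 both should show up as loaded.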

3) Configure hugepages: Setup hugepage mappings for non-NUMA systems (20)

    Removing currently reserved hugepages
    Unmounting /mnt/huge and removing directory

      Input the number of 2048kB hugepages
      Example: to have 128MB of hugepages available in a 2MB huge page system,
      enter '64' to reserve 64 * 2MB pages
    Number of pages:

After several attempts I settled on 512 pages (128 pages turned out to be too few to run the sample application).

Once this succeeds, choose List hugepage info from /proc/meminfo (28) to check the hugepage status.

    Option: 28

    AnonHugePages:     77824 kB
    HugePages_Total:     512
    HugePages_Free:      512
    HugePages_Rsvd:        0
    HugePages_Surp:        0
    Hugepagesize:       2048 kB
    Press enter to continue ...
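The page count asked for by the script is just the desired amount of memory divided by the hugepage size. A small sketch of that arithmetic follows; `pages_for_mb` and `hugepages_total` are hypothetical helper names, and the 2048 kB default matches the page size shown above.

```shell
# pages_for_mb MB [PAGE_KB]: hugepages needed to reserve MB megabytes,
# rounded up so the reservation is never smaller than requested.
pages_for_mb() (
    mb=$1
    page_kb=${2:-2048}
    echo $(( (mb * 1024 + page_kb - 1) / page_kb ))
)

# hugepages_total [FILE]: print HugePages_Total from /proc/meminfo (or FILE).
hugepages_total() {
    awk '/^HugePages_Total:/ { print $2 }' "${1:-/proc/meminfo}"
}

pages_for_mb 128     # 64, the example from the script prompt
pages_for_mb 1024    # 512, the value used in this post
```

In other words, the 512 pages chosen here reserve 1024 MB, so the virtual machine needs noticeably more RAM than that.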

4) Bind the NICs to the UIO module: Bind Ethernet device to IGB UIO module (23)

    Network devices using DPDK-compatible driver
    ============================================
    <none>

    Network devices using kernel driver
    ===================================
    0000:02:01.0 '82545EM Gigabit Ethernet Controller (Copper)' if=eth0 drv=e1000 unused=igb_uio *Active*
    0000:02:02.0 '82545EM Gigabit Ethernet Controller (Copper)' if=eth1 drv=e1000 unused=igb_uio *Active*
    0000:02:03.0 '82545EM Gigabit Ethernet Controller (Copper)' if=eth2 drv=e1000 unused=igb_uio *Active*

    Other network devices
    =====================
    <none>

    Enter PCI address of device to bind to IGB UIO driver:

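Note:

An interface has to be brought down before it can be bound. Here eth1 and eth2, the interfaces to bind, are both Active, so they have to be brought down manually.

    # become root
    zhaojie@ubuntu:~$ su
    Password:
    root@ubuntu:/home/zhaojie# ifconfig eth1 down;ifconfig eth2 down
    root@ubuntu:/home/zhaojie#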

Try binding again:

    Network devices using DPDK-compatible driver
    ============================================
    <none>

    Network devices using kernel driver
    ===================================
    0000:02:01.0 '82545EM Gigabit Ethernet Controller (Copper)' if=eth0 drv=e1000 unused=igb_uio *Active*
    0000:02:02.0 '82545EM Gigabit Ethernet Controller (Copper)' if=eth1 drv=e1000 unused=igb_uio
    0000:02:03.0 '82545EM Gigabit Ethernet Controller (Copper)' if=eth2 drv=e1000 unused=igb_uio

    Other network devices
    =====================
    <none>

Run the bind option twice, once for 0000:02:02.0 and once for 0000:02:03.0, to bind both devices to igb_uio.

Use Display current Ethernet device settings (22) to check the result.

    Network devices using DPDK-compatible driver
    ============================================
    0000:02:02.0 '82545EM Gigabit Ethernet Controller (Copper)' drv=igb_uio unused=e1000
    0000:02:03.0 '82545EM Gigabit Ethernet Controller (Copper)' drv=igb_uio unused=e1000

    Network devices using kernel driver
    ===================================
    0000:02:01.0 '82545EM Gigabit Ethernet Controller (Copper)' if=eth0 drv=e1000 unused=igb_uio *Active*

    Other network devices
    =====================
    <none>
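For scripted checks it can be handy to pull just the bound PCI addresses out of this status listing. The sketch below assumes the section headings look exactly as in the output above; `dpdk_bound_devices` is a hypothetical helper that reads the listing on stdin, and the here-document is sample input copied from this post.

```shell
# dpdk_bound_devices: print the PCI addresses listed under the
# "DPDK-compatible driver" section of the status listing read from stdin.
dpdk_bound_devices() {
    awk '
        /^Network devices using DPDK-compatible driver/ { in_dpdk = 1; next }
        /^Network devices using kernel driver/          { in_dpdk = 0 }
        /^Other network devices/                        { in_dpdk = 0 }
        in_dpdk && /^[0-9a-fA-F]+:[0-9a-fA-F]+:[0-9a-fA-F]+\.[0-9a-fA-F]/ { print $1 }
    '
}

dpdk_bound_devices <<'EOF'
Network devices using DPDK-compatible driver
============================================
0000:02:02.0 '82545EM Gigabit Ethernet Controller (Copper)' drv=igb_uio unused=e1000
0000:02:03.0 '82545EM Gigabit Ethernet Controller (Copper)' drv=igb_uio unused=e1000

Network devices using kernel driver
===================================
0000:02:01.0 '82545EM Gigabit Ethernet Controller (Copper)' if=eth0 drv=e1000 unused=igb_uio *Active*
EOF
```

On a real system the same function can be fed from `./tools/dpdk_nic_bind.py --status | dpdk_bound_devices`.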

The sample application can now be run.

Run testpmd application in interactive mode ($RTE_TARGET/app/testpmd) (27)

      Enter hex bitmask of cores to execute testpmd app on
      Example: to execute app on cores 0 to 7, enter 0xff
    bitmask:

Because I gave the VMware guest 4 CPUs, testpmd runs on cores 0 to 3, which means the low four bits of the mask are all 1. The bitmask is therefore 0x0f.
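The mask for "the first N cores" is simply the lowest N bits set, that is (1 << N) - 1. A short sketch of that calculation, with `coremask` as a hypothetical helper name:

```shell
# coremask N: hex bitmask with the lowest N bits set (cores 0..N-1).
coremask() {
    printf '0x%x\n' $(( (1 << $1) - 1 ))
}

coremask 4   # prints 0xf  (same value as 0x0f)
coremask 8   # prints 0xff (the example from the script prompt)
```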

Note:

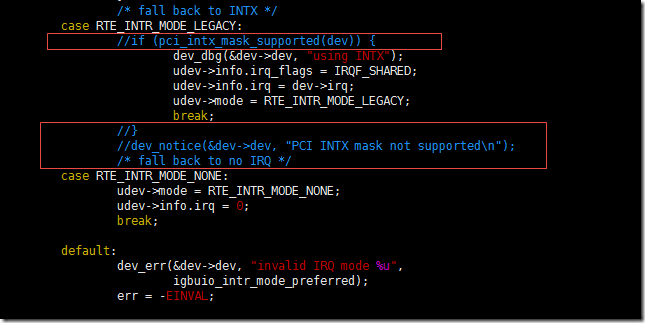

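Possibly because this is a virtual machine, the following error may appear:

EAL: Error reading from file descriptor

The fix is to comment out a few lines in lib/librte_eal/linuxapp/igb_uio/igb_uio.c.

When testpmd runs normally, the output looks like this: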

    Interactive-mode selected
    Configuring Port 0 (socket 0)
    PMD: eth_em_tx_queue_setup(): sw_ring=0x7f2369190780 hw_ring=0x7f2369192880 dma_addr=0x48f92880
    PMD: eth_em_rx_queue_setup(): sw_ring=0x7f2369180240 hw_ring=0x7f2369180740 dma_addr=0x48f80740
    PMD: eth_em_start(): <<
    Port 0: 00:0C:29:51:25:F5
    Configuring Port 1 (socket 0)
    PMD: eth_em_tx_queue_setup(): sw_ring=0x7f236916e000 hw_ring=0x7f2369170100 dma_addr=0x48f70100
    PMD: eth_em_rx_queue_setup(): sw_ring=0x7f236915dac0 hw_ring=0x7f236915dfc0 dma_addr=0x48f5dfc0
    PMD: eth_em_start(): <<
    Port 1: 00:0C:29:51:25:FF
    Checking link statuses...
    Port 0 Link Up - speed 1000 Mbps - full-duplex
    Port 1 Link Up - speed 1000 Mbps - full-duplex
    Done
    testpmd>

Type start to begin the test.

Type stop to end the test.

The environment is now set up.

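3.2.2. Command-line approach

The main point of the command-line approach is that the configuration can be written into a startup script and run automatically when the system boots.

Root privileges are required first (su).

    # set environment variables
    export RTE_SDK=/home/zhaojie/Downloads/dpdk-16.04
    export RTE_TARGET=x86_64-native-linuxapp-gcc
    # build
    make install T=x86_64-native-linuxapp-gcc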

    root@ubuntu:/home/zhaojie/Downloads/dpdk-16.04# make install T=x86_64-native-linuxapp-gcc
    make[5]: Nothing to be done for `depdirs'.
    Configuration done
    == Build lib
    == Build lib/librte_compat
    == Build lib/librte_eal
    == Build lib/librte_eal/common
    == Build lib/librte_eal/linuxapp
    # (part of the output omitted)
    == Build app/test-pipeline
    == Build app/test-pmd
    == Build app/cmdline_test
    == Build app/proc_info
    Build complete [x86_64-native-linuxapp-gcc]
    Installation cannot run with T defined and DESTDIR undefined
    root@ubuntu:/home/zhaojie/Downloads/dpdk-16.04#

The output ends with Installation cannot run with T defined and DESTDIR undefined.

Since we are only building and not installing, this message is harmless and we can carry on.

Configure hugepages

    # set the number of hugepages
    echo 512 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages
    # create the mount point
    mkdir /mnt/huge/
    # mount hugetlbfs
    mount -t hugetlbfs nodev /mnt/huge/
    # check the hugepage memory status
    cat /proc/meminfo | grep Huge

Bind the interfaces

    # load the modules
    modprobe uio
    insmod x86_64-native-linuxapp-gcc/kmod/igb_uio.ko
    # show interface status
    ./tools/dpdk_nic_bind.py --status
    # bring down the interfaces that will be bound
    ifconfig eth1 down
    ifconfig eth2 down
    # bind
    ./tools/dpdk_nic_bind.py -b igb_uio 0000:02:02.0
    ./tools/dpdk_nic_bind.py -b igb_uio 0000:02:03.0

Run the sample application

    # -c: core bitmask, -n: number of memory channels
    ./x86_64-native-linuxapp-gcc/app/testpmd -c 0xf -n 2 -- -i
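Since the goal of this approach is a script that can run at boot, the hugepage and binding commands can be collected into one file. What follows is only a sketch under the assumptions of this post (same source path, interfaces eth1/eth2, PCI addresses 0000:02:02.0 and 0000:02:03.0). `DRY_RUN`, `run` and `dpdk_setup` are hypothetical names; with the default `DRY_RUN=1` the script only prints the commands, so it can be tried without root.

```shell
#!/bin/sh
# Sketch of a boot-time DPDK setup script (assumed paths and devices from this post).
RTE_SDK="${RTE_SDK:-/home/zhaojie/Downloads/dpdk-16.04}"
RTE_TARGET="${RTE_TARGET:-x86_64-native-linuxapp-gcc}"
DRY_RUN="${DRY_RUN:-1}"   # 1: only print the commands, anything else: execute them

# run CMD...: print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

dpdk_setup() {
    # reserve 512 x 2 MB hugepages and mount hugetlbfs
    run sh -c 'echo 512 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages'
    run mkdir -p /mnt/huge
    run mount -t hugetlbfs nodev /mnt/huge
    # load the UIO modules
    run modprobe uio
    run insmod "$RTE_SDK/$RTE_TARGET/kmod/igb_uio.ko"
    # bring the interfaces down, then bind them to igb_uio
    for ifc in eth1 eth2; do
        run ifconfig "$ifc" down
    done
    for pci in 0000:02:02.0 0000:02:03.0; do
        run "$RTE_SDK/tools/dpdk_nic_bind.py" -b igb_uio "$pci"
    done
}

dpdk_setup
```

Running it as root with `DRY_RUN=0` executes the commands for real; how the script is hooked into system startup (for example from /etc/rc.local) is left to the reader.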

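This completes the environment configuration.

To try out a DPDK program, take helloworld, the classic first example, as the starting point.

This example prints hello from core xx on every core in use.

The ./examples/ directory contains many examples, one of which lives in the helloworld directory.

Enter the helloworld directory and run make.

This creates a build folder inside the helloworld directory.

The build folder contains the helloworld executable.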

./helloworld -c 0x0f -n 2 -- -i

    EAL: Detected lcore 0 as core 0 on socket 0
    EAL: Detected lcore 1 as core 0 on socket 0
    EAL: Detected lcore 2 as core 0 on socket 0
    EAL: Detected lcore 3 as core 0 on socket 0
    EAL: Support maximum 128 logical core(s) by configuration.
    EAL: Detected 4 lcore(s)
    EAL: Setting up physically contiguous memory...
    EAL: Ask a virtual area of 0x200000 bytes
    EAL: Virtual area found at 0x7f64ea200000 (size = 0x200000)
    EAL: Ask a virtual area of 0x2e000000 bytes
    EAL: Virtual area found at 0x7f64bc000000 (size = 0x2e000000)
    EAL: Ask a virtual area of 0x11c00000 bytes
    EAL: Virtual area found at 0x7f64aa200000 (size = 0x11c00000)
    EAL: Ask a virtual area of 0x200000 bytes
    EAL: Virtual area found at 0x7f64a9e00000 (size = 0x200000)
    EAL: Requesting 512 pages of size 2MB from socket 0
    EAL: TSC frequency is ~2494369 KHz
    EAL: Master lcore 0 is ready (tid=eb6e6900;cpuset=[0])
    EAL: lcore 3 is ready (tid=a83f6700;cpuset=[3])
    EAL: lcore 1 is ready (tid=a93f8700;cpuset=[1])
    EAL: lcore 2 is ready (tid=a8bf7700;cpuset=[2])
    EAL: PCI device 0000:02:01.0 on NUMA socket -1
    EAL:   probe driver: 8086:100f rte_em_pmd
    EAL:   Not managed by a supported kernel driver, skipped
    EAL: PCI device 0000:02:02.0 on NUMA socket -1
    EAL:   probe driver: 8086:100f rte_em_pmd
    EAL:   PCI memory mapped at 0x7f64ea400000
    EAL:   PCI memory mapped at 0x7f64ea420000
    PMD: eth_em_dev_init(): port_id 0 vendorID=0x8086 deviceID=0x100f
    EAL: PCI device 0000:02:03.0 on NUMA socket -1
    EAL:   probe driver: 8086:100f rte_em_pmd
    EAL:   PCI memory mapped at 0x7f64ea430000
    EAL:   PCI memory mapped at 0x7f64ea450000
    PMD: eth_em_dev_init(): port_id 1 vendorID=0x8086 deviceID=0x100f
    hello from core 1
    hello from core 2
    hello from core 3
    hello from core 0

Every core prints its message successfully.

This concludes my notes on setting up the DPDK environment.
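With more cores the greetings are easy to lose among the EAL log lines, so counting them is a quick sanity check. The sketch below assumes each greeting sits on its own line in the form "hello from core N"; `count_hello` is a hypothetical helper that reads the program output on stdin, and the here-document is sample input taken from this run.

```shell
# count_hello: count the distinct cores that printed "hello from core N" on stdin.
count_hello() {
    awk '/^hello from core [0-9]+$/ { seen[$4] = 1 }
         END { n = 0; for (c in seen) n++; print n }'
}

count_hello <<'EOF'
PMD: eth_em_dev_init(): port_id 1 vendorID=0x8086 deviceID=0x100f
hello from core 1
hello from core 2
hello from core 3
hello from core 0
EOF
```

For the sample input this prints 4, which matches the four bits set in the 0x0f core mask.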