The main purpose of a recurrent neural network (RNN) is to process and predict sequence data. In a fully connected or convolutional neural network, the structure runs from the input layer through the hidden layers to the output layer. Adjacent layers are fully or partially connected, but the nodes within each layer are not connected to one another. Now consider predicting the next word of a sentence. This generally requires the current word and the words before it, because the words in a sentence are not independent. For example, if the current word is "very" and the previous word is "sky", the next word is very likely to be "blue".

RNNs were developed to characterize the relationship between the current output of a sequence and the information that came before it. Structurally, an RNN remembers previous information and uses it to influence subsequent outputs. In other words, the nodes of an RNN's hidden layer are connected across time: the input to the hidden layer includes not only the output of the input layer but also the output of the hidden layer at the previous moment.
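The recurrence described above, where the hidden layer sees both the current input and its own previous output, can be sketched as a single update step. The text gives no formula, so this assumes the common Elman-style form h_t = tanh(W_xh·x_t + W_hh·h_prev + b_h); the function name `rnn_cell` and the weight names are hypothetical, and plain Python lists stand in for matrices to keep the example dependency-free.

```python
import math

def rnn_cell(x_t, h_prev, W_xh, W_hh, b_h):
    """One step of a simple (Elman-style) RNN, assumed form:
    h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h).

    x_t:    input vector at the current moment (list of floats)
    h_prev: hidden state from the previous moment (list of floats)
    W_xh:   hidden_size x input_size weights (list of rows)
    W_hh:   hidden_size x hidden_size weights (list of rows)
    b_h:    hidden bias (list of floats, length hidden_size)
    """
    h_t = []
    for i in range(len(b_h)):
        # Contribution of the current input.
        s = sum(w * x for w, x in zip(W_xh[i], x_t))
        # Contribution of the previous hidden state: the network's "memory".
        s += sum(w * h for w, h in zip(W_hh[i], h_prev))
        s += b_h[i]
        h_t.append(math.tanh(s))
    return h_t

# Same input, two different histories (illustrative weights).
x = [1.0]
W_xh = [[0.5], [-0.3]]
W_hh = [[0.8, 0.0], [0.1, 0.9]]
b_h = [0.0, 0.0]
print(rnn_cell(x, [0.0, 0.0], W_xh, W_hh, b_h))
print(rnn_cell(x, [1.0, -1.0], W_xh, W_hh, b_h))
```

The two calls feed the identical input but produce different hidden states, because the previous state enters the computation. This mirrors the "sky ... very" example: what comes next depends on what came before, not only on the current word.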

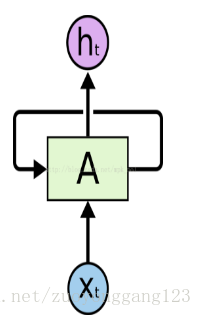

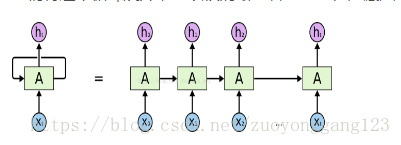

A typical RNN structure is shown in the figures. A key concept for RNNs is the moment (time step): at each moment, the RNN produces an output from that moment's input combined with the current state of the model. As the figure shows, the input to A, the main body of the RNN, includes not only x_t from the input layer but also a loop edge that supplies the state from the previous moment. At the same time, the state of A is passed from the current step on to the next step.
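Unrolling the loop edge over time makes the moment-by-moment behavior concrete. The sketch below is a minimal illustration under stated assumptions: a scalar hidden state, a tanh update, and a linear output y_t = w_hy·h_t + b_y. The function `rnn_forward` and all parameter names are hypothetical, not taken from the text.

```python
import math

def rnn_forward(xs, h0, w_xh, w_hh, w_hy, b_h=0.0, b_y=0.0):
    """Unroll a scalar RNN over the sequence xs (assumed tanh update, linear output).

    At each moment, module A combines the input x_t with the state carried
    over the loop edge, emits an output y_t, and passes its new state on.
    The same weights are reused at every moment.
    """
    h = h0
    outputs, states = [], []
    for x in xs:
        h = math.tanh(w_xh * x + w_hh * h + b_h)  # new state from input + previous state
        y = w_hy * h + b_y                        # output for this moment
        outputs.append(y)
        states.append(h)
    return outputs, states

# Only the first input is nonzero, yet every moment produces a nonzero output,
# because the state passed from step to step still carries that first input.
ys, hs = rnn_forward([1.0, 0.0, 0.0], h0=0.0, w_xh=1.0, w_hh=0.5, w_hy=2.0)
print(ys)
```

Because the recurrent weight here is below 1, the carried signal shrinks at each step; this is one illustration of how information from early moments fades in a simple RNN.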

This chain-like structure shows that RNNs are intimately related to sequences and lists; they are the most natural neural network architecture for such data.

RNNs have indeed been put to use. In the past few years, they have been applied with some success to speech recognition, language modeling, machine translation, image captioning, and other problems, and the list is still growing.