Environment:

OS: CentOS 7.3

Java: jdk1.8.0_45

Hadoop:hadoop-2.6.0-cdh5.14.0.tar.gz

1. spark-shell usage help

[hadoop@hadoop01 ~]$ cd app/spark-2.2.0-bin-2.6.0-cdh5.7.0/bin

[hadoop@hadoop01 bin]$ ./spark-shell --help

Usage: ./bin/spark-shell [options]

# Key options

Options:

--master MASTER_URL spark://host:port, mesos://host:port, yarn, or local.

--name NAME A name of your application.

--jars JARS Comma-separated list of local jars to include on the driver and executor classpaths.

--exclude-packages Comma-separated list of groupId:artifactId, to exclude while resolving the dependencies provided in --packages to avoid dependency conflicts.

--conf PROP=VALUE Arbitrary Spark configuration property.

--driver-memory MEM Memory for driver (e.g. 1000M, 2G) (Default: 1024M).

--executor-memory MEM Memory per executor (e.g. 1000M, 2G) (Default: 1G).

YARN-only:

--driver-cores NUM Number of cores used by the driver, only in cluster mode (Default: 1).

--queue QUEUE_NAME The YARN queue to submit to (Default: "default").

--num-executors NUM Number of executors to launch (Default: 2). If dynamic allocation is enabled, the initial number of executors will be at least NUM.

[hadoop@hadoop01 bin]$

Note: standalone mode is rarely used in production, so this post focuses only on Spark on YARN.
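As an example of the options above, a spark-shell on YARN might be launched like this. It is a minimal sketch: the app name my-shell is hypothetical, the resource values are illustrative rather than recommendations, and it assumes spark-shell is on the PATH and HADOOP_CONF_DIR (or YARN_CONF_DIR) points to the Hadoop client configuration, which Spark needs in order to find YARN. By default it only prints the command; the DRY_RUN variable is this sketch's own convention, and DRY_RUN=0 actually starts the shell.

```shell
#!/usr/bin/env bash
# Sketch: start spark-shell on YARN with options from the help output above.
# All values are illustrative assumptions, not tuned recommendations.
set -euo pipefail

args=(
  --master yarn
  --name my-shell        # hypothetical application name, shown in the Web UI
  --queue default        # YARN queue to submit to ("default" per the help text)
  --num-executors 2      # executors to launch (YARN-only option)
  --executor-memory 1G   # memory per executor
  --driver-memory 1G     # memory for the driver
)

if [[ "${DRY_RUN:-1}" == "1" ]]; then
  # Dry run: only print the command line that would be executed
  echo "spark-shell ${args[*]}"
else
  exec spark-shell "${args[@]}"
fi
```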

2. Start a spark-shell (with HDFS and YARN already running)

[hadoop@hadoop01 ~]$ spark-shell --master local[2] # 2 = use two cores

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

18/07/12 22:30:58 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/07/12 22:31:05 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

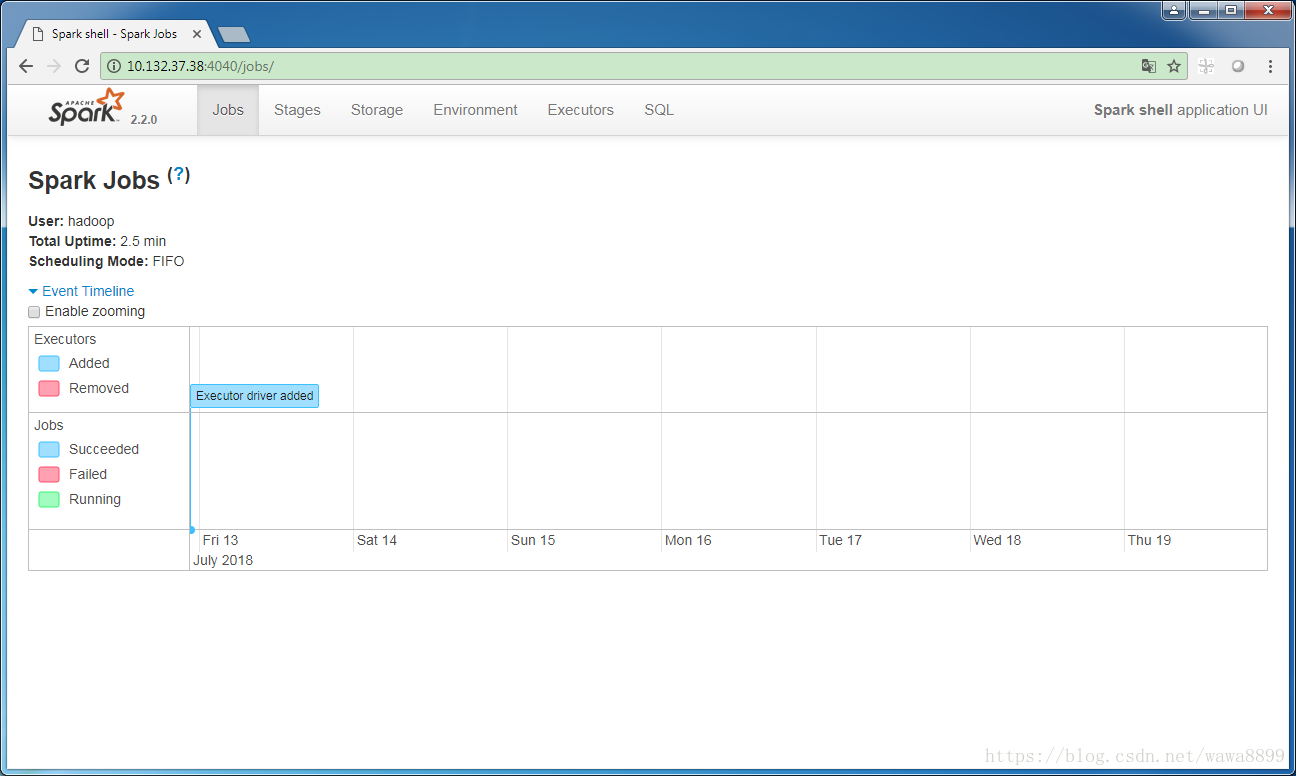

Spark context Web UI available at http://10.132.37.38:4040

Spark context available as 'sc' (master = local[2], app id = local-1531405859803). # the SparkContext created when spark-shell starts

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.2.0
      /_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_45)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

Notes:

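1) In local[x], x is the number of worker threads Spark runs on the local machine, normally set to the number of cores you want to use;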
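To make item 1 concrete, the snippet below derives a local[x] master from the core count reported by nproc (GNU coreutils, present on CentOS 7) and prints the resulting command. Spark itself also accepts local[*], which uses as many worker threads as there are logical cores, so this is mainly useful when you want the number to be explicit. The variable names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: build a local[x] master that matches the machine's core count.
set -euo pipefail

cores=$(nproc)                # processing units available (GNU coreutils)
MASTER_URL="local[${cores}]"  # x = number of worker threads, one per core here
# Spark's own shorthand for the same idea is local[*]

echo "spark-shell --master ${MASTER_URL}"
```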

2) When spark-shell starts, it creates a SparkContext available as 'sc' (master = local[2], app id = local-1531405859803). A spark-shell session is itself a Spark application, and every Spark application has a SparkContext;

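Important: because spark-shell automatically creates this SparkContext named sc at startup, creating another SparkContext of your own will not work; the official documentation states this explicitly. Use the existing sc (or the SparkSession spark) instead;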

3) If spark-shell needs additional jars (for example the MySQL JDBC driver), pass them with --jars, or add the jar paths to the classpath;
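--jars takes a comma-separated list, so a directory of extra jars can be turned into that form with a small helper. This is a sketch: the helper name jars_csv and the default directory /home/hadoop/lib are hypothetical, and the directory is assumed to hold jars such as the MySQL JDBC driver. According to the spark-submit help, jars added with --jars are also put on the driver classpath automatically.

```shell
#!/usr/bin/env bash
# Sketch: build the comma-separated value that --jars expects from a directory.
set -eo pipefail

# Print all *.jar files in a directory joined by commas (empty if there are none).
jars_csv() {
  local dir="$1"
  local files=()
  shopt -s nullglob              # no match -> empty list instead of a literal "*.jar"
  files=("$dir"/*.jar)
  shopt -u nullglob
  local IFS=,                    # "${files[*]}" joins with the first char of IFS
  echo "${files[*]}"
}

JAR_DIR="${JAR_DIR:-/home/hadoop/lib}"   # hypothetical directory holding e.g. the JDBC jar
jars="$(jars_csv "$JAR_DIR")"

if [[ -n "$jars" ]]; then
  echo "spark-shell --master local[2] --jars ${jars}"
else
  echo "no jars found in ${JAR_DIR}" >&2
fi
```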

4) To use Maven dependencies, pass --packages "org.example:example:0.1", where the quoted string is the dependency's Maven coordinate in groupId:artifactId:version (g:a:v) form;
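Like --jars, --packages accepts a comma-separated list, and every entry must be a complete groupId:artifactId:version coordinate. The hypothetical helper packages_arg below rejects entries that do not have exactly three non-empty parts before joining them; org.example:example:0.1 is the placeholder from the text, not a real artifact. Spark resolves these coordinates at startup from the local Maven repository, Maven Central and any repositories given with --repositories, so the machine needs network access or a reachable mirror.

```shell
#!/usr/bin/env bash
# Sketch: validate Maven coordinates (g:a:v) and join them for --packages.
set -eo pipefail

packages_arg() {
  local coord g a v extra
  local out=()
  for coord in "$@"; do
    # Split on ":"; anything beyond the third field lands in "extra"
    IFS=: read -r g a v extra <<< "$coord"
    if [[ -z "$g" || -z "$a" || -z "$v" || -n "$extra" ]]; then
      echo "invalid coordinate (expected groupId:artifactId:version): $coord" >&2
      return 1
    fi
    out+=("$coord")
  done
  local IFS=,
  echo "${out[*]}"
}

pkgs="$(packages_arg "org.example:example:0.1")"   # placeholder coordinate from the text
echo "spark-shell --master local[2] --packages ${pkgs}"
```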

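5) spark-shell also starts a Spark Web UI. Because no application name was specified when we launched spark-shell above, the top-right corner of the Web UI shows "Spark shell application UI" (the name is defined in the source of $SPARK_HOME/bin/spark-shell). If an application name is specified, that name is shown there instead.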

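By default the Spark Web UI uses port 4040. If 4040 is already in use, Spark adds 1 and tries 4041; if 4041 is also taken, it tries 4042, and so on (up to spark.port.maxRetries attempts, 16 by default).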

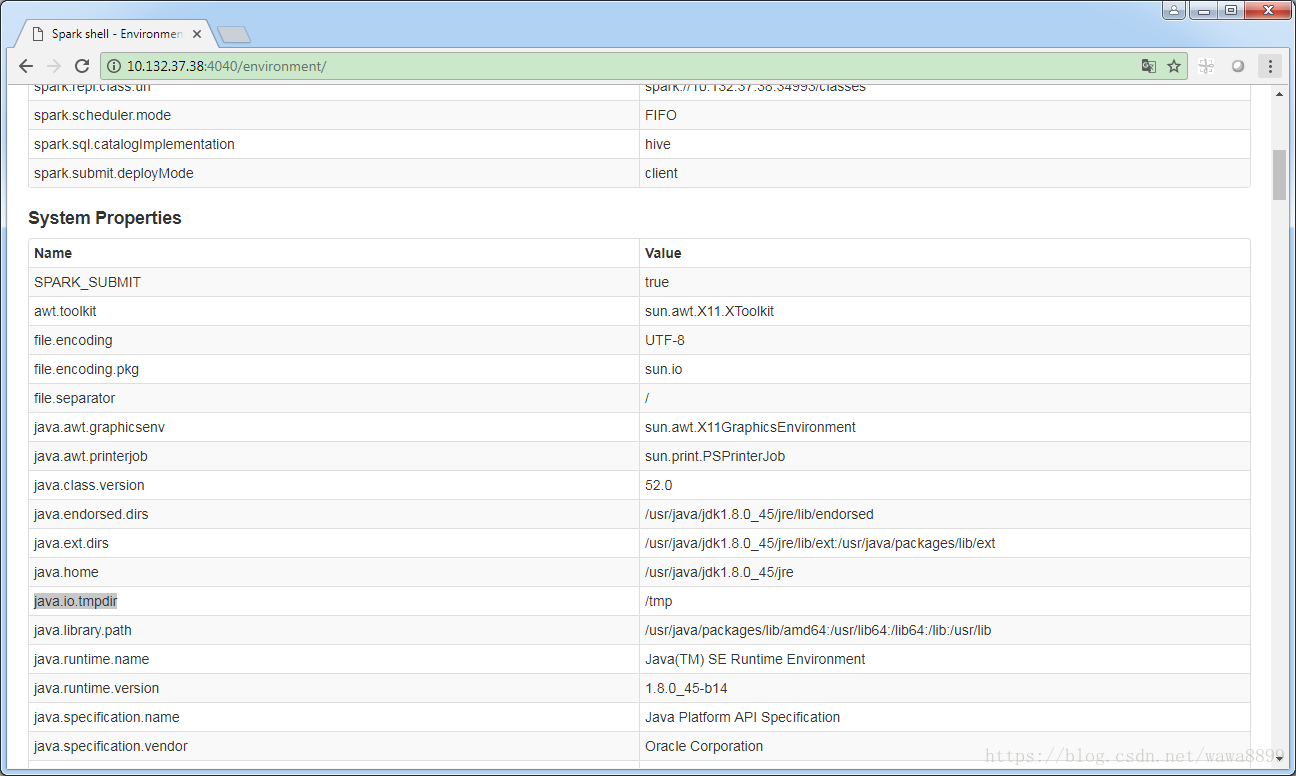

The same applies on the Environment tab.

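Note: the java.io.tmpdir property shown here must be tuned in production! It defaults to /tmp, which on Linux servers is often small and periodically cleaned.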

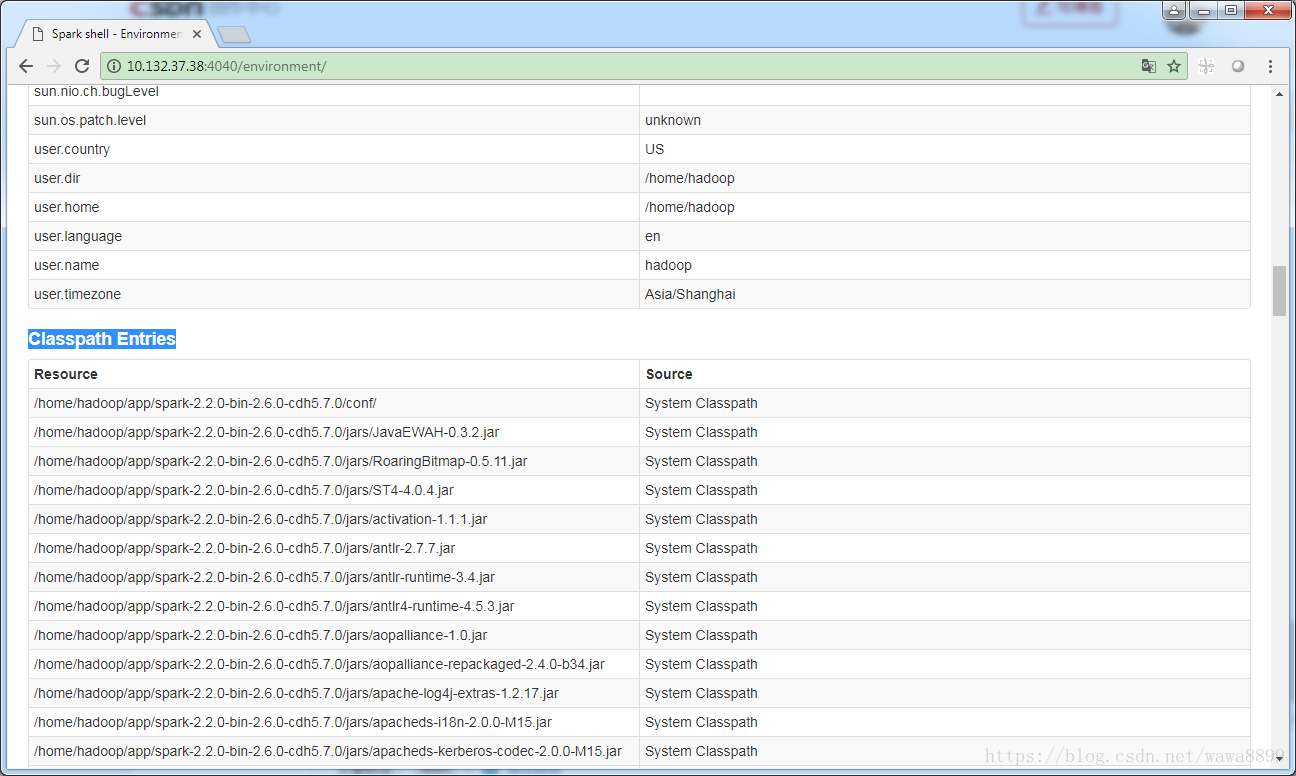

Classpath Entries on the Environment tab lists everything under $SPARK_HOME/conf/ and $SPARK_HOME/jars/.
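The settings discussed in item 5 can all be passed at launch. A minimal sketch, assuming a hypothetical app name etl-debug and a hypothetical temp directory /data/spark_tmp on a disk with enough space: --name replaces the "Spark shell" title in the Web UI, the standard property spark.ui.port sets the first port to try instead of 4040, and --driver-java-options sets java.io.tmpdir for the driver JVM, which for spark-shell is the shell process itself. The command is only printed unless DRY_RUN=0 (a convention of this sketch).

```shell
#!/usr/bin/env bash
# Sketch: set the app name, Web UI port and driver temp dir for spark-shell.
set -euo pipefail

TMP_DIR="/data/spark_tmp"   # hypothetical directory with enough free space

args=(
  --master "local[2]"                                  # quoted so the shell never globs it
  --name etl-debug                                     # hypothetical name for the Web UI title
  --conf spark.ui.port=4050                            # first UI port to try instead of 4040
  --driver-java-options "-Djava.io.tmpdir=${TMP_DIR}"  # temp dir of the driver JVM
)

if [[ "${DRY_RUN:-1}" == "1" ]]; then
  # Dry run: print a copy-pasteable command line
  printf '%q ' spark-shell "${args[@]}"; echo
else
  mkdir -p "$TMP_DIR"
  exec spark-shell "${args[@]}"
fi
```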

6) Under the hood, spark-shell also submits the job through spark-submit (see the source: $SPARK_HOME/bin/spark-shell).

Other launchers such as spark-sql and sparkR likewise submit jobs through spark-submit under the hood.

[hadoop@hadoop01 ~]$ vi $SPARK_HOME/bin/spark-shell # search for "submit"

function main() {
  if $cygwin; then
    # Workaround for issue involving JLine and Cygwin
    # (see http://sourceforge.net/p/jline/bugs/40/).
    # If you're using the Mintty terminal emulator in Cygwin, may need to set the
    # "Backspace sends ^H" setting in "Keys" section of the Mintty options
    # (see https://github.com/sbt/sbt/issues/562).
    stty -icanon min 1 -echo > /dev/null 2>&1
    export SPARK_SUBMIT_OPTS="$SPARK_SUBMIT_OPTS -Djline.terminal=unix"
    "${SPARK_HOME}"/bin/spark-submit --class org.apache.spark.repl.Main --name "Spark shell" "$@"
    stty icanon echo > /dev/null 2>&1
  else
    export SPARK_SUBMIT_OPTS
    "${SPARK_HOME}"/bin/spark-submit --class org.apache.spark.repl.Main --name "Spark shell" "$@"
  fi
}
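To see item 6 without a cluster, the sketch below recreates the non-Cygwin branch of main() against a stub spark-submit that just prints its arguments. The temporary SPARK_HOME, the stub script and the function name fake_spark_shell are all hypothetical test scaffolding. The output shows that spark-shell puts its fixed --class org.apache.spark.repl.Main --name "Spark shell" first and your own options ("$@") after them; spark-submit keeps the last value given for --name, which is why a user-supplied name replaces "Spark shell" in the Web UI as described in item 5.

```shell
#!/usr/bin/env bash
# Sketch: mimic spark-shell's main() (non-Cygwin branch) against a stub spark-submit.
set -euo pipefail

SPARK_HOME="$(mktemp -d)"              # throwaway SPARK_HOME for this demo only
trap 'rm -rf "$SPARK_HOME"' EXIT       # clean up when the script exits
mkdir -p "$SPARK_HOME/bin"
cat > "$SPARK_HOME/bin/spark-submit" <<'EOF'
#!/usr/bin/env bash
# Stub: print each argument on its own line instead of submitting anything
printf '%s\n' "$@"
EOF
chmod +x "$SPARK_HOME/bin/spark-submit"

# Same call as in $SPARK_HOME/bin/spark-shell: fixed options first, user options last
fake_spark_shell() {
  export SPARK_SUBMIT_OPTS
  "${SPARK_HOME}"/bin/spark-submit --class org.apache.spark.repl.Main --name "Spark shell" "$@"
}

fake_spark_shell --master "local[2]" --name my-shell
```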