【原文链接】https://doc.scrapy.org/en/latest/intro/tutorial.html

In this tutorial, we’ll assume that Scrapy is already installed on your system. If that’s not the case, see Installation guide.

We are going to scrape quotes.toscrape.com, a website that lists quotes from famous authors.

This tutorial will walk you through these tasks:

- Creating a new Scrapy project

- Writing a spider to crawl a site and extract data

- Exporting the scraped data using the command line

- Changing spider to recursively follow links

- Using spider arguments

Creating a project

Before you start scraping, you will have to set up a new Scrapy project. Enter a directory where you’d like to store your code and run:

scrapy startproject tutorialThis will create a tutorial directory with the following contents:

tutorial/

scrapy.cfg # deploy configuration file

tutorial/ # project's Python module, you'll import your code from here

__init__.py

items.py # project items definition file

middlewares.py # project middlewares file

pipelines.py # project pipelines file

settings.py # project settings file

spiders/ # a directory where you'll later put your spiders

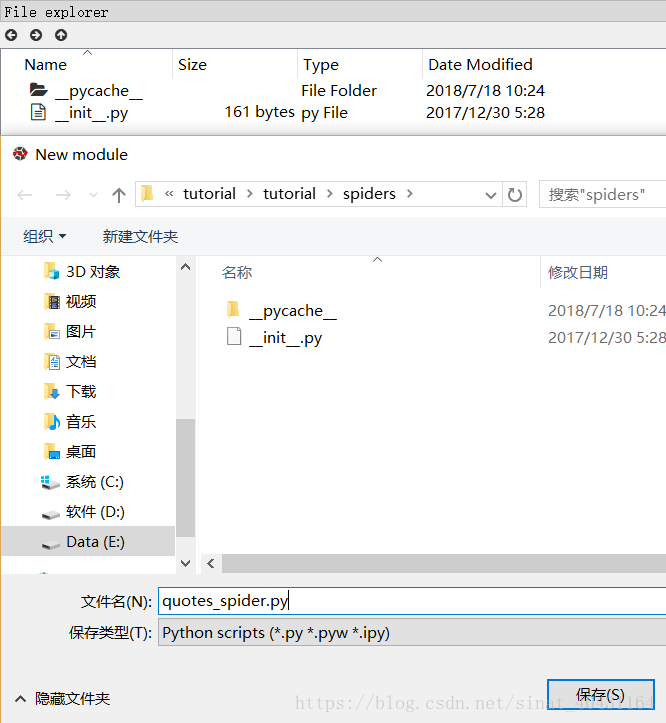

__init__.pyOur first Spider

Spiders are 一些你自定义的类, Scrapy uses these classes to scrape information from a website (or a group of websites). They 必须是 scrapy.Spider 的子类 and 定义最开始的请求, optionally how to 追踪网页中的链接, and how to 解析下载下来的网页内容并从中获取数据.

This is the code for our first Spider. Save it in a file named quotes_spider.py under the tutorial/spiders directory in your project:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

def start_requests(self):

urls = [

'http://quotes.toscrape.com/page/1/',

'http://quotes.toscrape.com/page/2/',

]

for url in urls:

#yield 是一个类似 return 的关键字,迭代一次遇到yield时就返回yield后面(右边)的值。重点是:下一次迭代时,从上一次迭代遇到的yield后面的代码(下一行)开始执行。

#简要理解:yield就是 return 返回一个值,并且记住这个返回的位置,下次迭代就从这个位置后(下一行)开始。

yield scrapy.Request(url=url, callback=self.parse)

def parse(self, response):

page = response.url.split("/")[-2]

filename = 'quotes-%s.html' % page

with open(filename, 'wb') as f:

f.write(response.body)

self.log('Saved file %s' % filename)As you can see, our Spider 是 scrapy.Spider 的子类 and defines some attributes and methods:

-

name: 标识了 the Spider. 在一个工程下面name必须唯一, that is, you can’t set the same name for different Spiders. -

start_requests(): 必须返回一个可迭代对象,内容是 Requests (你可以返回 a list of 请求或者 write a generator function) which the Spider will begin to crawl from. 后续的请求会根据这些初始请求be generated successively. -

parse(): a method that 会被调用 to 处理每一条请求下载的响应. The response 形参是TextResponse的一个实例,这个实例会持有网页内容 and has further helpful 方法 to handle it.The

parse()方法通常解析响应, 将爬取下来的数据抽取成 dicts and also 找到新的URLs并追踪,然后从这些新的URLs上面创建新的请求(Request).

How to run our spider

To put our spider to work, go to the project’s 最高一层的目录 and run:

scrapy crawl quotesThis command runs the spider with name quotes that we’ve just added, that will 发送一些请求到 quotes.toscrape.com domain. You will get an output similar to this:

... (omitted for brevity)

2016-12-16 21:24:05 [scrapy.core.engine] INFO: Spider opened

2016-12-16 21:24:05 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2016-12-16 21:24:05 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2016-12-16 21:24:05 [scrapy.core.engine] DEBUG: Crawled (404) <GET http://quotes.toscrape.com/robots.txt> (referer: None)

2016-12-16 21:24:05 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://quotes.toscrape.com/page/1/> (referer: None)

2016-12-16 21:24:05 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://quotes.toscrape.com/page/2/> (referer: None)

2016-12-16 21:24:05 [quotes] DEBUG: Saved file quotes-1.html

2016-12-16 21:24:05 [quotes] DEBUG: Saved file quotes-2.html

2016-12-16 21:24:05 [scrapy.core.engine] INFO: Closing spider (finished)

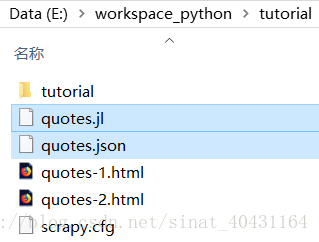

...Now, check the files in the current directory. You should notice that two new files have been created: quotes-1.html and quotes-2.html, with the content for the respective URLs, as our parse method instructs.

Note: If you are wondering why we 还没有解析 HTML yet, hold on, we will cover that soon.

底层 What just happened?

Scrapy schedules 被 start_requests method of the Spider 返回的 scrapy.Request 对象. 每次接收一个响应, it 初始化 Response objects and calls the callback method associated with the request (in this case, the parse method) passing the response as argument.

A shortcut to the start_requests method

Instead of implementing a start_requests() method that 通过URLs生成 scrapy.Request objects, 你可以仅仅定义一个 start_urls 类 attribute with a list of URLs. This list will then be used by the 默认实现 of start_requests() to 创建最初的请求 for your spider:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

'http://quotes.toscrape.com/page/2/',

]

def parse(self, response):

page = response.url.split("/")[-2]

filename = 'quotes-%s.html' % page

with open(filename, 'wb') as f:

f.write(response.body)The parse() method will be called to handle each of the requests for those URLs, even though we haven’t explicitly told Scrapy to do so. This happens because parse() is Scrapy’s default callback method, which is called for requests without an explicitly assigned callback.

Extracting data

The best way to learn how to extract data with Scrapy is trying selectors using the shell Scrapy shell. Run:

scrapy shell 'http://quotes.toscrape.com/page/1/'Note: Remember to always enclose urls in quotes when running Scrapy shell from command-line, otherwise urls containing arguments (ie. & character) will not work.

On Windows, use double quotes instead:

scrapy shell "http://quotes.toscrape.com/page/1/"You will see something like:

[ ... Scrapy log here ... ]

2016-09-19 12:09:27 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://quotes.toscrape.com/page/1/> (referer: None)

[s] Available Scrapy objects:

[s] scrapy scrapy module (contains scrapy.Request, scrapy.Selector, etc)

[s] crawler <scrapy.crawler.Crawler object at 0x7fa91d888c90>

[s] item {}

[s] request <GET http://quotes.toscrape.com/page/1/>

[s] response <200 http://quotes.toscrape.com/page/1/>

[s] settings <scrapy.settings.Settings object at 0x7fa91d888c10>

[s] spider <DefaultSpider 'default' at 0x7fa91c8af990>

[s] Useful shortcuts:

[s] shelp() Shell help (print this help)

[s] fetch(req_or_url) Fetch request (or URL) and update local objects

[s] view(response) View response in a browser

>>>Using the shell, you can try selecting elements using CSS with the 响应对象:

>>> response.css('title')

[<Selector xpath='descendant-or-self::title' data='<title>Quotes to Scrape</title>'>]The 结果 of running response.css('title') is a 类似list的对象,叫做 SelectorList, which 代表了 a list of Selector objects that wrap around (装饰) XML/HTML elements (元素) and 让你可以运行其他 queries to 细粒度地控制数据地选取或提取.

To 从上面的标题提取文本, you can do:

>>> response.css('title::text').extract()

['Quotes to Scrape']There are two things to note here: one is that we’ve added ::text to the CSS query, to mean we 只想选择在 <title> 元素中的 text 元素,且只选择 text 元素. If we don’t specify ::text, we’d get the full title 元素, including its tags:

>>> response.css('title').extract()

['<title>Quotes to Scrape</title>']The other thing is that 调用 .extract() 的结果是一个 list, 因为我们正在处理 SelectorList 的实例. When you know you just want the first result, as in this case, you can do:

>>> response.css('title::text').extract_first()

'Quotes to Scrape'As an alternative, you could’ve written:

>>> response.css('title::text')[0].extract()

'Quotes to Scrape'However, using .extract_first() avoids an IndexError and returns None when it doesn’t find any element matching the selection.

There’s a lesson here: for most scraping code, you want it to be resilient to errors due to things not being found on a page, so that even if some parts fail to be scraped, you can at least get some data.

Besides the extract() and extract_first() methods, you can also use the re() method to extract using regular expressions:

>>> response.css('title::text').re(r'Quotes.*')

['Quotes to Scrape']

>>> response.css('title::text').re(r'Q\w+')

['Quotes']

>>> response.css('title::text').re(r'(\w+) to (\w+)')

['Quotes', 'Scrape']In order to find the proper CSS 选择器, you might find useful opening the response page from the shell in your web browser using view(response). You can use your 浏览器开发者工具 or 插件 like Firebug (see sections about Using Firebug for scraping and Using Firefox for scraping).

Selector Gadget is also a nice tool to 快速找到 CSS 选择器 for visually selected elements, which works in many browsers.

XPath: a brief intro

Besides CSS, Scrapy selectors also support using XPath expressions:

>>> response.xpath('//title')

[<Selector xpath='//title' data='<title>Quotes to Scrape</title>'>]

>>> response.xpath('//title/text()').extract_first()

'Quotes to Scrape'XPath expressions are very powerful, and are the foundation of Scrapy Selectors. In fact, CSS selectors 在底层 are converted to XPath. You can see that if you read closely the text representation of the selector objects in the shell.

While perhaps not as popular as CSS selectors, XPath expressions offer more power because besides navigating the structure, it can also look at the content. Using XPath, you’re able to select things like: select the link that contains the text “Next Page”. This makes XPath very fitting to the task of scraping, and we encourage you to learn XPath even if you already know how to construct CSS selectors, it will make scraping much easier.

We won’t cover much of XPath here, but you can read more about using XPath with Scrapy Selectors here. To learn more about XPath, we recommend this tutorial to learn XPath through examples, and this tutorial to learn “how to think in XPath”.

Extracting quotes and authors

Now that you know a bit about selection and extraction, let’s complete our spider by writing the code to extract the quotes from the web page.

Each quote in http://quotes.toscrape.com is represented by HTML elements that look like this:

<div class="quote">

<span class="text">“The world as we have created it is a process of our

thinking. It cannot be changed without changing our thinking.”</span>

<span>

by <small class="author">Albert Einstein</small>

<a href="/author/Albert-Einstein">(about)</a>

</span>

<div class="tags">

Tags:

<a class="tag" href="/tag/change/page/1/">change</a>

<a class="tag" href="/tag/deep-thoughts/page/1/">deep-thoughts</a>

<a class="tag" href="/tag/thinking/page/1/">thinking</a>

<a class="tag" href="/tag/world/page/1/">world</a>

</div>

</div>Let’s open up scrapy shell and play a bit to find out how to extract the data we want:

scrapy shell 'http://quotes.toscrape.com'We get a list of selectors for the quote HTML elements with:

>>> response.css("div.quote")

[<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>,

<Selector xpath="descendant-or-self::div[@class and contains(concat(' ', normalize-space(@class), ' '), ' quote ')]" data='<div class="quote" itemscope itemtype="h'>]Each of the 选择器 returned by the query above allows us to 运行 further queries over their 子元素. Let’s 将第一个选择器赋值给一个变量, so that we can run our CSS 选择器 directly on a particular quote:

>>> quote = response.css("div.quote")[0]Now, let’s 抽取 title, author and the tags from that quote using the quote object we just created:

>>> title = quote.css("span.text::text").extract_first()

>>> title

'“The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.”'

>>> author = quote.css("small.author::text").extract_first()

>>> author

'Albert Einstein'Given that the tags are a list of strings, we can use the .extract() method to get all of them:

>>> tags = quote.css("div.tags a.tag::text").extract()

>>> tags

['change', 'deep-thoughts', 'thinking', 'world']Having figured out how to extract each bit, we can now iterate over all the quotes elements and put them together into a Python dictionary:

>>> for quote in response.css("div.quote"):

... text = quote.css("span.text::text").extract_first()

... author = quote.css("small.author::text").extract_first()

... tags = quote.css("div.tags a.tag::text").extract()

... print(dict(text=text, author=author, tags=tags))

{'tags': ['change', 'deep-thoughts', 'thinking', 'world'], 'author': 'Albert Einstein', 'text': '“The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.”'}

{'tags': ['abilities', 'choices'], 'author': 'J.K. Rowling', 'text': '“It is our choices, Harry, that show what we truly are, far more than our abilities.”'}

... a few more of these, omitted for brevityExtracting data in our spider

Let’s get back to our spider. Until now, it doesn’t extract any data in particular, just saves the whole HTML page to a local file. Let’s integrate the extraction logic above into our spider.

A Scrapy spider typically generates many dictionaries containing the data extracted from the page. To do that, we use the yield Python keyword in the callback, as you can see below:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

'http://quotes.toscrape.com/page/2/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('small.author::text').extract_first(),

'tags': quote.css('div.tags a.tag::text').extract(),

}If you run this spider, it will output the extracted data with the log:

scrapy crawl quotes

2016-09-19 18:57:19 [scrapy.core.scraper] DEBUG: Scraped from <200 http://quotes.toscrape.com/page/1/>

{'tags': ['life', 'love'], 'author': 'André Gide', 'text': '“It is better to be hated for what you are than to be loved for what you are not.”'}

2016-09-19 18:57:19 [scrapy.core.scraper] DEBUG: Scraped from <200 http://quotes.toscrape.com/page/1/>

{'tags': ['edison', 'failure', 'inspirational', 'paraphrased'], 'author': 'Thomas A. Edison', 'text': "“I have not failed. I've just found 10,000 ways that won't work.”"}Storing the scraped data

The simplest way to store the scraped data is by using Feed exports, with the following command:

scrapy crawl quotes -o quotes.jsonThat will generate an quotes.json file containing all scraped items, serialized in JSON.

[

{"text": "\u201cThe world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.\u201d", "author": "Albert Einstein", "tags": ["change", "deep-thoughts", "thinking", "world"]},

......,

{"text": "\u201cLife is what happens to us while we are making other plans.\u201d", "author": "Allen Saunders", "tags": ["fate", "life", "misattributed-john-lennon", "planning", "plans"]}

]For historic reasons, Scrapy 追加内容到 a given file 而不是 overwriting its contents. If you run this command twice without removing the file before the second time, you’ll end up with a broken JSON file.

You can also use other formats, like JSON Lines:

scrapy crawl quotes -o quotes.jlThe JSON Lines format is useful because it’s stream-like, you can easily append new records to it. It doesn’t have the same problem of JSON when you run twice. Also, as each record is a separate line, you can process big files without having to fit everything in memory, there are tools like JQ to help doing that at the command-line.

{"text": "\u201cThe world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.\u201d", "author": "Albert Einstein", "tags": ["change", "deep-thoughts", "thinking", "world"]}

......

{"text": "\u201cLife is what happens to us while we are making other plans.\u201d", "author": "Allen Saunders", "tags": ["fate", "life", "misattributed-john-lennon", "planning", "plans"]}In small projects (like the one in this tutorial), that should be enough. However, if you want to perform more complex things with the scraped items, you can write an Item Pipeline. A placeholder file for Item Pipelines has been set up for you when the project is created, in tutorial/pipelines.py. Though you don’t need to implement any item pipelines if you just want to store the scraped items.

Following links

Let’s say, instead of just scraping the stuff from the first two pages from http://quotes.toscrape.com, you want quotes from all the pages in the website.

Now that you know how to extract data from pages, let’s see how to follow links from them.

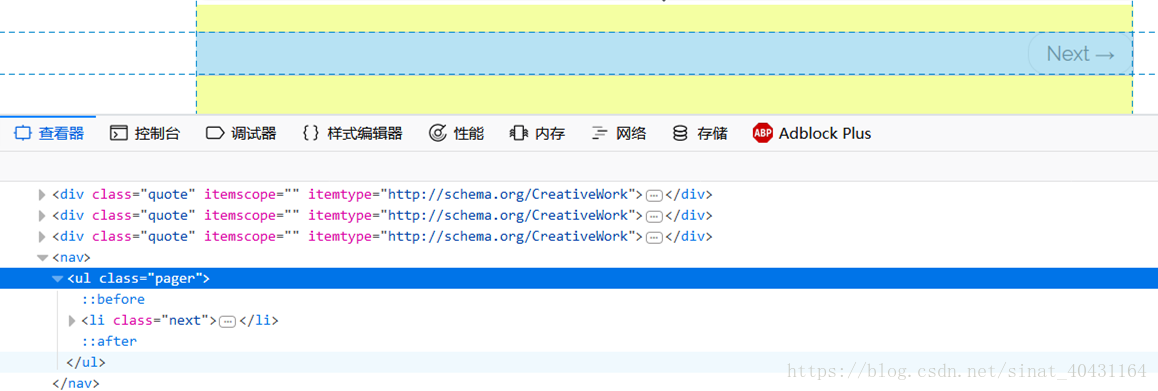

First thing is to extract the link to the page we want to follow. Examining our page, we can see there is a link to the next page with the following 标记:

<ul class="pager">

<li class="next">

<a href="/page/2/">Next <span aria-hidden="true">→</span></a>

</li>

</ul>如果是在Windows中,执行:

scrapy shell "http://quotes.toscrape.com/page/1/"We can try extracting it in the shell:

>>> response.css('li.next a').extract_first()

'<a href="/page/2/">Next <span aria-hidden="true">→</span></a>'This gets the anchor element (<a>), but we want the attribute href. For that, Scrapy supports a CSS extension that let’s you select the attribute contents, like this:

>>> response.css('li.next a::attr(href)').extract_first()

'/page/2/'Let’s see now our spider modified to recursively follow the link to the next page, extracting data from it:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('small.author::text').extract_first(),

'tags': quote.css('div.tags a.tag::text').extract(),

}

next_page = response.css('li.next a::attr(href)').extract_first()

if next_page is not None:

next_page = response.urljoin(next_page)

yield scrapy.Request(next_page, callback=self.parse)scrapy crawl quotes -o quotes2.jlNow, after extracting the data, the parse() method looks for the link to the next page, builds a full absolute URL using the urljoin() method (since the links can be relative) and yields 一个新的请求 to the next page, registering itself as 回调函数 to handle the data extraction for the next page and to keep the crawling going through all the pages.

What you see here is Scrapy’s mechanism of following links: when you yield a Request in a callback method, Scrapy will schedule 这个请求被发送 and 注册一个回调函数 to be executed 当这个请求结束的时候.

Using this, you can build complex crawlers that follow links according to rules you define, and extract different kinds of data depending on the page it’s visiting.

In our example, it creates a sort of loop, following all the links to the next page until it doesn’t find one – handy for crawling blogs, forums and other sites with pagination (分页).

A shortcut for creating Requests

As a shortcut for creating Request objects you can use response.follow:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('span small::text').extract_first(),

'tags': quote.css('div.tags a.tag::text').extract(),

}

next_page = response.css('li.next a::attr(href)').extract_first()

if next_page is not None:

yield response.follow(next_page, callback=self.parse)Unlike scrapy.Request, response.follow supports relative URLs directly - no need to call urljoin. Note that response.follow just returns a Request instance; you still have to yield this Request.

You can also pass a 选择器 to response.follow instead of a string; this selector should extract necessary attributes:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('span small::text').extract_first(),

'tags': quote.css('div.tags a.tag::text').extract(),

}

#注意此处变化

for href in response.css('li.next a::attr(href)'):

yield response.follow(href, callback=self.parse)For <a> elements there is a shortcut: response.follow uses their href attribute automatically. So the code can be shortened further:

import scrapy

class QuotesSpider(scrapy.Spider):

name = "quotes"

start_urls = [

'http://quotes.toscrape.com/page/1/',

]

def parse(self, response):

for quote in response.css('div.quote'):

yield {

'text': quote.css('span.text::text').extract_first(),

'author': quote.css('span small::text').extract_first(),

'tags': quote.css('div.tags a.tag::text').extract(),

}

#注意此处变化

for a in response.css('li.next a'):

yield response.follow(a, callback=self.parse)Note: response.follow(response.css('li.next a')) is not valid because response.css returns a list-like object with selectors for all results, not a single selector. A for 循环 like in the example above, or response.follow(response.css('li.next a')[0]) is fine.

More examples and patterns (略)

Using spider arguments (略)

Next steps

This tutorial covered only the basics of Scrapy, but there’s a lot of other features not mentioned here. Check the What else? section in Scrapy at a glance chapter for a quick overview of the most important ones.

You can continue from the section Basic concepts to know more about the command-line tool, spiders, selectors and other things the tutorial hasn’t covered like modeling the scraped data. If you prefer to play with an example project, check the Examples section.