Kubernetes: Container Introduction and Installation (Part 1)

Tags (space-separated): Kubernetes series

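- 1: Kubernetes introduction and features

- 2: Basic Kubernetes object concepts

- 3: Preparing the Kubernetes deployment environment

- 4: Deploying the Kubernetes cluster

- 5: Running a test example on Kubernetes

- 6: Configuring the Kubernetes UI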

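1: Kubernetes introduction and features

1.1 Kubernetes overview

Kubernetes is a container cluster management system that Google open-sourced in June 2014. It is written in Go and is also known as K8S.

K8S is derived from Borg, Google's internal container cluster management system, which had been running in large-scale production at Google for about ten years.

K8S is mainly used to automate the deployment, scaling, and management of containerized applications. It provides a complete set of capabilities, including resource scheduling, deployment management, service discovery, scaling up and down, and monitoring.

Kubernetes v1.0 was released in July 2015. As of January 27, 2018, the latest stable version was v1.9.2.

The goal of Kubernetes is to make deploying containerized applications simple and efficient.

Official website: www.kubernetes.io

1.2 Main Kubernetes features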

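Data volumes

Containers in a Pod can share data with each other through data volumes.

Application health checks

A service inside a container may hang and stop handling requests. Health-check policies can be configured to keep the application robust.

Replicating application instances

A controller maintains the number of Pod replicas, ensuring that a Pod, or a group of identical Pods, is always available in the desired number.

Autoscaling

Automatically scales the number of Pod replicas according to a configured metric (such as CPU utilization).

Service discovery

Programs in containers can find the access address of a Pod entry point through environment variables or the DNS add-on.

Load balancing

A set of Pod replicas is assigned a private cluster IP address, and requests are load-balanced to the backend containers. Other Pods inside the cluster can reach the application through this ClusterIP.

Rolling updates

Services are updated without interruption, one Pod at a time, rather than deleting the whole service at once.

Service orchestration

Services are deployed from descriptive files, which makes application deployment more efficient.

Resource monitoring

The Node components integrate the cAdvisor resource collection tool. Heapster aggregates resource data from every node in the cluster and stores it in the InfluxDB time-series database, and Grafana displays it.

Authentication and authorization

Supports authentication and authorization policies such as role-based access control (RBAC).

2: Basic Kubernetes object concepts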

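2.1 Higher-level abstractions built on the basic objects

ReplicaSet

The next generation of the Replication Controller. It ensures that the specified number of Pod replicas is running at any given time and provides features such as declarative updates.

The only difference between RC and RS is label selector support: RS supports the newer set-based selectors, while RC supports only equality-based selectors.

Deployment

A Deployment is a higher-level API object. It manages ReplicaSets and Pods and provides features such as declarative updates.

The official recommendation is to manage ReplicaSets through Deployments rather than using ReplicaSets directly, which means you may never need to manipulate a ReplicaSet object yourself.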
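To make the selector difference concrete, here is a minimal sketch of a Deployment manifest that uses a set-based selector, written to a temporary file from the shell. The name `web`, the label values, and the `nginx` image are illustrative assumptions and do not come from this article's cluster.

```shell
#!/bin/sh
# Illustrative only: the name "web", the label values and the image are
# assumptions made for this sketch.
manifest=$(mktemp)
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  selector:
    # Set-based selector: available to ReplicaSet/Deployment,
    # not to the older ReplicationController.
    matchExpressions:
      - key: app
        operator: In
        values: ["web", "web-canary"]
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
EOF
echo "manifest written to $manifest"
# On a working cluster it could then be applied with:
#   kubectl apply -f "$manifest"
```

The Deployment creates and owns a ReplicaSet for this Pod template, so the ReplicaSet itself never has to be written by hand.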

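StatefulSet

StatefulSet suits stateful applications that need persistence. It provides stable, unique network identifiers, persistent storage, and ordered deployment, scaling, deletion, and rolling updates.

DaemonSet

A DaemonSet ensures that all (or some) nodes run a copy of the same Pod. When a node joins the Kubernetes cluster, the Pod is scheduled onto it; when a node is removed from the cluster, the DaemonSet's Pod on it is deleted. Deleting a DaemonSet cleans up all the Pods it created.

Job

A one-off task. When it finishes, the Pod ends and no new container is started. Tasks can also be run on a schedule.

2.2 System architecture and component functions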

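Master components:

kube-apiserver

The Kubernetes API and the unified entry point to the cluster, coordinating all components. It exposes its interface as an HTTP API. Every create, read, update, delete, and watch operation on object resources is handled by the API server and then persisted to etcd.

kube-controller-manager

Handles the routine background tasks in the cluster. Each resource has a corresponding controller, and the controller manager is responsible for managing these controllers.

kube-scheduler

Selects a Node for each newly created Pod according to the scheduling algorithm.

Node components:

kubelet

The kubelet is the master's agent on each Node. It manages the lifecycle of the containers running on that machine, for example creating containers, mounting data volumes for Pods, downloading secrets, and collecting container and node status. The kubelet turns each Pod into a set of containers.

kube-proxy

Implements the Pod network proxy on each Node, maintaining network rules and layer-4 load balancing.

docker or rocket/rkt

Runs the containers.

Third-party services:

etcd

A distributed key-value store used to hold cluster state, such as Pod and Service object information.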

3: Installing Kubernetes

3.1 Cluster plan

System:

CentOS 7.5 x64

Host plan:

master:

172.17.100.11:

kube-apiserver

kube-controller-manager

kube-scheduler

etcd

slave1:

172.17.100.12

kubelet

kube-proxy

docker

flannel

etcd

slave2:

172.17.100.13

kubelet

kube-proxy

docker

flannel

etcd

3.2 Installing Kubernetes

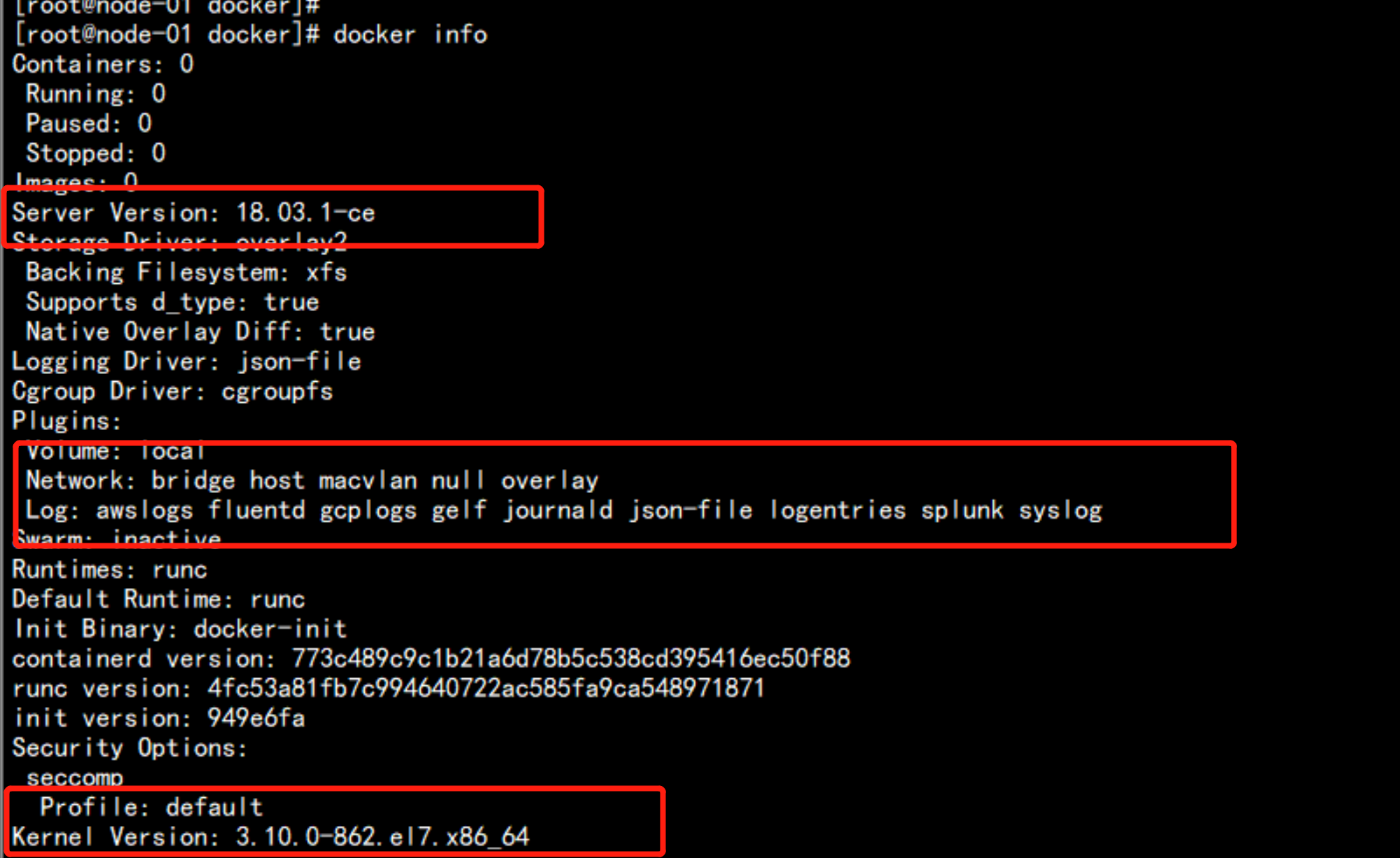

3.2.1 Installing Docker on the cluster nodes

yum install -y yum-utils device-mapper-persistent-data lvm2

# yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

# yum install docker-ce

# cat << EOF > /etc/docker/daemon.json

{

"registry-mirrors": [ "https://registry.docker-cn.com"],

"insecure-registries":["192.168.0.210:5000"]

}

EOF

# systemctl start docker

# systemctl enable docker

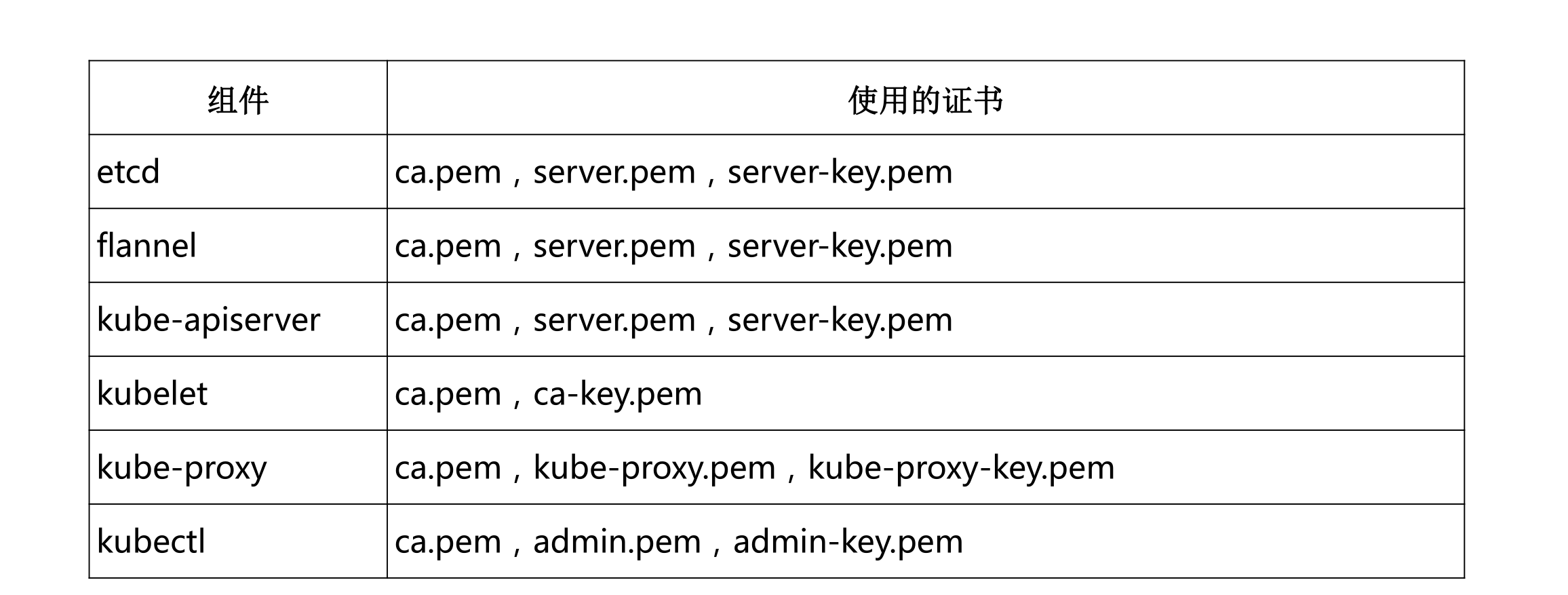

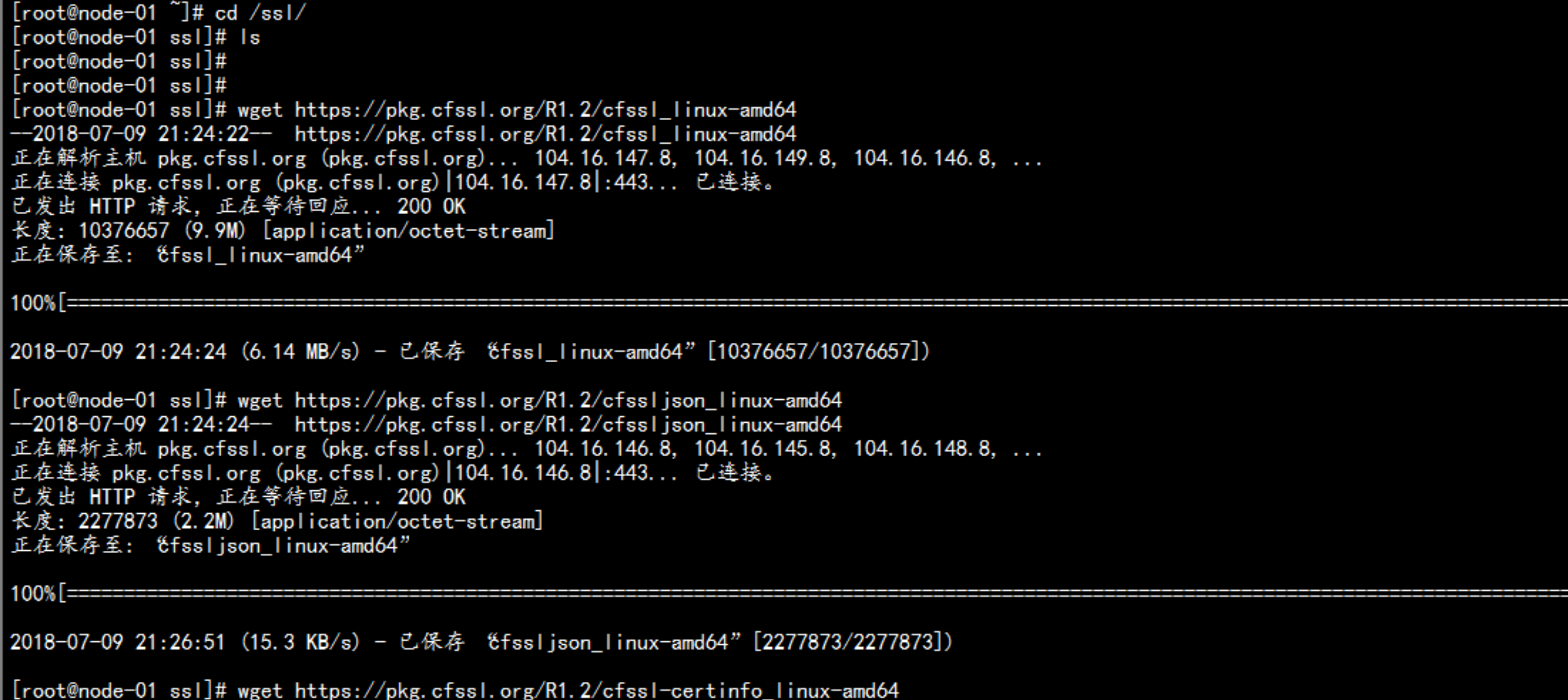

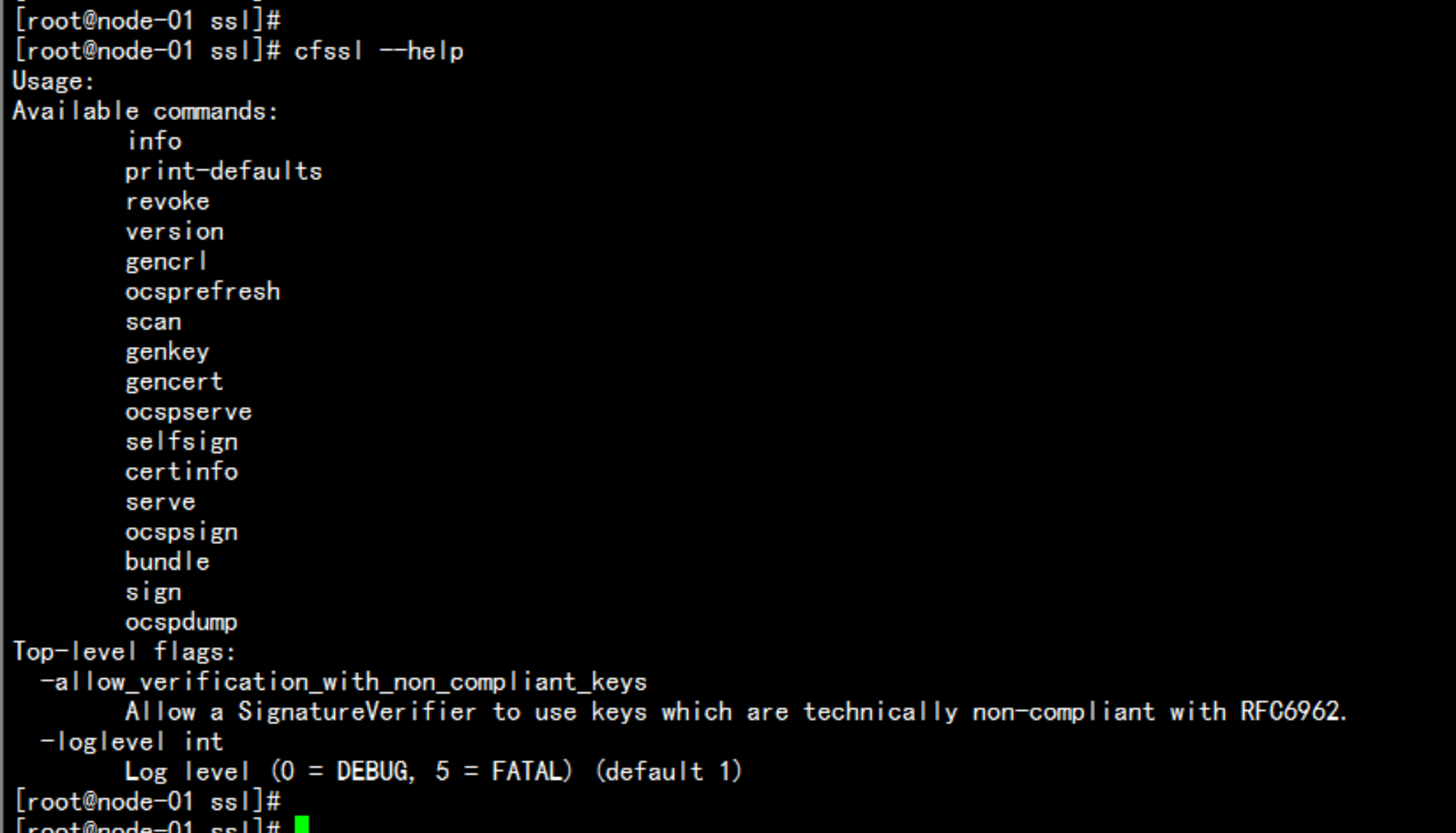

3.2.2 Cluster deployment: self-signed TLS certificates

Install the certificate generation tool cfssl:

mkdir /ssl

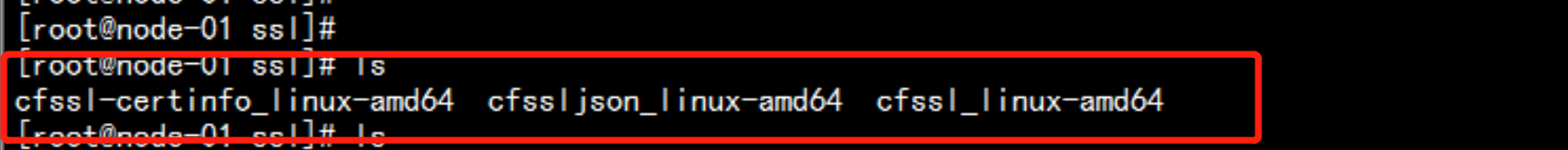

cd /ssl

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

Prepare the certificate script:

cd /ssl/Deploy/

chmod +x certificate.sh

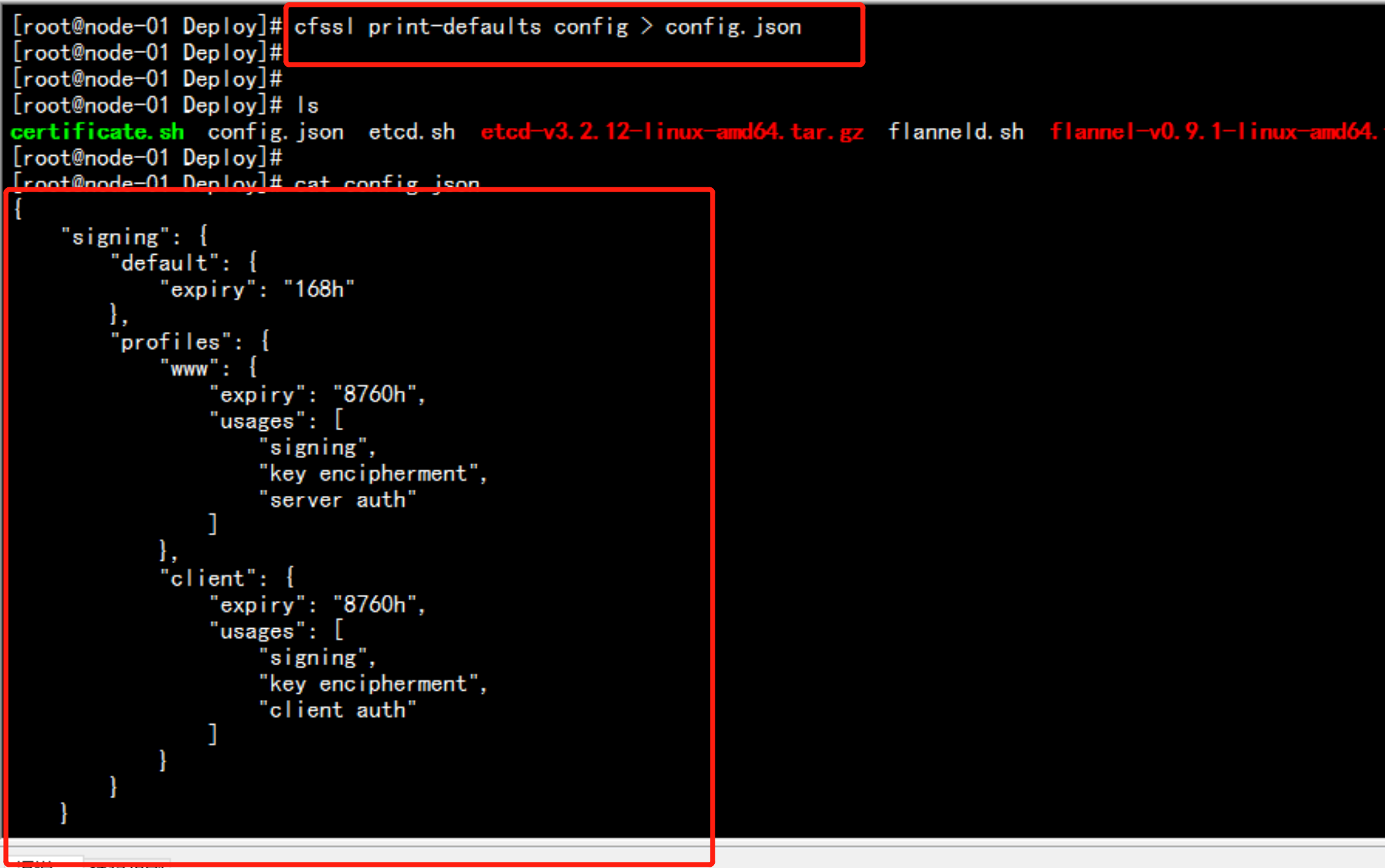

Generate the certificate template files:

cfssl print-defaults config > config.json

cat config.json

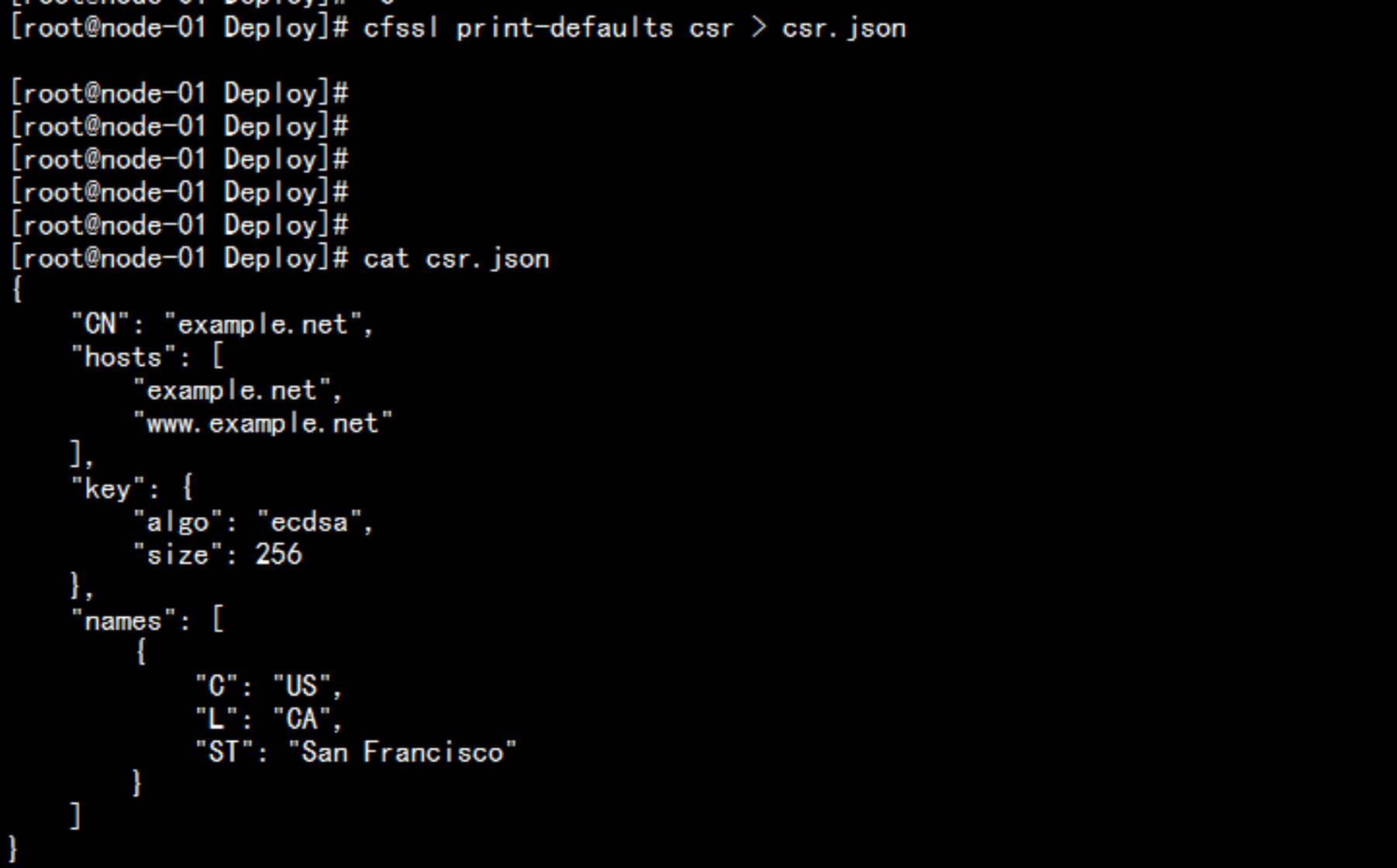

cfssl print-defaults csr > csr.json ### template for the certificate signing request

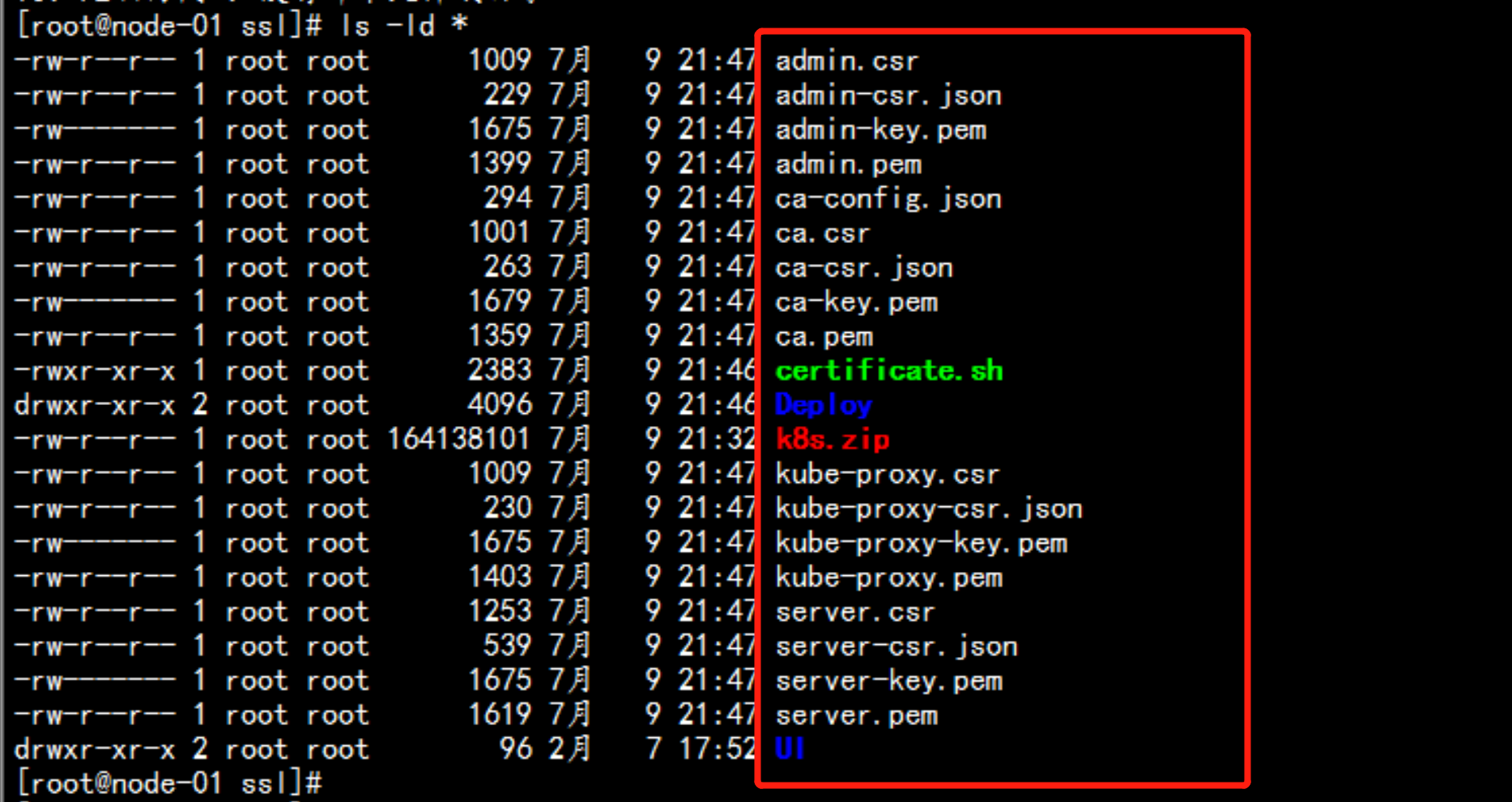

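Review and run the certificate script, then remove every file except the generated .pem files:

cat certificate.sh

./certificate.sh

ls | grep -v pem | xargs -I {} rm -f {}

The certificate.sh file: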

---

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"172.17.100.11",

"172.17.100.12",

"172.17.100.13",

"10.10.10.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

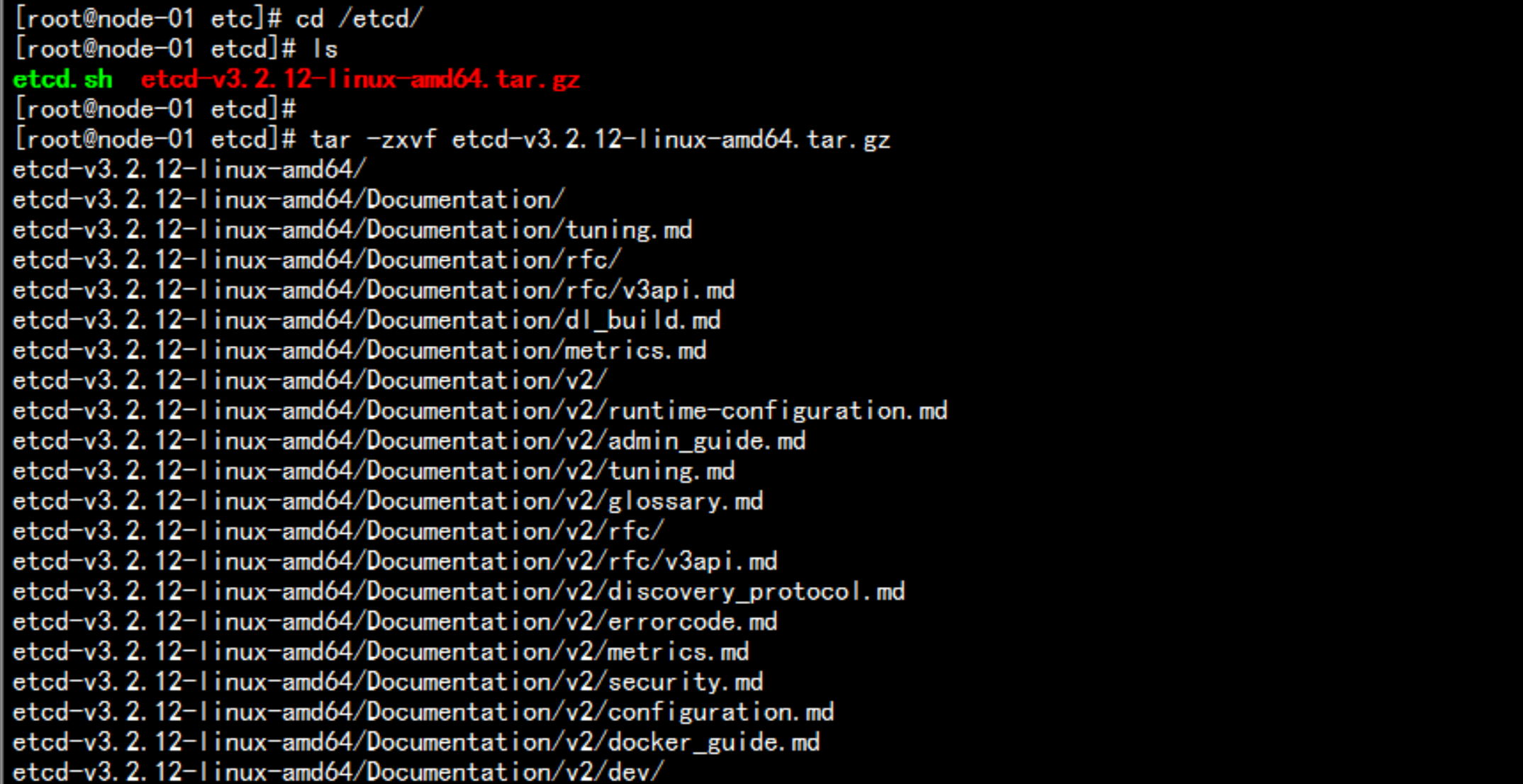
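Because etcd and the API server both present server.pem, every address they are reached on has to appear in the `hosts` list of server-csr.json. The sketch below checks this before signing. It runs against a small sample file that only mimics the `hosts` section, so it can be tried on its own; pointing it at the real server-csr.json is left as an adaptation.

```shell
#!/bin/sh
# Sketch: confirm that every planned address is listed in the "hosts" array
# of a CSR file. The sample file only mimics the hosts section of
# server-csr.json so that the check is self-contained.
workdir=$(mktemp -d)
cat > "$workdir/server-csr.json" <<'EOF'
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "172.17.100.11",
    "172.17.100.12",
    "172.17.100.13",
    "10.10.10.1"
  ]
}
EOF

missing=""
for ip in 172.17.100.11 172.17.100.12 172.17.100.13 10.10.10.1; do
  # -F: fixed string, -q: quiet; the quotes avoid matching a longer address
  if ! grep -qF "\"$ip\"" "$workdir/server-csr.json"; then
    missing="$missing $ip"
  fi
done

if [ -z "$missing" ]; then
  echo "all planned addresses are present"
else
  echo "missing from hosts:$missing"
fi
```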

---

3.2.3 Deploying the etcd cluster

Binary package download: https://github.com/coreos/etcd/releases/tag/v3.2.12

mkdir -p /opt/kubernetes

mkdir -p /opt/kubernetes/{bin,cfg,ssl}

cd /etcd/

tar -zxvf etcd-v3.2.12-linux-amd64.tar.gz

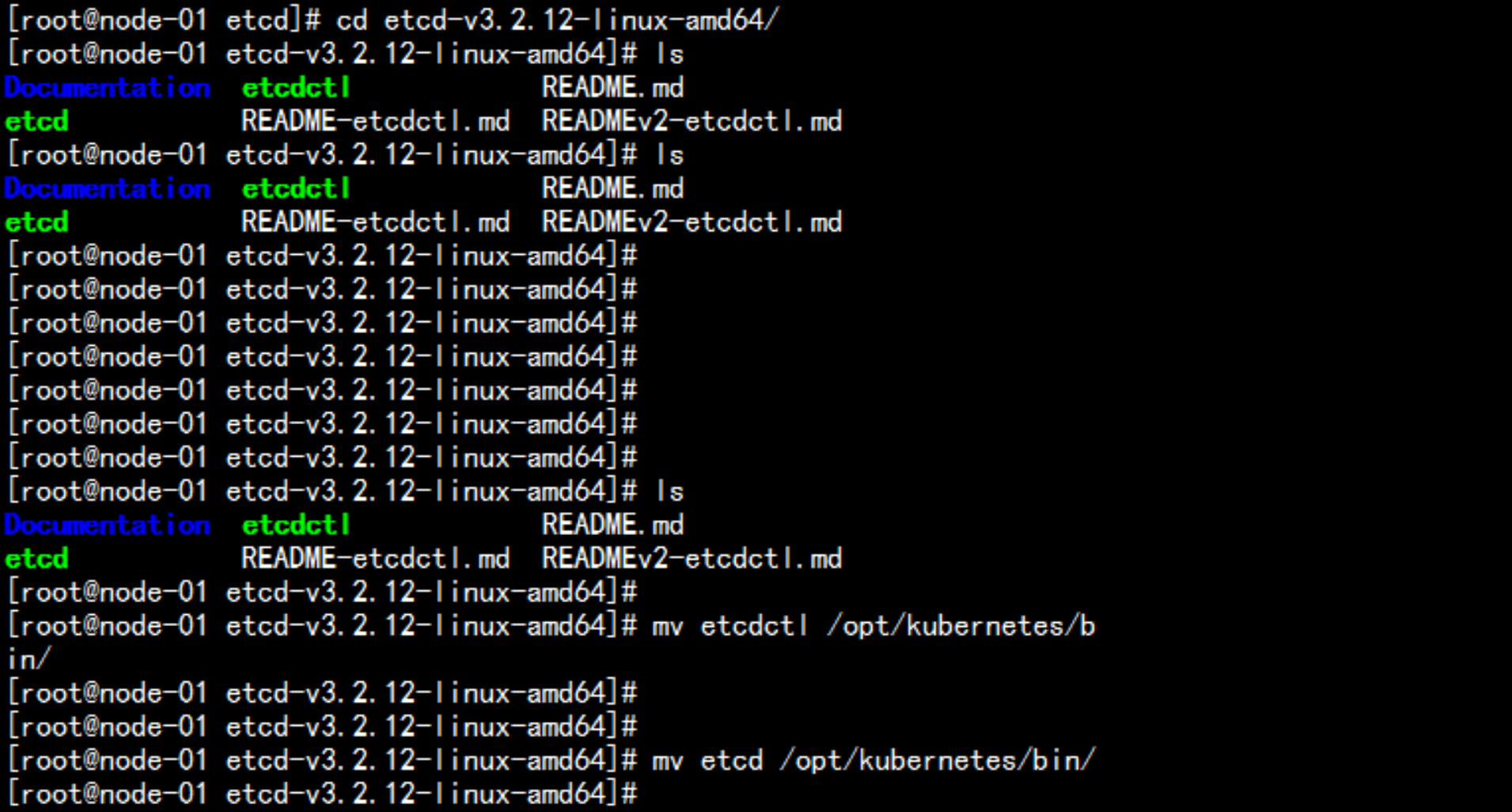

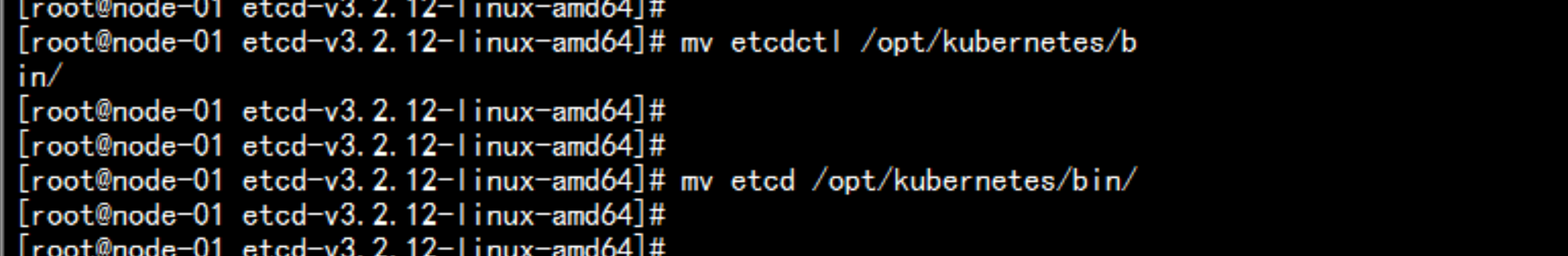

cd etcd-v3.2.12-linux-amd64/

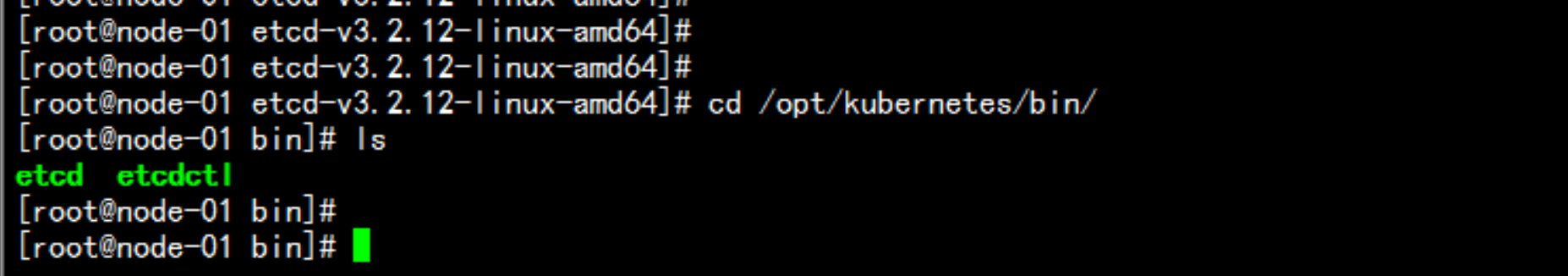

mv etcdctl /opt/kubernetes/bin/

mv etcd /opt/kubernetes/bin/

vim /opt/kubernetes/cfg/etcd

---

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.17.100.11:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.17.100.11:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.17.100.11:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.17.100.11:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://172.17.100.11:2380,etcd02=https://172.17.100.12:2380,etcd03=https://172.17.100.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

---

vim /usr/lib/systemd/system/etcd.service

---

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/opt/kubernetes/cfg/etcd

ExecStart=/opt/kubernetes/bin/etcd \

--name=${ETCD_NAME} \

--data-dir=${ETCD_DATA_DIR} \

--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=/opt/kubernetes/ssl/server.pem \

--key-file=/opt/kubernetes/ssl/server-key.pem \

--peer-cert-file=/opt/kubernetes/ssl/server.pem \

--peer-key-file=/opt/kubernetes/ssl/server-key.pem \

--trusted-ca-file=/opt/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/opt/kubernetes/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

---

cd /ssl/pem/

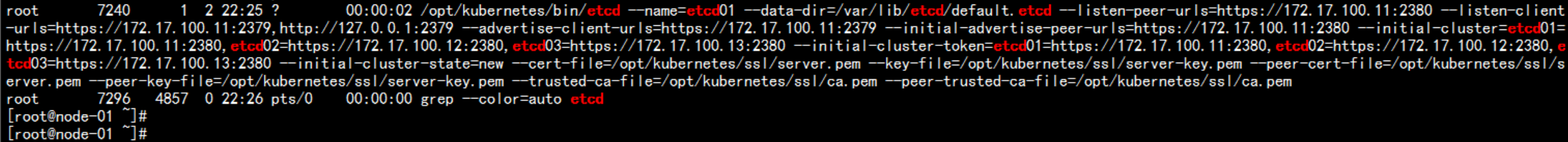

cp -ap *pem /opt/kubernetes/ssl/

Start etcd:

systemctl start etcd

ps -ef |grep etcd

scp -r /opt/kubernetes 172.17.100.12:/opt/

scp -r /opt/kubernetes 172.17.100.13:/opt/

scp /usr/lib/systemd/system/etcd.service 172.17.100.12:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service 172.17.100.13:/usr/lib/systemd/system/

On 172.17.100.12, edit the configuration file:

vim /opt/kubernetes/cfg/etcd

---

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.17.100.12:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.17.100.12:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.17.100.12:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.17.100.12:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://172.17.100.11:2380,etcd02=https://172.17.100.12:2380,etcd03=https://172.17.100.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

---

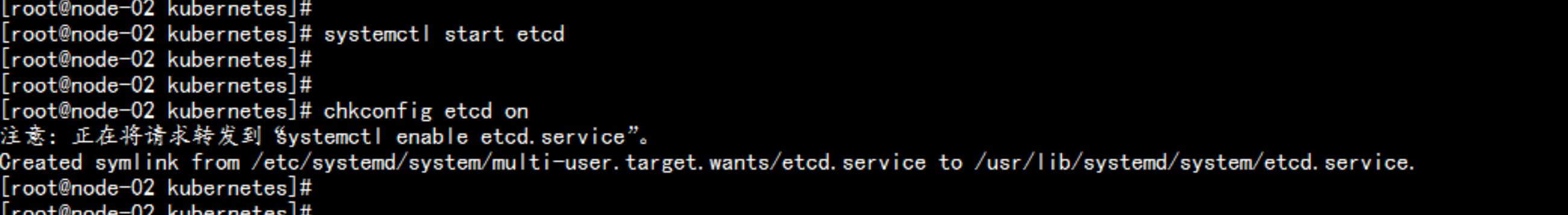

systemctl start etcd

chkconfig etcd on

On 172.17.100.13, edit the configuration file:

vim /opt/kubernetes/cfg/etcd

---

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.17.100.13:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.17.100.13:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.17.100.13:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.17.100.13:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://172.17.100.11:2380,etcd02=https://172.17.100.12:2380,etcd03=https://172.17.100.13:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

---

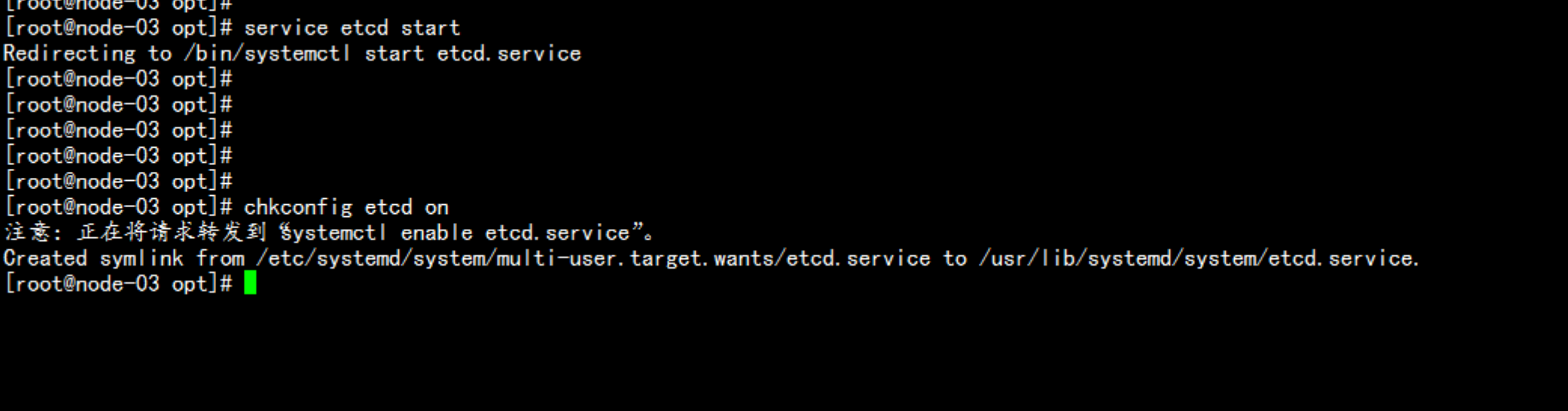

systemctl start etcd

chkconfig etcd on
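The three etcd configuration files differ only in the member name and the IP address. As a sketch of that pattern, the hypothetical helper `write_etcd_cfg` below renders the file for any member. It writes into a temporary directory rather than /opt/kubernetes/cfg/etcd, so it can be tried without touching a real node.

```shell
#!/bin/sh
# Sketch: render the etcd environment file for one member.
# write_etcd_cfg is a hypothetical helper; the output goes to a temp
# directory here, whereas the article edits /opt/kubernetes/cfg/etcd.
CLUSTER="etcd01=https://172.17.100.11:2380,etcd02=https://172.17.100.12:2380,etcd03=https://172.17.100.13:2380"

write_etcd_cfg() {
  name=$1; ip=$2; out=$3
  cat > "$out" <<EOF
#[Member]
ETCD_NAME="$name"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="https://$ip:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$ip:2379"
ETCD_INITIAL_CLUSTER="$CLUSTER"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

outdir=$(mktemp -d)
write_etcd_cfg etcd01 172.17.100.11 "$outdir/etcd01"
write_etcd_cfg etcd02 172.17.100.12 "$outdir/etcd02"
write_etcd_cfg etcd03 172.17.100.13 "$outdir/etcd03"

# Show which member name ended up in which file
grep ETCD_NAME "$outdir"/etcd0*
```

Generating the files from one template keeps ETCD_INITIAL_CLUSTER identical on all members, which is easy to get wrong when editing each copy by hand.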

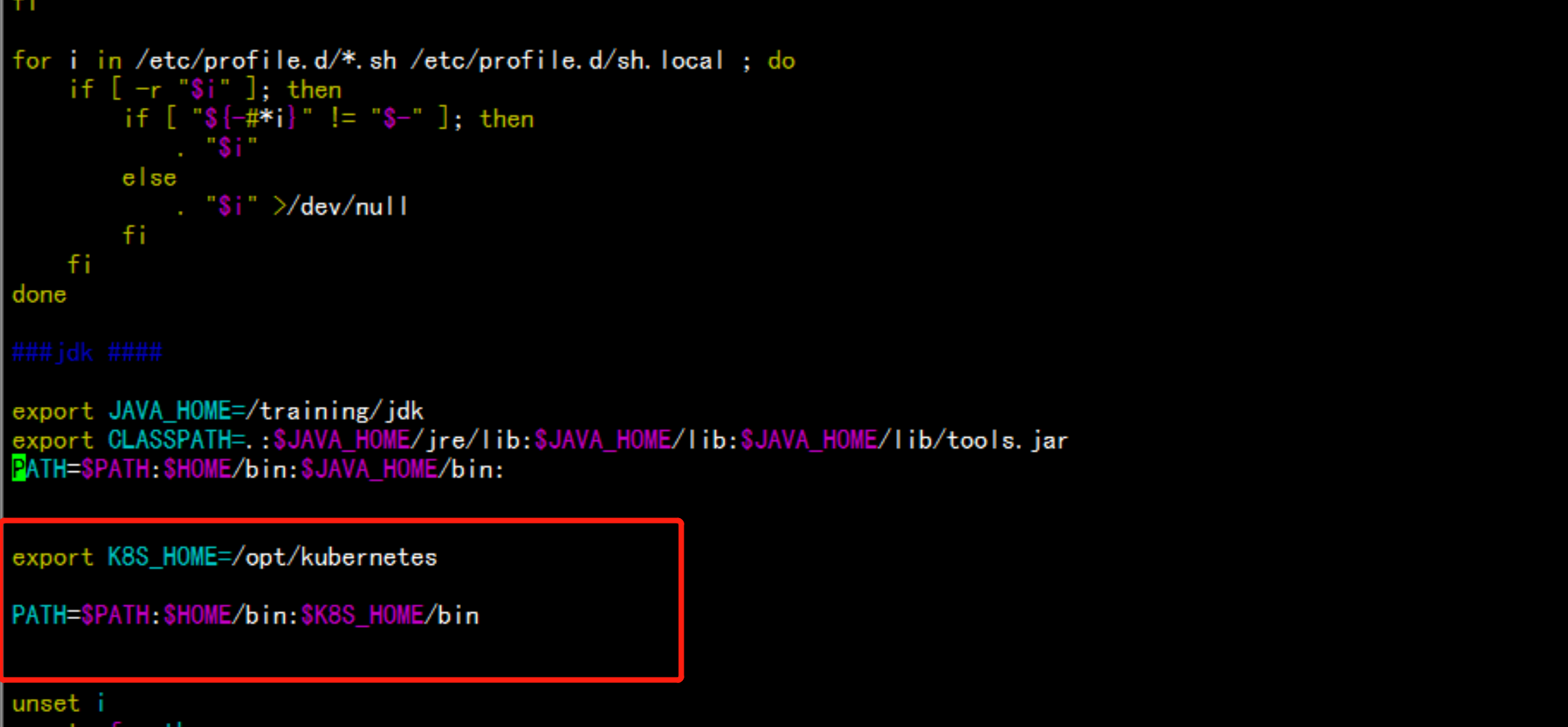

vim /etc/profile

export K8S_HOME=/opt/kubernetes

PATH=$PATH:$HOME/bin:$K8S_HOME/bin

---

source /etc/profile

---

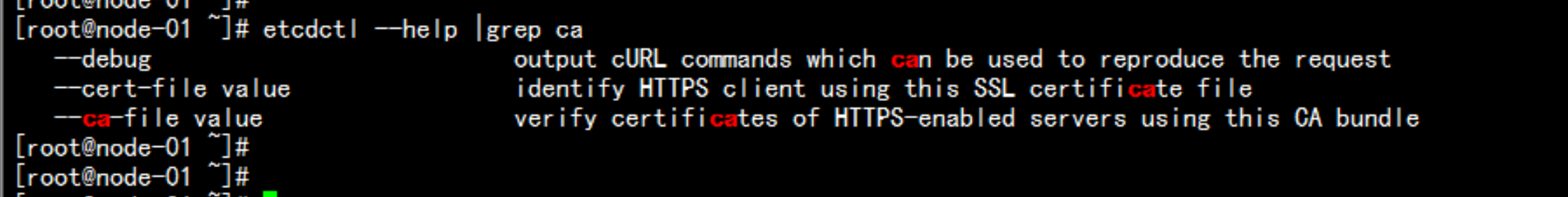

etcdctl --help

etcdctl --help |grep ca

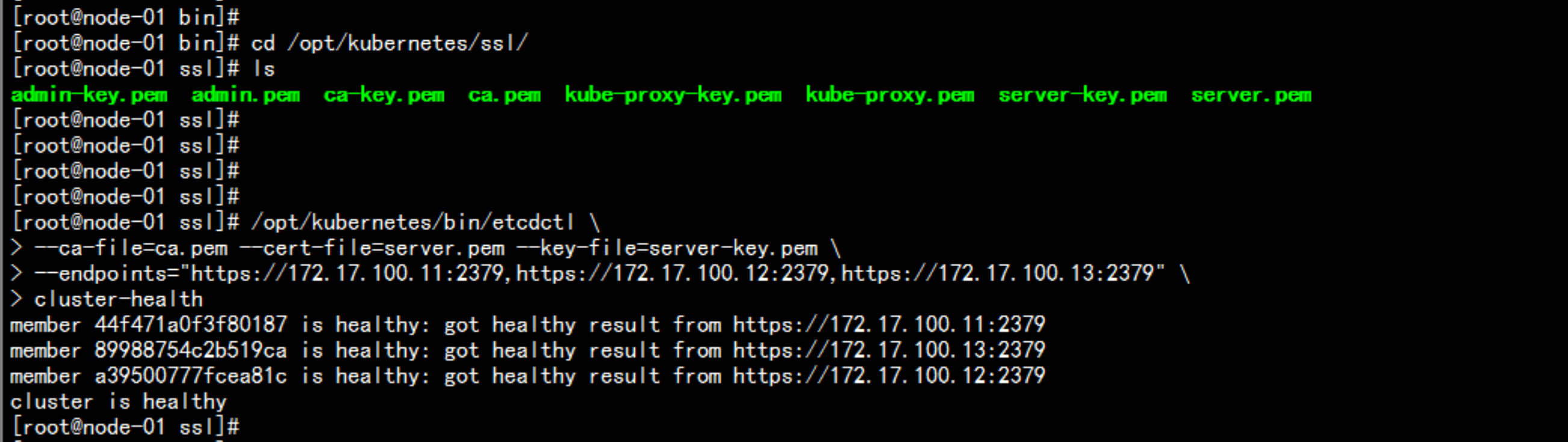

Check the cluster status:

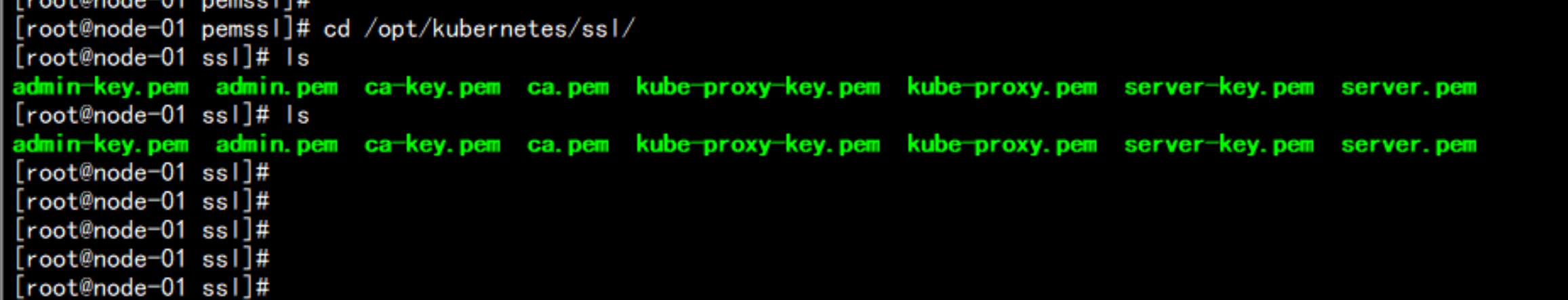

cd /opt/kubernetes/ssl/

# /opt/kubernetes/bin/etcdctl \

--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \

--endpoints="https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379" \

cluster-health

3.2.4 Cluster deployment: deploying the Flannel network

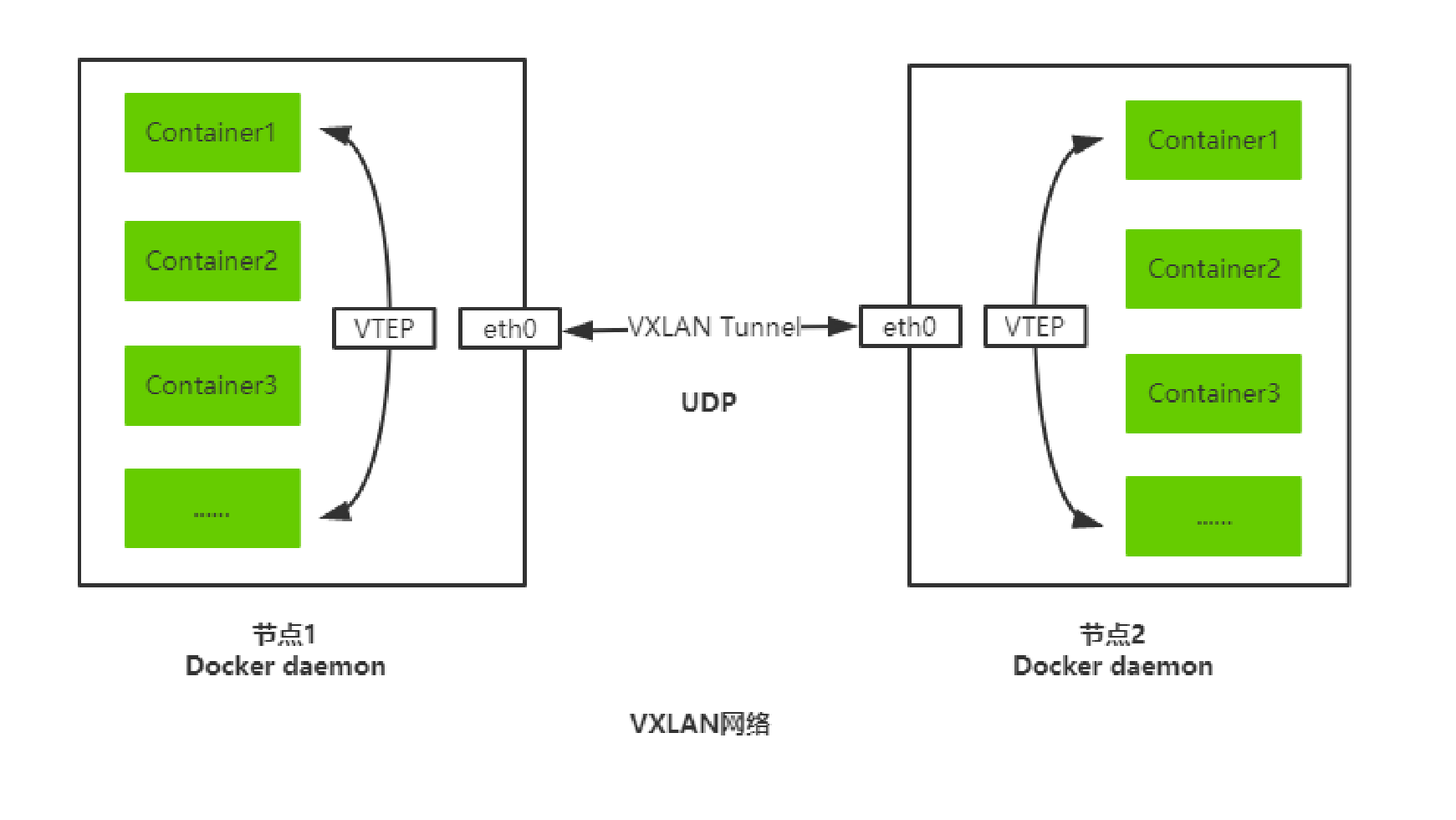

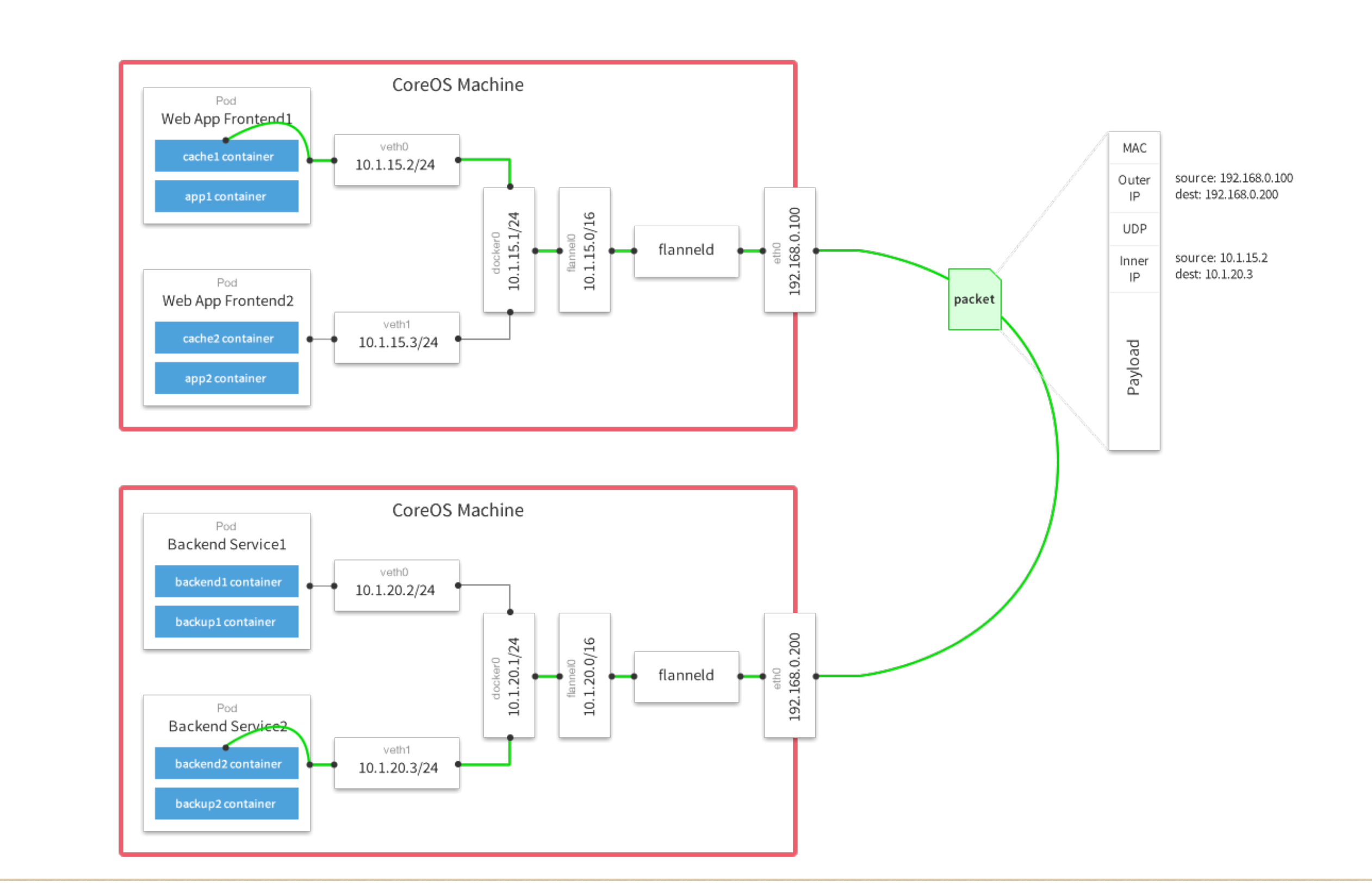

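Overlay Network: a virtual network layered on top of the underlying network, in which hosts are connected by virtual links.

VXLAN: encapsulates the original packet in UDP, uses the IP/MAC of the underlying network as the outer header, and sends it over Ethernet. At the destination, the tunnel endpoint decapsulates it and delivers the data to the target address.

Flannel: one kind of overlay network. It also encapsulates the original packet inside another network packet for routing, forwarding, and communication. It currently supports UDP, VXLAN, AWS VPC, GCE routes, and other forwarding backends.

Other mainstream solutions for multi-host container networking: tunnel-based (Weave, OpenvSwitch) and route-based (Calico).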

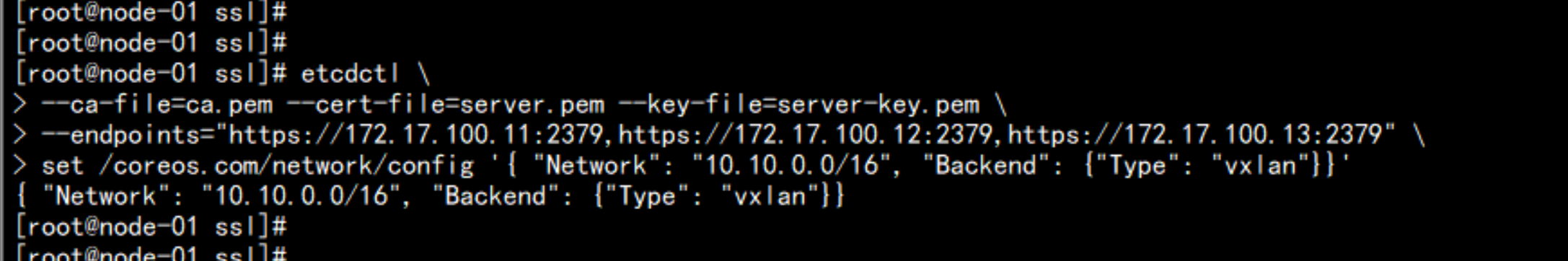

1) Write the allocated subnet range into etcd for flanneld to use

# /opt/kubernetes/bin/etcdctl \

--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \

--endpoints="https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379" \

set /coreos.com/network/config '{ "Network": "10.0.0.0/16", "Backend": {"Type": "vxlan"}}'

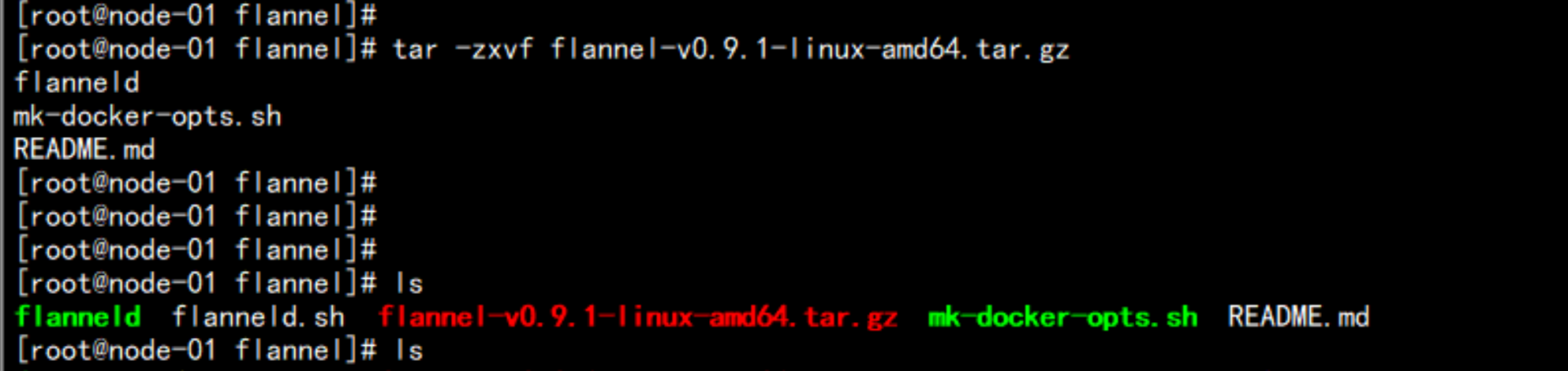
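Flannel carves a smaller subnet for each host out of the `Network` range written above. The arithmetic sketch below shows how many hosts that range can serve. It assumes Flannel's default per-host subnet length of /24, since the configuration stored in etcd does not set `SubnetLen`.

```shell
#!/bin/sh
# Sketch: how many per-host subnets fit into the Flannel "Network" range.
# Assumes the default per-host subnet length of /24 (SubnetLen is not set
# in the config written to etcd).
network_prefix=16   # from "Network": "10.0.0.0/16"
subnet_len=24       # assumed default SubnetLen

host_subnets=$(( 1 << (subnet_len - network_prefix) ))
addresses_per_subnet=$(( 1 << (32 - subnet_len) ))

echo "per-host subnets available: $host_subnets"
echo "addresses in each subnet:   $addresses_per_subnet"
```

With a /16 network this gives 256 host subnets of 256 addresses each. The same calculation for a /8 network gives 65536 host subnets.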

2) Download the binary package

# wget https://github.com/coreos/flannel/releases/download/v0.9.1/flannel-v0.9.1-linux-amd64.tar.gz

3) Configure Flannel

4) Manage Flannel with systemd

5) Configure Docker to start with the assigned subnet

6) Deploy:

mkdir /root/flannel

tar -zxvf flannel-v0.9.1-linux-amd64.tar.gz

ls -ld *

cp -p flanneld mk-docker-opts.sh /opt/kubernetes/bin/

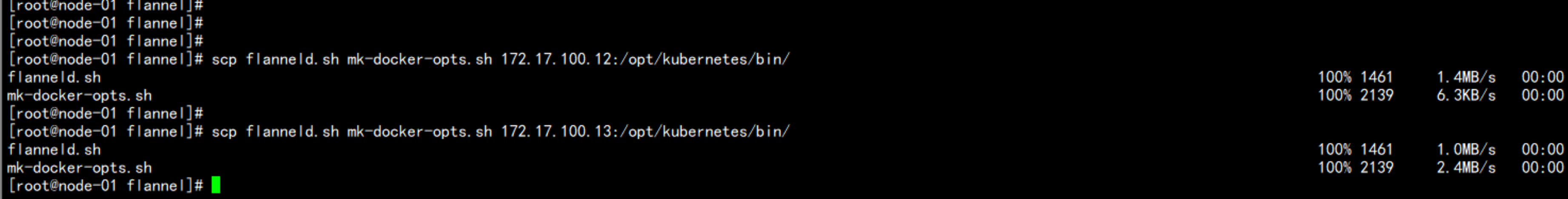

scp flanneld mk-docker-opts.sh 172.17.100.12:/opt/kubernetes/bin/

scp flanneld mk-docker-opts.sh 172.17.100.13:/opt/kubernetes/bin/

The Flannel configuration script:

---

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/kubernetes/ssl/ca.pem \

-etcd-certfile=/opt/kubernetes/ssl/server.pem \

-etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

---

Configuration file on the node 172.17.100.12:

---

vim /opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379 \

-etcd-cafile=/opt/kubernetes/ssl/ca.pem \

-etcd-certfile=/opt/kubernetes/ssl/server.pem \

-etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"

---

Configure the flanneld systemd unit file:

vim /usr/lib/systemd/system/flanneld.service

---

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

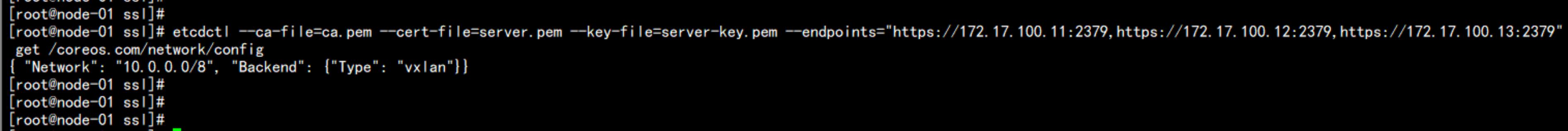

---

Run on 172.17.100.11:

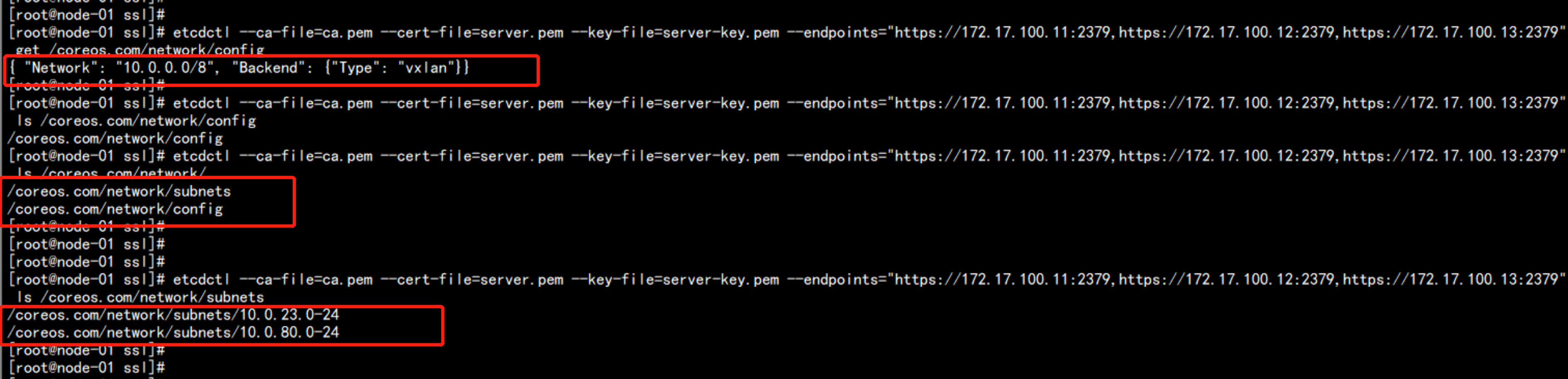

Set up the VXLAN network:

cd /opt/kubernetes/ssl/

etcdctl \

--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \

--endpoints="https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379" \

set /coreos.com/network/config '{ "Network": "10.0.0.0/8", "Backend": {"Type": "vxlan"}}'

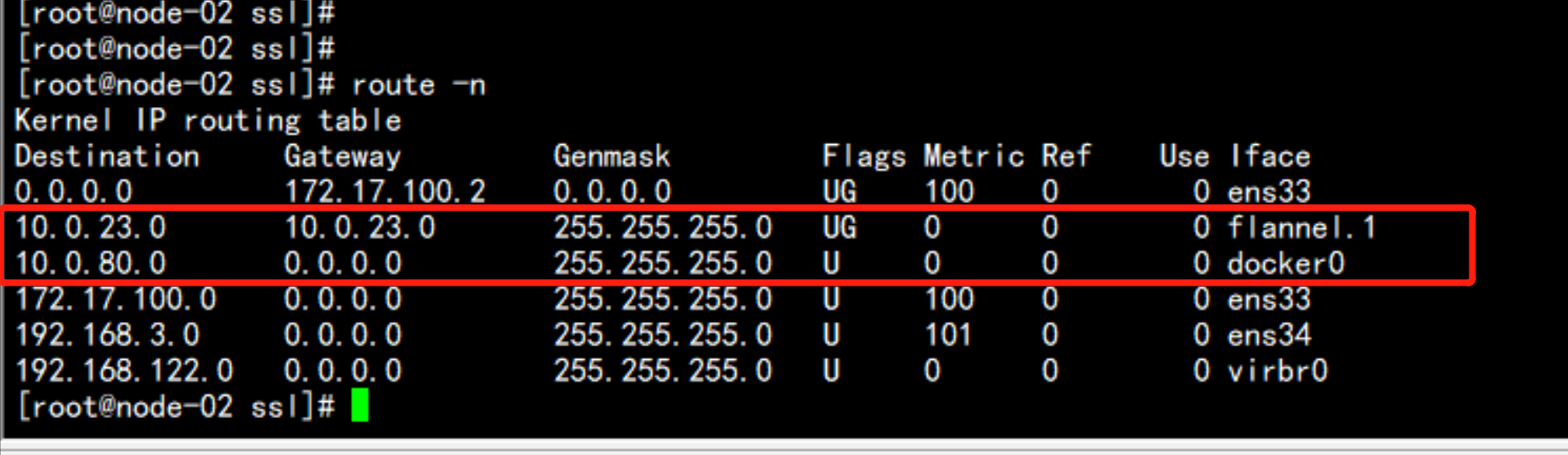

Check on the node machines:

172.17.100.12:

cd /opt/kubernetes/ssl/

etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379" get /coreos.com/network/config

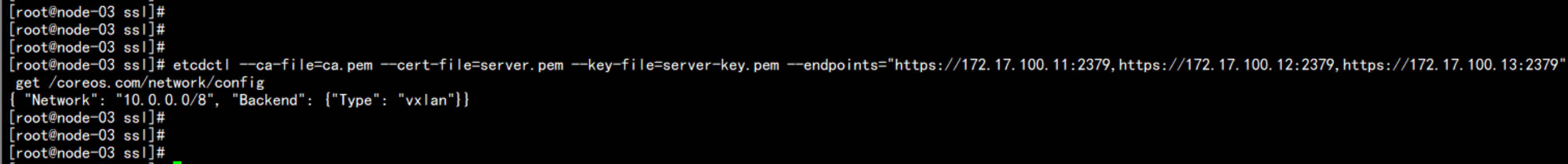

172.17.100.13

cd /opt/kubernetes/ssl/

etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379" get /coreos.com/network/config

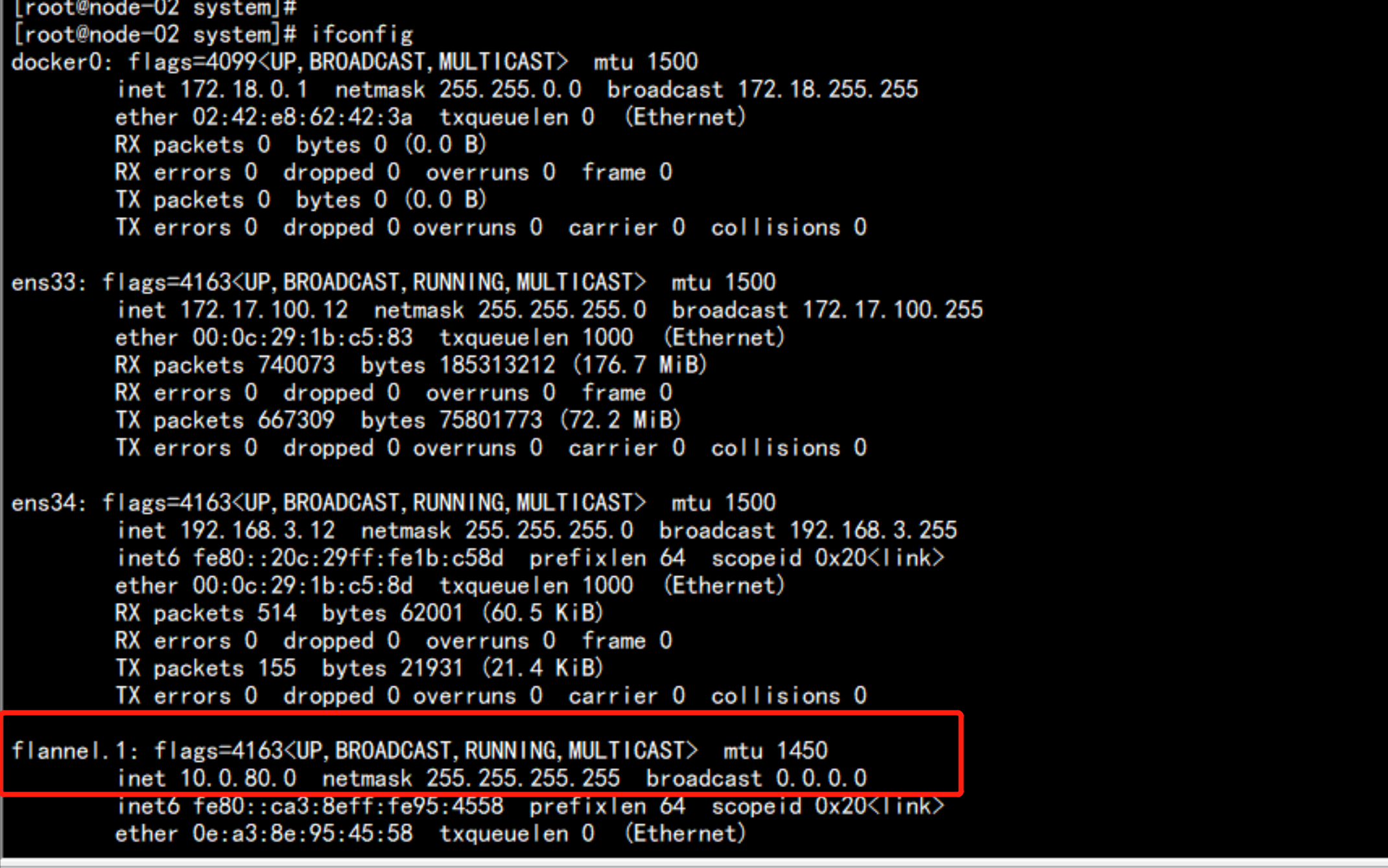

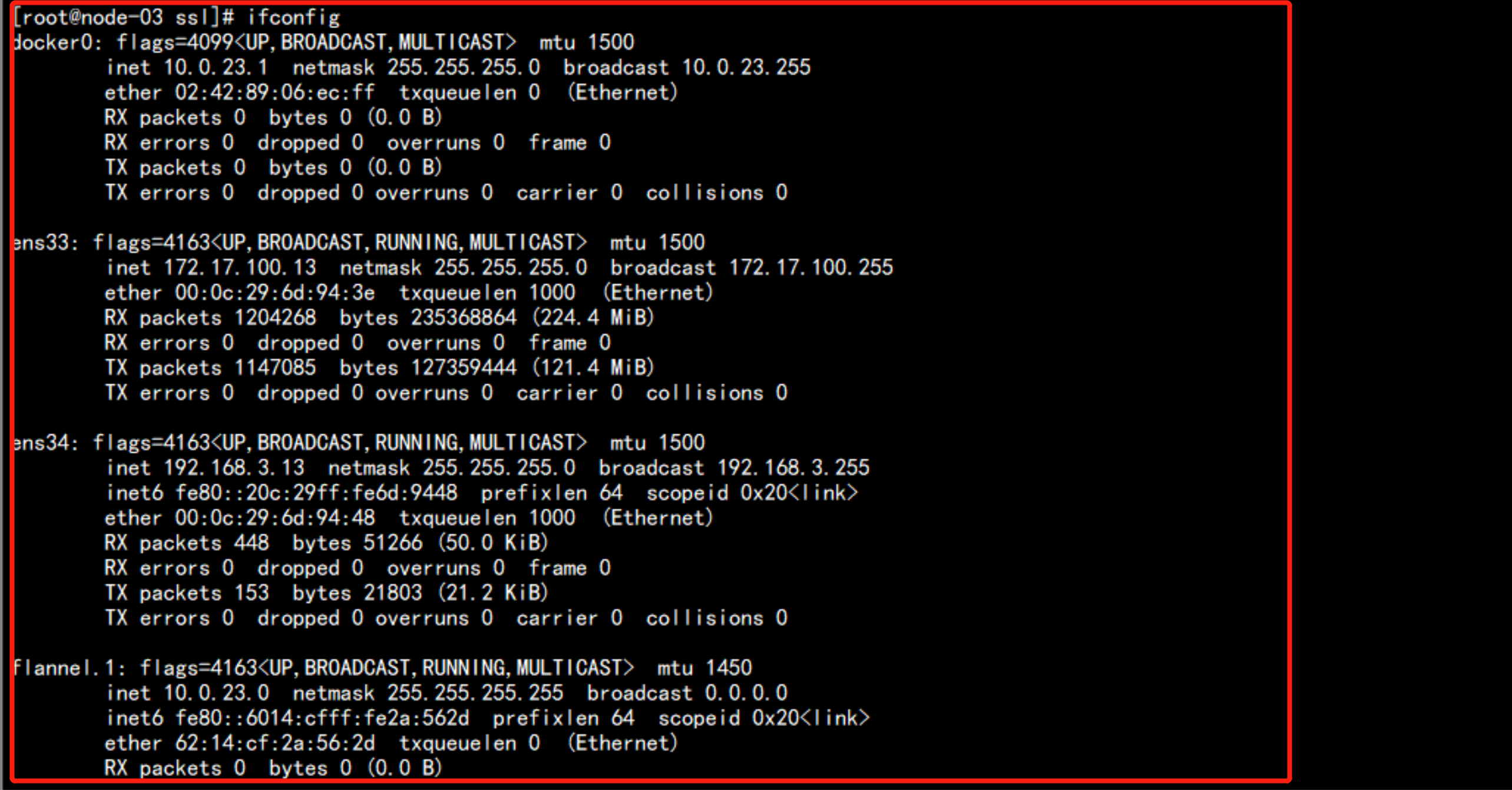

ifconfig

A flannel.1 network interface will have been created.

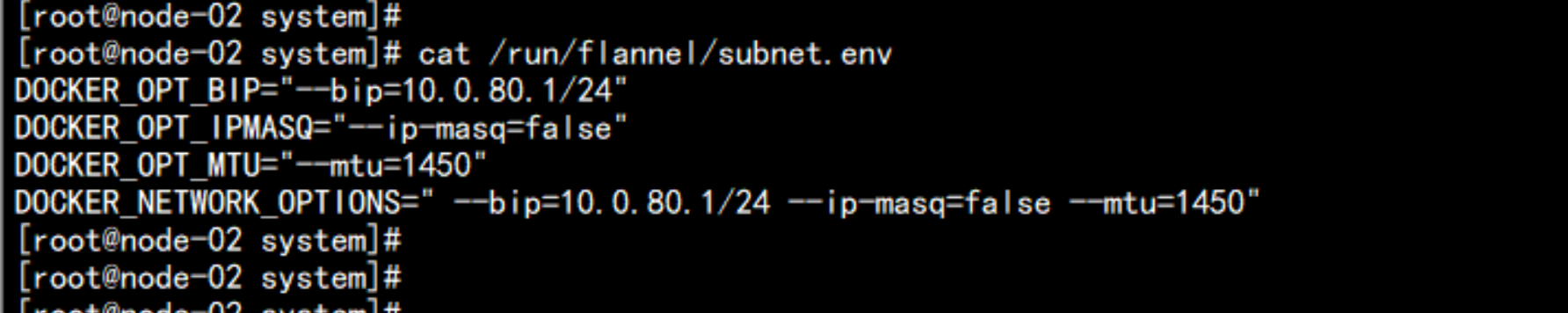

cat /run/flannel/subnet.env

Configure Docker to start with the flanneld network settings:

---

vim /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

---

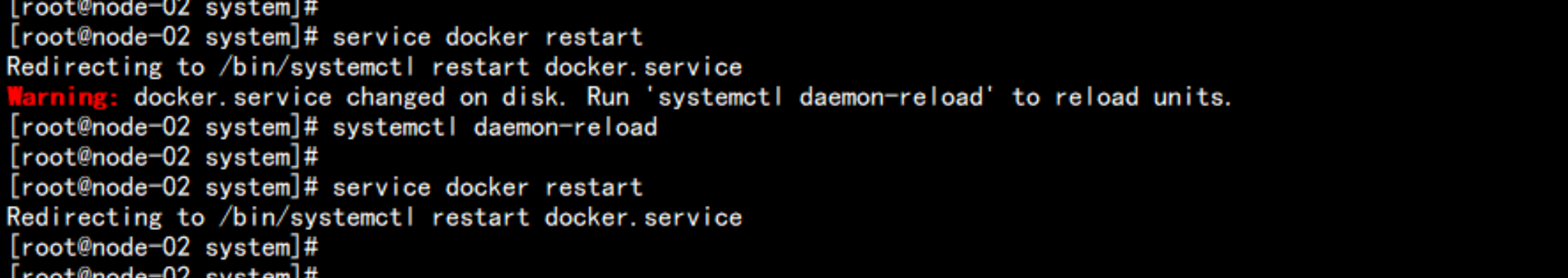

Restart Docker:

systemctl daemon-reload

service docker restart

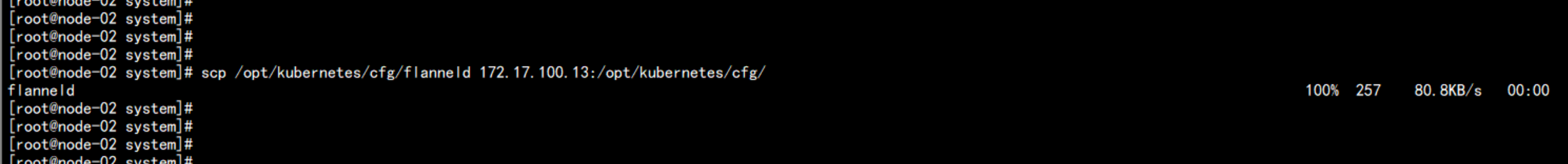

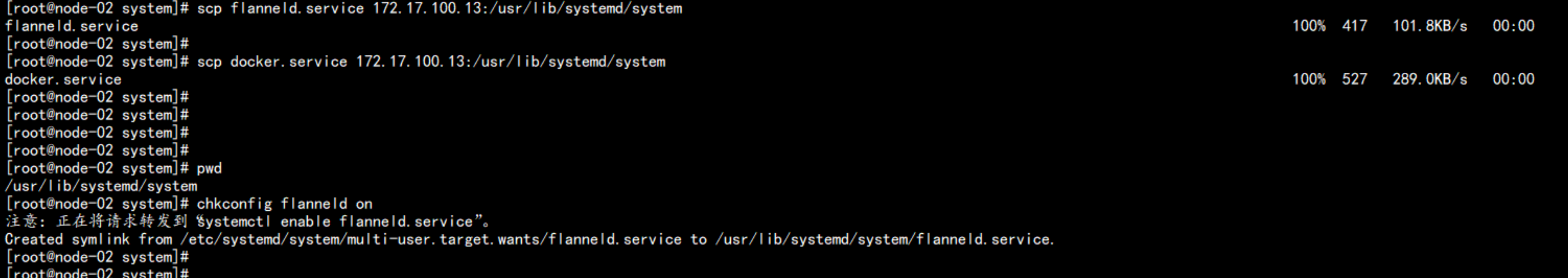

Deploy 172.17.100.13

---

Sync the files over from 172.17.100.12:

cd /usr/lib/systemd/system

scp /opt/kubernetes/cfg/flanneld 172.17.100.13:/opt/kubernetes/cfg/

scp flanneld.service 172.17.100.13:/usr/lib/systemd/system

scp docker.service 172.17.100.13:/usr/lib/systemd/system

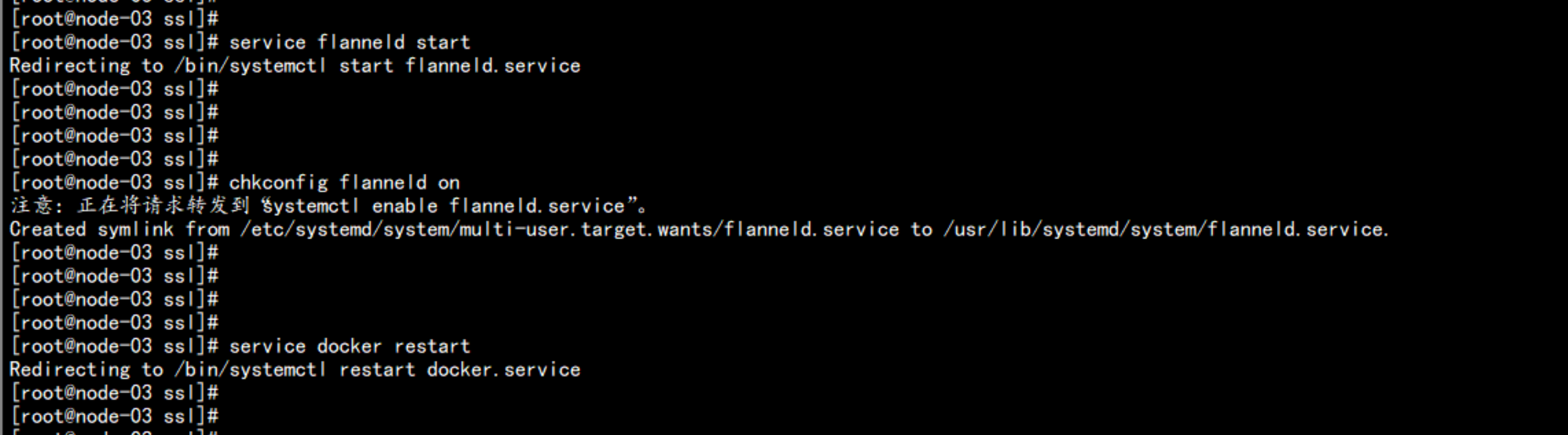

Start flanneld and restart Docker:

service flanneld start

service docker restart

chkconfig flanneld on

ifconfig |more

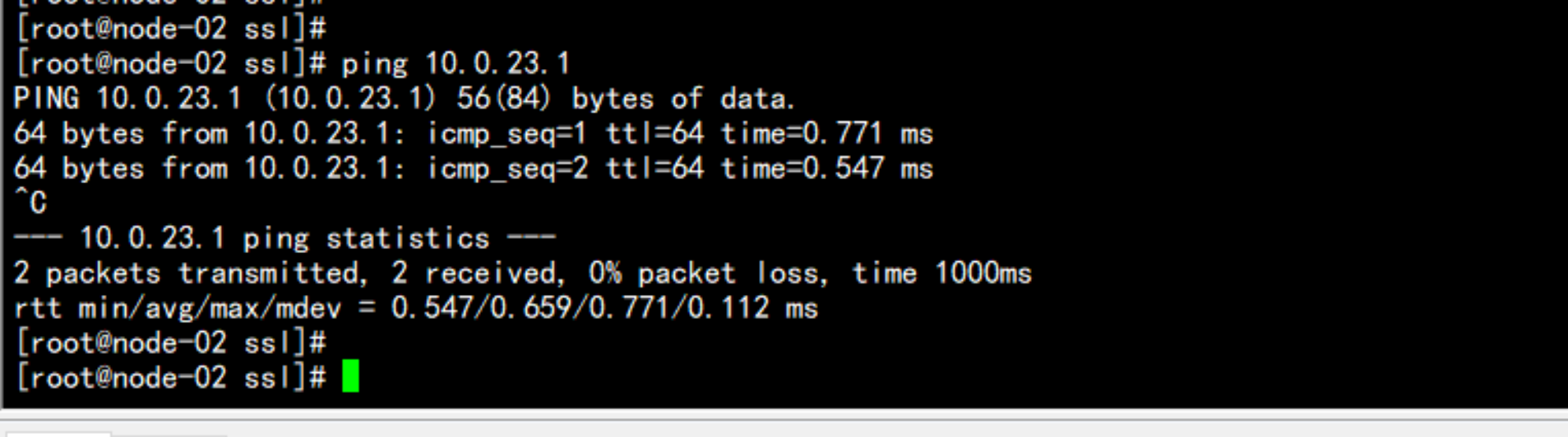

Test:

From 172.17.100.12, test whether the flanneld network is reachable:

ping 10.0.23.1

On the master node, view the Flannel subnets:

etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379" ls /coreos.com/network/subnets

Identify which node owns a given Flannel subnet:

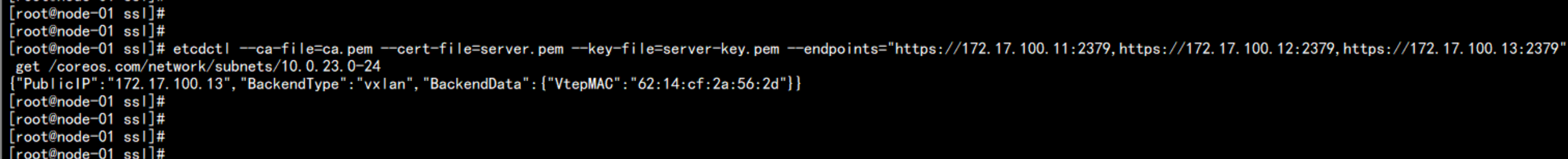

etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379" get /coreos.com/network/subnets/10.0.23.0-24

route -n

3.2.5 Cluster deployment: creating the Node kubeconfig files

Upload the kubeconfig.sh file to the master node 172.17.100.11.

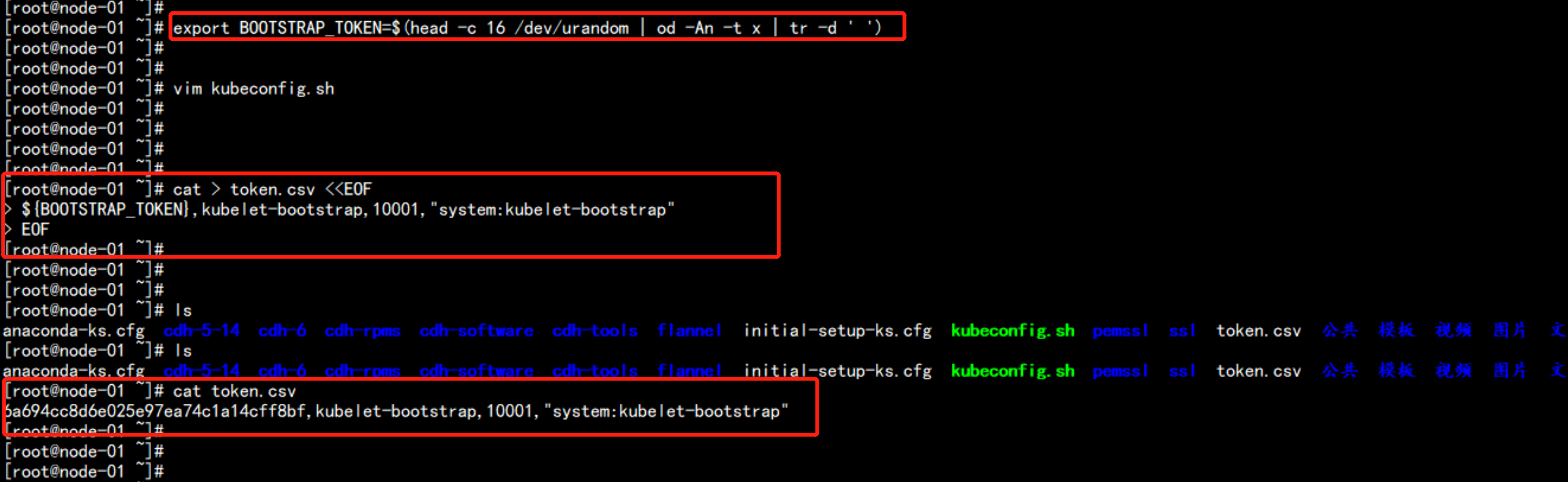

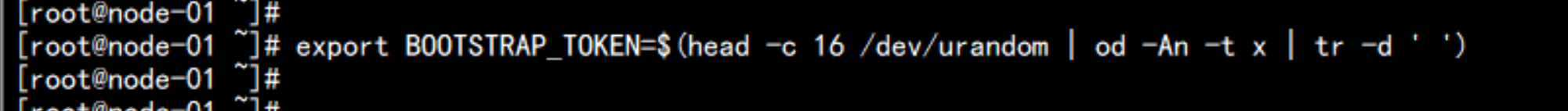

1. Create the TLS Bootstrapping Token

---

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

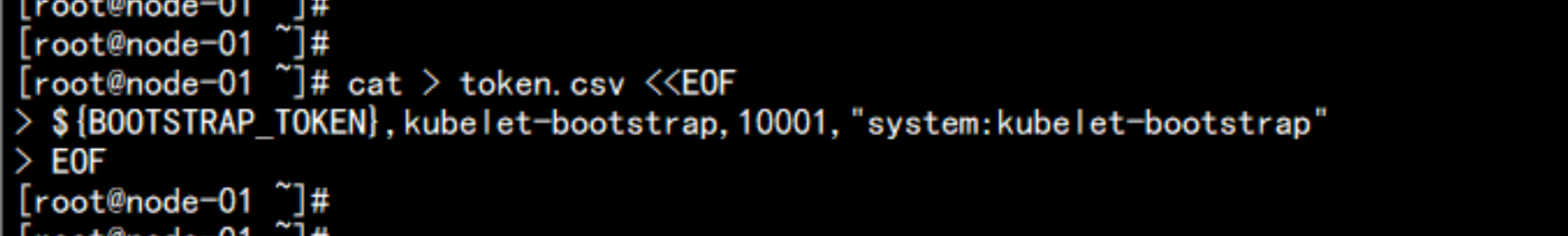

cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

----

View the token file:

cat token.csv

---

6a694cc8d6e025e97ea74c1a14cff8bf,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

---

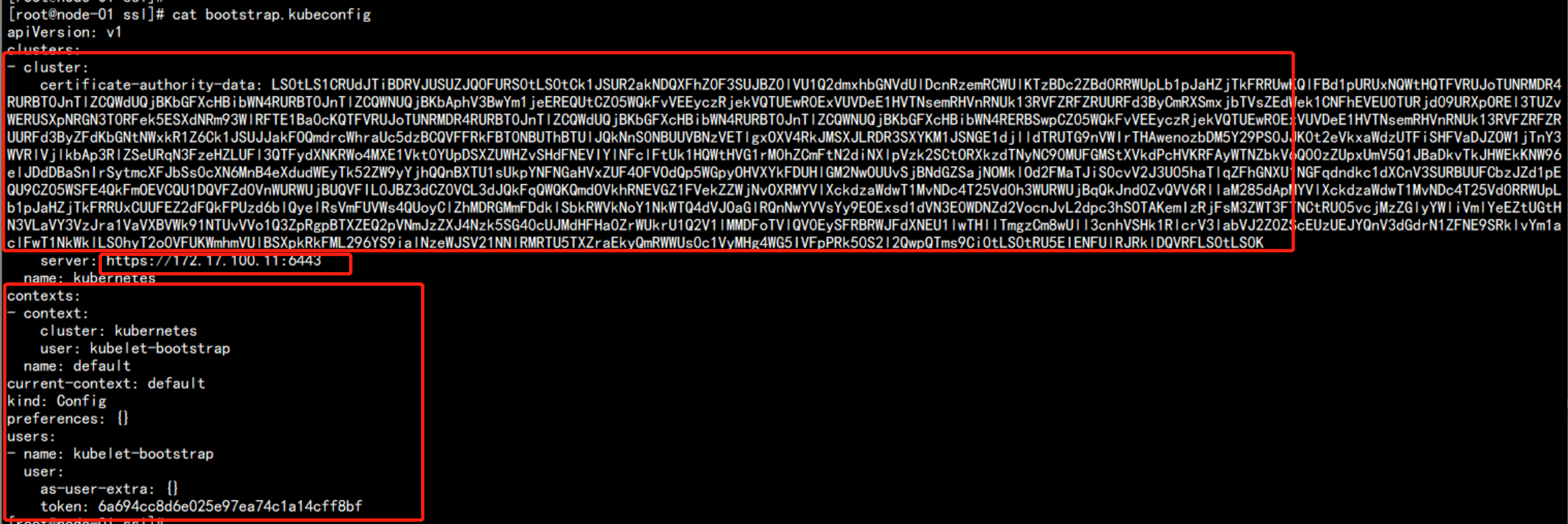
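Each record in token.csv has four comma-separated fields: the token, the user name, the user UID, and a quoted group list. As a small sketch, the snippet below splits the sample record shown above into those fields. The token is the example value from this article and should not be reused.

```shell
#!/bin/sh
# Sketch: split one token.csv record into its four fields.
# The token is the sample value printed above, not a secret to reuse.
line='6a694cc8d6e025e97ea74c1a14cff8bf,kubelet-bootstrap,10001,"system:kubelet-bootstrap"'

# Fields are: token, user name, user UID, quoted group list
IFS=, read -r token user uid grp <<EOF
$line
EOF

echo "token length: ${#token}"
echo "user:         $user"
echo "uid:          $uid"
echo "group:        $grp"
```

The 16 random bytes read from /dev/urandom become 32 hexadecimal characters, which is why the token length printed here is 32.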

2. Create the bootstrap.kubeconfig configuration file

Set the kube-apiserver address:

export KUBE_APISERVER="https://172.17.100.11:6443"

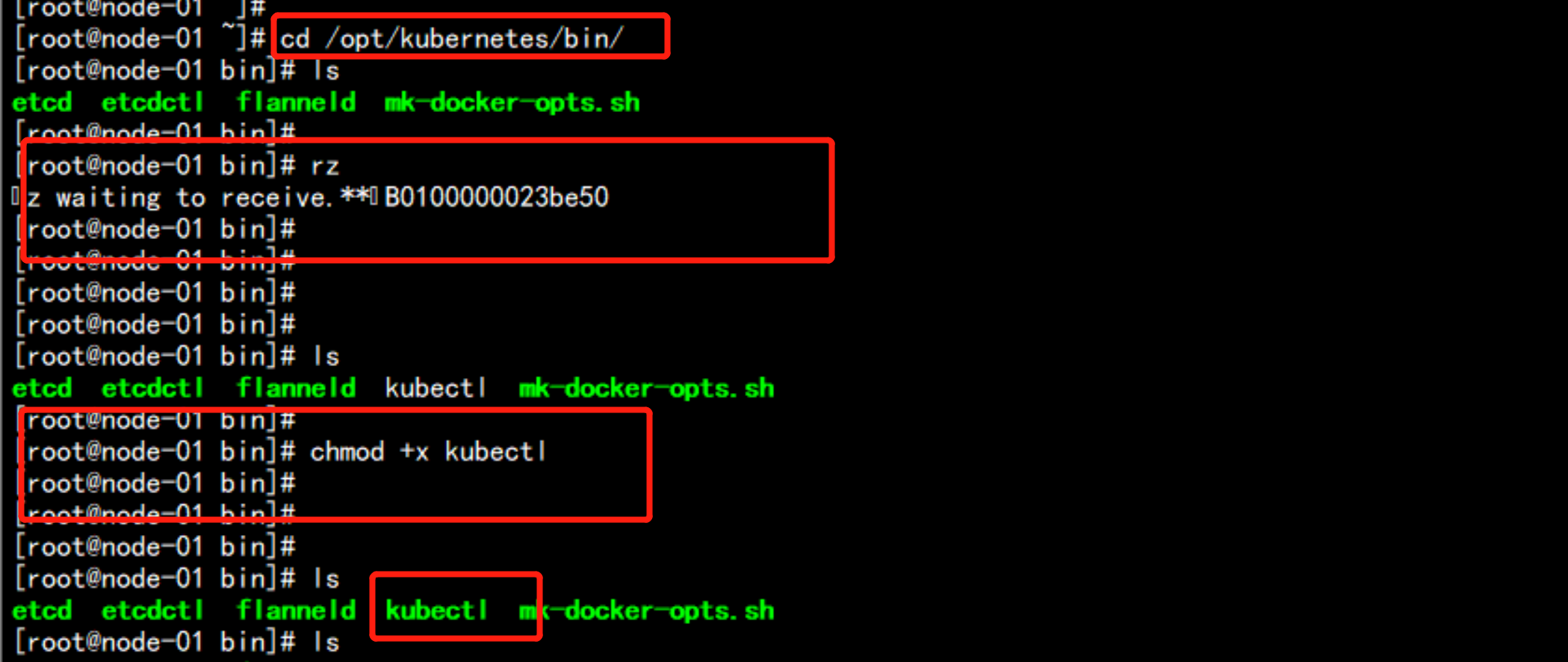

Upload the kubectl binary to /opt/kubernetes/bin:

cd /opt/kubernetes/bin

chmod +x kubectl

# Set the cluster parameters

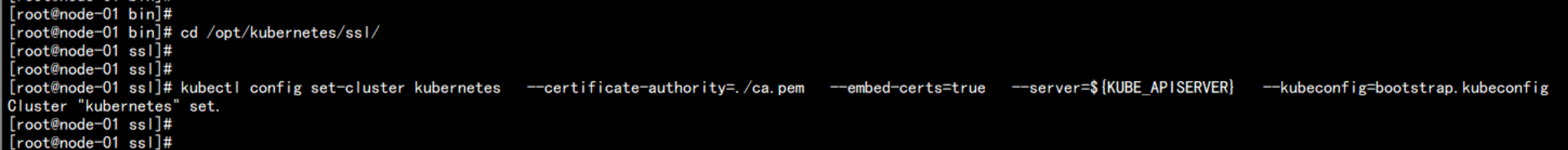

cd /opt/kubernetes/ssl/

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

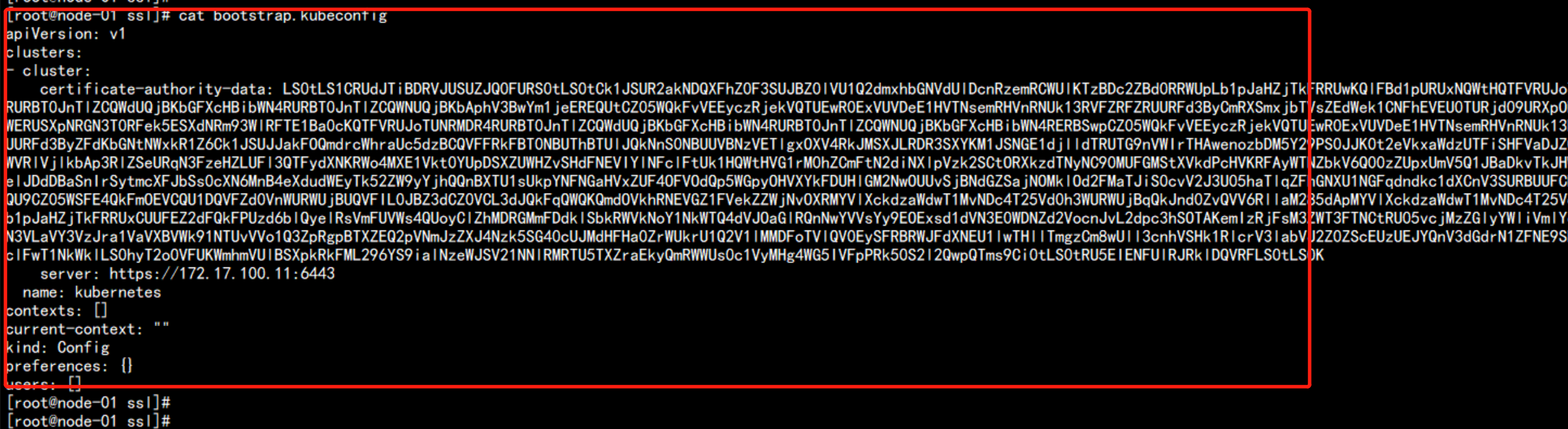

This generates the bootstrap.kubeconfig file.

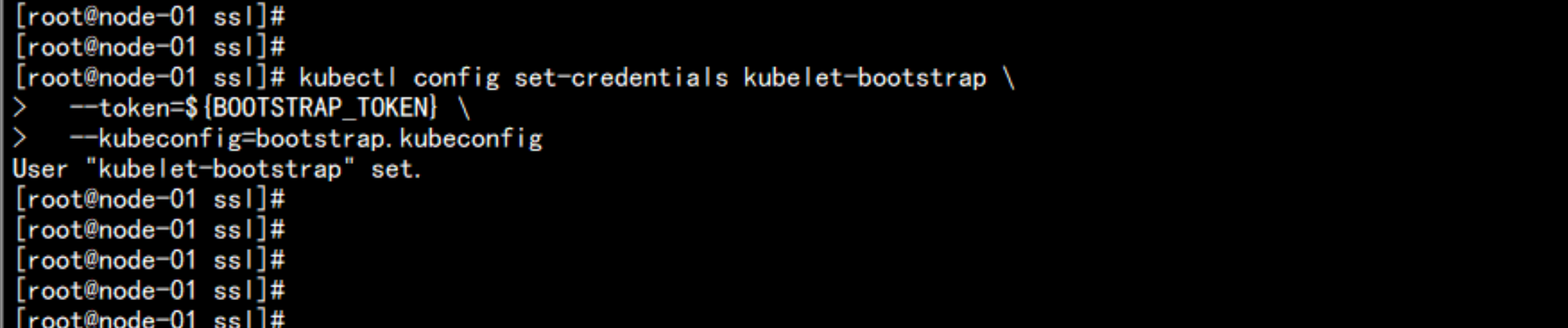

Set the client credentials:

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

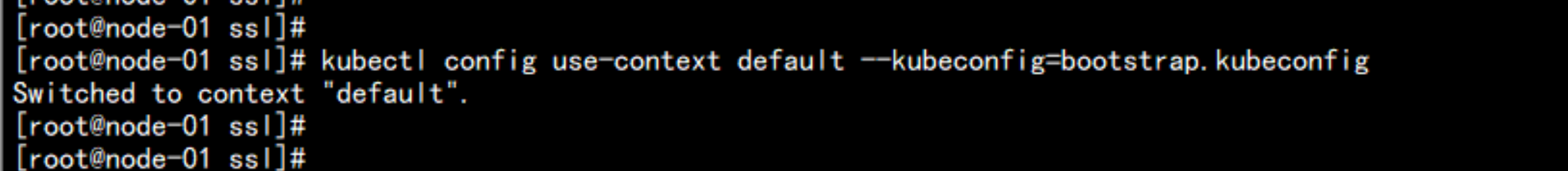

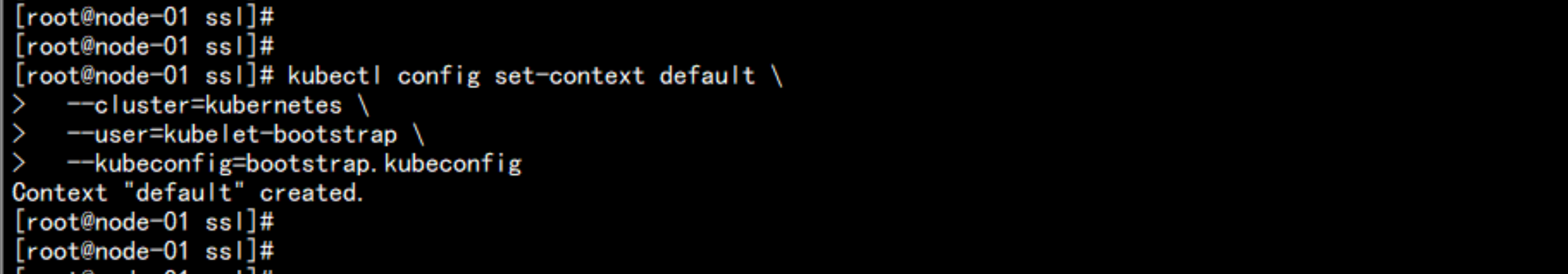

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

cat bootstrap.kubeconfig

3. Create the kube-proxy kubeconfig file

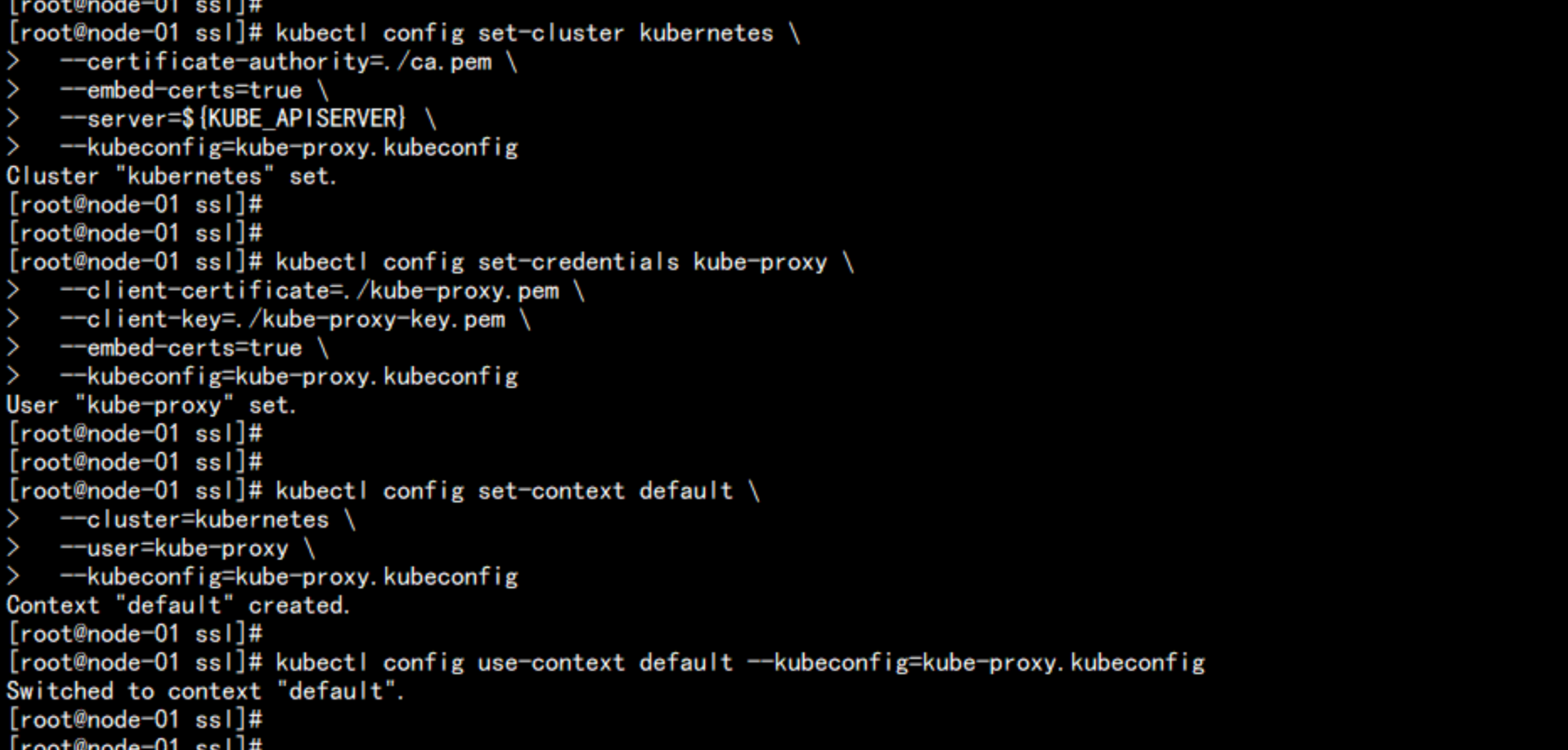

kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

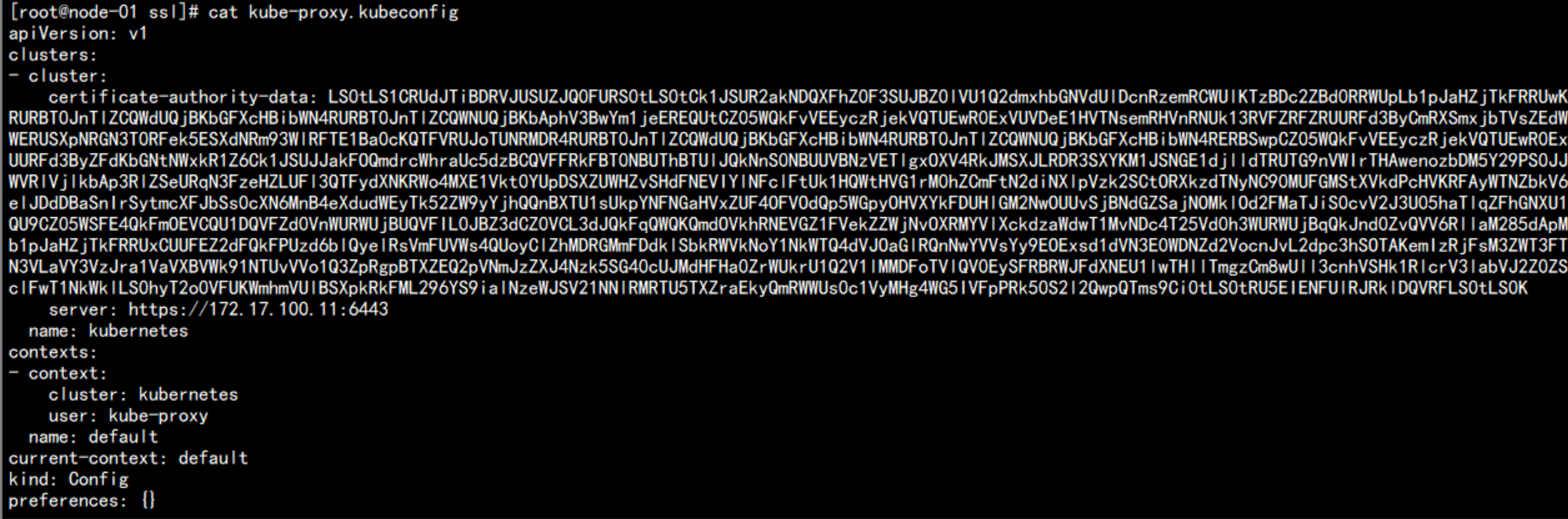

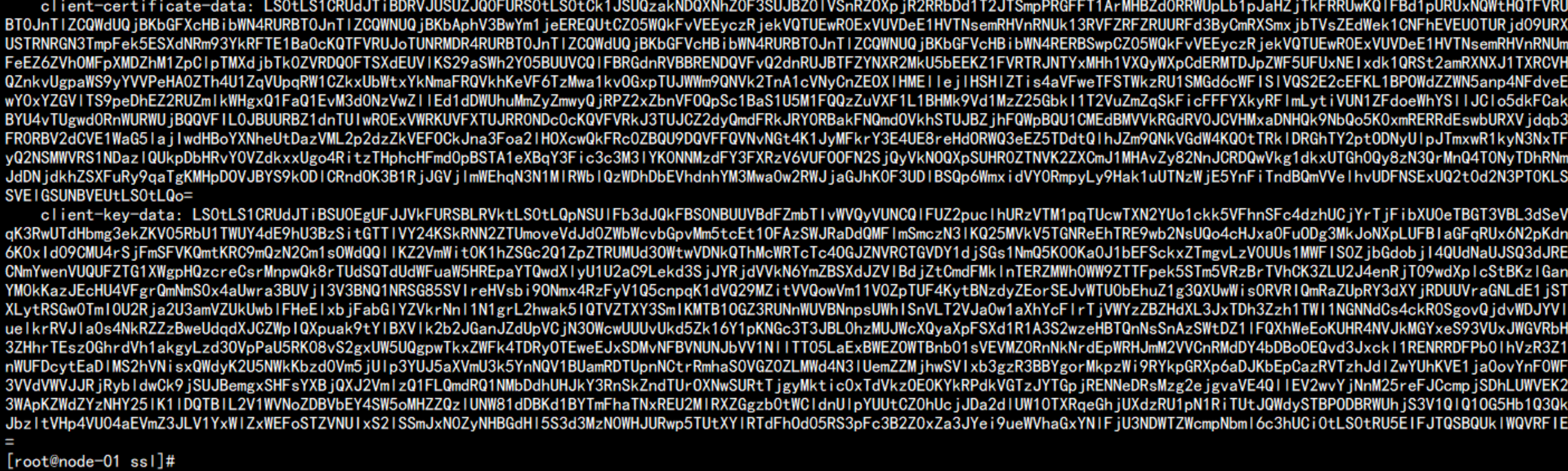

cat kube-proxy.kubeconfig

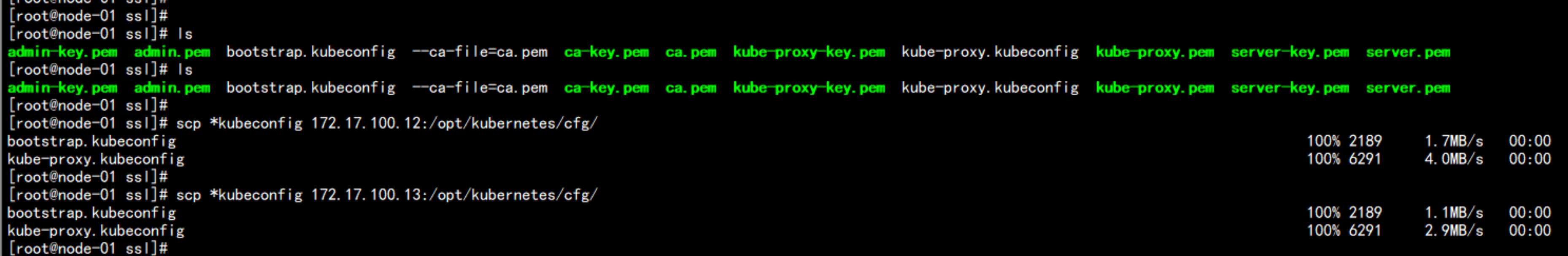

Copy bootstrap.kubeconfig and kube-proxy.kubeconfig to the other nodes:

cp -p *kubeconfig /opt/kubernetes/cfg

scp *kubeconfig 172.17.100.12:/opt/kubernetes/cfg/

scp *kubeconfig 172.17.100.13:/opt/kubernetes/cfg/

4: Deploying the Kubernetes cluster: obtaining the K8S binaries

Download the Kubernetes packages:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.9.md

Download the server package for Kubernetes 1.9.2:

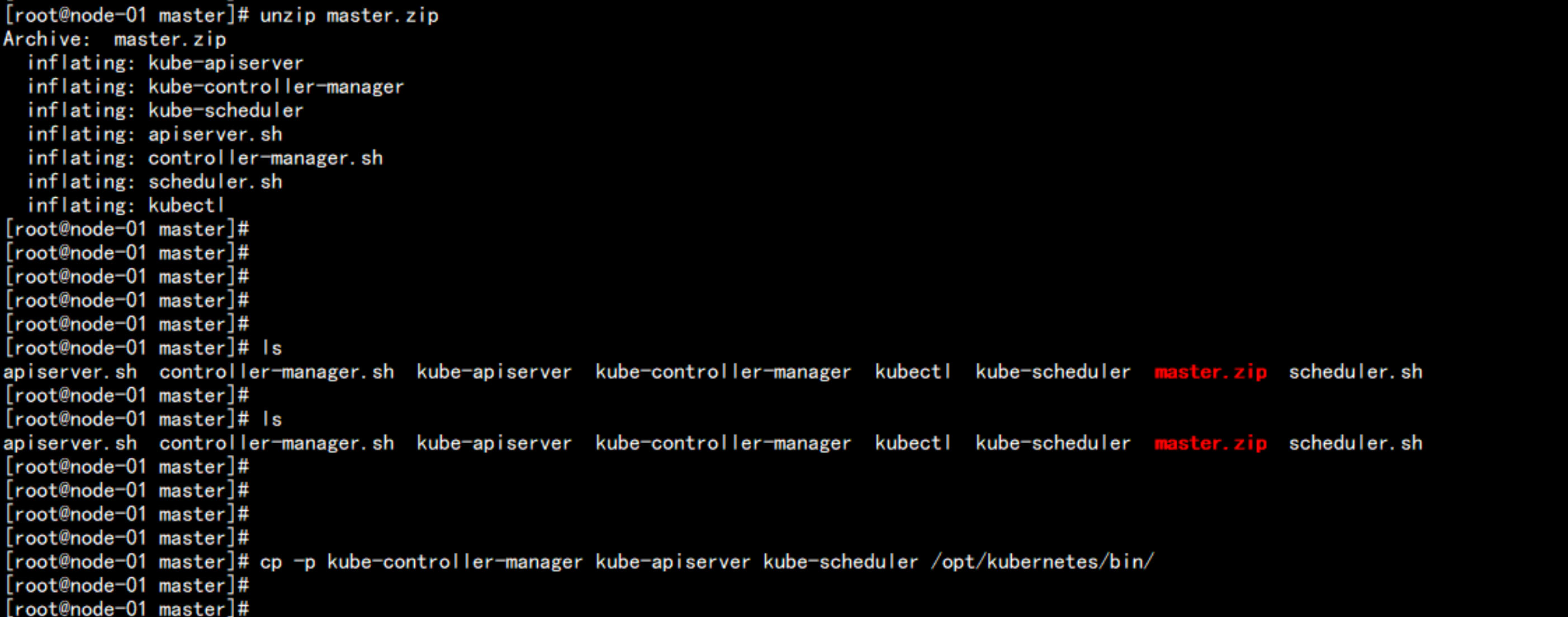

kubernetes-server-linux-amd64.tar.gz

4.1 Deploying the master node

Upload master.zip to the /root/master directory on 172.17.100.11:

mkdir master

cd master

unzip master.zip

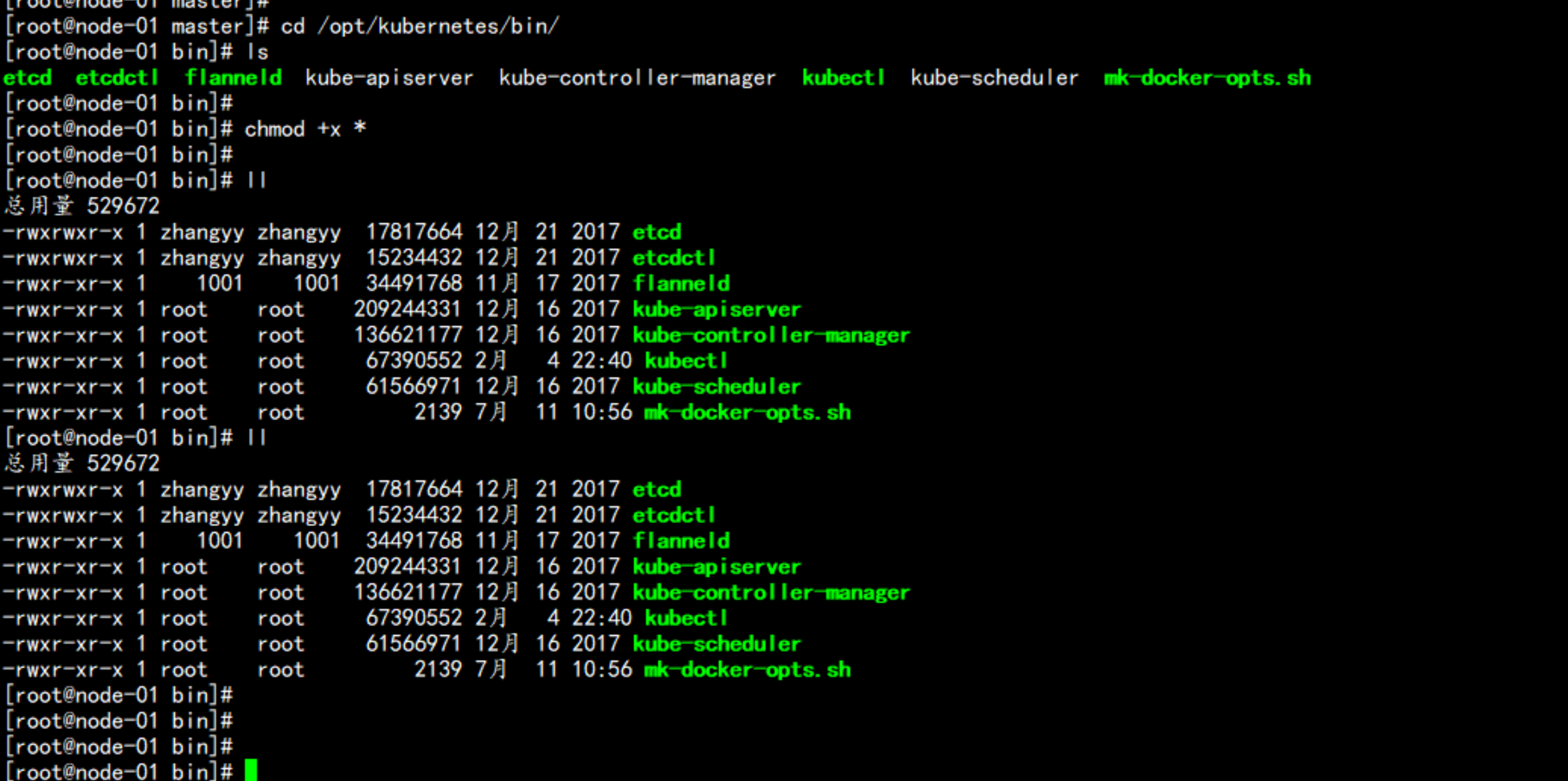

cp -p kube-controller-manager kube-apiserver kube-scheduler /opt/kubernetes/bin/

cd /opt/kubernetes/bin/

chmod +x *

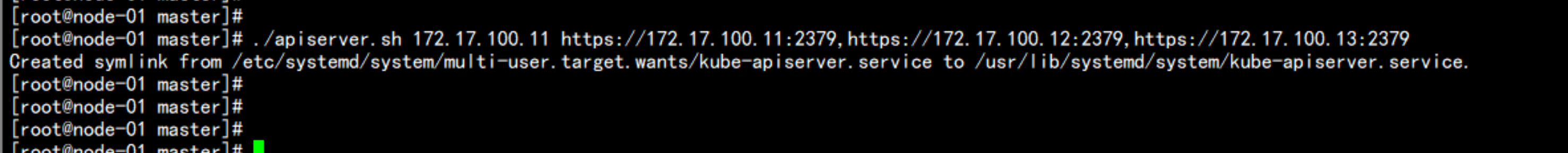

cp -p /root/token.csv /opt/kubernetes/cfg/

cd /root/master/

./apiserver.sh 172.17.100.11 https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379

cd /opt/kubernetes/cfg/

cat kube-apiserver

---

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://172.17.100.11:2379,https://172.17.100.12:2379,https://172.17.100.13:2379 \

--insecure-bind-address=127.0.0.1 \

--bind-address=172.17.100.11 \

--insecure-port=8080 \

--secure-port=6443 \

--advertise-address=172.17.100.11 \

--allow-privileged=true \

--service-cluster-ip-range=10.10.10.0/24 \

--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/kubernetes/ssl/ca.pem \

--etcd-certfile=/opt/kubernetes/ssl/server.pem \

--etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"

---

cd /usr/lib/systemd/system/

cat kube-apiserver.service

---

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

---

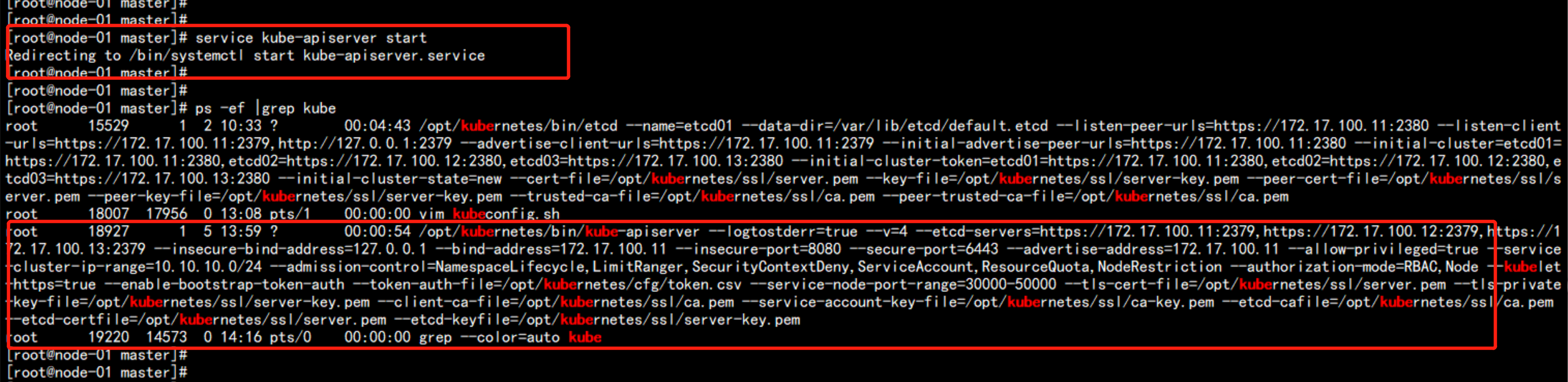

Start the apiserver:

service kube-apiserver start

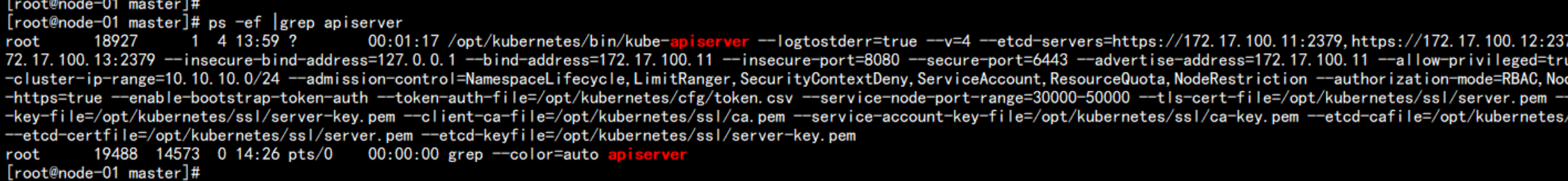

ps -ef |grep apiserver

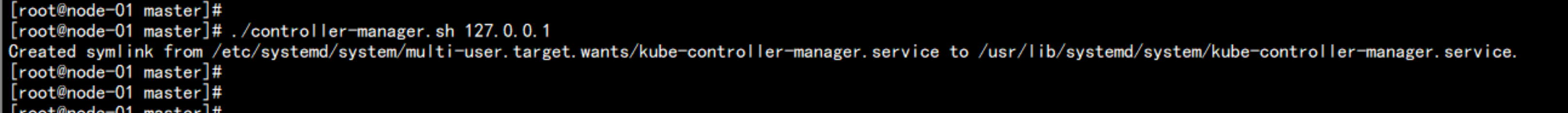
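The API server options set `--service-cluster-ip-range=10.10.10.0/24`. The first address in that range is assigned to the built-in `kubernetes` Service, which is why `10.10.10.1` appears in the `hosts` list of server-csr.json. The sketch below derives that address from the range. It also derives `10.10.10.2`, the second argument passed to kubelet.sh later in this article, on the assumption that this argument is the cluster DNS address (the script itself is not shown here). The string handling only covers ranges of this shape, with a last octet of 0.

```shell
#!/bin/sh
# Sketch: derive the addresses this article hard-codes from the service CIDR.
# Only handles ranges whose network address ends in ".0", such as a /24.
service_cidr="10.10.10.0/24"

base=${service_cidr%/*}        # 10.10.10.0
prefix=${base%.*}              # 10.10.10
api_service_ip="$prefix.1"     # first address: the "kubernetes" Service
cluster_dns_ip="$prefix.2"     # assumed meaning of kubelet.sh's 2nd argument

echo "kubernetes Service IP: $api_service_ip"
echo "cluster DNS IP:        $cluster_dns_ip"
```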

Run the controller-manager script:

./controller-manager.sh 127.0.0.1

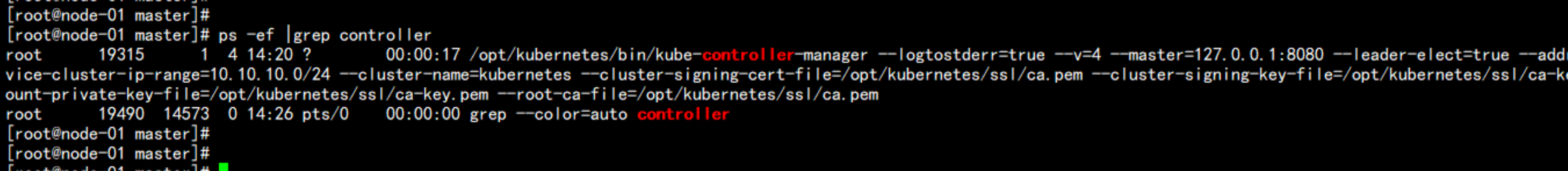

ps -ef |grep controller

Start the scheduler:

./scheduler.sh 127.0.0.1

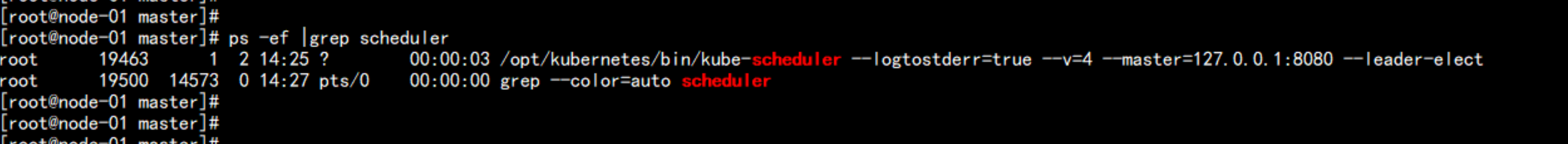

ps -ef |grep scheduler

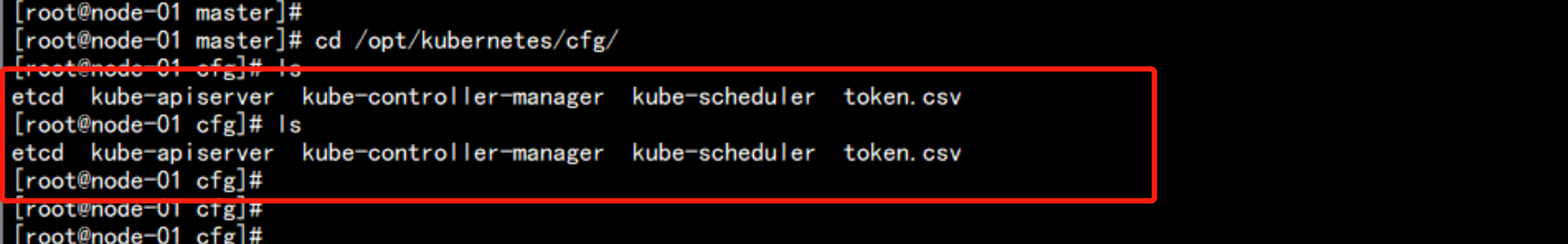

cd /opt/kubernetes/cfg/

cat kube-controller-manager

---

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect=true \

--address=127.0.0.1 \

--service-cluster-ip-range=10.10.10.0/24 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \

--root-ca-file=/opt/kubernetes/ssl/ca.pem"

---

cat kube-scheduler

---

KUBE_SCHEDULER_OPTS="--logtostderr=true \

--v=4 \

--master=127.0.0.1:8080 \

--leader-elect"

---

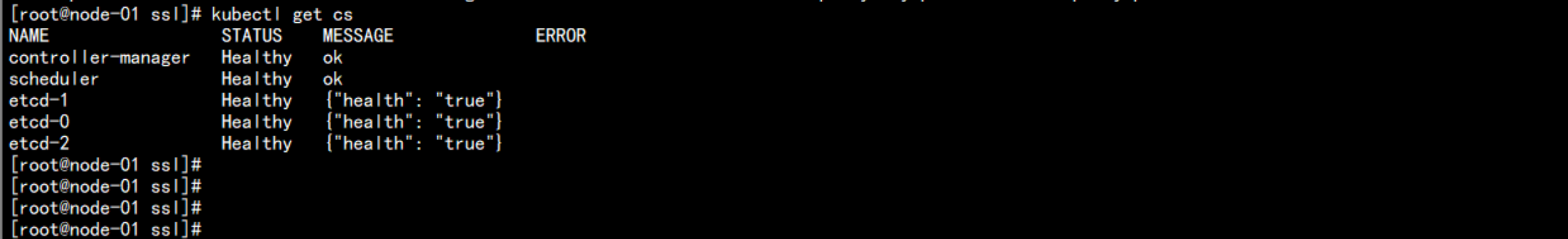

Check the component status:

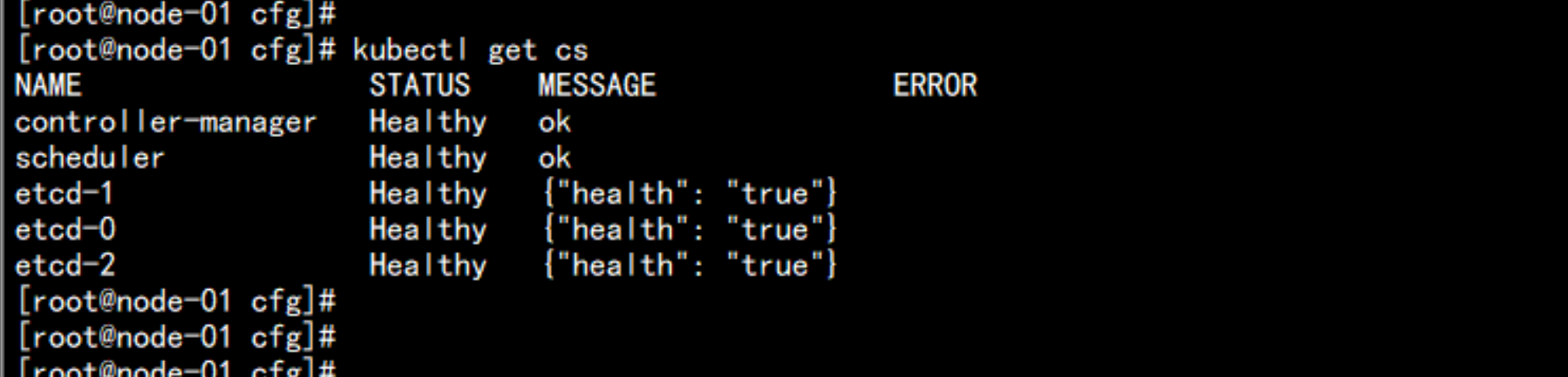

kubectl get cs

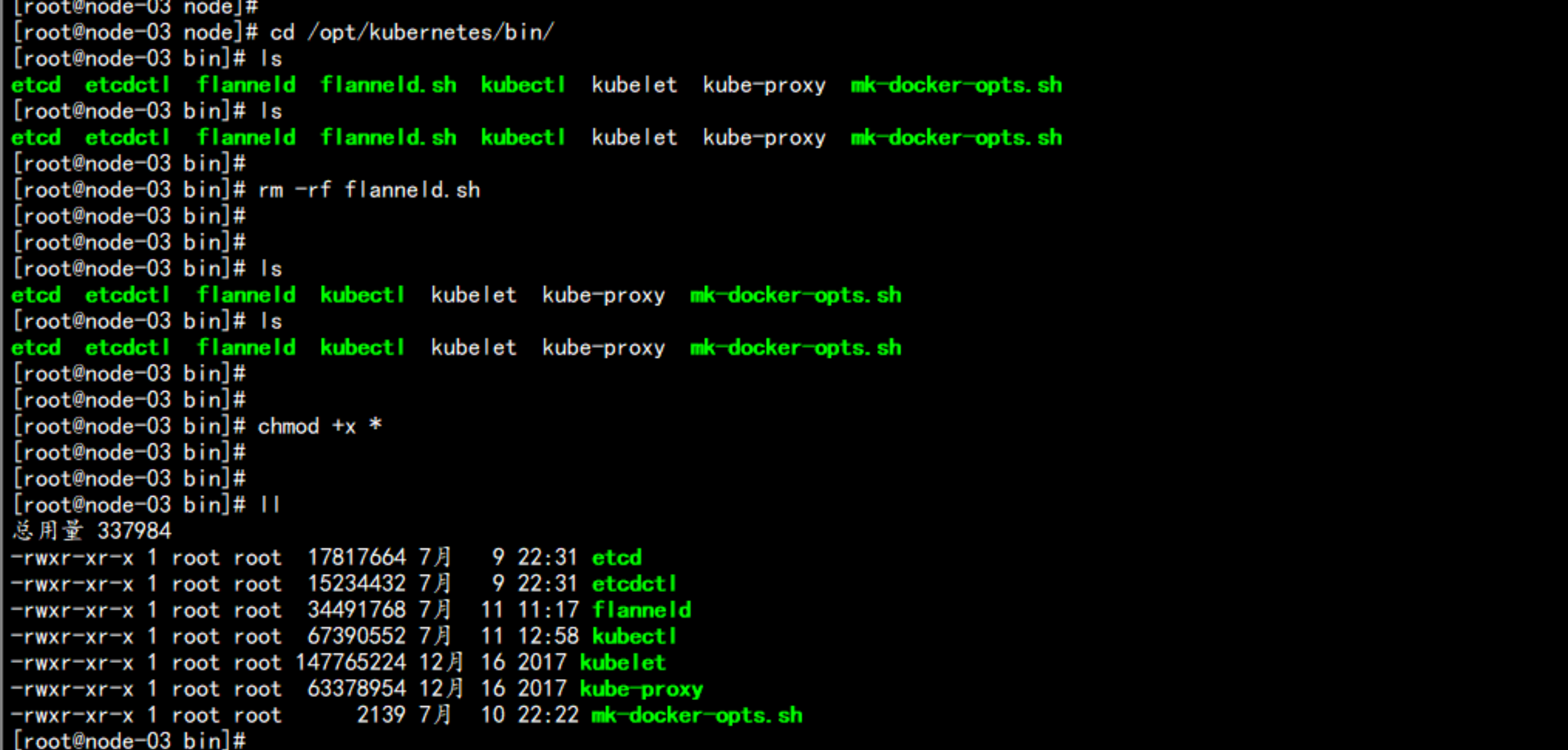

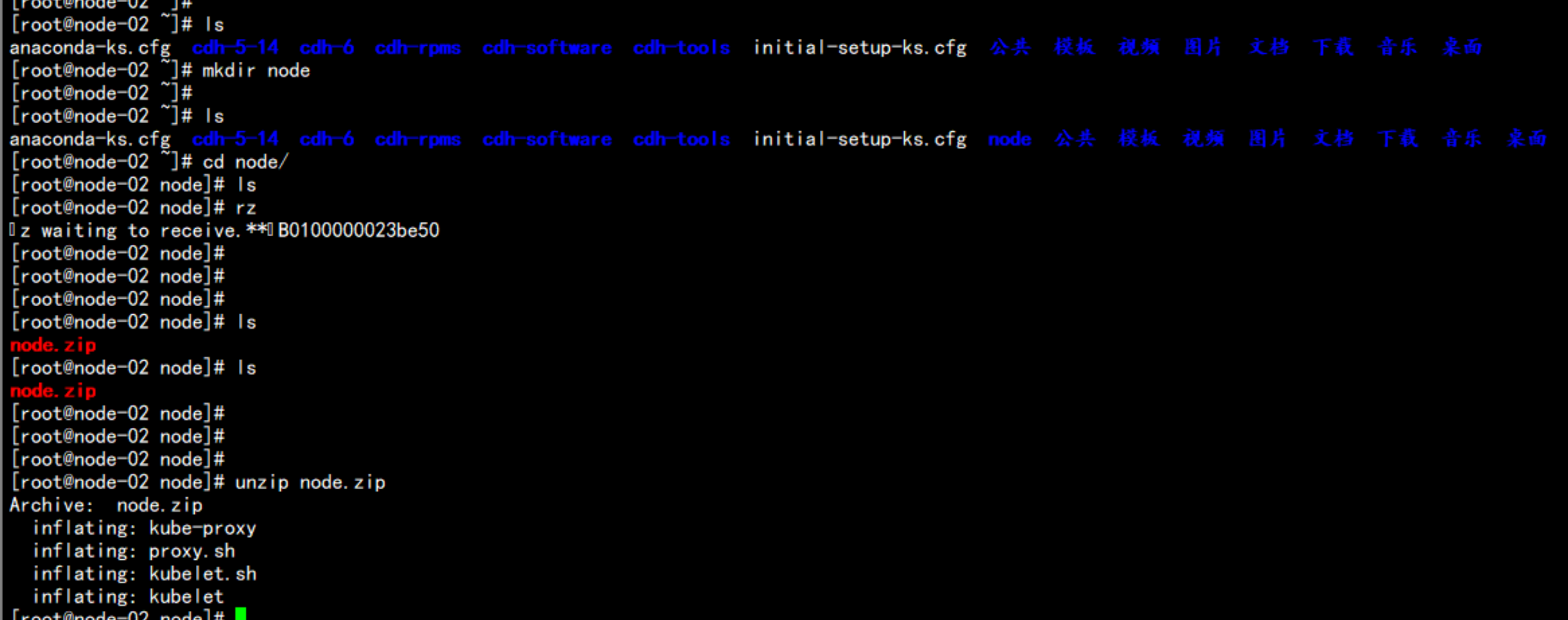

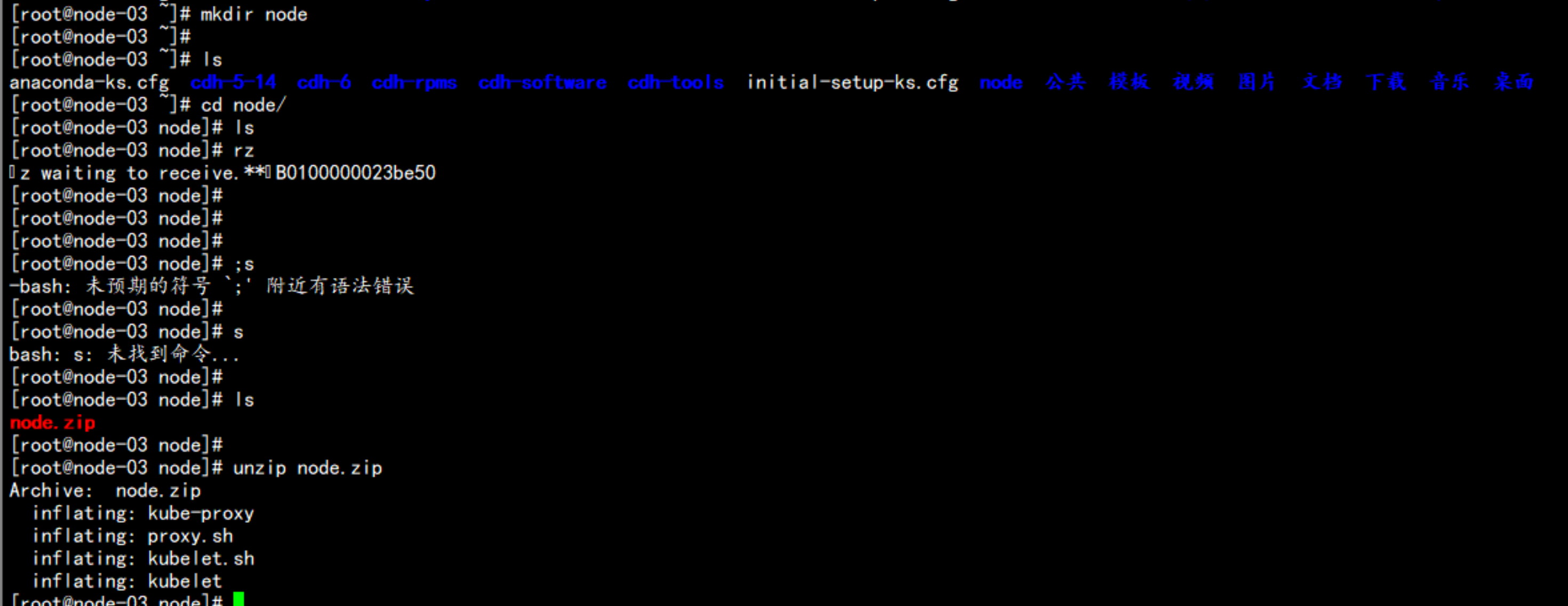

4.2 Deploying the node machines

On 172.17.100.12 and 172.17.100.13:

mkdir /root/node

Upload node.zip to the /root/node directory:

cd /root/node

unzip node.zip

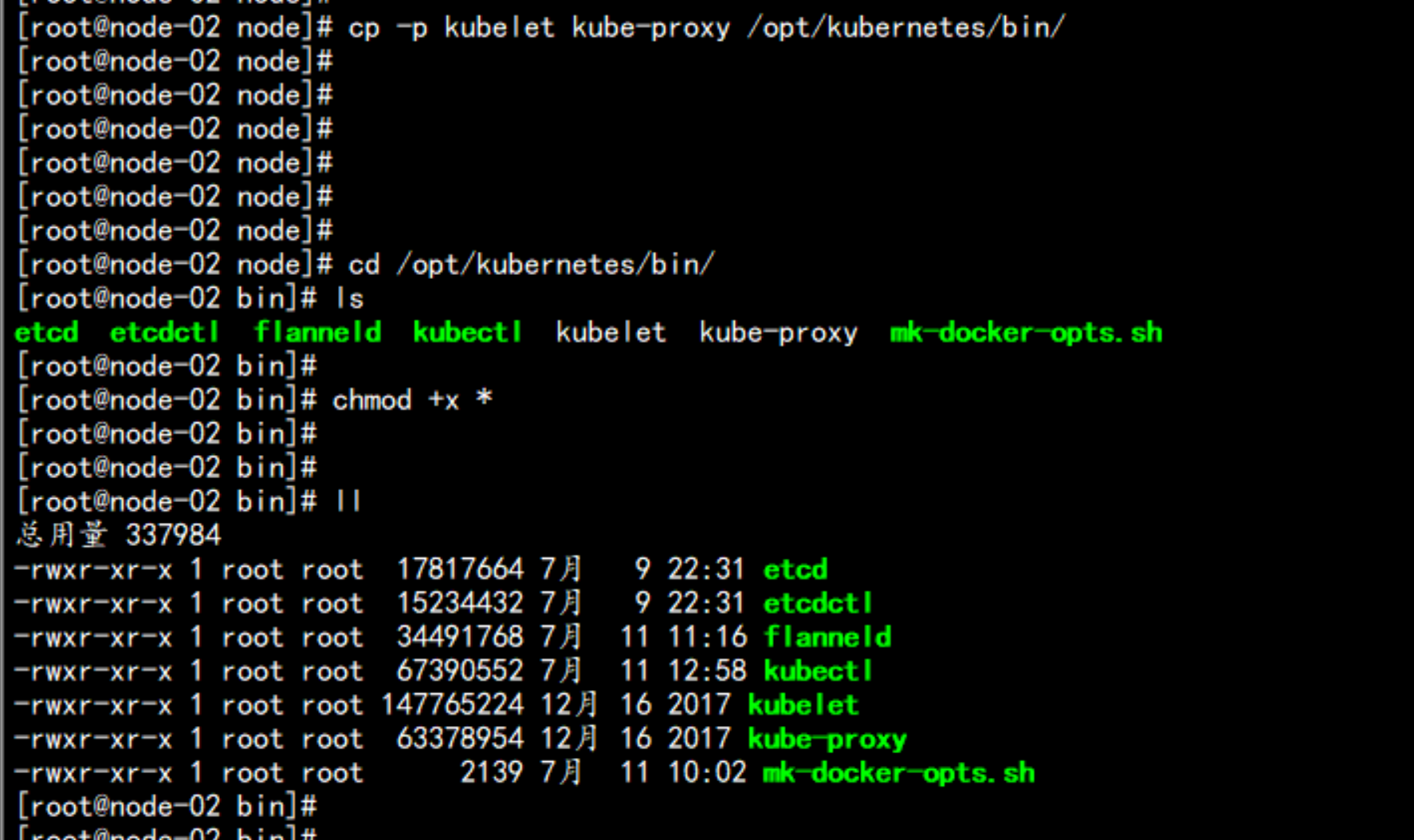

cp -p kube-proxy kubelet /opt/kubernetes/bin/

cd /opt/kubernetes/bin/

chmod +x *

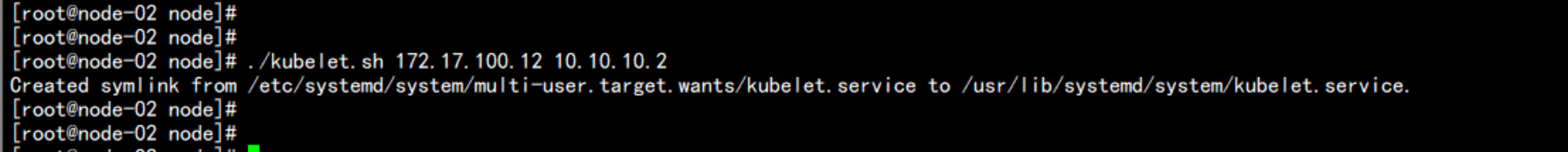

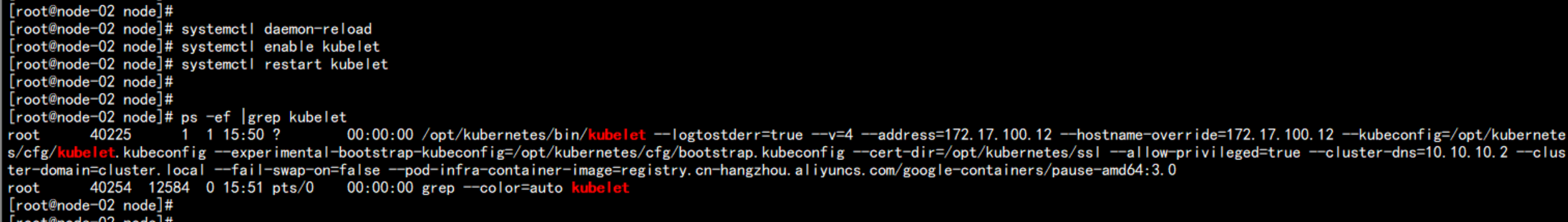

On node 172.17.100.12:

cd /root/node

chmod +x *.sh

./kubelet.sh 172.17.100.12 10.10.10.2

----

Watch for this error:

Jul 11 15:40:18 node-02 kubelet: error: failed to run Kubelet: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "kubelet-bootstrap" cannot create certificatesigningrequests.certificates.k8s.io at the cluster scope

-----

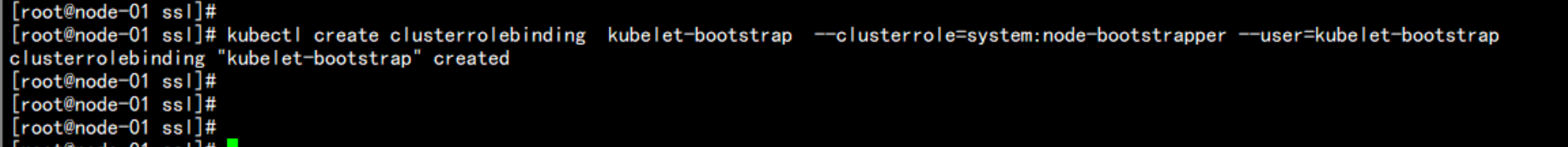

On the master node (172.17.100.11), run:

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

Then restart the kubelet on the node:

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

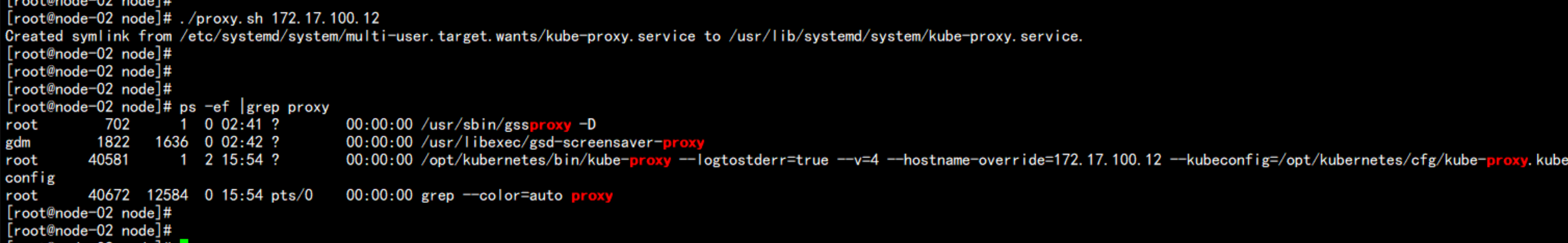

Configure kube-proxy:

./proxy.sh 172.17.100.12

ps -ef |grep proxy

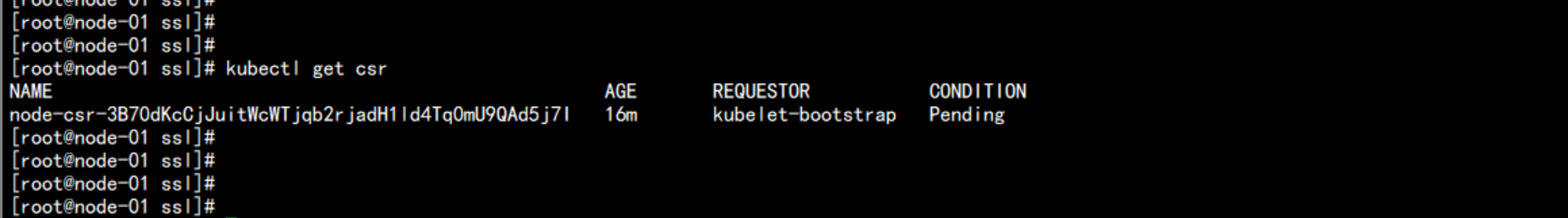

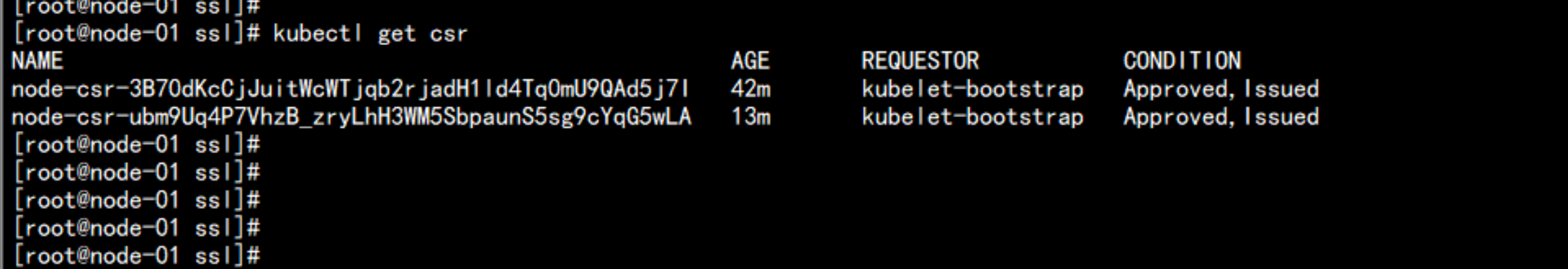

On the master node, view the certificate signing requests:

kubectl get csr

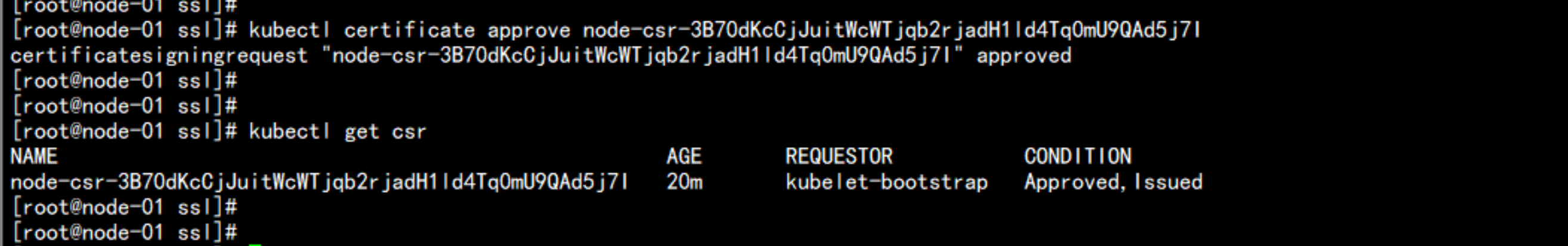

kubectl certificate approve node-csr-3B70dKcCjJuitWcWTjqb2rjadH1ld4Tq0mU9QAd5j7I

kubectl get csr

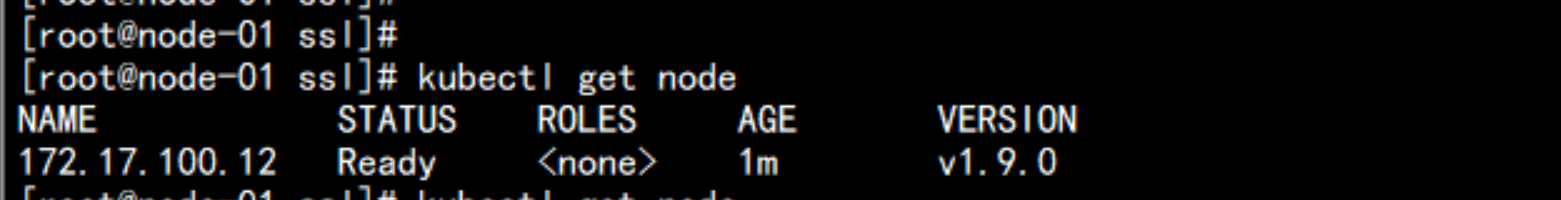

The node has now joined the cluster:

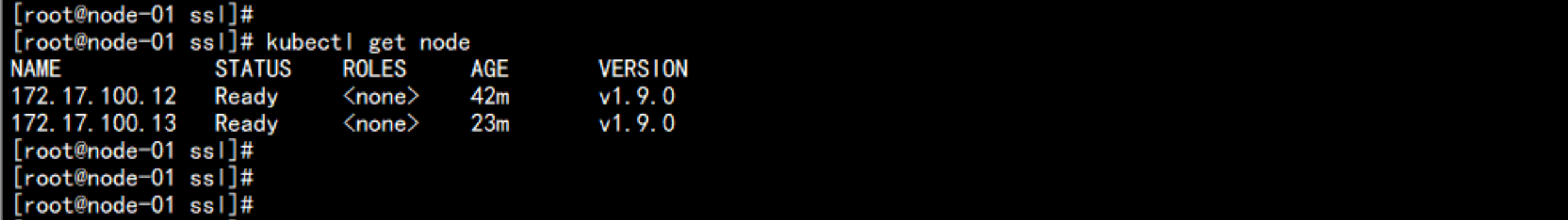

kubectl get node

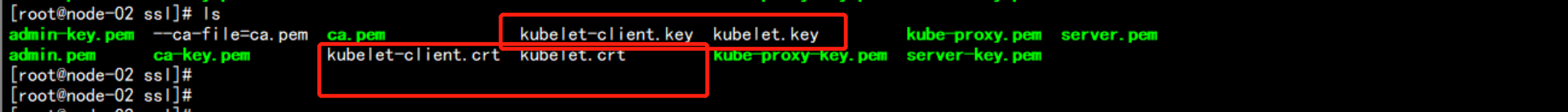

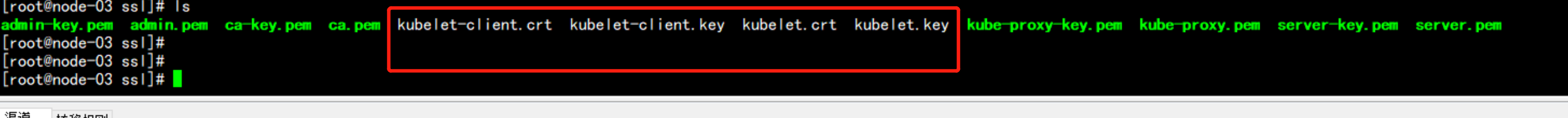

New certificates now appear on 172.17.100.12:

cd /opt/kubernetes/ssl/

ls

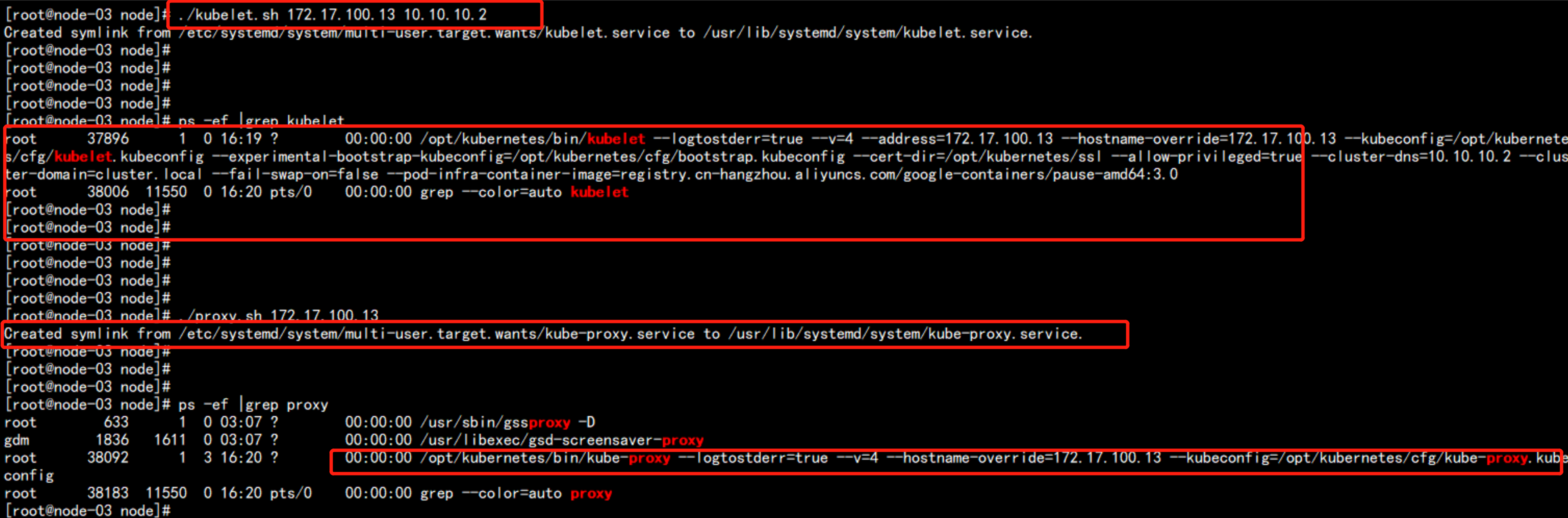

On node 172.17.100.13:

cd /root/node

./kubelet.sh 172.17.100.13 10.10.10.2

./proxy.sh 172.17.100.13

On the master (172.17.100.11), run:

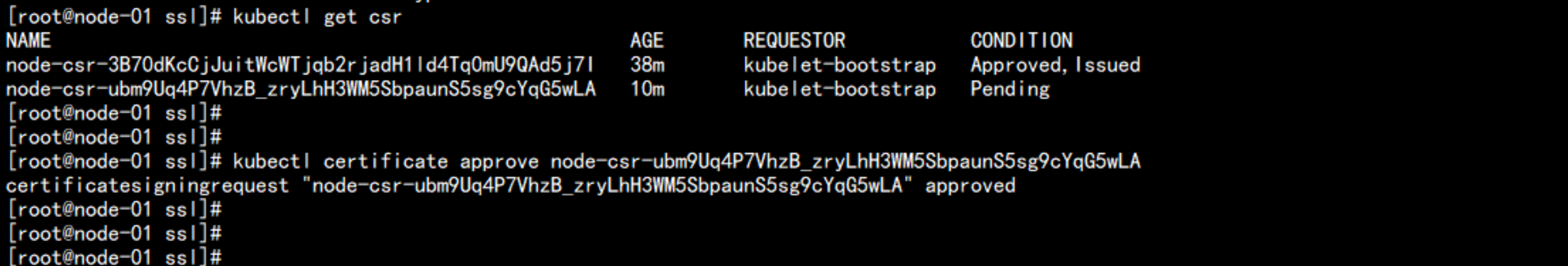

kubectl get csr

kubectl certificate approve node-csr-ubm9Uq4P7VhzB_zryLhH3WM5SbpaunS5sg9cYqG5wLA

kubectl get csr
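Copying each CSR name by hand becomes tedious as nodes are added. The sketch below picks the pending request names out of `kubectl get csr` style output with awk. The sample text only imitates the column layout (NAME, AGE, REQUESTOR, CONDITION) and is not a captured log; the name `node-csr-EXAMPLE` is made up, and `pending_csrs` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: pick the names of pending CSRs out of `kubectl get csr` output.
# sample_output is illustrative text in the same column layout, not a
# captured log; the second CSR name is made up.
sample_output='NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-3B70dKcCjJuitWcWTjqb2rjadH1ld4Tq0mU9QAd5j7I   10m   kubelet-bootstrap   Approved,Issued
node-csr-EXAMPLE                                       1m    kubelet-bootstrap   Pending'

pending_csrs() {
  # Skip the header row, keep rows whose last column is exactly "Pending"
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

pending=$(printf '%s\n' "$sample_output" | pending_csrs)
echo "pending: $pending"

# On the master this could feed the approve command used above:
#   kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve
```

Approving everything that is pending is only reasonable in a lab cluster like this one, where every requesting node is known.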

The kubelet certificates are generated automatically on 172.17.100.13:

cd /opt/kubernetes/ssl

ls

On the master, run:

kubectl get node

kubectl get cs

The Kubernetes deployment is now complete.

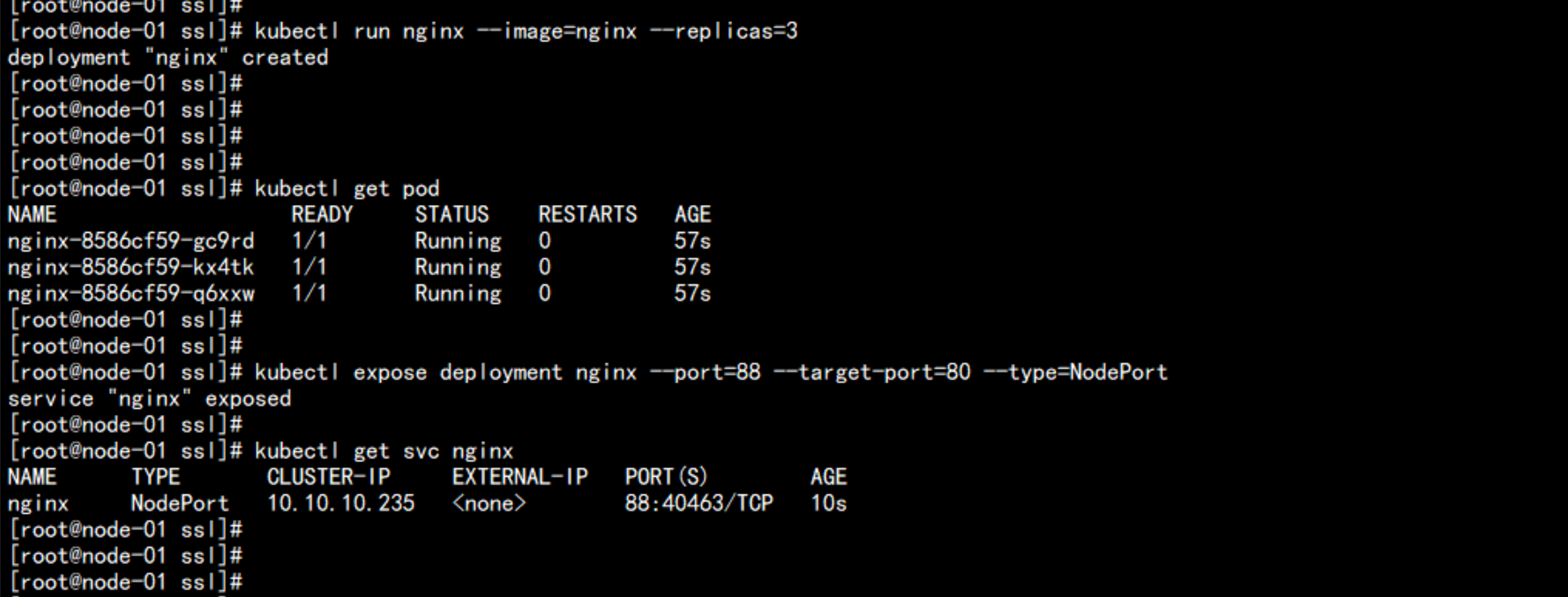

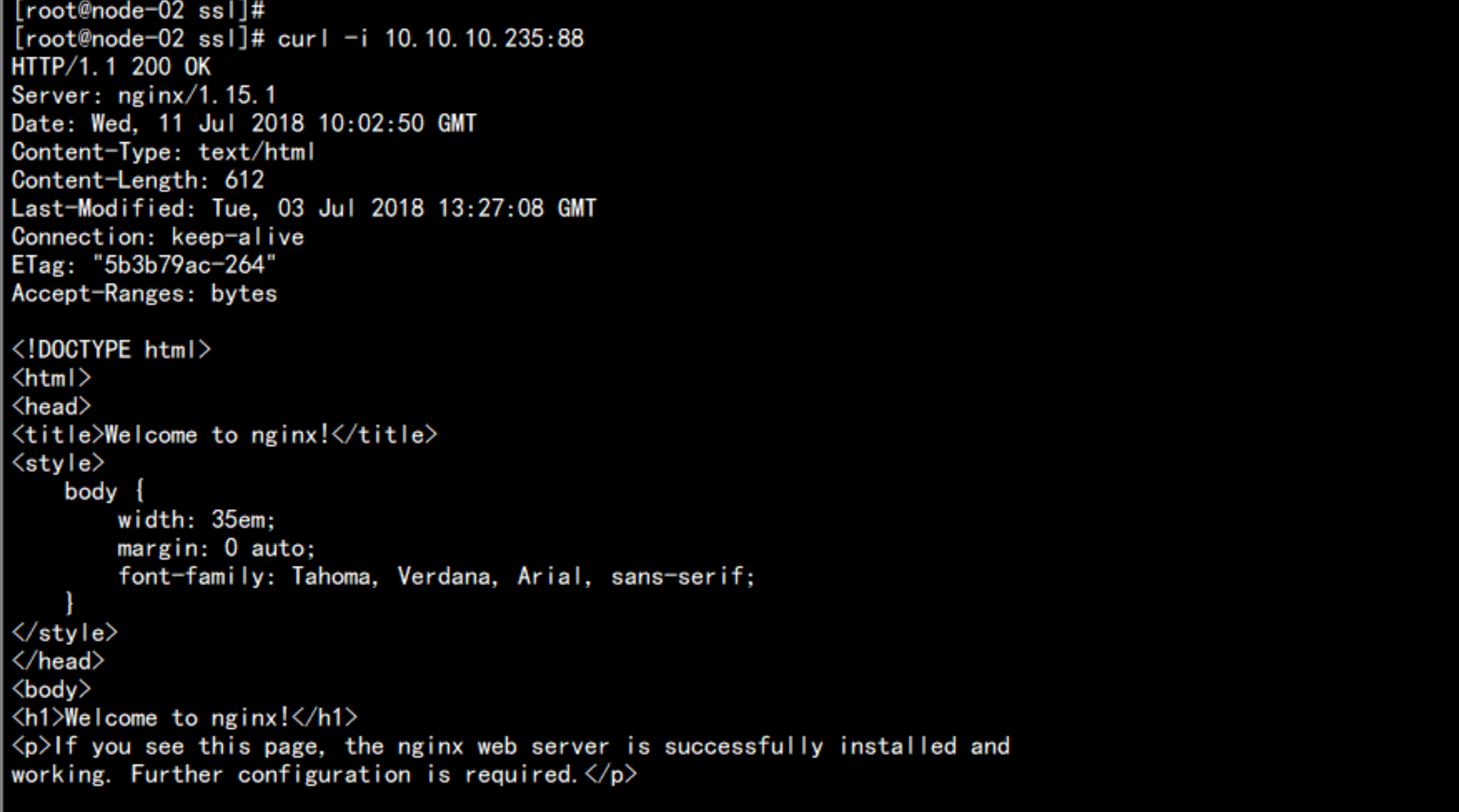

5: Running a test example

# kubectl run nginx --image=nginx --replicas=3

# kubectl get pod

# kubectl expose deployment nginx --port=88 --target-port=80 --type=NodePort

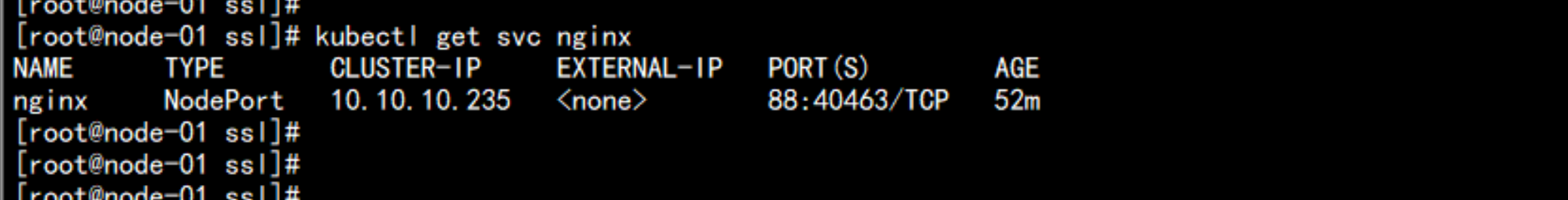

# kubectl get svc nginx

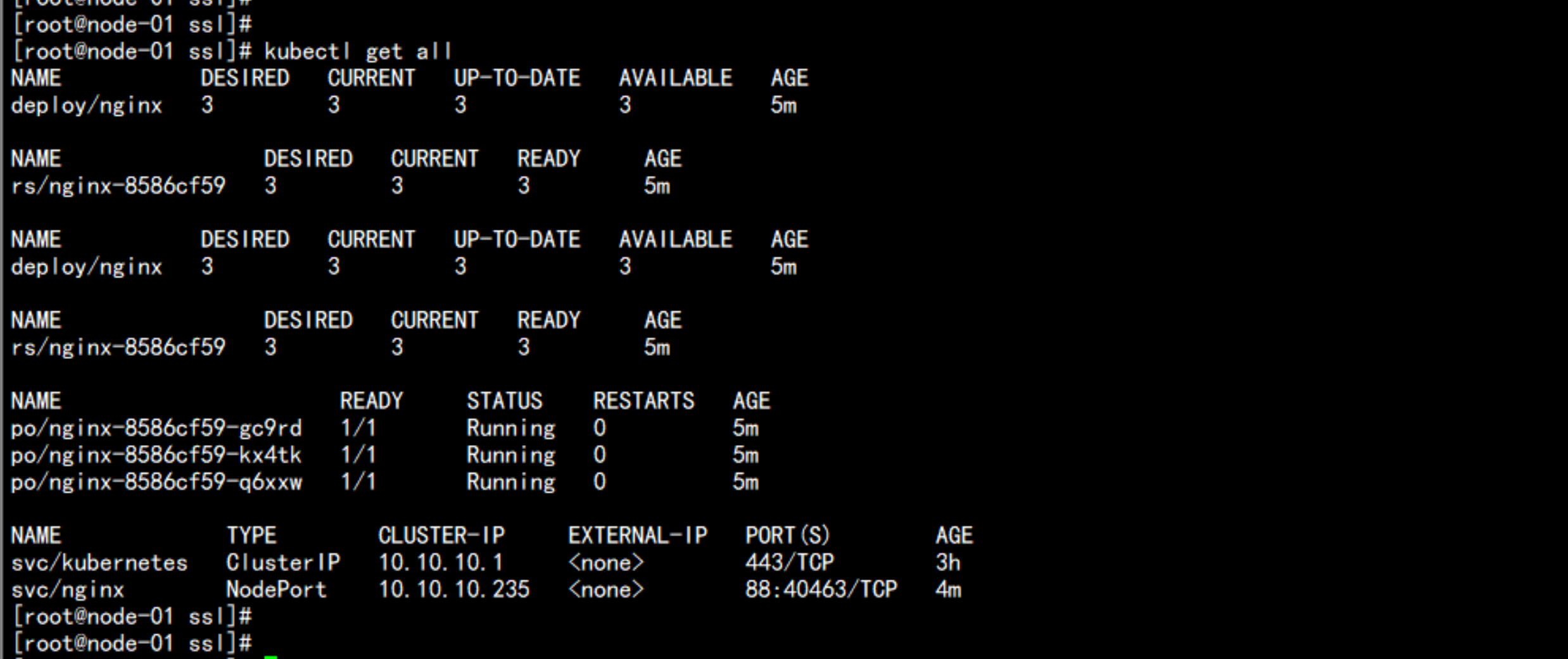

# kubectl get all

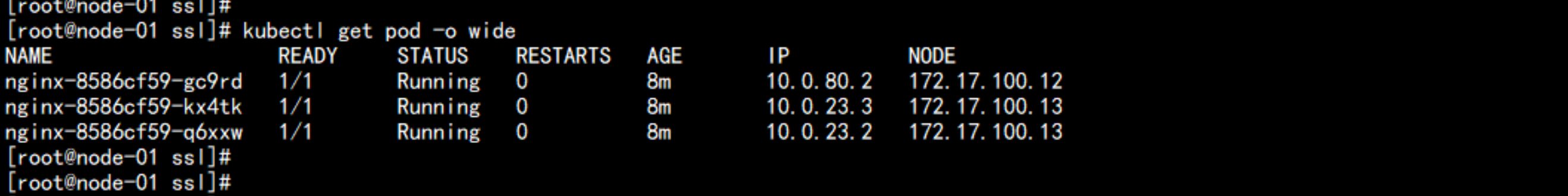

kubectl get pod -o wide

# kubectl get svc nginx

Access over the flanneld network (using the Service's cluster IP):

curl -i 10.10.10.235:88

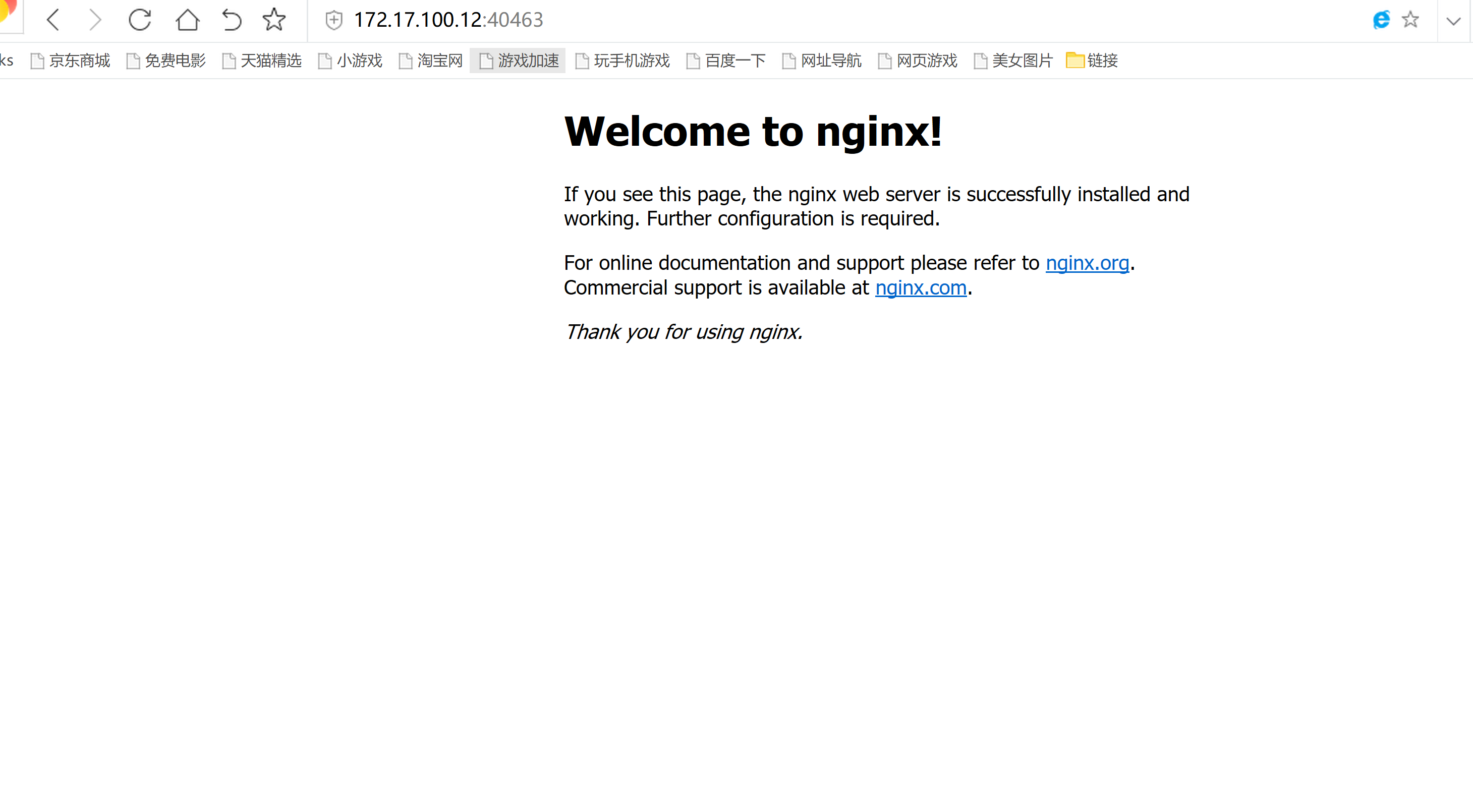

Access from the external network:

172.17.100.12:40463

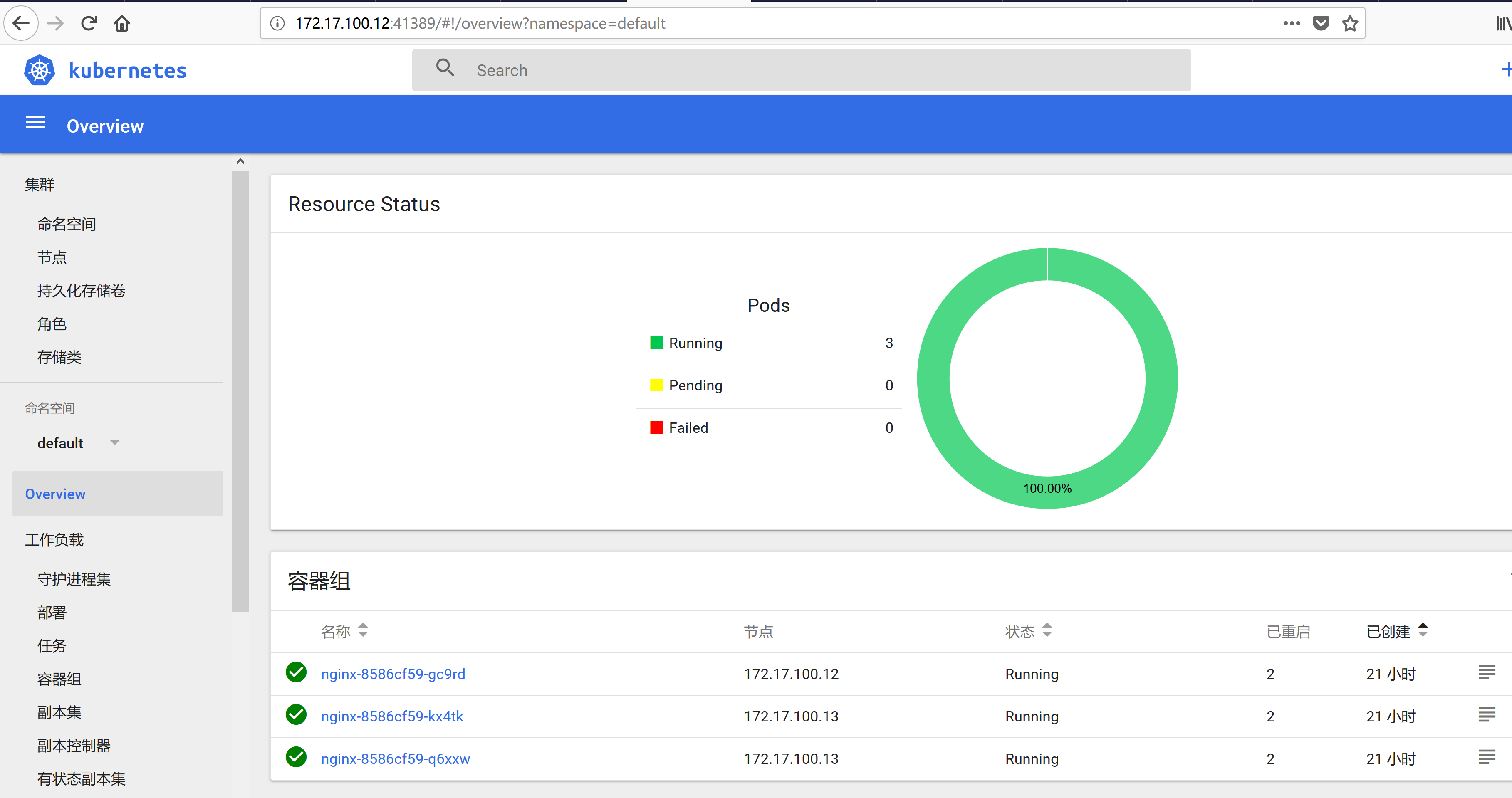

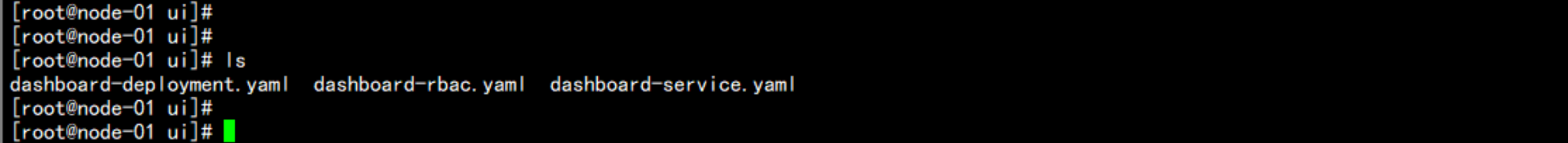
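The external port 40463 was not chosen by hand. For a NodePort Service, Kubernetes allocates a port from the range given to the API server with `--service-node-port-range=30000-50000`. The short sketch below checks that the port seen in this example falls inside that range.

```shell
#!/bin/sh
# Sketch: check that a NodePort falls inside the range configured on the
# API server with --service-node-port-range=30000-50000.
range="30000-50000"
node_port=40463          # the port shown in this article's example

low=${range%-*}          # 30000
high=${range#*-}         # 50000

if [ "$node_port" -ge "$low" ] && [ "$node_port" -le "$high" ]; then
  in_range=yes
else
  in_range=no
fi
echo "NodePort $node_port within $range: $in_range"
```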

6: The Kubernetes UI

mkdir -p /root/ui

Upload the files

dashboard-deployment.yaml dashboard-rbac.yaml dashboard-service.yaml

to /root/ui:

cd /root/ui

ls

Create the resources:

# kubectl create -f dashboard-rbac.yaml

# kubectl create -f dashboard-deployment.yaml

# kubectl create -f dashboard-service.yaml

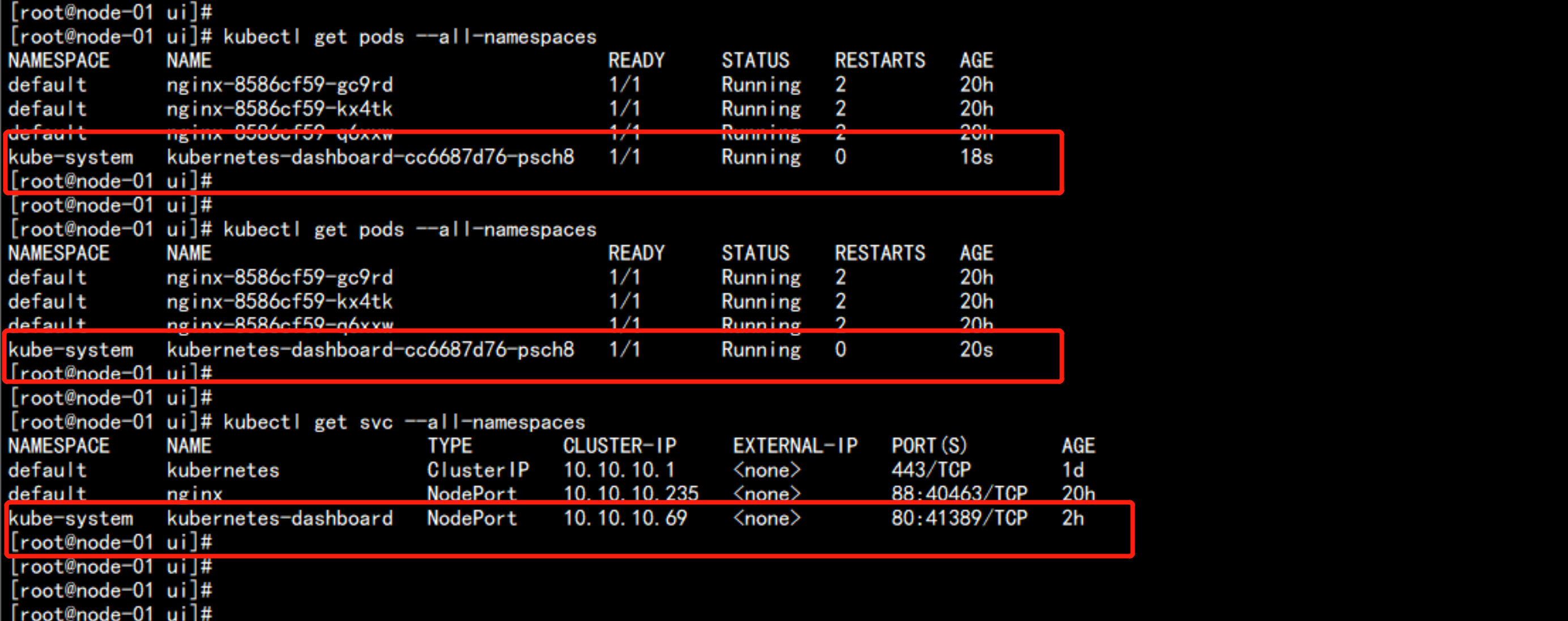

### View the Pods and Services

kubectl get pods --all-namespaces

kubectl get svc --all-namespaces

Open in a browser:

http://172.17.100.12:41389/ui

The Kubernetes UI installation is now complete.