http://blog.csdn.net/pipisorry/article/details/78362537

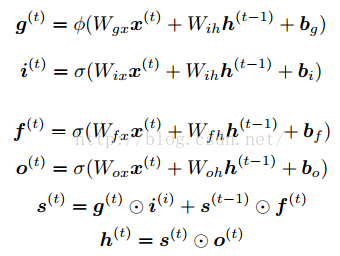

原始的LSTM

LSTM模型的拓展

[Greff, Klaus, et al. "LSTM: A search space odyssey." TNNLS2016] 探讨了基于Vanilla LSTM (Graves & Schmidhube (2005))之上的8个变体,并比较了它们之间的性能差异,包括:

- 没有输入门 (No Input Gate, NIG)

- 没有遗忘门 (No Forget Gate, NFG)

- 没有输出门 (No Output Gate, NOG)

- 没有输入激活函数 (No Input Activation Function, NIAF) (也就是没有输入门对应的tanh层)

- 没有输出激活函数 (No Output Activation Function, NOAF) (也就是没有输出门对应的tanh层)

- 没有"peephole connection" (No Peepholes, NP)

- 遗忘门与输入门结合 (Coupled Input and Forget Gate, CIFG)

- Full Gate Recurrence (FGR)

The FGR variant adds recurrent connections between all the gates (nine additional recurrent weight matrices).

关于不同变体的对比

[Greff, Klaus, et al. "LSTM: A search space odyssey." TNNLS2016]将8种LSTM变体与基本的Vanilla LSTM在TIMIT语音识别、手写字符识别、复调音乐建模三个应用中的表现情况进行了比较,得出了几个有趣的结论:

- Vanilla LSTM在所有的应用中都有良好的表现,其他8个变体并没有什么性能提升;

- 将遗忘门与输出门结合 (Coupled Input and Forget Gate, CIFG)以及没有"peephole connection" (No Peepholes, NP)简化了LSTM的结构,而且并不会对结果产生太大影响;

- 遗忘门和输出门是LSTM结构最重要的两个部分,其中遗忘门对LSTM的性能影响十分关键,输出门 is necessary whenever the cell state is unbounded (用来限制输出结果的边界);

- 学习率和隐含层个数是LSTM最主要的调节参数,而动量因子被发现影响不大,高斯噪音的引入对TIMIT实验性能提升显著,而对于另外两个实验则会带来反效果;

- 超参分析表明学习率与隐含层个数之间并没有什么关系,因此可以独立调参,另外,学习率可以先使用一个小的网络结构进行校准,这样可以节省很多时间。

[[NL系列] RNN & LSTM 网络结构及应用]

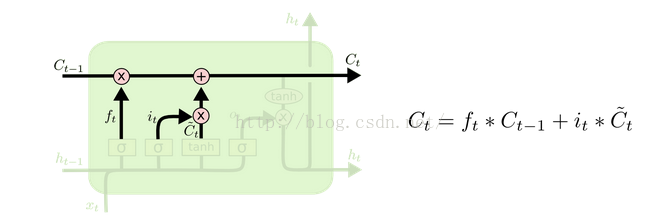

耦合的输入和遗忘门/遗忘门与输入门结合 (Coupled Input and Forget Gate, CIFG)

变成

其它变型

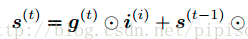

Recurrent Dropout without Memory Loss

the cell and hidden state update equations for LSTM will incorporate a single dropout (Hinton et al., 2012) gate, as developed in Recurrent Dropout without Memory Loss (Semeniuta et al., 2016), to help regularize the entire model during training.

[Stanislaw Semeniuta, Aliases Severyn, and Erhardt Barth. Recurrent dropout without memory loss. arXiv 2016]

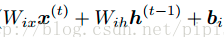

Layer Normalization

add the option to use a Layer Normalization layer in the LSTM

i^t = σ(LN(

i g f o s^t都需要LN。

Layer Normalization (Ba et al., 2016[Jimmy L. Ba, Jamie R. Kiros, and Geoffrey E. Hinton. Layer normalization. NIPS2016]). The central idea for the normalization techniques is to calculate the first two statistical moments of the inputs to the activation function, and to linearly scale the inputs to have zero mean and unit variance.

增加网络层数 Going Deep

既然LSTM(RNN)是神经网络,我们自然可以把一个个LSTM叠加起来进行深度学习。

比如,我们可以用两个独立的LSTM来构造两层的循环神经网络:一个LSTM接收输入向量,另一个将前一个LSTM的输出作为输入。这两个LSTM没有本质区别——这不外乎就是向量的输入输出而已,而且在反向传播过程中每个模块都伴随着梯度操作。

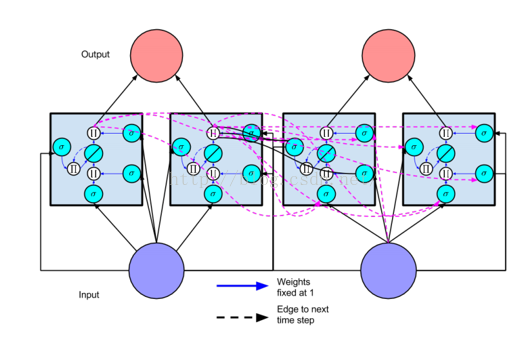

[Gated Feedback Recurrent Neural Networks]

左边是传统的stack RNN(三个RNN叠加),右边是升级版——RNN的hidden state除了会对下一时刻自己产生影响外,还会对其他的RNN产生影响,由reset gates控制。

深层LSTM模型

类似于深层RNN,使用多层的LSTM模型。著名的seq2seq模型使用的就是2层的LSTM来进行encode和decode的。multilayered Long Short-Term Memory (LSTM) to map the input sequence to a vector of a fixed dimensionality。

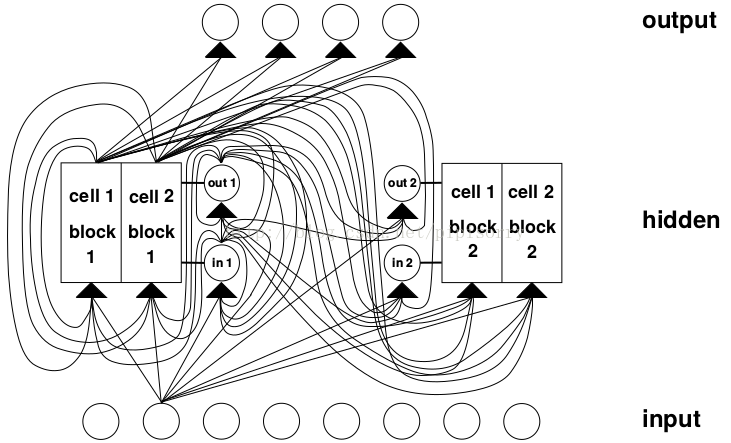

[Ilya Sutskever, Oriol Vinyals, and Quoc VV Le. Sequence to sequence learning with neural networks. In NIPS, 2014.]具有2个cell的LSTM模型

一般时间序列只需要一个block,而空间序列可能需要多个block?如下图。

2个cell的lstm模型就类似于rnn模型中的”动态跨时间步的rnn网络“。

Figure 2: Example of a net with 8 input units, 4 output units, and 2 memory cell blocks of size 2.

in 1 marks the input gate, out 1 marks the output gate, and cell 1 =block 1 marks the rst memory cell of block 1. cell 1 =block 1 's architecture is identical to the one in Figure 1, with gate units in 1 and out 1

The example assumes dense connectivity: each gate unit and each memory cell see all non-output units.

[Hochreiter, S., & Schmidhuber, J. Long short-term memory. Neural computation1997]

[LSTM模型]

LSTM的应用

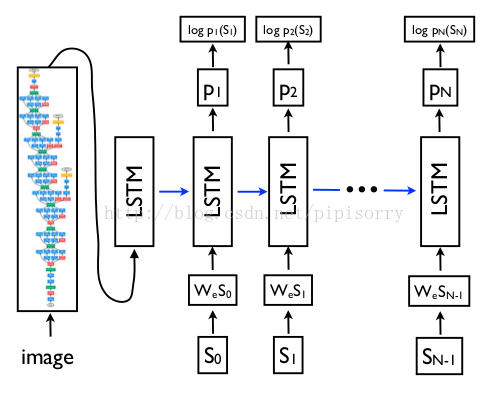

Image Caption

LSTM model combined with a CNN image embedder (as defined in [24]) and word embeddings.

[Show and Tell: Lessons learned from the 2015 MSCOCO Image Captioning Challenge]

from: http://blog.csdn.net/pipisorry/article/details/78362537

ref: