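Download Erlang and RabbitMQ

Erlang: http://www.erlang.org/downloads

RabbitMQ: http://www.rabbitmq.com/install-windows.html

Installation guide: https://blog.csdn.net/hzw19920329/article/details/53156015

After installing Erlang you need to configure an environment variable. RabbitMQ needs nothing more once it is installed: as long as you can see it running, it is ready. No plugins were installed for the examples here.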

List port usage on Windows 10: netstat -ano

Check a specific port on Windows 10: netstat -ano | findstr "5672"
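The same check can be done from Python, which is handy before running the scripts that follow. This is only a sketch: the helper name `is_port_open` is made up for illustration, and it assumes the broker listens on RabbitMQ's default AMQP port 5672 on the local machine.

```python
import socket

def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False

if __name__ == "__main__":
    # 5672 is the default AMQP port; adjust if your broker uses another one.
    print("5672 open:", is_port_open("127.0.0.1", 5672))
```

An open port only shows that something is listening there, not that it is RabbitMQ.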

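A simple RabbitMQ queue

receive.py: once started, the receiver gets every message already buffered in the queue.

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
channel = connection.channel()

# Declaring a queue is idempotent, so sender and receiver can both do it.
channel.queue_declare(queue='hello')

def callback(ch, method, properties, body):
    print('[x] Received %r' % body)

# pika 0.x signature; pika 1.0+ expects
# basic_consume(queue='hello', on_message_callback=callback, auto_ack=True).
channel.basic_consume(callback,
                      queue='hello',
                      no_ack=True)

print('[*] Waiting for messages. To exit press CTRL+C')
channel.start_consuming()

send.py: if the sender runs before the receiver has started, the messages stay in the queue until a receiver picks them up.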

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
channel = connection.channel()
channel.queue_declare(queue='hello')

# The default exchange ('') routes to the queue named by routing_key.
channel.basic_publish(exchange='',
                      routing_key='hello',
                      body='hello world!')
print('[x] Sent "hello world!"')
connection.close()

To connect to a remote RabbitMQ server, configure user credentials:

credentials = pika.PlainCredentials('hcl', '123456')
connection = pika.BlockingConnection(pika.ConnectionParameters('10.33.4.208', 5672, '/', credentials))
channel = connection.channel()

If the remote server runs Linux, first create a user on the RabbitMQ server:

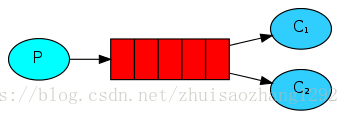

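sudo rabbitmqctl add_user hcl 123456
sudo rabbitmqctl set_permissions -p / hcl ".*" ".*" ".*"

In work-queue mode (one producer, several consumers on the same queue), RabbitMQ by default hands the messages sent by the producer (P) to the consumers (C) one after another, in turn, much like load balancing.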
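The in-turn distribution can be pictured with a few lines of plain Python. This is a toy model and not RabbitMQ's implementation; the function name and the consumer labels are hypothetical.

```python
from itertools import cycle

def round_robin_dispatch(messages, consumers):
    """Assign each message to the next consumer in turn."""
    assignment = {name: [] for name in consumers}
    turn = cycle(consumers)  # c1, c2, c3, c1, c2, ...
    for msg in messages:
        assignment[next(turn)].append(msg)
    return assignment

result = round_robin_dispatch(["m1", "m2", "m3", "m4", "m5"], ["c1", "c2", "c3"])
print(result)
# {'c1': ['m1', 'm4'], 'c2': ['m2', 'm5'], 'c3': ['m3']}
```

Each consumer gets roughly the same number of messages, regardless of how long each message takes to process.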

consumer.py

'''
Created on 2018-07-02

@author: hcl
'''
import pika
import time

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
channel = connection.channel()
channel.queue_declare(queue='task_queue')

def callback(ch, method, properties, body):
    print('[x] received %r' % body)
    time.sleep(20)  # simulate a slow task
    print('[x] Done')
    print('method.delivery_tag', method.delivery_tag)
    # Used together with no_ack=False: tells RabbitMQ that the message has been
    # processed and can be removed from the queue. If no ack is sent and the
    # consumer disconnects, the message stays queued and goes to another consumer.
    ch.basic_ack(delivery_tag=method.delivery_tag)

# With no_ack=True the message is removed as soon as it is delivered, whether or
# not the consumer finished processing it. The default, no_ack=False, keeps the
# message until it is acknowledged.
channel.basic_consume(callback,
                      queue='task_queue',
                      # no_ack=True
                      )

print('[*] Waiting for messages. To exit press CTRL+C')
channel.start_consuming()

producer.py

'''
Created on 2018-07-02

@author: hcl
'''
import pika
import time
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
channel = connection.channel()
channel.queue_declare(queue='task_queue')

message = ' '.join(sys.argv[1:]) or 'hello world %s' % time.time()
channel.basic_publish(exchange='',
                      routing_key='task_queue',  # name of the target queue
                      body=message,              # message body
                      properties=pika.BasicProperties(delivery_mode=2)  # make message persistent
                      )
print('[x] sent %r' % message)
connection.close()  # close the connection

Now start the producer, then start three consumers, and send several messages with the producer. You will see the messages handed to the consumers one after another, in turn.

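If a consumer dies before it has acknowledged a message, RabbitMQ redelivers that message to another consumer. If no consumer is available, the message stays in the queue on the RabbitMQ server until one connects.

Marking messages as persistent (delivery_mode=2) is not enough on its own, though. It only protects the data while the RabbitMQ server keeps running: if the server stops or crashes, a non-durable queue disappears together with its messages. To prevent that, declare the queue as durable in both the consumer and the producer.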

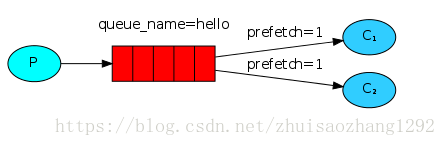

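channel.queue_declare(queue='task_queue', durable=True)

Fair dispatch of queue messages:

If RabbitMQ simply hands messages to consumers in order without considering their load, a consumer on a slow machine can pile up messages it cannot keep up with while a consumer on a fast machine stays nearly idle. To address this, set prefetch_count=1 on each consumer, which tells RabbitMQ not to send this consumer a new message until it has acknowledged the current one.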

channel.basic_qos(prefetch_count=1)
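The effect of prefetch_count=1 can be estimated with a small simulation. This is a sketch under simplifying assumptions (all messages are already queued, each consumer needs a fixed number of seconds per message), and the function names and the `speeds` values are made up for illustration.

```python
import heapq

def round_robin(n_messages, speeds):
    """Messages per consumer when dispatch ignores consumer load."""
    counts = [0] * len(speeds)
    for i in range(n_messages):
        counts[i % len(speeds)] += 1
    return counts

def prefetch_one(n_messages, speeds):
    """Messages per consumer when each may hold only one unacked message.

    speeds[i] is the (hypothetical) seconds consumer i needs per message.
    """
    counts = [0] * len(speeds)
    # Heap of (time at which the consumer becomes free, consumer index).
    free_at = [(0.0, i) for i in range(len(speeds))]
    heapq.heapify(free_at)
    for _ in range(n_messages):
        t, i = heapq.heappop(free_at)  # next consumer to finish its message
        counts[i] += 1
        heapq.heappush(free_at, (t + speeds[i], i))
    return counts

speeds = [1.0, 4.0]  # consumer 0 is four times faster than consumer 1
print(round_robin(10, speeds))   # [5, 5]
print(prefetch_one(10, speeds))  # [8, 2]
```

With plain round-robin both consumers get five messages each; with one message in flight per consumer, the fast consumer ends up handling most of the work.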

Complete code with message persistence and fair dispatch

producer.py

'''
Created on 2018-07-02

@author: hcl
'''
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

# durable=True: the queue definition survives a broker restart.
channel.queue_declare(queue='task_queue123', durable=True)

message = ' '.join(sys.argv[1:]) or "Hello World!"
channel.basic_publish(exchange='',
                      routing_key='task_queue123',
                      body=message,
                      properties=pika.BasicProperties(
                          delivery_mode=2,  # make message persistent
                      ))
print(" [x] Sent %r" % message)
connection.close()

consumer.py

'''

Created on 2018年7月2日

@author: hcl

'''

import pika

import time

connection = pika.BlockingConnection(pika.ConnectionParameters(

host='localhost'))

channel = connection.channel()

channel.queue_declare(queue='task_queue123', durable=True)

print(' [*] Waiting for messages. To exit press CTRL+C')

def callback(ch, method, properties, body):

print(" [x] Received %r" % body)

time.sleep(body.count(b'.'))

print(" [x] Done")

ch.basic_ack(delivery_tag = method.delivery_tag)

channel.basic_qos(prefetch_count=1)

channel.basic_consume(callback,

queue='task_queue123')

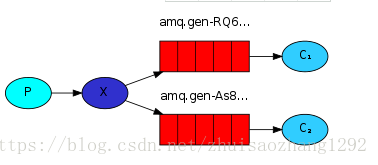

channel.start_consuming()之前的例子都基本都是1对1的消息发送和接收,即消息只能发送到指定的queue里,但有些时候你想让你的消息被所有的Queue收到,类似广播的效果,这时候就要用到exchange了

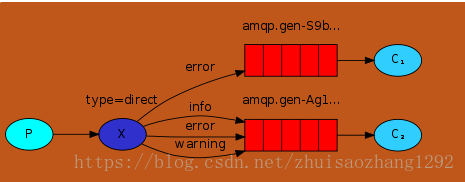

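An exchange is declared with a type, which determines which queues qualify to receive a message:

fanout: every queue bound to the exchange receives the message

direct: a queue receives the message when its binding key exactly equals the message's routing key

topic: a queue receives the message when the routing key matches the binding key it was bound with (the binding key can be a pattern)

Pattern symbols: a routing key is a list of words separated by dots; * matches exactly one word and # matches zero or more words

Example: #.a matches a.a, aa.a, aaa.a, and also a and x.y.a

*.a matches a.a, b.a, c.a, but not x.y.a

Note: a topic exchange with a binding key of # behaves like fanout

headers: the message headers determine which queues receive the message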
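The topic wildcards can be made concrete with a small matcher. This is a minimal sketch of the matching rule, not the broker's actual implementation; `topic_matches` is a hypothetical helper name.

```python
def topic_matches(binding_key: str, routing_key: str) -> bool:
    """Return True if routing_key matches the topic pattern binding_key.

    Keys are dot-separated words; '*' stands for exactly one word and
    '#' stands for zero or more words.
    """
    pattern = binding_key.split(".")
    words = routing_key.split(".")

    def match(p: int, w: int) -> bool:
        if p == len(pattern):
            return w == len(words)  # pattern used up: all words must be too
        if pattern[p] == "#":
            # '#' absorbs nothing (move on in the pattern) or one more word.
            return match(p + 1, w) or (w < len(words) and match(p, w + 1))
        if w == len(words):
            return False  # pattern still expects a word
        if pattern[p] == "*" or pattern[p] == words[w]:
            return match(p + 1, w + 1)
        return False

    return match(0, 0)

print(topic_matches("*.a", "b.a"))             # True
print(topic_matches("*.a", "x.y.a"))           # False
print(topic_matches("#.a", "x.y.a"))           # True
print(topic_matches("kern.*", "kern.critical"))  # True
```

The recursion is exponential in the worst case, which is acceptable for short keys in an illustration.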

producer.py

'''
Created on 2018-07-02

@author: hcl
'''
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

# fanout: every queue bound to this exchange receives the message
channel.exchange_declare(exchange='logs',
                         exchange_type='fanout')

message = ' '.join(sys.argv[1:]) or "info: Hello World!"
channel.basic_publish(exchange='logs',
                      routing_key='',  # ignored by fanout exchanges
                      body=message)
print(" [x] Sent %r" % message)
connection.close()

consumer.py

'''
Created on 2018-07-02

@author: hcl
'''
import pika

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

channel.exchange_declare(exchange='logs',
                         exchange_type='fanout')

# No queue name is given, so RabbitMQ generates a random one; exclusive=True
# deletes the queue once the consumer using it disconnects.
result = channel.queue_declare(exclusive=True)
queue_name = result.method.queue

channel.queue_bind(exchange='logs',
                   queue=queue_name)

print(' [*] Waiting for logs. To exit press CTRL+C')

def callback(ch, method, properties, body):
    print(" [x] %r" % body)

channel.basic_consume(callback,
                      queue=queue_name,
                      no_ack=True)
channel.start_consuming()

Receiving messages selectively

RabbitMQ also supports routing by keyword: a queue is bound to the exchange with one or more keywords, the sender publishes each message to the exchange with a keyword, and the exchange uses that keyword to decide which queues receive the message.
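Keyword routing can be modelled in memory before looking at the pika version. This is a toy sketch, not how the broker is implemented; the class `DirectExchange` and the queue names are hypothetical.

```python
from collections import defaultdict

class DirectExchange:
    """Toy model: a queue gets a message when one of its binding keys
    equals the message's routing key exactly."""

    def __init__(self):
        self.bindings = defaultdict(set)  # binding key -> queue names
        self.queues = defaultdict(list)   # queue name -> delivered bodies

    def bind(self, queue, routing_key):
        self.bindings[routing_key].add(queue)

    def publish(self, routing_key, body):
        # Messages whose routing key has no binding are silently dropped.
        for queue in self.bindings.get(routing_key, ()):
            self.queues[queue].append(body)

ex = DirectExchange()
ex.bind("q_errors", "error")
for key in ("info", "warning", "error"):
    ex.bind("q_all", key)

ex.publish("error", "disk full")
ex.publish("info", "started")
ex.publish("debug", "not delivered anywhere")

print(ex.queues["q_errors"])  # ['disk full']
print(ex.queues["q_all"])     # ['disk full', 'started']
```

One queue may be bound with several keywords, and several queues may share a keyword, in which case each of them receives a copy.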

producer.py

'''
Created on 2018-07-02

@author: hcl
'''
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

inputstr = input('Please input message type and message:').strip()
inputlist = inputstr.split()

# First word is the severity (used as routing key), the rest is the message.
severity = inputlist[0] if inputlist else 'info'
message = ' '.join(inputlist[1:]) or 'Hello World!'
channel.basic_publish(exchange='direct_logs',
                      routing_key=severity,
                      body=message)
print(" [x] Sent %r:%r" % (severity, message))
connection.close()

consumer.py

'''
Created on 2018-07-02

@author: hcl
'''
import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

channel.exchange_declare(exchange='direct_logs',
                         exchange_type='direct')

result = channel.queue_declare(exclusive=True)
queue_name = result.method.queue

# severities = sys.argv[1:]
inputstr = input('Please input your message type:').strip()
severities = inputstr.split()
if not severities:
    sys.stderr.write("Usage: %s [info] [warning] [error]\n" % sys.argv[0])
    sys.exit(1)

# Bind the queue once per severity it should receive.
for severity in severities:
    channel.queue_bind(exchange='direct_logs',
                       queue=queue_name,
                       routing_key=severity)

print(' [*] Waiting for logs. To exit press CTRL+C')

def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))

channel.basic_consume(callback,
                      queue=queue_name,
                      no_ack=True)
channel.start_consuming()

Although using the direct exchange improved our system, it still has limitations - it can't do routing based on multiple criteria.

In our logging system we might want to subscribe to not only logs based on severity, but also based on the source which emitted the log. You might know this concept from the syslog unix tool, which routes logs based on both severity (info/warn/crit...) and facility (auth/cron/kern...).

That would give us a lot of flexibility - we may want to listen to just critical errors coming from 'cron' but also all logs from 'kern'.

producer.py

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

channel.exchange_declare(exchange='topic_logs',
                         exchange_type='topic')

inputstr = input('Please input message type and message:').strip()
inputlist = inputstr.split()

# First word is the routing key (e.g. kern.critical), the rest is the message.
routing_key = inputlist[0] if inputlist else 'anonymous.info'
message = ' '.join(inputlist[1:]) or 'Hello World!'
channel.basic_publish(exchange='topic_logs',
                      routing_key=routing_key,
                      body=message)
print(" [x] Sent %r:%r" % (routing_key, message))
connection.close()

consumer.py

import pika
import sys

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

channel.exchange_declare(exchange='topic_logs',
                         exchange_type='topic')

result = channel.queue_declare(exclusive=True)
queue_name = result.method.queue

# binding_keys = sys.argv[1:]
inputstr = input('Please input your message type:').strip()
binding_keys = inputstr.split()
if not binding_keys:
    sys.stderr.write("Usage: %s [binding_key]...\n" % sys.argv[0])
    sys.exit(1)

for binding_key in binding_keys:
    channel.queue_bind(exchange='topic_logs',
                       queue=queue_name,
                       routing_key=binding_key)

print(' [*] Waiting for logs. To exit press CTRL+C')

def callback(ch, method, properties, body):
    print(" [x] %r:%r" % (method.routing_key, body))

channel.basic_consume(callback,
                      queue=queue_name,
                      no_ack=True)
channel.start_consuming()

Remote procedure call (RPC)

To illustrate how an RPC service could be used we're going to create a simple client class. It's going to expose a method named call which sends an RPC request and blocks until the answer is received:
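The request/reply flow can be modelled in-process first. This is a simplified sketch with no broker involved: the two deques stand in for `rpc_queue` and the client's callback queue, and `server_step` with its squaring "work" is hypothetical.

```python
import uuid
from collections import deque

request_queue = deque()  # stands in for 'rpc_queue'
reply_queue = deque()    # stands in for the client's callback queue

def server_step():
    """Handle one request and reply, echoing the correlation id."""
    corr_id, n = request_queue.popleft()
    reply_queue.append((corr_id, n * n))  # hypothetical work: square the input

def call(n):
    """Send a request, then wait for the reply with the matching id."""
    corr_id = str(uuid.uuid4())
    request_queue.append((corr_id, n))
    server_step()  # a real server would run in a separate process
    while reply_queue:
        reply_id, result = reply_queue.popleft()
        if reply_id == corr_id:  # skip replies meant for other calls
            return result
    raise RuntimeError("no matching reply")

reply_queue.append(("stale-id", -1))  # leftover reply from an earlier call
print(call(7))  # 49
```

The correlation id is what lets the client discard the stale reply and pick out the answer to its own request.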

RPC.client.py:

'''
Created on 2018-07-04

@author: hcl
'''
import pika
import uuid

class FibonacciRpcClient(object):

    def __init__(self):
        self.connection = pika.BlockingConnection(pika.ConnectionParameters(
            host='localhost'))
        self.channel = self.connection.channel()

        # Exclusive, server-named queue that receives the replies.
        result = self.channel.queue_declare(exclusive=True)
        self.callback_queue = result.method.queue

        self.channel.basic_consume(self.on_response, no_ack=True,
                                   queue=self.callback_queue)

    def on_response(self, ch, method, props, body):
        # Accept only the reply that belongs to the pending request.
        if self.corr_id == props.correlation_id:
            self.response = body

    def call(self, n):
        self.response = None
        self.corr_id = str(uuid.uuid4())
        self.channel.basic_publish(exchange='',
                                   routing_key='rpc_queue',
                                   properties=pika.BasicProperties(
                                       reply_to=self.callback_queue,
                                       correlation_id=self.corr_id,
                                   ),
                                   body=str(n))
        # Block until on_response has stored the reply.
        while self.response is None:
            self.connection.process_data_events()
        return int(self.response)

fibonacci_rpc = FibonacciRpcClient()

print(" [x] Requesting fib(4)")
response = fibonacci_rpc.call(4)
print(" [.] Got %r" % response)

RPC.server.py

'''
Created on 2018-07-04

@author: hcl
'''
import pika
import time

connection = pika.BlockingConnection(pika.ConnectionParameters(
    host='localhost'))
channel = connection.channel()

channel.queue_declare(queue='rpc_queue')

def fib(n):
    if n == 0:
        return 0
    elif n == 1:
        return 1
    else:
        return fib(n-1) + fib(n-2)

def on_request(ch, method, props, body):
    n = int(body)
    print(" [.] fib(%s)" % n)
    response = fib(n)

    # Reply to the queue named by the client and echo its correlation id.
    ch.basic_publish(exchange='',
                     routing_key=props.reply_to,
                     properties=pika.BasicProperties(
                         correlation_id=props.correlation_id),
                     body=str(response))
    ch.basic_ack(delivery_tag=method.delivery_tag)

channel.basic_qos(prefetch_count=1)
channel.basic_consume(on_request, queue='rpc_queue')

print(" [x] Awaiting RPC requests")
channel.start_consuming()

Redis

To install redis on Windows and Linux, see:

http://www.runoob.com/redis/redis-install.html