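In the information-matching stage, jobs need to be matched by weight. The user enters the following fields:

(job_name (desired job), city (desired work city), sala (desired salary), self_jy (own work experience), self_xl (own education level))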

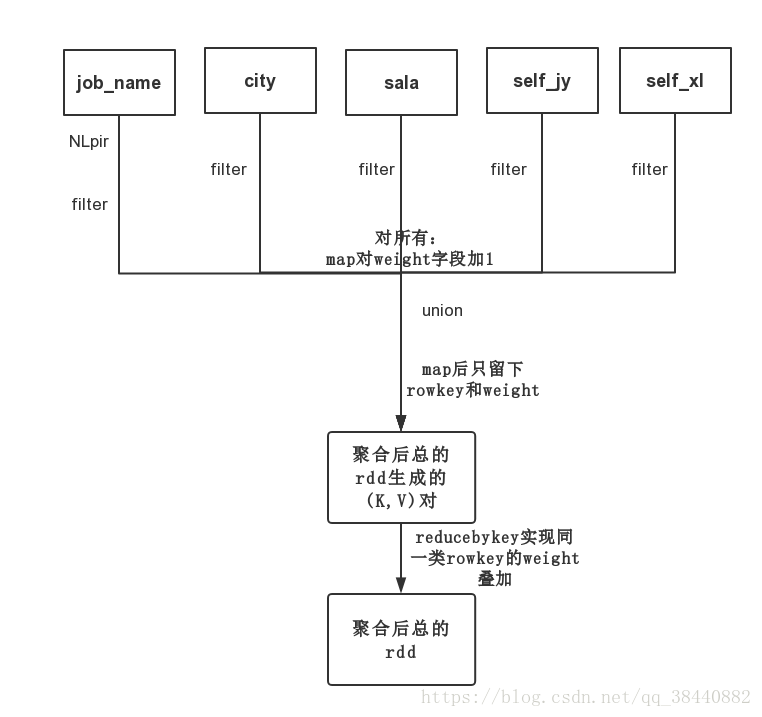
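As a minimal sketch, the five inputs can travel as one immutable value instead of five separate parameters. The case class `MatchQuery` and its `validate` method are hypothetical and not part of the original program; the field names and the `Int` type of `sala` follow the signature of `PP.main`.

```scala
// Hypothetical container for the user's matching input; field names follow PP.main.
final case class MatchQuery(
    job_name: String, // desired job
    city: String,     // desired work city
    sala: Int,        // desired salary
    self_jy: String,  // own experience label, e.g. "3-5年"
    self_xl: String   // own education label, e.g. "本科"
) {
  // Returns the problems found; an empty list means the query is usable.
  def validate: List[String] =
    List(
      if (job_name.trim.isEmpty) Some("job_name is empty") else None,
      if (city.trim.isEmpty) Some("city is empty") else None,
      if (sala <= 0) Some("sala must be positive") else None
    ).flatten
}
```

A caller could check `validate` first and only then pass `q.job_name, q.city, q.sala, q.self_jy, q.self_xl` on to the matching job.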

We want to use these conditions to match jobs intelligently. The approach is as follows:

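The Spark analysis program reads the companies' job postings from HBase into an RDD and carries out the matching with a series of RDD operators. For the job name, it calls the NLPIR natural language processing platform to segment the desired job name into words, keeps the jobs whose names contain all of those words, and adds 1 to their weight. Each of the other criteria (city, salary, experience and education) likewise adds 1 to the weight of the jobs that satisfy it, and the weights are then summed per job. The overall flow is shown in Figure 1 (the Spark program is written in Scala).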
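The job-name criterion keeps a posting only if its name contains every segmented word, so each word has to narrow the set left by the previous one. A minimal sketch on plain Scala collections, assuming the segmenter returns the words separated by spaces (which is how the program splits the output of `NLPIR_ParagraphProcess`); `JobNameMatch`, `Job` and `matchesAllWords` are hypothetical names. `RDD.filter` chains the same way as the `Seq.filter` used here.

```scala
object JobNameMatch {
  // Hypothetical minimal job record: rowkey, job name and current weight.
  final case class Job(rowkey: String, jobName: String, weight: Int)

  // Keeps jobs whose name contains every word, then adds 1 to their weight.
  def matchesAllWords(jobs: Seq[Job], segmented: String): Seq[Job] = {
    val words = segmented.split(" ").filter(_.nonEmpty) // drop empty tokens
    words
      .foldLeft(jobs)((acc, w) => acc.filter(_.jobName.contains(w))) // narrow once per word
      .map(j => j.copy(weight = j.weight + 1))
  }
}
```

With the words "大数据 开发", "大数据开发工程师" passes both filters, while "Java开发工程师" and "大数据运维" each fail one of them.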

Figure 1: Overall matching flowchart
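The flow boils down to this: every criterion selects a subset of postings, each selected posting earns 1 point for that criterion, and the points are summed per rowkey. A minimal sketch of that aggregation on plain Scala collections (Scala 2.13+, where `groupMapReduce` plays the role of `reduceByKey` over the union of RDDs); `WeightedScore`, `Posting` and `score` are hypothetical, and the sample data in the usage is illustrative only.

```scala
object WeightedScore {
  // Hypothetical posting with only the fields the criteria look at.
  final case class Posting(rowkey: String, jobName: String, address: String,
                           salaLow: Int, salaHigh: Int)

  // One point per satisfied criterion, summed per rowkey (union + reduceByKey).
  def score(postings: Seq[Posting], criteria: Seq[Posting => Boolean]): Map[String, Int] =
    criteria
      .flatMap(c => postings.filter(c).map(p => p.rowkey -> 1))
      .groupMapReduce(_._1)(_._2)(_ + _)
}
```

In this sketch, as with `reduceByKey` over the union, a posting that satisfies no criterion is absent from the result rather than scored 0.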

The full code is as follows:

```scala
package ReadHBase

import org.apache.hadoop.hbase.HBaseConfiguration
import org.apache.hadoop.hbase.client._
import org.apache.hadoop.hbase.io.ImmutableBytesWritable
import org.apache.hadoop.hbase.mapreduce.TableInputFormat
import org.apache.hadoop.hbase.util.Bytes
import org.apache.spark.{SparkConf, SparkContext}

import scala.util.Try

object PP {

  // Reads cf:<qualifier> from an HBase row; a missing cell becomes "" instead of null
  def col(r: Result, qualifier: String): String =
    Option(r.getValue(Bytes.toBytes("cf"), Bytes.toBytes(qualifier)))
      .map(b => Bytes.toString(b))
      .getOrElse("")

  // Parses a salary bound; non-numeric text yields None
  def toIntOpt(s: String): Option[Int] = Try(s.trim.toInt).toOption

  // Personalized matching; called with the user's input (not a JVM entry point)
  def main(job_name: String, city: String, sala: Int, self_jy: String, self_xl: String): Unit = {
    val sparkConf = new SparkConf().setAppName("ZNPP").setMaster("local")
    val sc = new SparkContext(sparkConf)
    val tablename = "bigdata1"

    val conf = HBaseConfiguration.create()
    // ZooKeeper quorum; putting hbase-site.xml on the classpath also works,
    // but setting it here in the program is recommended
    conf.set("hbase.zookeeper.quorum", "master")
    // ZooKeeper client port (default 2181)
    conf.set("hbase.zookeeper.property.clientPort", "2181")
    conf.set(TableInputFormat.INPUT_TABLE, tablename)

    // Read the table into an RDD using the configuration above
    val hBaseRDD = sc.newAPIHadoopRDD(conf, classOf[TableInputFormat],
      classOf[ImmutableBytesWritable], classOf[Result])

    // One 20-field tuple per posting; the weight (field 3) starts at "0"
    val rdd = hBaseRDD.map { case (_, r) => (
      col(r, "rowkey"), col(r, "job_name"), "0", col(r, "job_link"),                           // 1-4
      col(r, "job_num"), col(r, "job_fkl"), col(r, "com_desc"), col(r, "com_wel"),             // 5-8
      col(r, "com_name"), col(r, "com_link"), col(r, "job_sala_low"), col(r, "job_sala_high"), // 9-12
      col(r, "job_sala"), col(r, "com_adr"), col(r, "create_time"), col(r, "com_prop"),        // 13-16
      col(r, "com_gm"), col(r, "self_jy"), col(r, "self_xl"), col(r, "job_desc"))              // 17-20
    }

    // Load NLPIR from the path of its native library and initialize it
    val instance = CLibrary.getInstance("nlplib/libNLPIR.so")
    if (instance.NLPIR_Init(".", 1, "0") == 0) {
      Console.err.println("NLPIR initialization failed!\n" + instance.NLPIR_GetLastErrorMsg)
      sc.stop()
      return
    }

    // Segment the desired job name into words (space-separated output)
    val words = instance.NLPIR_ParagraphProcess(job_name, 0).split(" ").filter(_.nonEmpty)

    // 1) Job name contains every word: each filter narrows the previous result
    val rdd1 = words.foldLeft(rdd)((acc, w) => acc.filter(_._2.contains(w)))
      .map(x => x.copy(_3 = (x._3.toInt + 1).toString))

    // 2) Address in the desired city; "negotiable" and "below 1000" salaries dropped
    val rdd2 = rdd.filter(_._14.contains(city))
      .map(x => x.copy(_3 = (x._3.toInt + 1).toString))
      .filter(x => !x._13.contains("面议") && !x._13.contains("1000元以下"))

    // 3) Desired salary strictly between job_sala_low and job_sala_high
    val rdd3 = rdd.filter(x => toIntOpt(x._11).exists(lo =>
        toIntOpt(x._12).exists(hi => sala > lo && sala < hi)))
      .map(x => x.copy(_3 = (x._3.toInt + 1).toString))

    // 4) Required experience level at most the applicant's
    val selfJy = transform.JY1(self_jy)
    val rdd4 = rdd.filter(x => transform.JY1(x._18) <= selfJy)
      .map(x => x.copy(_3 = (x._3.toInt + 1).toString))

    // 5) Required education level at most the applicant's (education uses XL1)
    val selfXl = transform.XL1(self_xl)
    val rdd5 = rdd.filter(x => transform.XL1(x._19) <= selfXl)
      .map(x => x.copy(_3 = (x._3.toInt + 1).toString))

    // Merge all matches and drop "below 1000" salaries
    val rdd6 = rdd1.union(rdd2).union(rdd3).union(rdd4).union(rdd5)
      .filter(x => !x._13.contains("1000元以下"))

    // Sum the weights per rowkey
    val rdd7 = rdd6.map(x => (x._1, x._3.toInt)).reduceByKey(_ + _)

    // Write each weight back to cf:weight
    val conn = ConnectionFactory.createConnection(conf)
    val A = rdd7.collect()
    println(A.length)
    A.foreach(println)
    for ((rowkey, weight) <- A) {
      HBase_Con.Hbase_insert(conn, tablename, rowkey, "cf", "weight", weight.toString)
    }
    conn.close()

    PP1.main(sc)
    sc.stop()
  }
}
```

The `transform` object called by this code performs feature extraction; its code is as follows:

```scala
package ReadHBase

// Parameter conversion: "1" refers to company (posting) data,
// "2" to the applicant's resume data
object transform {

  // Resume major: 1 if it appears in the major list file, else 0
  def ZY2(zy1: String): Int =
    if (Utils.filetostring("/home/hhh/major.txt").contains(zy1)) 1 else 0

  // Posting major requirement: 1 if it asks for a "related major" (相关专业), else 0
  def ZY1(zy1: String): Int =
    if (zy1.contains("相关专业")) 1 else 0

  // Experience label -> level; anything else (e.g. no requirement) -> 0
  def JY1(jy: String): Int = jy match {
    case "1-3年"   => 1
    case "3-5年"   => 2
    case "5-10年"  => 3
    case "10年以上" => 4
    case _         => 0
  }

  // Level -> experience label (inverse of JY1)
  def JY_1(jy1: Int): String = jy1 match {
    case 1 => "1-3年"
    case 2 => "3-5年"
    case 3 => "5-10年"
    case 4 => "10年以上"
    case _ => "无要求" // 0 ("no requirement"); the default also avoids a MatchError
  }

  // Education label -> level: 专科 (junior college) 1, 本科 (bachelor) 2, 硕士 (master) 3
  def XL1(xl: String): Int = xl match {
    case "专科" => 1
    case "本科" => 2
    case "硕士" => 3
    case _     => 0
  }

  // Level -> education label (inverse of XL1)
  def XL_1(xl1: Int): String = xl1 match {
    case 1 => "专科"
    case 2 => "本科"
    case 3 => "硕士"
    case _ => "其他" // 0 ("other"); the default also avoids a MatchError
  }

  // City tier: position of the first list file (n1, 1 .. 5) containing the city, else 0
  def City(city: String): Int = {
    val files = Seq("n1", "1", "2", "3", "4", "5").map(n => s"/home/hhh/city/$n.txt")
    files.indexWhere(f => Utils.filetostring(f).contains(city)) + 1
  }

  // School tier: 1.txt lists 985 universities, 2.txt lists 211 universities
  def School(school: String): String = {
    val list985 = Utils.filetostring("/home/hhh/school/1.txt")
    val list211 = Utils.filetostring("/home/hhh/school/2.txt")
    if (list985.contains(school)) "985高校大学生"
    else if (list211.contains(school)) "211高校大学生"
    else ""
  }

  // Ability lookup against two list files; returns the same labels as School
  def nengli(nengli: String): String = {
    val list1 = Utils.filetostring("/home/hhh/nengli/1.txt")
    val list2 = Utils.filetostring("/home/hhh/nengli/2.txt")
    if (list1.contains(nengli)) "985高校大学生"
    else if (list2.contains(nengli)) "211高校大学生"
    else ""
  }
}
```
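`JY1` and `XL1` turn requirement labels into ordered levels, so an experience or education requirement can be checked with a single integer comparison. A minimal sketch of that check, reusing the labels and levels of `transform`; the object `LevelMatch` and the function `meets` are hypothetical, and counting an equal level as a match (`<=`) is an assumption.

```scala
object LevelMatch {
  // Same label -> level mapping as transform.JY1 (0 = no requirement / unknown).
  def jyLevel(jy: String): Int = jy match {
    case "1-3年"   => 1
    case "3-5年"   => 2
    case "5-10年"  => 3
    case "10年以上" => 4
    case _         => 0
  }

  // Same label -> level mapping as transform.XL1.
  def xlLevel(xl: String): Int = xl match {
    case "专科" => 1
    case "本科" => 2
    case "硕士" => 3
    case _     => 0
  }

  // True when the applicant's level reaches the posting's required level.
  def meets(required: String, own: String, level: String => Int): Boolean =
    level(required) <= level(own)
}
```

Each mapping has to be applied to its own field: an education requirement must go through `xlLevel`, because `jyLevel` maps every education label to 0.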