Note: this code was typed up while following the ML course taught by Professor Hung-yi Lee (李宏毅) of National Taiwan University.

- First, the imports

import numpy as np

np.random.seed(1337)

# https://keras.io/

!pip install -q keras

import keras

from keras.models import Sequential

from keras.layers.core import Dense, Dropout, Activation

from keras.layers import Convolution2D, MaxPooling2D, Flatten

from keras.optimizers import SGD, Adam

from keras.utils import np_utils

from keras.datasets import mnist

#categorical_crossentropy

- Next, define the function load_data()

def load_data():
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    number = 10000  # use only the first 10000 training images
    x_train = x_train[0 : number]
    y_train = y_train[0 : number]
    # flatten each 28x28 image into a 784-dimensional vector
    x_train = x_train.reshape(number, 28*28)
    x_test = x_test.reshape(x_test.shape[0], 28*28)
    x_train = x_train.astype('float32')
    # bug fix: this line originally read x_train.astype('float32'), which replaced the
    # test images with training images while y_test still held the test labels, so the
    # test accuracies quoted in this post were likely measured on mismatched data
    x_test = x_test.astype('float32')
    #convert class vectors to binary class matrices
    y_train = np_utils.to_categorical(y_train, 10)
    y_test = np_utils.to_categorical(y_test, 10)
    #x_test = np.random.normal(x_test)
    # normalize pixel values from [0, 255] to [0, 1]
    x_train = x_train/255
    x_test = x_test/255
    return (x_train, y_train), (x_test, y_test)

- First test run

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'mse', optimizer = SGD(lr = 0.1), metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 100, epochs = 20)

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

print('\nTest Acc:', result[1])

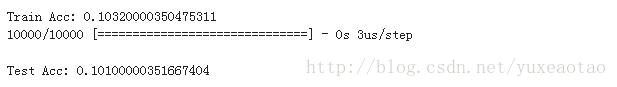

运行结果如下:

说明training的时候就没有train好。

- Modification 1: change the loss from mse to categorical_crossentropy

#Modification 1: change the loss from mse to categorical_crossentropy

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 10, activation = 'softmax'))

#changed here

model.compile(loss = 'categorical_crossentropy', optimizer = SGD(lr = 0.1), metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 100, epochs = 20)

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

print('\nTest Acc:', result[1])

The results are as follows:

Training accuracy rises from 11% to 85% (although test accuracy drops even lower).
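One commonly given reason for this jump is that, with a softmax output, the gradient of mse with respect to the logits can become tiny when the network is confidently wrong, while the cross-entropy gradient stays large. The NumPy sketch below illustrates that effect for a single hand-picked logit vector. The helper names and the example values are illustrative assumptions, not part of the course code.

```python
import numpy as np

def softmax(z):
    # subtract the max for numerical stability
    e = np.exp(z - z.max())
    return e / e.sum()

def grad_cross_entropy(z, y):
    # gradient of categorical cross-entropy w.r.t. the logits z
    return softmax(z) - y

def grad_mse(z, y):
    # gradient of mean((p - y)^2) w.r.t. the logits z, via the softmax Jacobian
    p = softmax(z)
    jac = np.diag(p) - np.outer(p, p)      # dp_i / dz_j (symmetric)
    return jac @ (2.0 * (p - y) / len(p))

# a confidently wrong prediction: the network favors class 0, the label is class 3
z = np.zeros(10)
z[0] = 10.0
y = np.zeros(10)
y[3] = 1.0

norm_ce = np.linalg.norm(grad_cross_entropy(z, y))
norm_mse = np.linalg.norm(grad_mse(z, y))
print('cross-entropy gradient norm:', norm_ce)
print('mse gradient norm:', norm_mse)
```

In this example the mse gradient is several orders of magnitude smaller, so SGD with the same learning rate barely moves the weights.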

- Modification 2: building on modification 1, change the batch_size in fit from 100 to 10000 (this lets the GPU exploit its parallelism, so each epoch runs very fast)

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = SGD(lr = 0.1), metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 10000, epochs = 20) #changed here

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

print('\nTest Acc:', result[1])

The results are as follows:

Performance is very poor.
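A likely contributor is the number of parameter updates: each batch produces one update, so a larger batch means fewer updates over the same 20 epochs. The arithmetic can be sketched as follows (the helper name `num_updates` is hypothetical):

```python
import math

def num_updates(num_samples, batch_size, epochs):
    # one parameter update per batch; the last batch of an epoch may be partial
    return math.ceil(num_samples / batch_size) * epochs

updates_small_batch = num_updates(10000, 100, 20)    # batch_size = 100
updates_full_batch = num_updates(10000, 10000, 20)   # batch_size = 10000
updates_single = num_updates(10000, 1, 20)           # batch_size = 1

print(updates_small_batch, updates_full_batch, updates_single)
```

With 10000 training images, batch_size = 100 gives 2000 updates in 20 epochs, while batch_size = 10000 gives only 20.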

- Modification 3: keeping cross-entropy as the loss function, change the batch_size in fit from 10000 to 1 (now the GPU cannot exploit its parallelism, so training runs very slowly)

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = SGD(lr = 0.1), metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 1, epochs = 20) #changed here

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

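print('\nTest Acc:', result[1])

I did not wait for this run to finish...

- Modification 4: building on modification 1, make the network deep by adding 10 more layers

#Modification 4: make the network deep by adding 10 more layers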

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 689, activation = 'sigmoid'))

#changed here

for i in range(10):
    model.add(Dense(units = 689, activation = 'sigmoid'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = SGD(lr = 0.1), metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 100, epochs = 20)

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

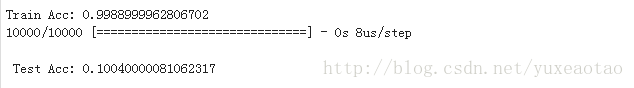

print('\n Test Acc:', result[1])运行结果如下:

还是training就很差。
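A standard explanation for a deep sigmoid network failing to train is the vanishing gradient: the derivative of sigmoid is at most 0.25, so the activation-derivative factor in backpropagation shrinks with every layer. The sketch below computes only that best-case factor for the 13 hidden layers used here. It deliberately ignores the weight matrices, so it is an illustration rather than the exact gradient.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    # derivative of sigmoid; its maximum value, 0.25, is reached at x = 0
    s = sigmoid(x)
    return s * (1.0 - s)

depth = 13  # 3 original hidden layers + 10 added layers

# best case for sigmoid: every unit sits at x = 0, where the derivative is largest
sigmoid_factor = sigmoid_grad(0.0) ** depth

# for comparison, an active relu unit has derivative 1 at every layer
relu_factor = 1.0 ** depth

print('sigmoid factor after', depth, 'layers:', sigmoid_factor)
print('relu factor after', depth, 'layers:', relu_factor)
```

Even in the best case, the sigmoid factor reaching the first layer is on the order of 1e-8, which is why the early layers barely update.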

- Modification 5: building on modification 4, change every sigmoid to relu

#Modification 5: building on modification 4, change every sigmoid to relu

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

for i in range(10):
    model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = SGD(lr = 0.1), metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 100, epochs = 20)

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

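print('\n Test Acc:', result[1])

The results are as follows:

Training accuracy is now very good, but test accuracy is still very poor.

- Modification 6: building on modification 5. In load_data, the two lines that divide by 255 perform normalization. Comment those two lines out:

# Modification 6: in load_data, the two lines that divide by 255 perform normalization; with them commented out, training fails again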

def load_data():
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    number = 10000
    x_train = x_train[0 : number]
    y_train = y_train[0 : number]
    x_train = x_train.reshape(number, 28*28)
    x_test = x_test.reshape(x_test.shape[0], 28*28)
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')  # bug fix: was x_train.astype('float32')
    #convert class vectors to binary class matrices
    y_train = np_utils.to_categorical(y_train, 10)
    y_test = np_utils.to_categorical(y_test, 10)
    #x_test = np.random.normal(x_test)
    # x_train = x_train/255 #changed here: normalization commented out
    # x_test = x_test/255
    return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

for i in range(10):
    model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = SGD(lr = 0.1), metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 100, epochs = 20)

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

print('\n Test Acc:', result[1])

The results are as follows:

Without normalization, training fails again.
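To see what removing the division by 255 changes, the sketch below compares the first-layer pre-activations for raw and normalized inputs. The random stand-in image and the weight scale of 0.05 are assumptions chosen for illustration; they are not Keras defaults or course values.

```python
import numpy as np

rng = np.random.default_rng(0)

# stand-in for one flattened MNIST image with raw pixel values in [0, 255]
x_raw = rng.integers(0, 256, size=(1, 28 * 28)).astype('float32')
x_norm = x_raw / 255

# hypothetical first-layer weights (784 inputs, 689 units)
w = rng.normal(0.0, 0.05, size=(28 * 28, 689)).astype('float32')

z_raw = x_raw @ w     # pre-activations without normalization
z_norm = x_norm @ w   # pre-activations with normalization

ratio = np.abs(z_raw).mean() / np.abs(z_norm).mean()
print('mean |z| raw:', np.abs(z_raw).mean())
print('mean |z| normalized:', np.abs(z_norm).mean())
print('ratio:', ratio)
```

The raw inputs make the pre-activations, and therefore the gradients with respect to the first-layer weights, roughly 255 times larger, which can make SGD with lr = 0.1 unstable.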

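- Modification 7: undo modification 6, comment out the 10 added layers, and run again

# Modification 7: undo modification 6, comment out the 10 added layers, and run again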

def load_data():
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    number = 10000
    x_train = x_train[0 : number]
    y_train = y_train[0 : number]
    x_train = x_train.reshape(number, 28*28)
    x_test = x_test.reshape(x_test.shape[0], 28*28)
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')  # bug fix: was x_train.astype('float32')
    #convert class vectors to binary class matrices
    y_train = np_utils.to_categorical(y_train, 10)
    y_test = np_utils.to_categorical(y_test, 10)
    #x_test = np.random.normal(x_test)
    # normalization restored
    x_train = x_train/255
    x_test = x_test/255
    return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

# for i in range(10):

# model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = SGD(lr = 0.1), metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 100, epochs = 20)

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

print('\n Test Acc:', result[1])

The results are as follows:

Training accuracy is very good again.

- Modification 8: switch the gradient descent strategy from SGD to adam

# Modification 8: switch the gradient descent strategy from SGD to adam

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

# for i in range(10):

# model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = 'adam', metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 100, epochs = 20)

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

print('\n Test Acc:', result[1])

With adam, the final accuracy converges to about the same level, but accuracy climbs faster during training.
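One reason adam often climbs faster is that it rescales each step by a running estimate of the gradient magnitude, so small gradients still produce a useful step. The sketch below compares the very first update of plain SGD and adam for a single parameter. The hyperparameter values are assumed to match common Keras defaults, and the function names are hypothetical.

```python
import math

def sgd_step(grad, lr=0.1):
    # plain SGD: the step is proportional to the gradient
    return -lr * grad

def adam_first_step(grad, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    # first adam update (t = 1), starting from m = v = 0
    m = (1 - beta1) * grad
    v = (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1)   # bias-corrected first moment
    v_hat = v / (1 - beta2)   # bias-corrected second moment
    return -lr * m_hat / (math.sqrt(v_hat) + eps)

for g in (1e-4, 10.0):
    print('grad', g, 'sgd step', sgd_step(g), 'adam step', adam_first_step(g))
```

The SGD step scales with the gradient, so it spans five orders of magnitude here, while the adam step stays close to the learning rate in both cases.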

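- Modification 9: deliberately add noise to every image in the testing set.

#Modification 9: deliberately add noise to every image in the testing set. The results are now even worse...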

def load_data():
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    number = 10000
    x_train = x_train[0 : number]
    y_train = y_train[0 : number]
    x_train = x_train.reshape(number, 28*28)
    x_test = x_test.reshape(x_test.shape[0], 28*28)
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')  # bug fix: was x_train.astype('float32')
    #convert class vectors to binary class matrices
    y_train = np_utils.to_categorical(y_train, 10)
    y_test = np_utils.to_categorical(y_test, 10)
    x_train = x_train/255
    x_test = x_test/255
    # changed here: add Gaussian noise (mean = pixel value, std = 1) to the test images
    x_test = np.random.normal(x_test)
    return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_data()

model = Sequential()

model.add(Dense(input_dim = 28*28, units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 689, activation = 'relu'))

# for i in range(10):

# model.add(Dense(units = 689, activation = 'relu'))

model.add(Dense(units = 10, activation = 'softmax'))

model.compile(loss = 'categorical_crossentropy', optimizer = 'adam', metrics = ['accuracy'])

model.fit(x_train, y_train, batch_size = 100, epochs = 20)

result = model.evaluate(x_train, y_train, batch_size = 10000)

print('\nTrain Acc:', result[1])

result = model.evaluate(x_test, y_test, batch_size = 10000)

print('\n Test Acc:', result[1])

The results are as follows:

Now the results are even worse...
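The size of this noise helps explain the drop. `np.random.normal(x_test)` treats each pixel as the mean of a Gaussian with the default standard deviation of 1.0, which is as large as the whole normalized pixel range [0, 1]. A small sketch on stand-in data (random values, not real MNIST images):

```python
import numpy as np

np.random.seed(1337)

# stand-in for normalized test images: values in [0, 1)
x = np.random.rand(1000, 28 * 28).astype('float32')

# same call as in load_data: loc = x, scale defaults to 1.0
noisy = np.random.normal(x)
noise = noisy - x

print('pixel range:', x.min(), x.max())
print('noise std:', noise.std())
print('fraction of noisy pixels outside [0, 1]:', np.mean((noisy < 0) | (noisy > 1)))
```

Because the noise standard deviation is about the same as the full pixel range, most of the original signal is buried, so a model trained on clean images is expected to do much worse.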