Data Preparation

Load the MNIST dataset directly from Keras. mnist.load_data() returns both the training set and the test set.

from keras.datasets import mnist

(X_train,y_train),(X_test,y_test) = mnist.load_data()

Dataset Overview

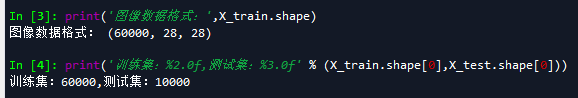

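print('Image data shape:', X_train.shape)

print('Training set: %d, test set: %d' % (X_train.shape[0], X_test.shape[0]))

The training set contains 60,000 samples and the test set contains 10,000. Each sample is a 28×28 array, that is, an image made up of 28×28 = 784 pixels (grayscale values).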

import matplotlib.pyplot as plt

plt.imshow(X_train[6])

plt.imshow(X_train[6],cmap = 'Greys')

# Plot the first 20 samples

for i in range(20):
    plt.subplot(4, 5, i + 1)
    plt.imshow(X_train[i])

# Remove the x- and y-axis tick labels

for i in range(20):
    plt.subplot(4, 5, i + 1)
    plt.imshow(X_train[i])
    plt.xticks([])
    plt.yticks([])

# Change the colormap

for i in range(20):
    plt.subplot(4, 5, i + 1)
    plt.imshow(X_train[i], cmap='Reds')  # 'Blues' also works
    plt.xticks([])
    plt.yticks([])

# Plot the first 15 samples whose label is 5

fig = plt.figure(figsize=(8, 5))

for i in range(15):
    ax = fig.add_subplot(3, 5, i + 1, xticks=[], yticks=[])
    ax.imshow(X_train[y_train == 5][i], cmap='Greys')

This plots the first 15 samples whose dependent variable (label) equals 5.
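The expression X_train[y_train == 5] relies on NumPy boolean-mask indexing. The sketch below illustrates the idea on toy data; the arrays `images` and `labels` are hypothetical stand-ins for X_train and y_train, not part of the original code.

```python
import numpy as np

# Toy stand-ins for X_train / y_train: six 2x2 "images" and their labels.
images = np.arange(6 * 2 * 2).reshape(6, 2, 2)
labels = np.array([5, 0, 4, 5, 9, 5])

mask = labels == 5     # boolean array, True where the label is 5
fives = images[mask]   # keeps only the images at positions where mask is True

print(mask.tolist())   # [True, False, False, True, False, True]
print(fives.shape)     # (3, 2, 2)
```

Indexing the filtered array with [i] then gives the i-th image among those labelled 5, which is what the plotting loop does.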

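Data Reshaping

The input has shape (num, 28, 28). The last two dimensions need to be flattened so that each sample becomes a 784-dimensional vector.

The dependent variable is then converted into a set of dummy variables (one-hot encoding).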

X_train = X_train.reshape(X_train.shape[0],
                          X_train.shape[1] * X_train.shape[2])

X_test = X_test.reshape(X_test.shape[0],
                        X_test.shape[1] * X_test.shape[2])

X_train[0].shape
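To make the flattening step concrete, here is a small sketch on a dummy array. The name `batch` and its contents are assumptions for illustration; the point is only that reshape lays pixels out row by row, so pixel (r, c) ends up at index r * 28 + c.

```python
import numpy as np

# Dummy batch of 3 blank 28x28 images with a single bright pixel.
batch = np.zeros((3, 28, 28), dtype=np.uint8)
batch[0, 1, 2] = 255   # image 0, row 1, column 2

# Same reshape pattern as used for X_train and X_test.
flat = batch.reshape(batch.shape[0], batch.shape[1] * batch.shape[2])

print(flat.shape)             # (3, 784)
print(int(flat[0, 1 * 28 + 2]))   # 255
```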

# Convert the dependent variable into dummy variables (one-hot encoding)

from keras.utils import to_categorical

y_train = to_categorical(y_train)

y_test = to_categorical(y_test)
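As a rough illustration of what one-hot encoding produces, the sketch below builds the same kind of array by hand with NumPy. The helper `one_hot` is hypothetical and is not the Keras implementation; it only mirrors the idea that label k becomes a vector with a 1 at position k.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return a (len(labels), num_classes) array with a single 1 per row."""
    labels = np.asarray(labels)
    # Row k of the identity matrix is the one-hot vector for class k.
    return np.eye(num_classes, dtype="float32")[labels]

encoded = one_hot([5, 0, 4], 10)

print(encoded.shape)        # (3, 10)
print(encoded[0].tolist())  # [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
```

Taking the argmax of each row recovers the original integer label.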

Building the Model

Step 1: Choose the model

# Define the model

from keras.models import Sequential

from keras.layers import Dense, Dropout, Activation  # keras.layers.core is not available in newer Keras releases

from keras.optimizers import SGD

import numpy

# Step 1: choose the model

model = Sequential()

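Step 2: Build the network layers

model.add(Dense(500, input_shape=(784,)))  # first hidden layer: 500 units, fed by the 784-dimensional input

model.add(Activation('tanh'))  # tanh activation

model.add(Dropout(0.5))  # dropout: randomly drop 50% of the units during training

model.add(Dense(300))  # second hidden layer: 300 units

model.add(Activation('tanh'))  # tanh activation

model.add(Dropout(0.5))  # dropout: randomly drop 50% of the units during training

model.add(Dense(10))  # output layer: 10 values, matching the 10-dimensional dependent variable

model.add(Activation('softmax'))  # the final layer uses softmax

# Number of connection weights (biases included): (784+1)*500 + (500+1)*300 + (300+1)*10 = 545,810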

model.summary()
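The parameter total reported by model.summary() can be cross-checked by hand. The sketch below assumes only fully connected layers with one bias per output unit, as in the network above; the helper name `dense_params` is hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer: (n_in + 1) * n_out."""
    return (n_in + 1) * n_out

layer_sizes = [784, 500, 300, 10]

# Pair each layer size with the next one: (784, 500), (500, 300), (300, 10).
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # [392500, 150300, 3010]
print(total)      # 545810
```

Activation and Dropout layers add no trainable parameters, so they do not appear in the count.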

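Step 3: Compile

# Optimizer: set the learning rate and other parameters
# (decay read "1e-e" in the original, which is not valid Python; 1e-6 is assumed here.
#  Newer Keras releases name the lr argument learning_rate.)

sgd = SGD(lr=0.01, decay=1e-6, momentum=0.9, nesterov=True)

model.compile(loss='categorical_crossentropy',
              optimizer=sgd,
              metrics=['accuracy'])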
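To give a feel for what the lr, decay, momentum, and nesterov settings do, here is a minimal pure-Python sketch of one SGD step. It assumes the update rule used by the older Keras SGD optimizer (time-based learning-rate decay plus Nesterov momentum); the function `sgd_step` and the toy objective f(x) = x² are illustrative assumptions, not the library's code.

```python
def sgd_step(param, grad, velocity, iteration,
             lr=0.01, decay=1e-6, momentum=0.9, nesterov=True):
    """One SGD update with time-based decay and optional Nesterov momentum."""
    lr_t = lr / (1.0 + decay * iteration)          # learning rate shrinks slowly over time
    velocity = momentum * velocity - lr_t * grad   # accumulate a running direction
    if nesterov:
        # Nesterov: look ahead along the new velocity before applying the gradient step.
        param = param + momentum * velocity - lr_t * grad
    else:
        param = param + velocity
    return param, velocity

# Minimise f(x) = x**2 (gradient 2x) starting from x = 5.
x, v = 5.0, 0.0
for t in range(500):
    x, v = sgd_step(x, 2.0 * x, v, t)

print(abs(x) < 1e-6)  # True
```

With decay as small as 1e-6 the learning rate barely changes over a few hundred steps; the momentum term does most of the work of smoothing the trajectory.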

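# Model training: save the best model seen so far
# (newer Keras versions report this metric as 'val_accuracy' rather than 'val_acc')

from keras.callbacks import ModelCheckpoint

checkpoint = ModelCheckpoint(r'F:\learning_kecheng\deenlearning\NEW\all_zjwj\mnist_BPbest.hdf5',
                             monitor='val_acc',
                             save_best_only=True,
                             verbose=1)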
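The effect of save_best_only=True can be sketched without Keras: a checkpoint is written only when the monitored validation accuracy strictly improves on the best value seen so far. The function `best_epochs` and the accuracy values below are made up for illustration.

```python
def best_epochs(val_scores):
    """Return the 0-based epochs at which a save-best-only checkpoint would be written."""
    best = float("-inf")
    saved = []
    for epoch, score in enumerate(val_scores):
        if score > best:      # strict improvement only; ties do not trigger a save
            best = score
            saved.append(epoch)
    return saved

history = [0.91, 0.94, 0.93, 0.96, 0.96]   # made-up validation accuracies
print(best_epochs(history))  # [0, 1, 3]
```

The file on disk therefore always holds the weights from the last epoch in this list, not necessarily the final epoch of training.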

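Step 4: Train

model.fit(X_train, y_train, batch_size=200, epochs=100,
          shuffle=True, verbose=1,
          validation_data=(X_test, y_test),
          callbacks=[checkpoint])

# Note: this saves to the same path as the checkpoint, so the final-epoch model
# overwrites the best model saved by ModelCheckpoint.

model.save(r'F:\learning_kecheng\deenlearning\NEW\all_zjwj\mnist_BPbest.hdf5')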

from keras.models import load_model

model = load_model(r'F:\learning_kecheng\deenlearning\NEW\all_zjwj\mnist_BPbest.hdf5')

Step 5: Evaluate the model

score = model.evaluate(X_test,y_test, batch_size = 200,verbose = 1)

print('Test set loss: %f, prediction accuracy: %2.2f%%' % (score[0], score[1] * 100))
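The two numbers in score are the categorical cross-entropy loss and the accuracy. As a hedged illustration of how such values are computed from one-hot targets and predicted probabilities, here is a small pure-Python sketch; the helper names and the three-class toy data are assumptions, and Keras's own implementation differs in detail.

```python
import math

def argmax(row):
    """Index of the largest value in a list."""
    return max(range(len(row)), key=lambda i: row[i])

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(t * log(p)); eps guards against log(0)."""
    losses = [-sum(t * math.log(max(p, eps)) for t, p in zip(t_row, p_row))
              for t_row, p_row in zip(y_true, y_pred)]
    return sum(losses) / len(losses)

def accuracy(y_true, y_pred):
    """Fraction of samples whose most probable class matches the one-hot target."""
    hits = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)

# Toy example with 3 classes: the third sample is misclassified.
y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
y_pred = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.5, 0.3, 0.2]]

loss = categorical_crossentropy(y_true, y_pred)
acc = accuracy(y_true, y_pred)
print('loss: %f, accuracy: %2.2f%%' % (loss, acc * 100))
```

A confident wrong prediction raises the loss sharply even though it counts as just one error in the accuracy, which is why the two numbers are reported together.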