统计学习方法第二章:感知机(perceptron)算法及python实现

统计学习方法第三章:k近邻法(k-NN),kd树及python实现

统计学习方法第四章:朴素贝叶斯法(naive Bayes),贝叶斯估计及python实现

统计学习方法第五章:决策树(decision tree),CART算法,剪枝及python实现

统计学习方法第五章:决策树(decision tree),ID3算法,C4.5算法及python实现

完整代码:

https://github.com/xjwhhh/LearningML/tree/master/StatisticalLearningMethod

欢迎follow和star

决策树(decision tree)是一种基本的分类与回归方法。决策树模型呈树状结构,在分类问题中,表示基于特征对实例进行分类的过程。

它可以认为是if-then规则的集合,也可以认为是定义在特征空间与类空间上的条件概率分布。

其主要优点是模型具有可读性,分类速度快。

学习时,利用训练数据,根据损失函数最小化的原则建立决策树模型。预测时,对新的数据,利用决策树模型进行分类。

决策树学习通常包括3个步骤:特征选择,决策树的生成和决策树的修剪

主要的决策树算法包括ID3,C4.5,CART。我主要实现的是CART算法,其余两个算法也有所实现,但正确率不高,先不予分享,以免误人子弟。

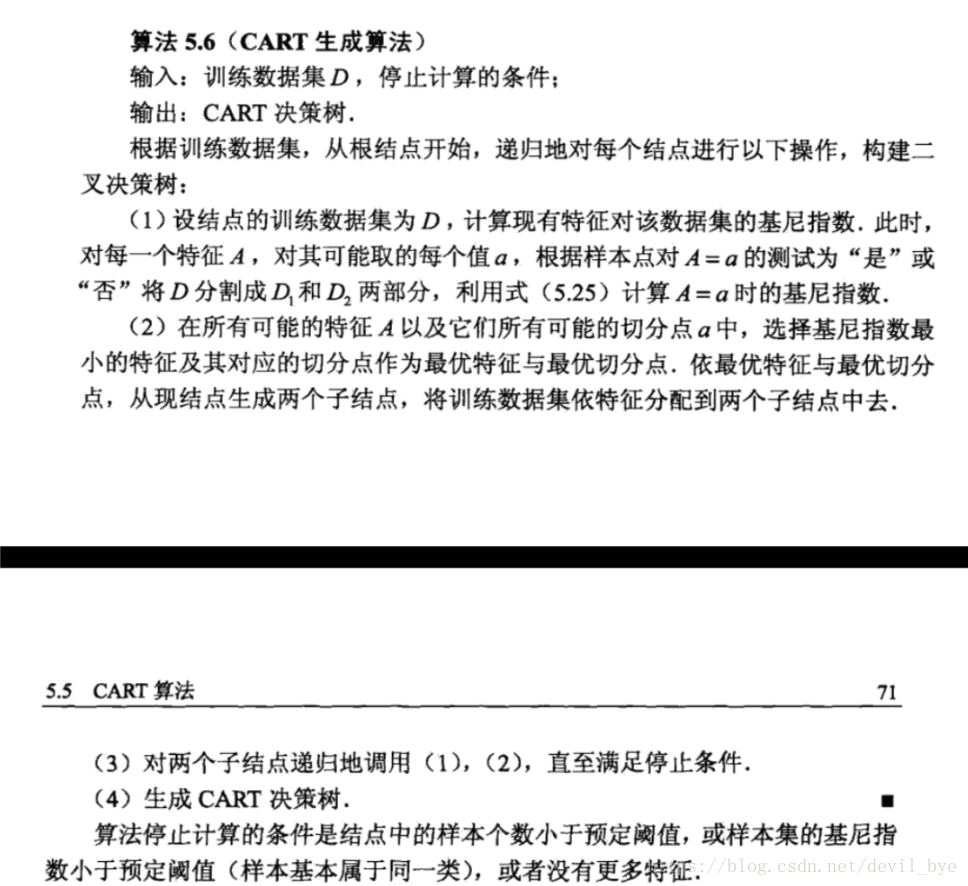

以下是CART生成算法:

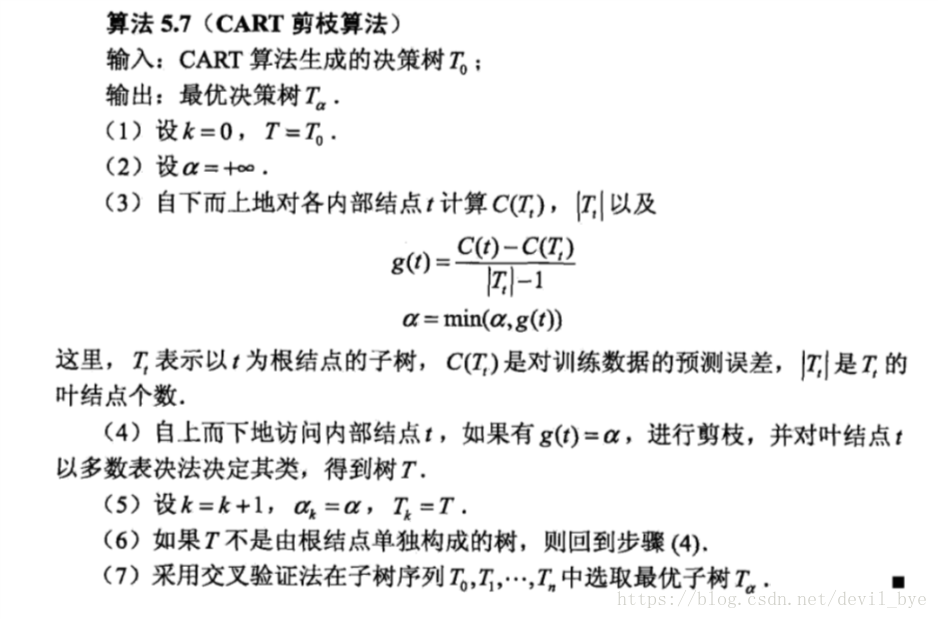

以下是CART剪枝算法:

每次剪枝剪的是某个内部结点的子结点,也就是将某个内部结点的所有子结点回退到这个内部结点里,并将这个内部结点作为叶子结点。因此在计算损失函数时,这个内部结点之外的值都没变,所以我们只需计算内部结点剪枝前和剪枝后的损失函数

问题1:为什么剪去g(t)最小的T?

个人认为是为了获得更多的区间和子树,便于比较

问题2:为什么不直接减去C(t)+

最小的T?

个人认为是整体损失函数=内部结点损失函数+其他结点损失函数和。如果直接剪去这个T,使得内部结点损失函数最小,但其他结点损失函数和不一定,所以整体不一定。换句话说就是局部最优与整体最优的关系。而采用损失函数减小率来进行调整,剪枝幅度更小,能产生更多树,可能其中某个树就是整体损失函数最小

我实现的CART算法并没有剪枝,剪枝的代码会在之后做分享:

import cv2

import time

import logging

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

total_class = 10

# 这里选用了一个比较小的数据集,因为过大的数据集会导致栈溢出

def log(func):

def wrapper(*args, **kwargs):

start_time = time.time()

logging.debug('start %s()' % func.__name__)

ret = func(*args, **kwargs)

end_time = time.time()

logging.debug('end %s(), cost %s seconds' % (func.__name__, end_time - start_time))

return ret

return wrapper

# 二值化

def binaryzation(img):

cv_img = img.astype(np.uint8)

cv2.threshold(cv_img, 50, 1, cv2.THRESH_BINARY_INV, cv_img)

return cv_img

@log

def binaryzation_features(trainset):

features = []

for img in trainset:

img = np.reshape(img, (28, 28))

cv_img = img.astype(np.uint8)

img_b = binaryzation(cv_img)

features.append(img_b)

features = np.array(features)

features = np.reshape(features, (-1, 784))

return features

class TreeNode(object):

"""决策树节点"""

def __init__(self, **kwargs):

'''

attr_index: 属性编号

attr: 属性值

label: 类别(y)

left_chuld: 左子结点

right_child: 右子节点

'''

self.attr_index = kwargs.get('attr_index')

self.attr = kwargs.get('attr')

self.label = kwargs.get('label')

self.left_child = kwargs.get('left_child')

self.right_child = kwargs.get('right_child')

# 计算数据集的基尼指数

def gini_train_set(train_label):

train_label_value = set(train_label)

gini = 0.0

for i in train_label_value:

train_label_temp = train_label[train_label == i]

pk = float(len(train_label_temp)) / len(train_label)

gini += pk * (1 - pk)

return gini

# 计算一个特征不同切分点的基尼指数,并返回最小的

def gini_feature(train_feature, train_label):

train_feature_value = set(train_feature)

min_gini = float('inf')

return_feature_value = 0

for i in train_feature_value:

train_feature_class1 = train_feature[train_feature == i]

label_class1 = train_label[train_feature == i]

# train_feature_class2 = train_feature[train_feature != i]

label_class2 = train_label[train_feature != i]

D1 = float(len(train_feature_class1)) / len(train_feature)

D2 = 1 - D1

if (len(label_class1) == 0):

p1 = 0

else:

p1 = float(len(label_class1[label_class1 == label_class1[0]])) / len(label_class1)

if (len(label_class2) == 0):

p2 = 0

else:

p2 = float(len(label_class2[label_class2 == label_class2[0]])) / len(label_class2)

gini = D1 * 2 * p1 * (1 - p1) + D2 * 2 * p2 * (1 - p2)

if min_gini > gini:

min_gini = gini

return_feature_value = i

return min_gini, return_feature_value

def get_best_index(train_set, train_label, feature_indexes):

'''

:param train_set: 给定数据集

:param train_label: 数据集对应的标记

:return: 最佳切分点,最佳切分变量

求给定切分点集合中的最佳切分点和其对应的最佳切分变量

'''

min_gini = float('inf')

feature_index = 0

return_feature_value = 0

for i in range(len(train_set[0])):

if i in feature_indexes:

train_feature = train_set[:, i]

gini, feature_value = gini_feature(train_feature, train_label)

if gini < min_gini:

min_gini = gini

feature_index = i

return_feature_value = feature_value

return feature_index, return_feature_value

# 根据最有特征和最优切分点划分数据集

def divide_train_set(train_set, train_label, feature_index, feature_value):

left = []

right = []

left_label = []

right_label = []

for i in range(len(train_set)):

line = train_set[i]

if line[feature_index] == feature_value:

left.append(line)

left_label.append(train_label[i])

else:

right.append(line)

right_label.append(train_label[i])

return np.array(left), np.array(right), np.array(left_label), np.array(right_label)

@log

def build_tree(train_set, train_label, feature_indexes):

# 查看是否满足停止条件

train_label_value = set(train_label)

if len(train_label_value) == 1:

print("a")

return TreeNode(label=train_label[0])

if feature_indexes is None:

print("b")

return TreeNode(label=train_label[0])

if len(feature_indexes) == 0:

print("c")

return TreeNode(label=train_label[0])

feature_index, feature_value = get_best_index(train_set, train_label, feature_indexes)

# print("feature_index",feature_index)

left, right, left_label, right_label = divide_train_set(train_set, train_label, feature_index, feature_value)

feature_indexes.remove(feature_index)

# print("feature_indexes",feature_indexes)

left_branch = build_tree(left, left_label, feature_indexes)

right_branch = build_tree(right, right_label, feature_indexes)

return TreeNode(left_child=left_branch,

right_child=right_branch,

attr_index=feature_index,

attr=feature_value)

# @log

# def prune(tree):

def predict_one(node, test):

while node.label is None:

if test[node.attr_index] == node.attr:

node = node.left_child

else:

node = node.right_child

return node.label

@log

def predict(tree, test_set):

result = []

for test in test_set:

label = predict_one(tree, test)

result.append(label)

return result

if __name__ == '__main__':

logger = logging.getLogger()

logger.setLevel(logging.DEBUG)

raw_data = pd.read_csv('../data/train_binary1.csv', header=0)

data = raw_data.values

imgs = data[0:, 1:]

labels = data[:, 0]

print(imgs.shape)

# 图片二值化

# features = binaryzation_features(imgs)

# 选取 2/3 数据作为训练集, 1/3 数据作为测试集

train_features, test_features, train_labels, test_labels = train_test_split(imgs, labels, test_size=0.33,random_state=1)

print(type(train_features))

tree = build_tree(train_features, train_labels, [i for i in range(784)])

test_predict = predict(tree, test_features)

score = accuracy_score(test_labels, test_predict)

print("The accuracy score is ", score)

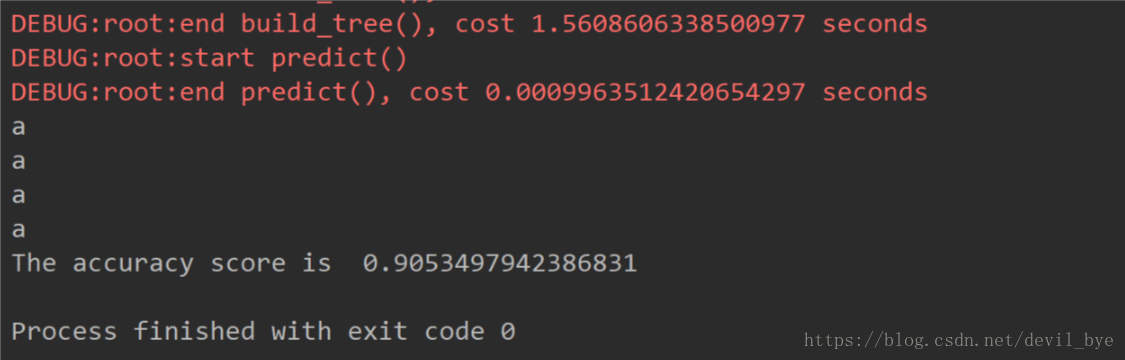

运行结果如下:

水平有限,如有错误,希望指出