Introduction to Artificial Neural Networks

from __future__ import division, print_function, unicode_literals

import os

import numpy as np
import tensorflow as tf

# Make the notebook's output reproducible across runs
def reset_graph(seed=42):
    tf.reset_default_graph()
    tf.set_random_seed(seed)
    np.random.seed(seed)

# Render figures inline and set default font sizes
%matplotlib inline
import matplotlib
import matplotlib.pyplot as plt
plt.rcParams['axes.labelsize'] = 14
plt.rcParams['xtick.labelsize'] = 12
plt.rcParams['ytick.labelsize'] = 12

# Where to save the figures
PROJECT_ROOT_DIR = "."
CHAPTER_ID = "ann"

def save_fig(fig_id, tight_layout=True):
    path = os.path.join(PROJECT_ROOT_DIR, "images", CHAPTER_ID, fig_id + ".png")
    os.makedirs(os.path.dirname(path), exist_ok=True)  # create images/ann/ if it is missing
    print("Saving figure", fig_id)
    if tight_layout:
        plt.tight_layout()
    plt.savefig(path, format='png', dpi=300)

Perceptron

import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import Perceptron

iris = load_iris()
X = iris.data[:, (2, 3)]  # petal length, petal width
y = (iris.target == 0).astype(int)  # 1 for Iris setosa, else 0 (np.int was removed in NumPy 1.24)

per_clf = Perceptron(random_state=42)
per_clf.fit(X, y)

y_pred = per_clf.predict([[2.0, 0.5]])
print(y_pred)


[1]

per_clf.coef_[0][1]

-1.3
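The `Perceptron` classifier follows the classic perceptron learning rule: after each prediction, the weights move by w ← w + η (y − ŷ) x, so they only change when the prediction is wrong. The following is a minimal NumPy sketch of that rule, assuming a step activation and a bias folded in as a constant extra input; `perceptron_fit`, `perceptron_predict` and the toy data are hypothetical illustrations, not Scikit-Learn internals.

```python
import numpy as np

def perceptron_fit(X, y, eta=1.0, n_epochs=100, seed=42):
    """Perceptron learning rule on a single threshold unit (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    X_b = np.c_[np.ones(len(X)), X]  # prepend a constant 1 so w[0] plays the role of the bias
    w = np.zeros(X_b.shape[1])
    for _ in range(n_epochs):
        for i in rng.permutation(len(X_b)):      # visit the instances in a random order
            y_hat = int(X_b[i] @ w >= 0)         # step (Heaviside) activation
            w += eta * (y[i] - y_hat) * X_b[i]   # no change when the prediction is right
    return w

def perceptron_predict(w, X):
    X_b = np.c_[np.ones(len(X)), X]
    return (X_b @ w >= 0).astype(int)

# Toy linearly separable data: label 1 when x1 + x2 is small
X_toy = np.array([[0.0, 0.0], [0.2, 0.3], [0.1, 0.5],
                  [1.0, 1.0], [0.9, 0.8], [1.2, 0.5]])
y_toy = np.array([1, 1, 1, 0, 0, 0])

w = perceptron_fit(X_toy, y_toy)
print(perceptron_predict(w, X_toy))  # → [1 1 1 0 0 0]
```

Because the toy classes are linearly separable, the rule stops updating after a finite number of mistakes; on non-separable data it would keep oscillating, which is one reason the perceptron is limited to linear decision boundaries.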

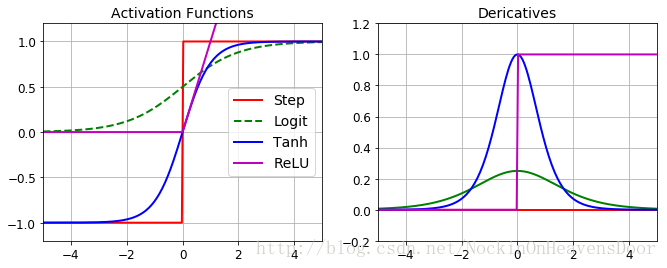

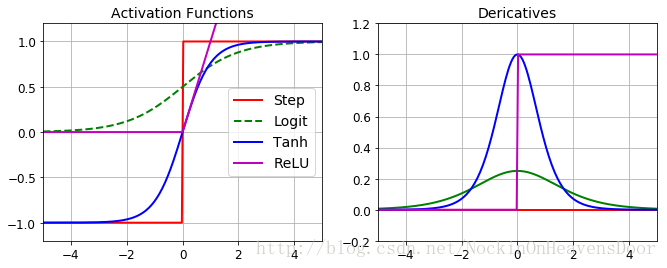

Activation functions

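# Logistic (sigmoid) function. Despite its name, logit() here computes the
# logistic function, i.e. the inverse of the mathematical logit.
def logit(z):
    return 1 / (1 + np.exp(-z))

def relu(z):
    return np.maximum(0, z)

# Numerical derivative via the central difference (f(z + eps) - f(z - eps)) / (2 * eps)
def derivative(f, z, eps=0.000001):
    return (f(z + eps) - f(z - eps)) / (2 * eps)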

z = np.linspace(-5, 5, 200)

plt.figure(figsize=(11, 4))

# Left panel: the activation functions
plt.subplot(121)
plt.plot(z, np.sign(z), 'r-', linewidth=2, label="Step")
plt.plot(z, logit(z), 'g--', linewidth=2, label="Logit")
plt.plot(z, np.tanh(z), 'b-', linewidth=2, label="Tanh")
plt.plot(z, relu(z), 'm-', linewidth=2, label="ReLU")
plt.grid(True)
plt.legend(loc="center right", fontsize=14)
plt.title("Activation Functions", fontsize=14)
plt.axis([-5, 5, -1.2, 1.2])

# Right panel: their numerical derivatives
plt.subplot(122)
plt.plot(z, derivative(np.sign, z), 'r-', linewidth=2, label="Step")
plt.plot(z, derivative(logit, z), 'g-', linewidth=2, label="Logit")
plt.plot(z, derivative(np.tanh, z), 'b-', linewidth=2, label="Tanh")
plt.plot(z, derivative(relu, z), 'm-', linewidth=2, label="ReLU")
plt.grid(True)
plt.title("Derivatives", fontsize=14)
plt.axis([-5, 5, -0.2, 1.2])

plt.show()
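The right-hand panel is drawn with the central-difference approximation in `derivative`. As a sanity check, the sketch below compares it with the known closed forms σ'(z) = σ(z)(1 − σ(z)), tanh'(z) = 1 − tanh²(z) and ReLU'(z) = 1 for z > 0, 0 for z < 0. It reuses the same grid of 200 points, which happens not to contain z = 0, where ReLU has no derivative; the `err_*` names are just for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def relu(z):
    return np.maximum(0, z)

def derivative(f, z, eps=0.000001):
    # Central difference, as in the notebook
    return (f(z + eps) - f(z - eps)) / (2 * eps)

z = np.linspace(-5, 5, 200)  # step 10/199, so no grid point is exactly 0

# Closed-form derivatives
d_sigmoid = sigmoid(z) * (1 - sigmoid(z))
d_tanh = 1 - np.tanh(z) ** 2
d_relu = (z > 0).astype(float)

# Largest absolute gap between numerical and analytic derivatives
err_sigmoid = np.max(np.abs(derivative(sigmoid, z) - d_sigmoid))
err_tanh = np.max(np.abs(derivative(np.tanh, z) - d_tanh))
err_relu = np.max(np.abs(derivative(relu, z) - d_relu))
print(err_sigmoid, err_tanh, err_relu)
```

The gaps are tiny, which is why the numerical curves in the plot match the analytic shapes: the sigmoid's derivative peaks at 0.25, tanh's at 1, and ReLU's is a step.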

def heaviside(z):
    return (z >= 0).astype(z.dtype)

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

"""多层感知机"""

def mlp_xor(x1, x2, activation=heaviside):

return activation(-activation(x1 + x2 - 1.5) + activation(x1 + x2 - 0.5) - 0.5)

x1s = np.linspace(-0.2, 1.2, 100)

x2s = np.linspace(-0.2, 1.2, 100)

x1, x2 = np.meshgrid(x1s, x2s)

print(x1)

[[-0.2 -0.18585859 -0.17171717 ..., 1.17171717 1.18585859 1.2 ]

[-0.2 -0.18585859 -0.17171717 ..., 1.17171717 1.18585859 1.2 ]

[-0.2 -0.18585859 -0.17171717 ..., 1.17171717 1.18585859 1.2 ]

...,

[-0.2 -0.18585859 -0.17171717 ..., 1.17171717 1.18585859 1.2 ]

[-0.2 -0.18585859 -0.17171717 ..., 1.17171717 1.18585859 1.2 ]

[-0.2 -0.18585859 -0.17171717 ..., 1.17171717 1.18585859 1.2 ]]
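`mlp_xor` hard-codes the weights of a small two-layer network: the first hidden unit fires when x1 + x2 ≥ 1.5 (an AND gate on binary inputs), the second when x1 + x2 ≥ 0.5 (an OR gate), and the output fires when OR is on but AND is off, which is exactly XOR. Evaluating it on the four binary inputs makes this concrete; the snippet repeats the two definitions so it runs on its own.

```python
import numpy as np

def heaviside(z):
    return (z >= 0).astype(z.dtype)

def mlp_xor(x1, x2, activation=heaviside):
    # hidden unit 1: x1 + x2 - 1.5 >= 0  -> AND
    # hidden unit 2: x1 + x2 - 0.5 >= 0  -> OR
    # output: OR and not AND             -> XOR
    return activation(-activation(x1 + x2 - 1.5) + activation(x1 + x2 - 0.5) - 0.5)

x1 = np.array([0.0, 0.0, 1.0, 1.0])
x2 = np.array([0.0, 1.0, 0.0, 1.0])
print(mlp_xor(x1, x2))  # → [0. 1. 1. 0.]
```

A single perceptron cannot produce this truth table because XOR is not linearly separable; the hidden layer is what makes it possible.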

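Implementing an MLP with TF.Learn, TensorFlow's high-level API

- Problem setup: two hidden layers, the first with 300 neurons and the second with 100 neurons, plus an output layer with 10 neurons.

The data is MNIST.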
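With 28 × 28 = 784 input pixels, each fully connected layer has an n_in × n_out weight matrix plus n_out biases. A quick count of the trainable parameters for this 784-300-100-10 architecture, assuming dense layers with biases (as in the hand-built `neuron_layer` network later in this notebook); `count_dense_params` is a hypothetical helper:

```python
# Layer sizes: input, hidden1, hidden2, output
layer_sizes = [28 * 28, 300, 100, 10]

def count_dense_params(sizes):
    # each dense layer has n_in * n_out weights plus n_out biases
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

print(count_dense_params(layer_sizes))  # → 266610
```

Almost 90% of these parameters (235,500) sit in the first hidden layer, because it connects to all 784 pixels.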

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('tmp/data/')

Extracting tmp/data/train-images-idx3-ubyte.gz

Extracting tmp/data/train-labels-idx1-ubyte.gz

Extracting tmp/data/t10k-images-idx3-ubyte.gz

Extracting tmp/data/t10k-labels-idx1-ubyte.gz

X_train = mnist.train.images

print(X_train.shape)

(55000, 784)

X_test = mnist.test.images

y_train = mnist.train.labels.astype("int")

y_test = mnist.test.labels.astype("int")

# Fix the random seed; the config only takes effect if it is passed to the estimator
config = tf.contrib.learn.RunConfig(tf_random_seed=42)

feature_columns = tf.contrib.learn.infer_real_valued_columns_from_input(X_train)
dnn_clf = tf.contrib.learn.DNNClassifier(hidden_units=[300, 100], n_classes=10,
                                         feature_columns=feature_columns, config=config)
dnn_clf = tf.contrib.learn.SKCompat(dnn_clf)  # Scikit-Learn-style fit/predict wrapper

dnn_clf.fit(X_train, y_train, batch_size=50, steps=40000)


INFO:tensorflow:Create CheckpointSaverHook.

INFO:tensorflow:Saving checkpoints for 1 into C:\Users\XIAOWA~1\AppData\Local\Temp\tmpb16xgf1z\model.ckpt.

INFO:tensorflow:loss = 2.38915, step = 1

INFO:tensorflow:global_step/sec: 207.752

INFO:tensorflow:loss = 0.292699, step = 101 (0.486 sec)

INFO:tensorflow:global_step/sec: 212.615

INFO:tensorflow:loss = 0.000831332, step = 37001 (0.482 sec)

INFO:tensorflow:global_step/sec: 208.621

INFO:tensorflow:global_step/sec: 198.667

INFO:tensorflow:loss = 0.00083376, step = 39901 (0.557 sec)

INFO:tensorflow:Saving checkpoints for 40000 into C:\Users\XIAOWA~1\AppData\Local\Temp\tmpb16xgf1z\model.ckpt.

INFO:tensorflow:Loss for final step: 0.000314118.

SKCompat()
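`steps=40000` counts mini-batch training steps rather than passes over the data. Assuming each step processes one batch of `batch_size=50` instances, and with the 55,000 training images printed above, the run amounts to roughly 36 epochs:

```python
n_train = 55000      # X_train.shape[0], printed earlier
batch_size = 50
steps = 40000

instances_seen = steps * batch_size
epochs = instances_seen / n_train
print(instances_seen, round(epochs, 2))  # → 2000000 36.36
```

This is comparable to the 40 epochs used by the hand-built network below, which makes the two approaches roughly comparable in training budget.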

from sklearn.metrics import accuracy_score

y_pred = dnn_clf.predict(X_test)  # dict holding 'classes' and 'probabilities'
accuracy_score(y_test, y_pred['classes'])

INFO:tensorflow:Restoring parameters from C:\Users\XIAOWA~1\AppData\Local\Temp\tmpb16xgf1z\model.ckpt-40000

0.98270000000000002

from sklearn.metrics import log_loss

y_pred_proba = y_pred['probabilities']

log_loss(y_test, y_pred_proba)

0.068879521670024202
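The log loss is the average cross-entropy between the true labels and the predicted class probabilities: the mean over instances of −log p̂(true class). A minimal NumPy sketch with made-up probabilities; `log_loss_sketch` is a hypothetical helper, and clipping with `eps` is this sketch's own way of avoiding log(0):

```python
import numpy as np

def log_loss_sketch(y_true, proba, eps=1e-15):
    # proba[i, k] is the predicted probability that instance i belongs to class k
    proba = np.clip(proba, eps, 1 - eps)             # avoid log(0)
    p_true = proba[np.arange(len(y_true)), y_true]   # probability assigned to the true class
    return -np.mean(np.log(p_true))

y_true = np.array([0, 1, 2])
proba = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.2, 0.2, 0.6]])
print(log_loss_sketch(y_true, proba))  # -(ln 0.7 + ln 0.8 + ln 0.6) / 3 ≈ 0.3635
```

Unlike accuracy, this metric also rewards confidence: a correct prediction made with probability 0.6 costs more than one made with 0.99.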

Building a DNN (deep neural network) directly with plain TensorFlow

"""搭建阶段"""

reset_graph()

import tensorflow as tf

"""先声明输入输出还有隐层个数"""

n_inputs = 28 * 28

n_hidden1 = 300

n_hidden2 = 100

n_outputs = 10

"""X的shape是(None,n_inputs),None是不知道的每次训练实例个数"""

"""y是1D的tensor,但是我们不知道训练实例是多少,所以shape=(None)"""

X = tf.placeholder(tf.float32, shape=(None, n_inputs), name="X")

y = tf.placeholder(tf.int64, shape=(None), name="y")

"""X作为输入层,而且一个train batch里的实例同时被DNN处理"""

"""隐层间的不一样是他们所连接的神经元还有隐层各自包含的神经元数目"""

"""X是输入,n_neurons是神经元个数,name是该层的名字,还有用在该层的激活函数"""

def neuron_layer(X, n_neurons, name, activation=None):

with tf.name_scope(name):

n_inputs = int(X.get_shape()[1])

stddev = 2 / np.sqrt(n_inputs)

"""用截断正太分布初始化保证了不会出现过大的W值从而避免了训练时GD缓慢"""

init = tf.truncated_normal((n_inputs, n_neurons), stddev=stddev)

"""初始化该层的权重W"""

W = tf.Variable(init,name="weights")

b = tf.Variable(tf.zeros([n_neurons]), name="biases")

z = tf.matmul(X,W) + b

if activation=="relu":

return tf.nn.relu(z)

else:

return z
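For readers without TensorFlow 1.x at hand, here is a NumPy sketch of what `neuron_layer` computes: weights drawn from a normal distribution with stddev = 2/√n_inputs, truncated so that no value lies more than two standard deviations from the mean (values beyond that are re-drawn, which is how `tf.truncated_normal` is documented to behave), zero biases, then z = XW + b followed by an optional ReLU. `truncated_normal` and `neuron_layer_np` are hypothetical helpers, and the re-drawing loop is an assumption about how to reproduce the truncation, not TensorFlow's code.

```python
import numpy as np

def truncated_normal(shape, stddev, rng):
    # Draw from N(0, stddev^2) and re-draw any value farther than 2 * stddev from 0
    w = rng.normal(0.0, stddev, size=shape)
    mask = np.abs(w) > 2 * stddev
    while mask.any():
        w[mask] = rng.normal(0.0, stddev, size=mask.sum())
        mask = np.abs(w) > 2 * stddev
    return w

def neuron_layer_np(X, n_neurons, activation=None, rng=None):
    if rng is None:
        rng = np.random.default_rng(42)
    n_inputs = X.shape[1]
    stddev = 2 / np.sqrt(n_inputs)
    W = truncated_normal((n_inputs, n_neurons), stddev, rng)
    b = np.zeros(n_neurons)
    z = X @ W + b                 # one row of outputs per instance in the batch
    return np.maximum(0, z) if activation == "relu" else z

rng = np.random.default_rng(0)
X_batch = rng.random((5, 784))    # a fake batch of 5 flattened 28x28 images
h1 = neuron_layer_np(X_batch, 300, activation="relu", rng=rng)
print(h1.shape)  # → (5, 300)
```

Because every instance in the batch is a row of X, one matrix product computes the layer's output for the whole batch at once.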

"""创建一个Dnn"""

with tf.name_scope('dnn'):

hidden1 = neuron_layer(X, n_hidden1, "hidden1", activation="relu")

hidden2 = neuron_layer(hidden1, n_hidden2, "hidden2", activation="relu")

logits = neuron_layer(hidden2, n_outputs,"outputs")

"""平均交叉熵作为损失函数的计算值"""

with tf.name_scope("loss"):

xentropy = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=y,logits=logits)

loss = tf.reduce_mean(xentropy, name="loss")
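`sparse_softmax_cross_entropy_with_logits` takes raw logits and integer class labels (the "sparse" part: no one-hot encoding) and returns, for each instance, −log softmax(logits)[label]. A NumPy sketch of the same quantity, using the log-sum-exp trick for numerical stability; `sparse_softmax_xentropy` is a hypothetical helper for illustration, not TensorFlow's implementation.

```python
import numpy as np

def sparse_softmax_xentropy(logits, labels):
    # log softmax via log-sum-exp, shifted by the row max so exp() cannot overflow
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels]

logits = np.array([[2.0, 1.0, 0.1],
                   [0.0, 0.0, 0.0]])
labels = np.array([0, 2])
xentropy = sparse_softmax_xentropy(logits, labels)
loss = xentropy.mean()   # plays the role of tf.reduce_mean(xentropy)
print(xentropy, loss)
```

The second row has equal logits, so the softmax is uniform over 3 classes and its cross-entropy is ln 3 ≈ 1.0986; this is also roughly the loss an untrained 10-class network starts from (ln 10 ≈ 2.30).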

learning_rate = 0.01

with tf.name_scope("train"):
    optimizer = tf.train.GradientDescentOptimizer(learning_rate)
    training_op = optimizer.minimize(loss)

with tf.name_scope("eval"):
    correct = tf.nn.in_top_k(logits, y, 1)
    accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))
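With k=1, `tf.nn.in_top_k(logits, y, 1)` checks whether the true label has the highest logit, so the `accuracy` node is the fraction of instances where argmax(logits) equals the label; the restored model later reads its predictions off the same way with `np.argmax(Z, axis=1)`. A NumPy sketch, assuming there are no ties among the top logits (tie handling may differ from TensorFlow's); `top1_accuracy` is a hypothetical helper.

```python
import numpy as np

def top1_accuracy(logits, labels):
    # in_top_k with k=1: is the true label the arg-max of the logits?
    correct = np.argmax(logits, axis=1) == labels
    return correct.astype(np.float32).mean()  # mirrors tf.reduce_mean(tf.cast(correct, tf.float32))

logits = np.array([[0.1, 2.0, -1.0],
                   [1.5, 0.2, 0.3],
                   [0.0, 0.1, 0.9],
                   [0.4, 0.3, 0.2]])
labels = np.array([1, 0, 2, 2])
print(top1_accuracy(logits, labels))  # → 0.75
```

No softmax is needed here: softmax is monotonic, so the class with the largest logit also has the largest probability.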

init = tf.global_variables_initializer()
saver = tf.train.Saver()

# Execution phase
n_epochs = 40
batch_size = 50

with tf.Session() as sess:
    init.run()
    for epoch in range(n_epochs):
        for iteration in range(mnist.train.num_examples // batch_size):
            X_batch, y_batch = mnist.train.next_batch(batch_size)
            sess.run(training_op, feed_dict={X: X_batch, y: y_batch})
        # Accuracy on the last training batch and on the full validation set
        acc_train = accuracy.eval(feed_dict={X: X_batch, y: y_batch})
        acc_val = accuracy.eval(feed_dict={X: mnist.validation.images,
                                           y: mnist.validation.labels})
        print(epoch, " Train accuracy:", acc_train, " Val accuracy:", acc_val)

    save_path = saver.save(sess, "./my_model_final.ckpt")

0 Train accuracy: 0.92 Val accuracy: 0.9186

1 Train accuracy: 0.92 Val accuracy: 0.9354

2 Train accuracy: 0.96 Val accuracy: 0.9454

3 Train accuracy: 0.92 Val accuracy: 0.9514

4 Train accuracy: 0.88 Val accuracy: 0.9574

5 Train accuracy: 0.96 Val accuracy: 0.9592

6 Train accuracy: 0.94 Val accuracy: 0.9618

7 Train accuracy: 0.98 Val accuracy: 0.9634

8 Train accuracy: 0.96 Val accuracy: 0.9664

9 Train accuracy: 0.98 Val accuracy: 0.9652

10 Train accuracy: 1.0 Val accuracy: 0.9668

11 Train accuracy: 1.0 Val accuracy: 0.9696

12 Train accuracy: 0.94 Val accuracy: 0.9686

13 Train accuracy: 0.98 Val accuracy: 0.9708

14 Train accuracy: 1.0 Val accuracy: 0.971

15 Train accuracy: 1.0 Val accuracy: 0.973

16 Train accuracy: 0.98 Val accuracy: 0.973

17 Train accuracy: 1.0 Val accuracy: 0.9748

18 Train accuracy: 1.0 Val accuracy: 0.9744

19 Train accuracy: 0.96 Val accuracy: 0.9724

20 Train accuracy: 0.96 Val accuracy: 0.9758

21 Train accuracy: 1.0 Val accuracy: 0.9764

22 Train accuracy: 0.98 Val accuracy: 0.9782

23 Train accuracy: 1.0 Val accuracy: 0.9776

24 Train accuracy: 1.0 Val accuracy: 0.9768

25 Train accuracy: 1.0 Val accuracy: 0.9774

26 Train accuracy: 1.0 Val accuracy: 0.9766

27 Train accuracy: 1.0 Val accuracy: 0.9774

28 Train accuracy: 0.98 Val accuracy: 0.9794

29 Train accuracy: 0.94 Val accuracy: 0.9776

30 Train accuracy: 1.0 Val accuracy: 0.9788

31 Train accuracy: 0.98 Val accuracy: 0.9784

32 Train accuracy: 0.98 Val accuracy: 0.9782

33 Train accuracy: 1.0 Val accuracy: 0.9786

34 Train accuracy: 0.98 Val accuracy: 0.978

35 Train accuracy: 1.0 Val accuracy: 0.9792

36 Train accuracy: 1.0 Val accuracy: 0.9782

37 Train accuracy: 1.0 Val accuracy: 0.9788

38 Train accuracy: 1.0 Val accuracy: 0.9784

39 Train accuracy: 1.0 Val accuracy: 0.9778
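The training loop relies on `mnist.train.next_batch`, which comes from the TensorFlow 1.x tutorial module `tensorflow.examples.tutorials.mnist` and is not part of TensorFlow 2. A plain NumPy generator that shuffles the data once per epoch and yields mini-batches can stand in for it. `shuffle_batch` is a hypothetical helper, sketched under the assumption that X and y are NumPy arrays of equal length.

```python
import numpy as np

def shuffle_batch(X, y, batch_size, rng=None):
    # Yield (X_batch, y_batch) pairs that cover a random permutation of the data once
    if rng is None:
        rng = np.random.default_rng()
    indices = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        batch_idx = indices[start:start + batch_size]
        yield X[batch_idx], y[batch_idx]

# Usage with toy arrays in place of the MNIST training set
X_toy = np.arange(20, dtype=np.float32).reshape(10, 2)  # row i is [2i, 2i + 1]
y_toy = np.arange(10)
batches = list(shuffle_batch(X_toy, y_toy, batch_size=3, rng=np.random.default_rng(42)))
print([len(yb) for _, yb in batches])  # → [3, 3, 3, 1]
```

In the loop above, the inner `for iteration ...` line together with the `next_batch` call could then become something like `for X_batch, y_batch in shuffle_batch(X_train, y_train, batch_size):`, which also guarantees every training instance is seen exactly once per epoch.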

with tf.Session() as sess:
    saver.restore(sess, "./my_model_final.ckpt")
    X_new_scaled = mnist.test.images[:20]
    Z = logits.eval(feed_dict={X: X_new_scaled})
    y_pred = np.argmax(Z, axis=1)  # predicted class = index of the highest logit

INFO:tensorflow:Restoring parameters from ./my_model_final.ckpt


print("Predicted classes:", y_pred)

print("Actual classes: ", mnist.test.labels[:20])

Predicted classes: [7 2 1 0 4 1 4 9 5 9 0 6 9 0 1 5 9 7 3 4]

Actual classes: [7 2 1 0 4 1 4 9 5 9 0 6 9 0 1 5 9 7 3 4]

"""jupyter可视化的代码"""

from IPython.display import clear_output, Image, display, HTML

def strip_consts(graph_def, max_const_size=32):

"""Strip large constant values from graph_def."""

strip_def = tf.GraphDef()

for n0 in graph_def.node:

n = strip_def.node.add()

n.MergeFrom(n0)

if n.op == 'Const':

tensor = n.attr['value'].tensor

size = len(tensor.tensor_content)

if size > max_const_size:

tensor.tensor_content = b"<stripped %d bytes>"%size

return strip_def

def show_graph(graph_def, max_const_size=32):

"""Visualize TensorFlow graph."""

if hasattr(graph_def, 'as_graph_def'):

graph_def = graph_def.as_graph_def()

strip_def = strip_consts(graph_def, max_const_size=max_const_size)

code = """

<script>

function load() {{

document.getElementById("{id}").pbtxt = {data};

}}

</script>

<link rel="import" href="https://tensorboard.appspot.com/tf-graph-basic.build.html" onload=load()>

<div style="height:600px">

<tf-graph-basic id="{id}"></tf-graph-basic>

</div>

""".format(data=repr(str(strip_def)), id='graph'+str(np.random.rand()))

iframe = """

<iframe seamless style="width:1200px;height:620px;border:0" srcdoc="{}"></iframe>

""".format(code.replace('"', '"'))

display(HTML(iframe))

"""jupyter下可视化tensorboard"""

show_graph(tf.get_default_graph())