1. Prerequisites

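- One or more machines running CentOS 7.x x86_64

- Hardware: 2 GB RAM or more, 2 CPUs or more, 30 GB disk or more

- Full network connectivity between all machines in the cluster

- Internet access (needed to pull images)

- Swap disabled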

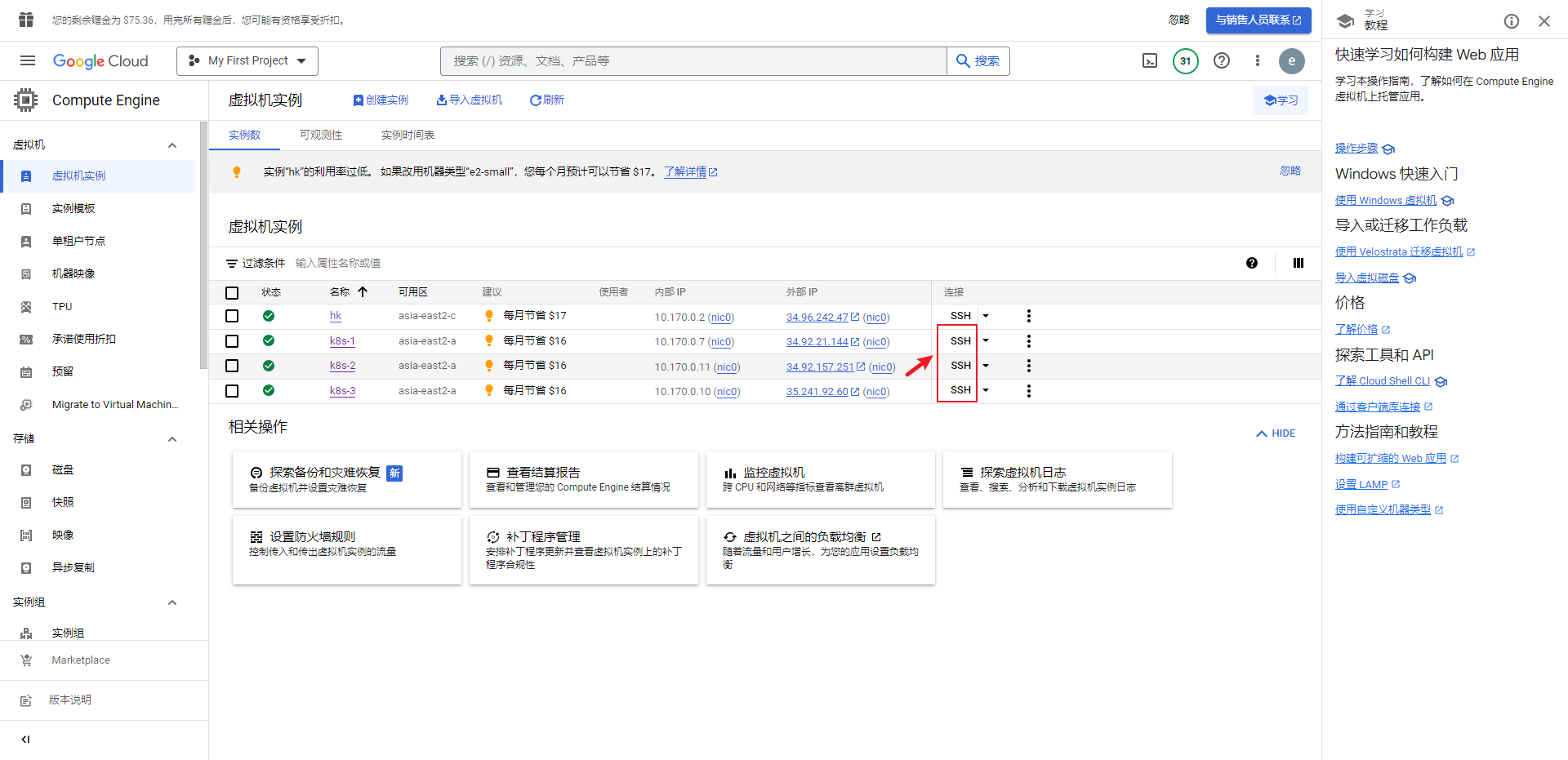

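Here I'm using three Hong Kong servers from Google Cloud's free tier, so there are no Great Firewall related issues.

- k8s-1 is the master node; the other two are worker nodes.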

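2. Deployment steps

- Install Docker and kubeadm on all nodes

- Deploy the Kubernetes master

- Deploy the container network plugin

- Deploy the Kubernetes nodes and join them to the cluster

- Deploy the Dashboard web UI to view Kubernetes resources visually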

3. Connect to the servers with an SSH client

3.1 Set the root password and enable SSH password login

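Log in to the server through the console's SSH.

Tip:

In the SSH window, Ctrl+Insert copies the selected text.

In the SSH window, Shift+Insert pastes the copied text into the SSH window.

Switch to the root user and change the root password.

Note:

The password is not echoed while you type. Just type it and press Enter, then type it again and press Enter.

```text
zhouxx@instance-2:~$ sudo -i
root@instance-2:~# passwd
New password:
Retype new password:
passwd: password updated successfully
```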

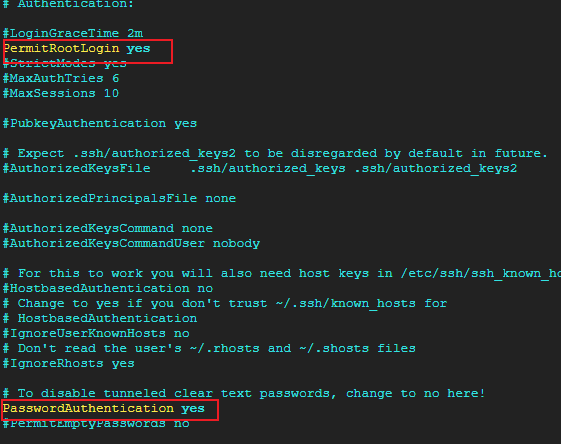

Configure SSH password login on the server

```shell
# Open the sshd configuration file
vim /etc/ssh/sshd_config
```

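Set the following items in the file to yes.

vim tips:

After running vim /etc/ssh/sshd_config, press i to enter insert mode.

When you're done editing, press Esc to leave insert mode, then type :wq to save and exit.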
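The screenshot is not reproduced as text, so this assumes the items it shows are the usual two, `PermitRootLogin` and `PasswordAuthentication`. Under that assumption, the same edit can be scripted instead of done in vim. In this minimal sketch, the hypothetical helper `enable_ssh_password_login` uncomments both directives and sets them to `yes`. If a directive is missing, the helper appends it.

```shell
#!/usr/bin/env bash
# Hypothetical helper. Assumes the screenshot's items are PermitRootLogin and
# PasswordAuthentication; sets both to "yes" in the given sshd_config file.
enable_ssh_password_login() {
  local conf="$1"
  local key
  for key in PermitRootLogin PasswordAuthentication; do
    if grep -Eq "^[#[:space:]]*${key}[[:space:]]" "$conf"; then
      # Uncomment the directive if needed and force its value to yes
      sed -ri "s/^[#[:space:]]*${key}[[:space:]].*/${key} yes/" "$conf"
    else
      # Directive not present at all: append it
      echo "${key} yes" >> "$conf"
    fi
  done
}
```

On the server you would run `enable_ssh_password_login /etc/ssh/sshd_config` as root, then restart sshd as in the next step. Like the rest of this guide, the helper relies on GNU `sed -ri`.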

Restart the sshd service

```shell
service sshd restart
```

After the restart, you can connect to the server with an SSH client.

4. Deployment

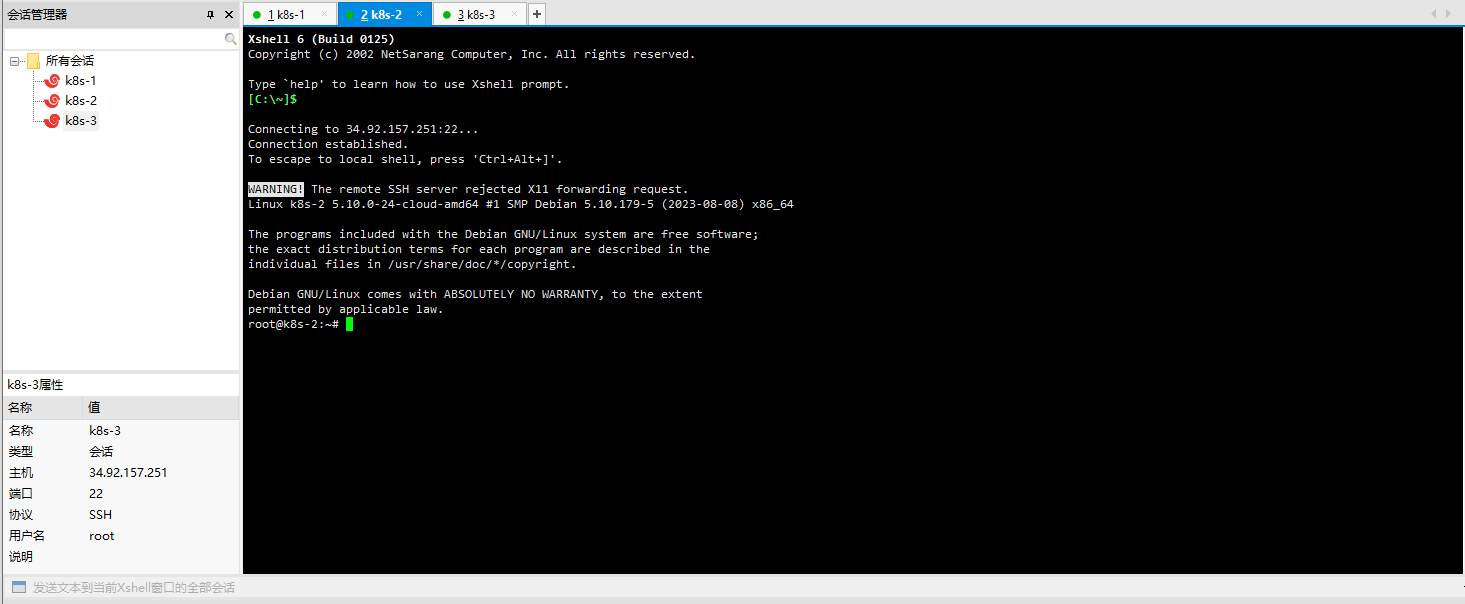

4.1 Connect to the servers with Xshell or a similar tool

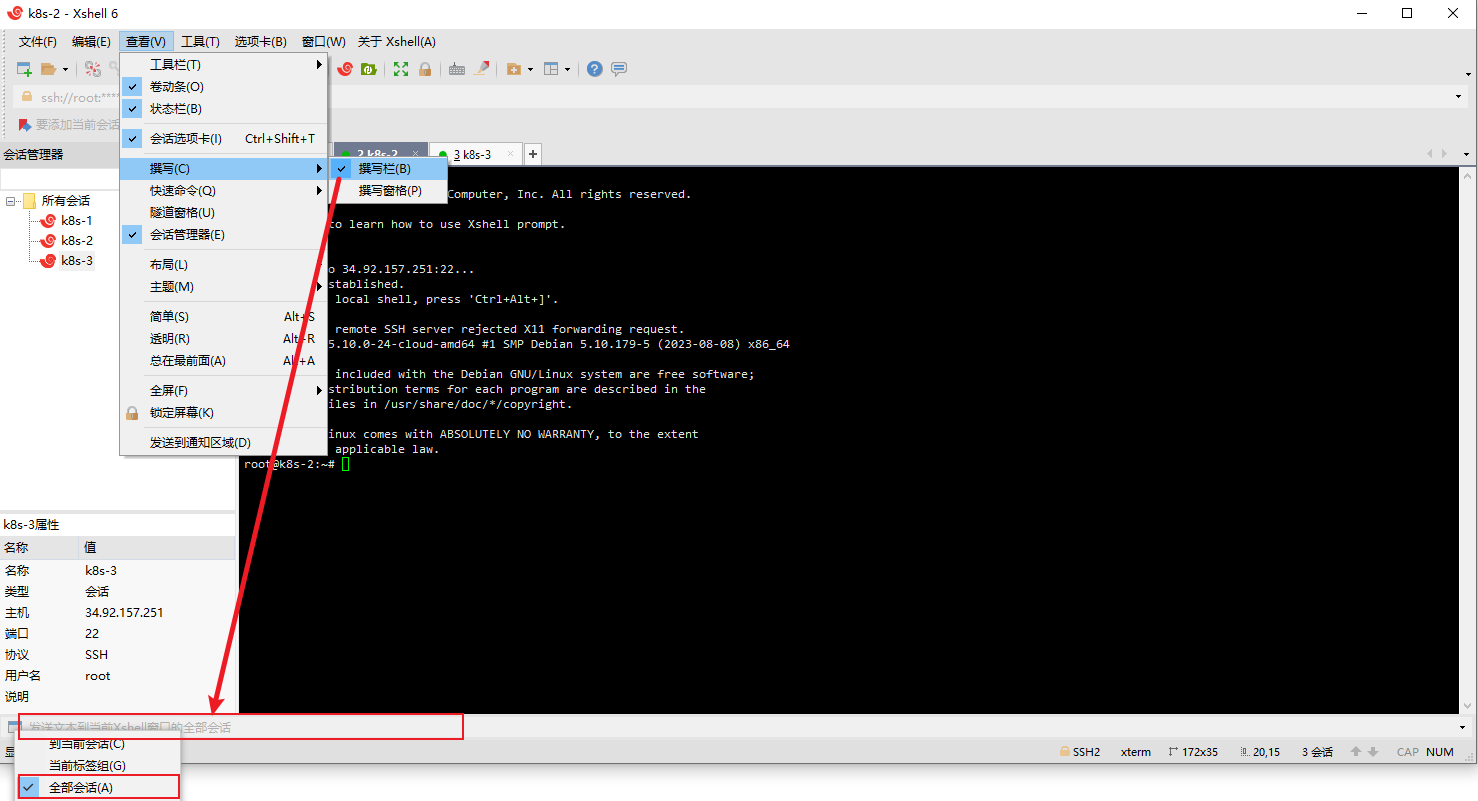

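4.2 Open the compose bar and set it to send to all sessions

This way, commands that must run on every server can be sent to all of them at once from the compose bar.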

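4.3 Set up the Linux environment (run on all three nodes)

Disable the firewall

This setup is for learning, so I simply turn the firewall off. In production, keep it on and open the required ports instead.

```shell
systemctl stop firewalld
systemctl disable firewalld
```

Disable SELinux

```shell
# Permanent: takes effect after the next reboot
sed -i 's/enforcing/disabled/' /etc/selinux/config
# Temporary: switch to permissive mode for the current boot
setenforce 0
```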

关闭 swap

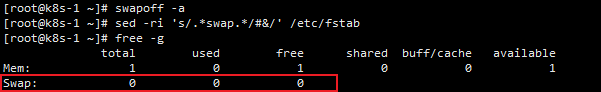

#临时 swapoff -a #永久 sed -ri 's/.*swap.*/#&/' /etc/fstab #验证,swap 必须为 0; free -g
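To see what the permanent `sed` does, this sketch applies the same expression to a sample fstab copy instead of the real /etc/fstab. The two entries are made up for illustration. Every line containing `swap` gets a leading `#`, so the swap partition is not mounted at the next boot.

```shell
#!/usr/bin/env bash
# Illustration only: run the swap-disabling sed on a throwaway fstab copy
# with hypothetical entries, never on the real /etc/fstab.
sample_fstab=$(mktemp)
cat > "$sample_fstab" << 'EOF'
UUID=1111-aaaa / xfs defaults 0 0
UUID=2222-bbbb swap swap defaults 0 0
EOF
# Same expression as above: prefix every line containing "swap" with "#"
sed -ri 's/.*swap.*/#&/' "$sample_fstab"
cat "$sample_fstab"
```

The expression is not idempotent: running it a second time turns `#` into `##`. That is harmless, but untidy.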

Add hostname-to-IP mappings

Run vi /etc/hosts and add:

```text
34.92.240.157 k8s-1
34.92.21.144 k8s-2
34.92.157.251 k8s-3
```

Set the new hostname on each node

```shell
# <newhostname>, e.g. k8s-1
hostnamectl set-hostname <newhostname>
```
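The same hosts entries go on every node, and commands sent through the compose bar can easily run twice. An idempotent append avoids duplicate entries. In this sketch, the hypothetical helper `add_host_entry` appends `IP hostname` only when the hostname is not already mapped in the given file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" to a hosts file unless the
# hostname already appears on a non-comment line, so re-running is safe.
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # Fields 2..NF of each non-comment line are hostnames/aliases
  if ! awk -v n="$name" '!/^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                          END { exit !found }' "$hosts_file"; then
    echo "$ip $name" >> "$hosts_file"
  fi
}
```

For example, as root on each node run `add_host_entry /etc/hosts 34.92.240.157 k8s-1`, then repeat for k8s-2 and k8s-3.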

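Pass bridged IPv4 traffic to the iptables chains

```shell
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
```

Troubleshooting:

If you get a "Read-only file system" error, remount the root file system read-write:

```shell
mount -o remount,rw /
```

Run the following commands to apply the changes:

```shell
modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf
```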
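`modprobe br_netfilter` only loads the module until the next reboot, and the `net.bridge.*` keys only exist while that module is loaded. This sketch makes both settings persistent. It assumes systemd's `/etc/modules-load.d` mechanism, which CentOS 7 has. The helper name `write_k8s_net_conf` is hypothetical. It takes a root directory, so you can try it on a scratch directory first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the module-load and sysctl files under a root
# directory ("/" on a real node, a temp dir for a dry run).
write_k8s_net_conf() {
  local root="${1:-/}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Loaded at boot by systemd-modules-load.service
  echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  # Same settings as the heredoc above
  cat > "$root/etc/sysctl.d/k8s.conf" << 'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a node you would run `write_k8s_net_conf /` as root, then `modprobe br_netfilter` and `sysctl --system`.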

Install the IPVS tools

To make IPVS proxy rules easier to inspect, it's best to install the management tool ipvsadm:

```shell
yum install ipset -y
yum install ipvsadm -y
```
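Installing ipvsadm only provides the tool. kube-proxy stays in its default iptables mode unless its `mode` is set to `ipvs` and the IPVS kernel modules are loaded. This sketch lists those modules in a modules-load.d file. `write_ipvs_modules` is a hypothetical helper name. The conntrack module name is an assumption based on the kernel: `nf_conntrack_ipv4` on CentOS 7's 3.10 kernel, `nf_conntrack` on 4.19 and later.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the IPVS modules kube-proxy's ipvs mode needs so
# systemd loads them at boot. Arg 2 = conntrack module name for your kernel.
write_ipvs_modules() {
  local root="${1:-/}" conntrack="${2:-nf_conntrack_ipv4}"
  mkdir -p "$root/etc/modules-load.d"
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$conntrack" \
    > "$root/etc/modules-load.d/ipvs.conf"
}
```

After running `write_ipvs_modules /` as root and rebooting, or loading each module with `modprobe`, `lsmod | grep ip_vs` should list the modules.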

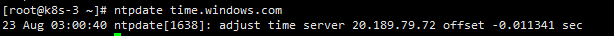

Sync the system time

```shell
# Check the current time
date
# (Optional) sync to the latest time
yum install -y ntpdate
ntpdate time.windows.com
```

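4.4 Install Docker/Containerd

The Kubernetes version used here (1.17) uses Docker as its default container runtime, so install Docker first. ==Starting with Kubernetes 1.24, dockershim has been removed from the kubelet.== For historical reasons, Docker does not implement the CRI (Container Runtime Interface) standard that Kubernetes is built around. From v1.24 onward, the kubelet can therefore no longer use Docker directly as its container runtime.

If you still want to use Docker, you can put an intermediate layer, cri-dockerd, between the kubelet and Docker. cri-dockerd is a shim that implements CRI. On one side it speaks CRI to the kubelet; on the other it talks to the Docker API. This lets Kubernetes use Docker as its container runtime indirectly. The drawback of this architecture is obvious: the call chain is longer and less efficient.

The main command-line differences between Containerd and Docker are: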

| Function | Docker | Containerd (crictl) |
|---|---|---|
| List local images | docker images | crictl images |
| Pull an image | docker pull | crictl pull |
| Push an image | docker push | None |
| Remove a local image | docker rmi | crictl rmi |
| Inspect an image | docker inspect IMAGE-ID | crictl inspecti IMAGE-ID |
| List containers | docker ps | crictl ps |
| Create a container | docker create | crictl create |
| Start a container | docker start | crictl start |
| Stop a container | docker stop | crictl stop |
| Remove a container | docker rm | crictl rm |
| Inspect a container | docker inspect | crictl inspect |
| attach | docker attach | crictl attach |
| exec | docker exec | crictl exec |
| logs | docker logs | crictl logs |
| stats | docker stats | crictl stats |

Remove any previously installed Docker

```shell
sudo yum remove docker \
                docker-client \
                docker-client-latest \
                docker-common \
                docker-latest \
                docker-latest-logrotate \
                docker-logrotate \
                docker-engine
```

Install Docker CE, starting with its dependencies

```shell
sudo yum install -y yum-utils device-mapper-persistent-data lvm2
```

Add the Docker repo to yum

```shell
sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
```

Install docker, the docker CLI, and containerd

```shell
sudo yum install -y docker-ce docker-ce-cli containerd.io
```

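Configure a Docker registry mirror

Get your own Docker registry mirror address from Alibaba Cloud and use it in place of xxxxx below.

```shell
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://xxxxx.mirror.aliyuncs.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
```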
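The kubeadm init output later warns `detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd"`. This sketch writes a daemon.json that keeps the mirror and also switches Docker to the systemd cgroup driver through `exec-opts`. `xxxxx` is still a placeholder for your own mirror ID. The helper name `write_docker_daemon_json` and its directory argument are hypothetical. Do this before `kubeadm init`, because the kubelet and the container runtime must use the same cgroup driver.

```shell
#!/usr/bin/env bash
# Hypothetical helper: Docker daemon.json with the registry mirror plus the
# systemd cgroup driver (addresses kubeadm's IsDockerSystemdCheck warning).
# "xxxxx" is a placeholder for your own Alibaba Cloud mirror ID.
write_docker_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir"
  cat > "$dir/daemon.json" << 'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://xxxxx.mirror.aliyuncs.com"]
}
EOF
}
```

After running it as root, run `systemctl daemon-reload && systemctl restart docker`. `docker info | grep -i cgroup` should then report `Cgroup Driver: systemd`.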

Enable Docker at boot

```shell
sudo systemctl enable docker
```

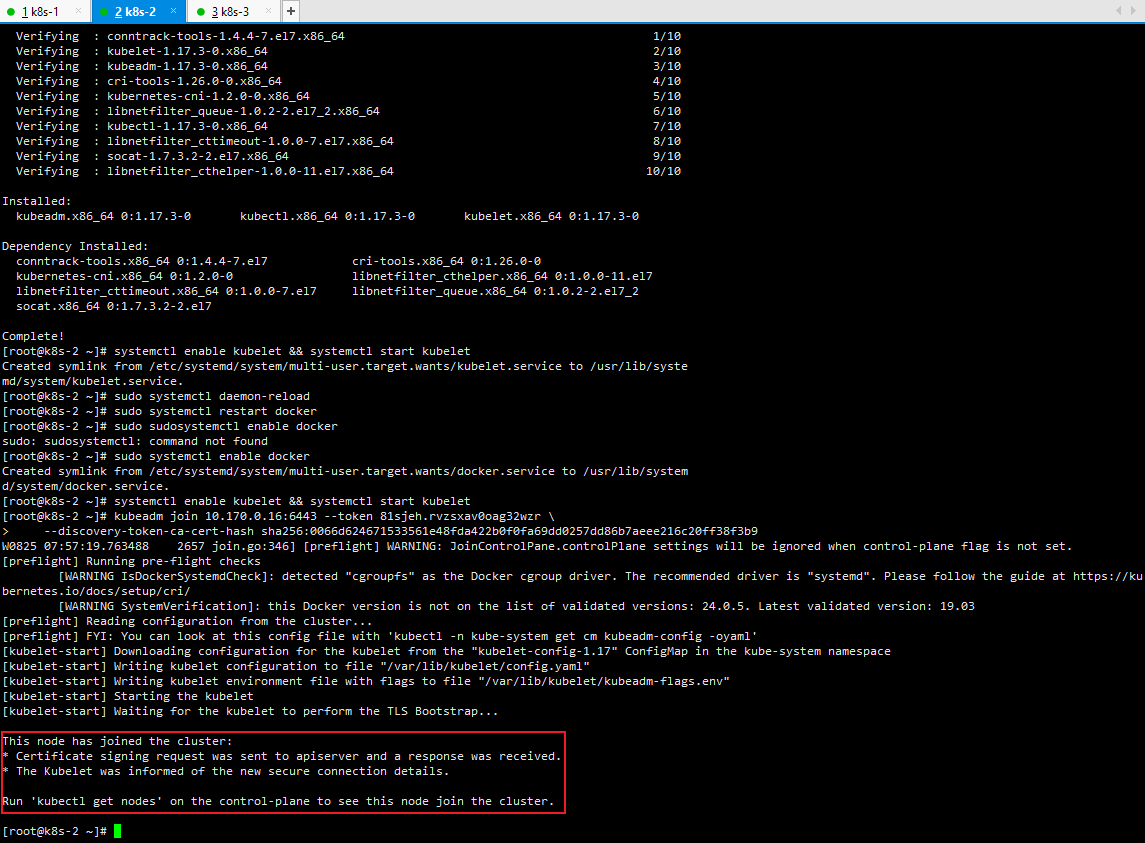

4.5 Install kubeadm, kubelet, and kubectl on all nodes

Add the Alibaba Cloud yum repository

```shell
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

Install kubeadm, kubelet, and kubectl. I pin version 1.17.3 here; set whichever version you need.

```shell
yum list | grep kube
yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3
```

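Point crictl at containerd (only needed if you use containerd). This configures the crictl CLI. The runtime socket the kubelet uses is chosen by kubeadm and can be set explicitly with `kubeadm init --cri-socket`.

```shell
crictl config runtime-endpoint unix:///run/containerd/containerd.sock
systemctl daemon-reload
```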
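`crictl config` stores its settings in `/etc/crictl.yaml`, so writing that file directly is an equivalent approach. This sketch sets both `runtime-endpoint` and `image-endpoint` (standard crictl config keys) to the containerd socket. The helper name `write_crictl_config` is hypothetical. Its directory argument exists only so you can try it on a scratch path.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write crictl's config so the crictl CLI talks to
# containerd. This does not change which runtime the kubelet uses.
write_crictl_config() {
  local dir="${1:-/etc}"
  local sock="unix:///run/containerd/containerd.sock"
  cat > "$dir/crictl.yaml" << EOF
runtime-endpoint: $sock
image-endpoint: $sock
timeout: 10
EOF
}
```

After `write_crictl_config` (as root), `crictl info` is a quick way to check that crictl can reach containerd.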

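Enable the kubelet at boot

```shell
systemctl enable kubelet && systemctl start kubelet
```

Until kubeadm init has run, the kubelet restarts every few seconds while it waits for its configuration. This is expected.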

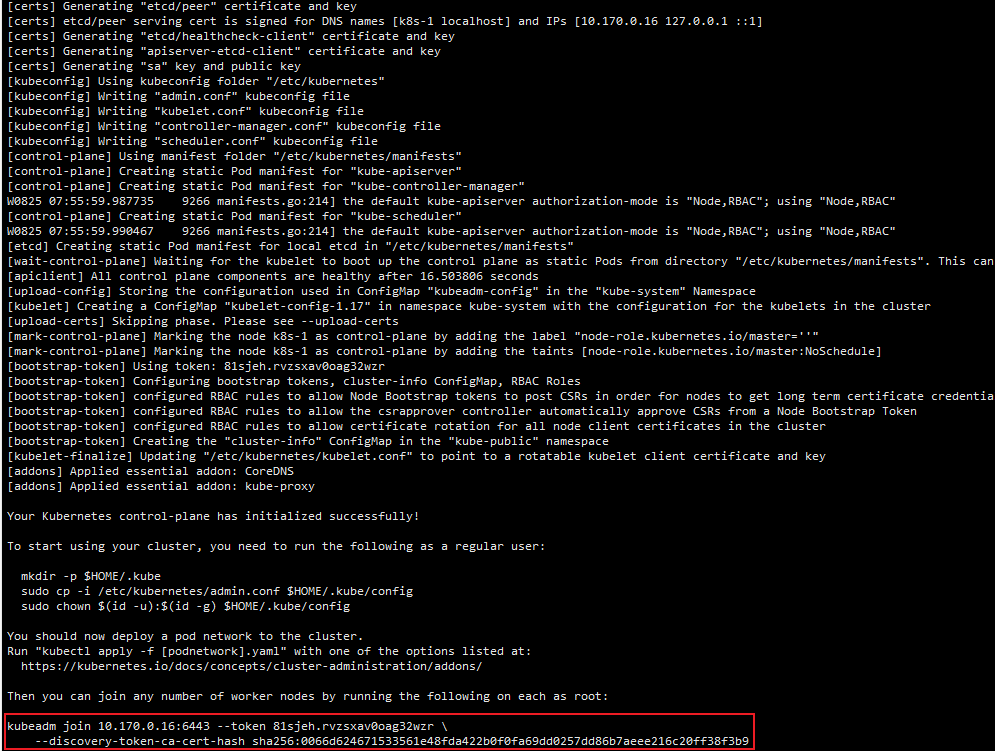

4.5 Initialize the cluster

4.5.1 Initialize the master

Next, initialize the cluster on the master node. `--service-cidr` and `--pod-network-cidr` set our custom Service and Pod subnets:

```shell
kubeadm init \
  --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
  --kubernetes-version v1.17.3 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=10.244.0.0/16
```

Alternatively, export the default init configuration with `kubeadm config print init-defaults > kubeadm.yaml`, edit it, and initialize with `kubeadm init --config=kubeadm.yaml`.

Initialization output:
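Section 4.5.1.1 runs `kubeadm init --config=kubeadm.yaml`. Here is a minimal sketch of a kubeadm.yaml equivalent to the command-line flags above. It assumes the `kubeadm.k8s.io/v1beta2` config API used by kubeadm 1.17; later releases moved to v1beta3. `write_kubeadm_config` is a hypothetical helper. Starting from `kubeadm config print init-defaults` and editing the output works just as well.

```shell
#!/usr/bin/env bash
# Hypothetical helper: a kubeadm config file mirroring the init flags above.
# Assumes the v1beta2 config API (kubeadm 1.15 and later 1.x releases).
write_kubeadm_config() {
  local out="${1:-kubeadm.yaml}"
  cat > "$out" << 'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.17.3
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/16   # same as --service-cidr
  podSubnet: 10.244.0.0/16      # same as --pod-network-cidr; must match the CNI plugin
EOF
}
```

Usage: `write_kubeadm_config kubeadm.yaml && kubeadm init --config=kubeadm.yaml`.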

```text
W0825 07:55:55.081708 9266 validation.go:28] Cannot validate kube-proxy config - no validator is available
W0825 07:55:55.081992 9266 validation.go:28] Cannot validate kubelet config - no validator is available
[init] Using Kubernetes version: v1.17.3
[preflight] Running pre-flight checks
[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 24.0.5. Latest validated version: 19.03
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.170.0.16]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-1 localhost] and IPs [10.170.0.16 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-1 localhost] and IPs [10.170.0.16 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
W0825 07:55:59.987735 9266 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[control-plane] Creating static Pod manifest for "kube-scheduler"
W0825 07:55:59.990467 9266 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 16.503806 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node k8s-1 as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node k8s-1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: 81sjeh.rvzsxav0oag32wzr
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.170.0.16:6443 --token 81sjeh.rvzsxav0oag32wzr \
    --discovery-token-ca-cert-hash sha256:0066d624671533561e48fda422b0f0fa69dd0257dd86b7aeee216c20ff38f3b9
```

On the master, remember to run:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

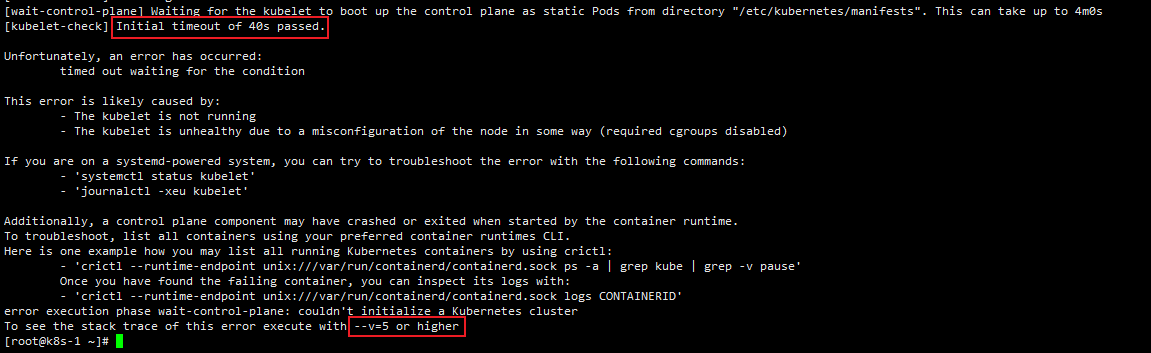

4.5.1.1 问题排查-1

我初始化的时候在这里出现问题,让我使用

--v=5可以查看更多错误信息

直接使用

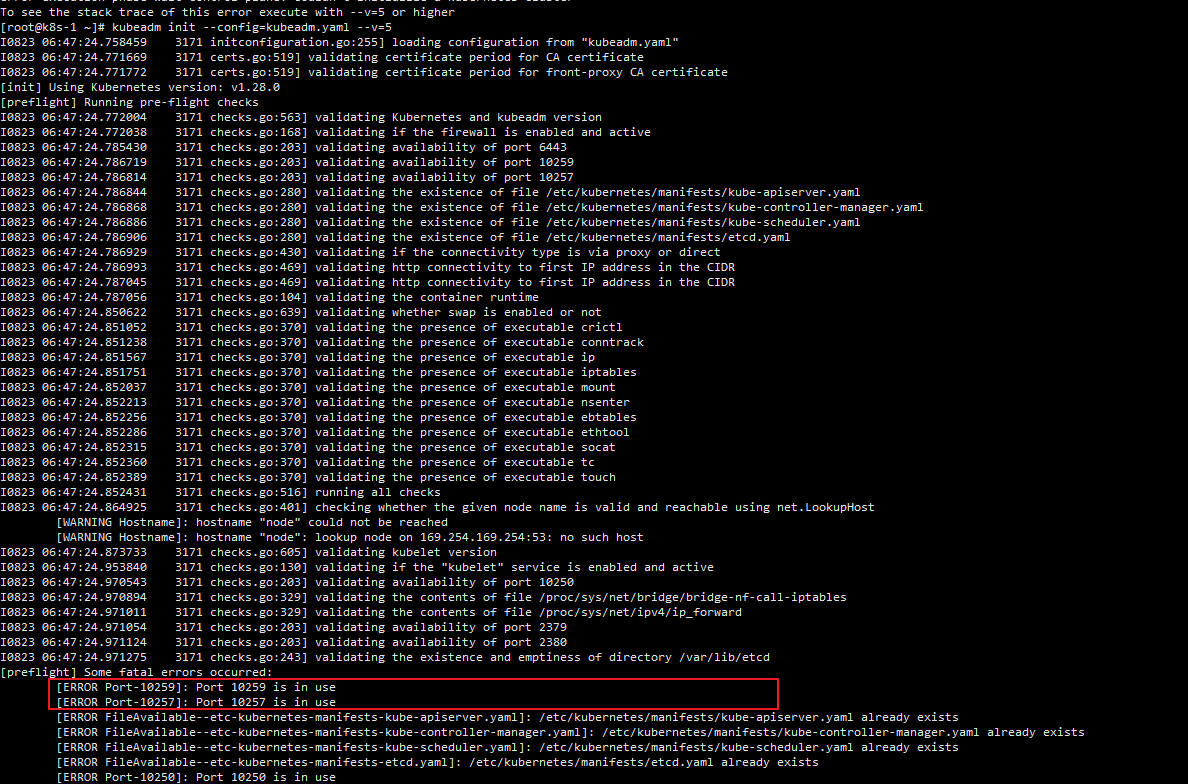

kubeadm init --config=kubeadm.yaml --v=5

发现问题是端口被占用,就是上一次出错了,但是有些服务起来了,所以再次初始化端口占用,需要reset kubeadm 然后重启kubelet

此时需要kubeadm重新init,参照4.5.1.3

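4.5.1.2 Troubleshooting 2: check the kubelet logs

```shell
journalctl -xeu kubelet
```

4.5.1.3 Re-running kubeadm init

If something went wrong and you want to initialize again, run the following commands and then initialize the master again:

```shell
kubeadm reset
systemctl start kubelet
rm -rf $HOME/.kube
```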

4.5.2 Join the cluster

Copy this command and run it on the worker nodes:

```shell
kubeadm join 10.170.0.16:6443 --token 81sjeh.rvzsxav0oag32wzr \
    --discovery-token-ca-cert-hash sha256:0066d624671533561e48fda422b0f0fa69dd0257dd86b7aeee216c20ff38f3b9
```

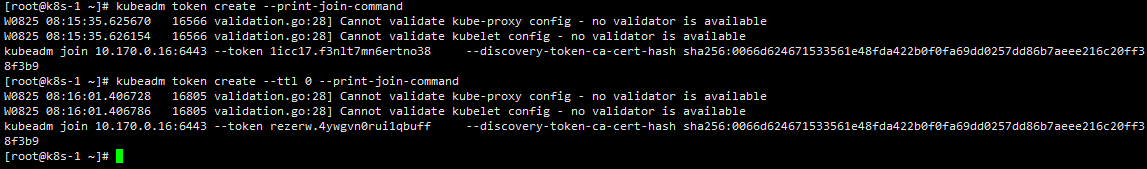

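4.5.2.1 What if the token has expired?

```shell
kubeadm token create --print-join-command
kubeadm token create --ttl 0 --print-join-command
```

Both commands create a new token and print the matching join command. Tokens expire after 24 hours by default. `--ttl 0` creates a token that never expires, which is convenient but less secure.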
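If you still have a valid token (`kubeadm token list`) but lost the hash, you can recompute the `--discovery-token-ca-cert-hash` value from the cluster CA certificate, `/etc/kubernetes/pki/ca.crt` on the master. This sketch wraps the openssl pipeline from the kubeadm join documentation in a hypothetical helper, `ca_cert_hash`. It assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sha256 of the CA certificate's DER-encoded public key,
# i.e. the hex string kubeadm join expects after "sha256:".
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master: `kubeadm join 10.170.0.16:6443 --token <token> --discovery-token-ca-cert-hash sha256:$(ca_cert_hash)`.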

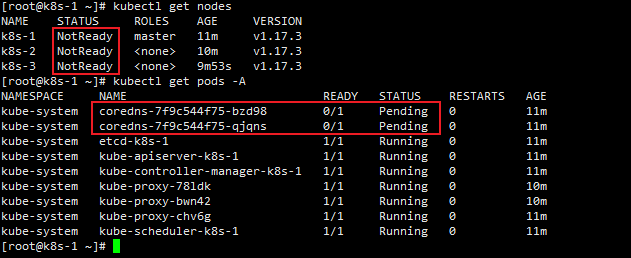

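4.5.3 Install the Pod network plugin (CNI) on the master node

After the steps above, listing the pods shows that coredns stays Pending and all nodes are NotReady. This is because no network plugin is installed yet.

- Common Kubernetes network plugins: Flannel, Calico, Weave

This time we install Flannel:

```shell
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```

After it is applied, wait a little while until all pods are running.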
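Flannel's manifest hard-codes its Pod network in the `net-conf.json` entry of its ConfigMap, `10.244.0.0/16` by default. That value has to match the `--pod-network-cidr` passed to kubeadm init. This sketch reads the value from a local copy of kube-flannel.yml so you can compare the two before applying. The helper name `flannel_network` is hypothetical. It assumes the `"Network": "..."` line layout of the upstream manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Network" value from net-conf.json in a
# local kube-flannel.yml, to compare with kubeadm's --pod-network-cidr.
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}
```

Example: after `wget` of the manifest, apply it only if `[ "$(flannel_network kube-flannel.yml)" = 10.244.0.0/16 ]` succeeds.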

5. Getting started with the Kubernetes cluster

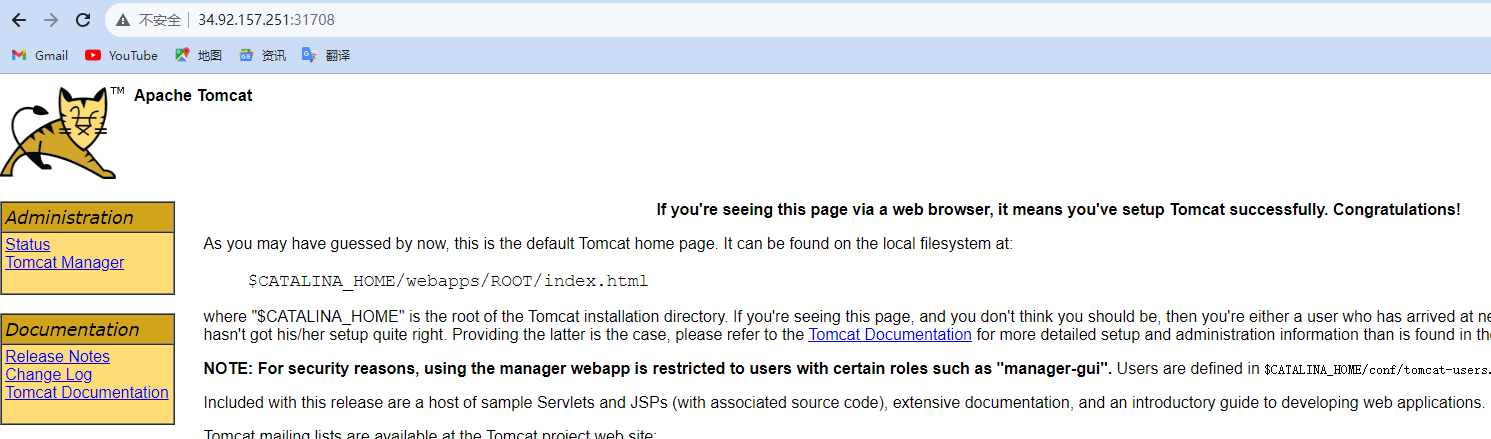

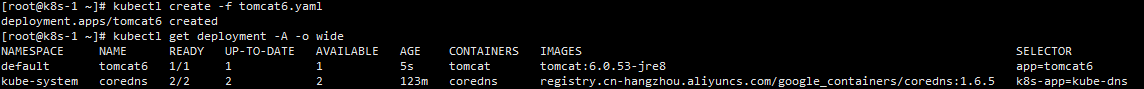

Deploy Tomcat

```shell
kubectl create deployment tomcat6 --image=tomcat:6.0.53-jre8
# "kubectl get pods -o wide" shows the tomcat pod's details
```

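Expose Tomcat

```shell
# The Service listens on port 80 and forwards to port 8080 in the container;
# type NodePort also opens a port (30000-32767 by default) on every node
kubectl expose deployment tomcat6 --port=80 --target-port=8080 --type=NodePort
```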
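For reference, `kubectl expose` above creates roughly the following Service. This sketch writes it to a file named tomcat6-svc.yaml, which is a hypothetical name. The `app: tomcat6` selector matches the label that `kubectl create deployment` puts on the Pods, as the exported YAML later shows. `nodePort` is left out, so Kubernetes picks one from the default range.

```shell
#!/usr/bin/env bash
# Sketch: a Service roughly equivalent to
# "kubectl expose deployment tomcat6 --port=80 --target-port=8080 --type=NodePort".
cat > tomcat6-svc.yaml << 'EOF'
apiVersion: v1
kind: Service
metadata:
  name: tomcat6
  labels:
    app: tomcat6
spec:
  type: NodePort
  selector:
    app: tomcat6          # Pods created by the tomcat6 Deployment
  ports:
  - port: 80              # Service (ClusterIP) port
    targetPort: 8080      # Tomcat's port inside the container
    protocol: TCP
EOF
```

Apply it with `kubectl apply -f tomcat6-svc.yaml`. `kubectl get svc tomcat6 -o jsonpath='{.spec.ports[0].nodePort}'` then prints the assigned NodePort.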

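Check the mapped port

```shell
kubectl get svc -A -o wide
```

The Service's NodePort turned out to be 31708.

Access test

The service is reachable through any node in the cluster:

http://<any_node_ip>:31708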


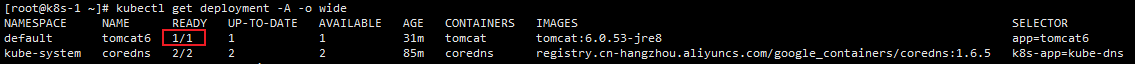

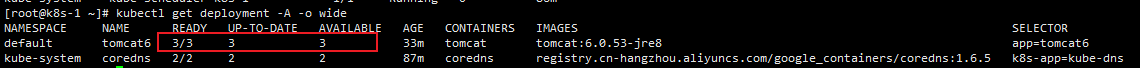

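Scaling

How it works: a Deployment manages a ReplicaSet, and the ReplicaSet keeps the number of Pods at the desired replica count. If a Pod fails, a new one is started automatically.

So deleting a Pod does not remove the service, because a replacement Pod is started. Only deleting the Deployment does.

Before scaling

Scale out

```shell
kubectl scale --replicas=3 deployment tomcat6
```

After scaling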


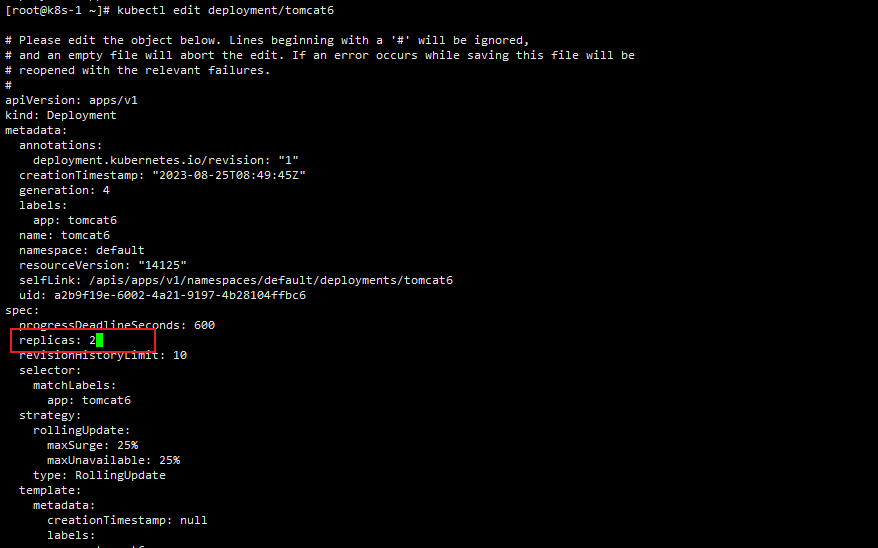

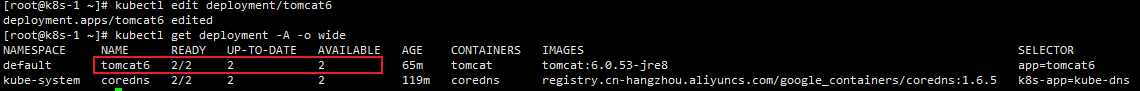

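Edit the Deployment

You can edit the Deployment and the change takes effect immediately. Again, scaling is the example:

```shell
kubectl edit deployment/tomcat6
```

This opens the tomcat6 Deployment YAML in an editor that works like vi.

Change replicas to 2, then save and quit with :wq.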
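You can also make the same change non-interactively with `kubectl patch`, which is handier in scripts. In this small sketch, the hypothetical helper `replicas_patch` builds the patch body and rejects values that would not be valid JSON integers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the patch body that sets a Deployment's replicas.
replicas_patch() {
  case "$1" in
    # Reject empty input, non-digits, and leading zeros (invalid in JSON)
    ''|*[!0-9]*|0?*) echo "usage: replicas_patch <non-negative integer>" >&2; return 1 ;;
  esac
  printf '{"spec":{"replicas":%s}}\n' "$1"
}
```

Usage: `kubectl patch deployment tomcat6 -p "$(replicas_patch 2)"`.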


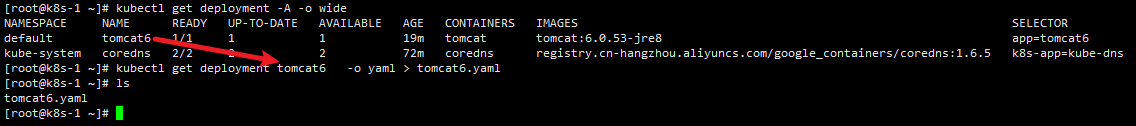

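Export the Deployment YAML

- ConfigMaps, Deployments, Services, Secrets, and so on are exported and imported the same way. Deployment is the example here.

- If you don't specify a Deployment name, all Deployments are exported.

- My tomcat6 is deployed in the default namespace, so I didn't add -n <namespace>.

```shell
kubectl get deployment -A -o wide
kubectl get deployment tomcat6 -o yaml > tomcat6.yaml
```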

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: "2023-08-25T08:49:45Z"
  generation: 1
  labels:
    app: tomcat6
  name: tomcat6
  namespace: default
  resourceVersion: "8307"
  selfLink: /apis/apps/v1/namespaces/default/deployments/tomcat6
  uid: a2b9f19e-6002-4a21-9197-4b28104ffbc6
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: tomcat6
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: tomcat6
    spec:
      containers:
      - image: tomcat:6.0.53-jre8
        imagePullPolicy: IfNotPresent
        name: tomcat
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 1
  conditions:
  - lastTransitionTime: "2023-08-25T08:50:01Z"
    lastUpdateTime: "2023-08-25T08:50:01Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2023-08-25T08:49:45Z"
    lastUpdateTime: "2023-08-25T08:50:01Z"
    message: ReplicaSet "tomcat6-5f7ccf4cb9" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 1
  readyReplicas: 1
  replicas: 1
  updatedReplicas: 1
```
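The exported file carries server-populated fields: `status`, `uid`, `resourceVersion`, `selfLink`, the timestamps, `generation`, and the revision annotation. A file that still sets `metadata.resourceVersion` may be rejected when used to create a new object, so it is safer to strip those fields before reusing the manifest. This sketch shows a cleaned version, written to a hypothetical file named tomcat6-clean.yaml. It keeps only the identifying metadata and the non-default spec. The other fields in the export are defaults the API server fills in again.

```shell
#!/usr/bin/env bash
# Sketch: the exported tomcat6 Deployment without server-populated fields and
# without values the API server defaults (strategy, pull policy, dnsPolicy...).
cat > tomcat6-clean.yaml << 'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat6
  namespace: default
  labels:
    app: tomcat6
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat6
  template:
    metadata:
      labels:
        app: tomcat6
    spec:
      containers:
      - name: tomcat
        image: tomcat:6.0.53-jre8
EOF
```

Use it exactly like the exported file: `kubectl apply -f tomcat6-clean.yaml`.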

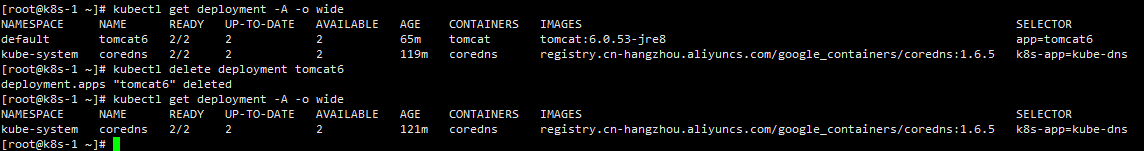

Delete the Deployment

```shell
kubectl delete deployment tomcat6
```

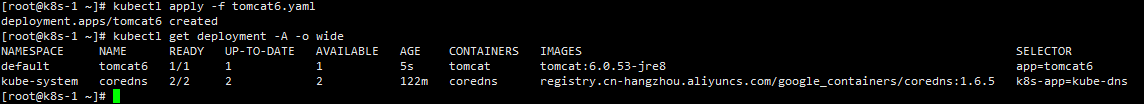

Then redeploy from the exported tomcat6.yaml:

```shell
kubectl apply -f tomcat6.yaml
```

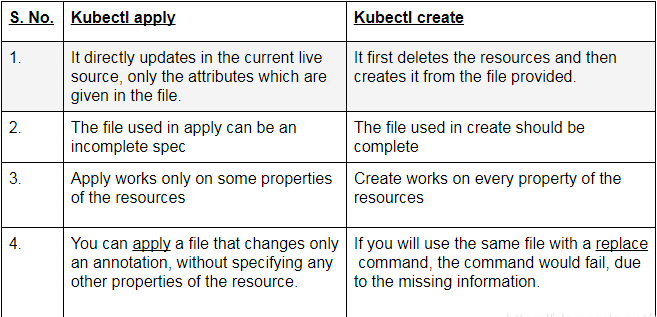

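`kubectl create -f tomcat6.yaml` also works. If kind in the YAML file is Deployment, either command creates a Deployment and the corresponding number of Pods. The difference between them:

- kubectl create

  - Creates the resources described in the file, so the file must contain the complete configuration.

  - Fails if the resource already exists. To replace an existing object from a file, use kubectl replace instead.

- kubectl apply

  - Creates the resource if it does not exist. Otherwise it updates the existing one to match the fields listed in the file, so you can edit the file and apply it again to upgrade.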

6. Install the default Dashboard

Deploy the Dashboard

```shell
wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc7/aio/deploy/recommended.yaml
kubectl apply -f recommended.yaml
```

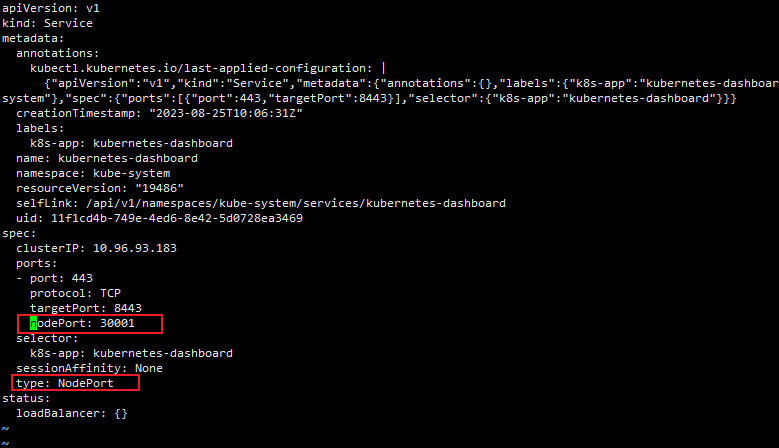

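Expose the Dashboard for external access

By default, the Dashboard is only reachable from inside the cluster. To expose it externally, change its Service to type NodePort by setting `type: NodePort` and `nodePort: 30001`. Dashboard v2 is installed in the kubernetes-dashboard namespace:

```shell
kubectl edit svc/kubernetes-dashboard -n kubernetes-dashboard
```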
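Instead of editing interactively, you can describe the Service in a file. This sketch assumes the `kubernetes-dashboard` Service and namespace created by the v2 recommended.yaml, where port 443 forwards to the Dashboard's HTTPS port 8443. It only adds `type: NodePort` and the fixed `nodePort: 30001`, which must fall in the default 30000-32767 NodePort range. The file name dashboard-svc.yaml is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: NodePort version of the Dashboard v2 Service, pinned to port 30001.
cat > dashboard-svc.yaml << 'EOF'
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
  - port: 443
    targetPort: 8443      # Dashboard container serves HTTPS here
    nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
EOF
```

Apply it with `kubectl apply -f dashboard-svc.yaml`.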

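Access URL: https://NodeIP:30001 (the Dashboard serves HTTPS with a self-signed certificate, so the browser will show a warning)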

Create an authorized account

```shell
kubectl create serviceaccount dashboard-admin -n kube-system
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
```

Output:

```text
[root@k8s-1 ~]# kubectl create serviceaccount dashboard-admin -n kube-system
serviceaccount/dashboard-admin created
[root@k8s-1 ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created
[root@k8s-1 ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
Name:         dashboard-admin-token-zr9hj
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: dashboard-admin
              kubernetes.io/service-account.uid: c7f96dfe-4ed8-47c6-ace5-e597a4e65081

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6ImxJZnpUcVBST0V2OXozemZ2T3lnWnA5bFVtXzFkNU85eUhXNmFIMmswNzgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tenI5aGoiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYzdmOTZkZmUtNGVkOC00N2M2LWFjZTUtZTU5N2E0ZTY1MDgxIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.cvYeYjTt8nIABc8Tk6ALdCB7Mf8fLwSkzfVEePIN9Kjk5uSDaLmJWE3mcFifWg04iVRRdk-WYbwTDvpdf7AN7snVPLnoyee1haO1eK8LKItO641isJFin2X7I-dcV9V5jUxQ-7afpeWGUq0IvYHkvT1PmPfddoaKOLGcWb9SSNh_CR5dWCx331ZI8sWHa58v25KCajLDP-aEbrkXnaAQsTfOvG_OxvM3xRAqwg3kxbwPLZ3vUGehHMe1o70OgB01Xl3OFflgvxKWG5gtMMUHWcPe6JGu5VnR3eX4s7vxpNrxGwnx_N-lGN3vdgR79J__QzQrViKmKXtzqcTaHj0UfQ
```

Log in to the Dashboard with the token value.
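The token is a JWT: three base64url-encoded parts separated by dots. Decoding the middle part (without checking the signature) is a quick way to confirm which ServiceAccount a token belongs to. This sketch uses a hypothetical helper, `jwt_payload`, built only from `cut`, `tr`, and `base64`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded claims of a JWT. No signature check.
jwt_payload() {
  local p
  # Second dot-separated field, converted from base64url to standard base64
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the "=" padding that base64url omits
  while [ $(( ${#p} % 4 )) -ne 0 ]; do p="$p="; done
  printf '%s' "$p" | base64 -d
  echo
}
```

For the token above, `jwt_payload "<token>"` should show a `sub` claim of `system:serviceaccount:kube-system:dashboard-admin`.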

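7. KubeSphere (to be completed)

The default Dashboard isn't very useful. With KubeSphere we can connect the whole DevOps pipeline. KubeSphere integrates many components, so it needs a fairly powerful cluster.

KubeSphere is an open-source, cloud-native project: a distributed, multi-tenant container management platform built on top of Kubernetes, the mainstream container orchestration platform. It provides a simple, easy-to-use interface and wizard-style workflows. This lowers the learning curve of the container platform and greatly reduces the complexity of day-to-day development, testing, and operations work.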

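7.1 Install Helm and Tiller

Helm is the package manager for Kubernetes. Like apt on Ubuntu, yum on CentOS, or pip for Python, it lets you quickly find, download, and install software packages. Helm 2 consists of a client component, helm, and a server component, Tiller (Helm 3 removed Tiller). Helm packages a set of K8s resources for unified management, and the Helm project describes it as the best way to find, share, and use software built for Kubernetes.

Helm has two components: the helm client and the Tiller server.

- **Helm client:** a command-line client tool. It is mainly used to create, package, and publish Kubernetes application charts, and to create and manage local and remote chart repositories.

- **Tiller service:** the Helm server side, deployed in the Kubernetes cluster. Tiller receives requests from the Helm client, generates Kubernetes deployment files from a chart (called a Release), and submits them to Kubernetes to create the application. Tiller also provides Release operations such as upgrade, delete, and rollback.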

7.2 Install OpenEBS

OpenEBS manages storage on the Kubernetes nodes and provides local or distributed storage volumes for stateful workloads (StatefulSets).