将分割模型就行腾搜人RT转化后得到engine,该博客主要是针对c++调用tensorRT的模型文件engine

文章目录

1. 框架

文件主要分为两个文件:一个是main_tensorRT(exe),用于调用部署好的AI模型(dll)

一个是segmentationModel(dll),将tensorRT推理的程序封装成dll,用来进行调用。

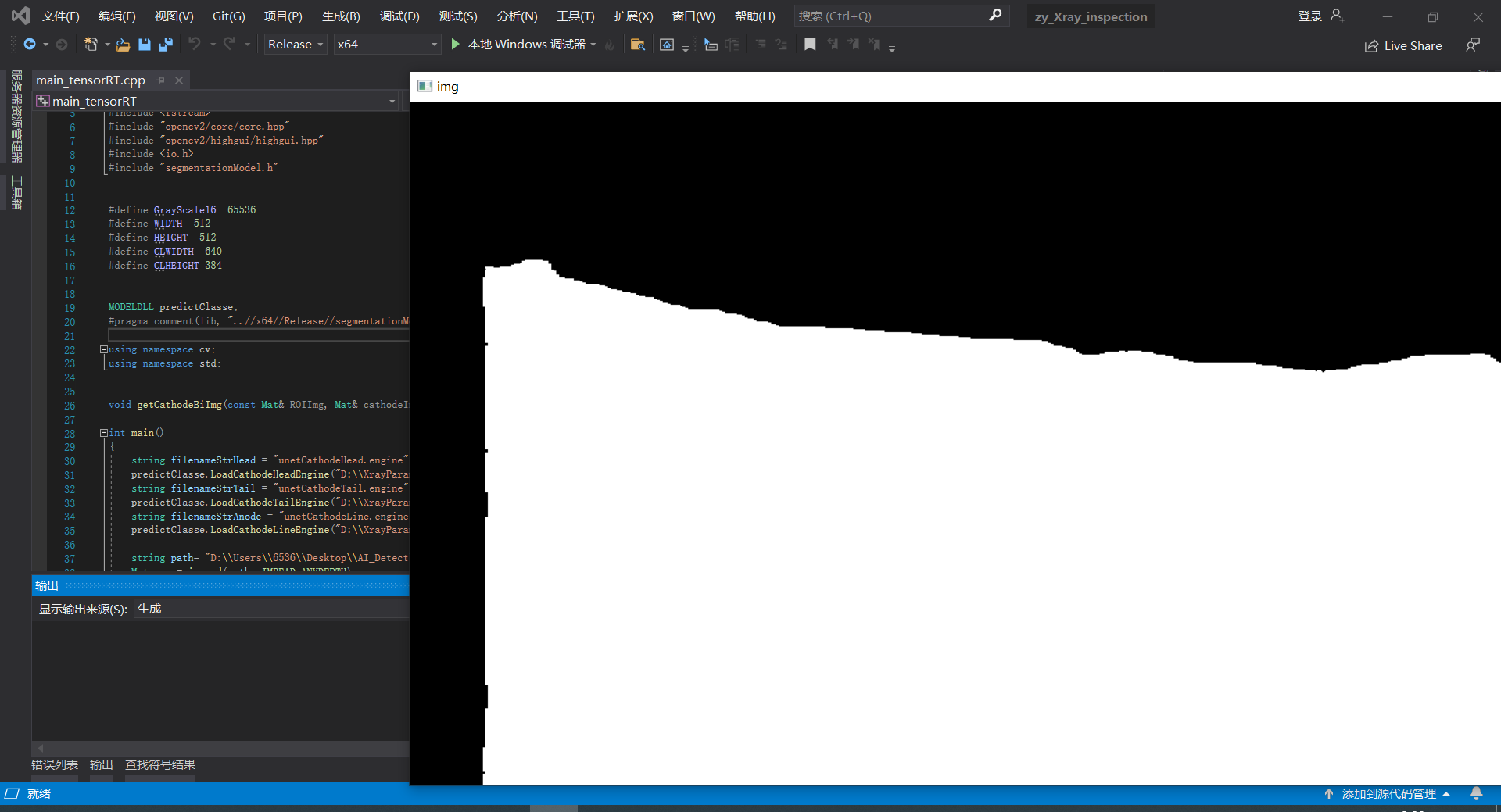

2. main_tensorRT(exe)

2.1 LoadCathodeHeadEngine(读取模型)

2.2 getCathodeBiImg(运行模型)

2.3 输出结果

// Xray_test.cpp : 定义控制台应用程序的入口点。

#define _AFXDLL

#include <iomanip>

#include <string>

#include <fstream>

#include "opencv2/core/core.hpp"

#include "opencv2/highgui/highgui.hpp"

#include <io.h>

#include "segmentationModel.h"

#define GrayScale16 65536

#define WIDTH 512

#define HEIGHT 512

#define CLWIDTH 640

#define CLHEIGHT 384

MODELDLL predictClasse;

#pragma comment(lib, "..//x64//Release//segmentationModel.lib")

using namespace cv;

using namespace std;

void getCathodeBiImg(const Mat& ROIImg, Mat& cathodeImg, int& gap);

int main()

{

string filenameStrHead = "unetCathodeHead.engine";

predictClasse.LoadCathodeHeadEngine("D:\\XrayParameters\\saveEngine\\" + filenameStrHead);

string filenameStrTail = "unetCathodeTail.engine";

predictClasse.LoadCathodeTailEngine("D:\\XrayParameters\\saveEngine\\" + filenameStrTail);

string filenameStrAnode = "unetCathodeLine.engine";

predictClasse.LoadCathodeLineEngine("D:\\XrayParameters\\saveEngine\\" + filenameStrAnode);

string path= "D:\\Users\\6536\\Desktop\\AI_Detect\\mask\\1.png";

Mat pre = imread(path, IMREAD_ANYDEPTH);

int cathodeGap = 1;//分块数量

Mat cathodeMask = cv::Mat::zeros(pre.size(), CV_8UC1);

getCathodeBiImg(pre, cathodeMask, cathodeGap);//预测模块

cv::imshow("img", cathodeMask);

cv::waitKey(0);

return 0;

}

void getCathodeBiImg(const Mat& ROIImg, Mat& cathodeImg, int& gap)

{

//切割

cv::Rect rect, rect1;

for (int k = 0; k < gap; k++)

{

rect.x = k * ROIImg.cols / gap;

rect.y = 0;

rect.width = ROIImg.cols / gap;

rect.height = ROIImg.rows;

rect1.x = k * ROIImg.cols / gap;

rect1.y = 0;

rect1.width = ROIImg.cols / gap;

rect1.height = ROIImg.rows;

rect.x = max(0, int(rect.x));

rect1.x = max(0, int(rect.x));

if (ROIImg.cols % 2 != 0 && k == 0)

{

rect.width = rect.width + 61;

rect1.width = rect1.width + 61;

}

else if (k != gap - 1)

{

rect.width = rect.width + 60;

rect1.width = rect1.width + 60;

}

if (rect.x + rect.width > ROIImg.cols)

{

rect.width = ROIImg.cols - rect.x;

rect1.width = ROIImg.cols - rect1.x;

}

Mat cathodeLocImg;

predictClasse.batteryCathodeHeadPredict(ROIImg(rect), cathodeLocImg, 1, 1, WIDTH, HEIGHT);

bitwise_or(cathodeImg(rect1), cathodeLocImg, cathodeImg(rect1));

}

threshold(cathodeImg, cathodeImg, 0, 255, THRESH_BINARY);

}

3. segmentationModel(dll)

3.1 LoadCathodeHeadEngine(加载模型函数)

3.2 batteryCathodeHeadPredict(模型推理)

#include "pch.h"

#include "segmentationModel.h"

#include "cuda_runtime_api.h"

#include "logging.h"

#include "common.hpp"

#include "calibrator.h"

#define USE_FP16 // comment out this if want to use FP32

#define DEVICE 0 // GPU id

#define CONF_THRESH 0.45 // 0.5

const char* INPUT_BLOB_NAME = "data";

const char* OUTPUT_BLOB_NAME = "prob";

static Logger gLogger;

//IRuntime* runtimeROI;

//ICudaEngine* engineROI;

//IExecutionContext* contextROI;

IRuntime* runtimeCathodeHead;

ICudaEngine* engineCathodeHead;

IExecutionContext* contextCathodeHead;

IRuntime* runtimeCathodeTail;

ICudaEngine* engineCathodeTail;

IExecutionContext* contextCathodeTail;

IRuntime* runtimeCathodeLine;

ICudaEngine* engineCathodeLine;

IExecutionContext* contextCathodeLine;

MODELDLL::MODELDLL()

{

}

MODELDLL::~MODELDLL()

{

}

bool MODELDLL::LoadCathodeHeadEngine(const std::string& engineName)

{

//step1:定义打开文件的方式

std::ifstream file(engineName, std::ios_base::out | std::ios_base::binary);// 如果想以输入方式(只写)打开,就用ifstream来定义;// 如果想以输出方式(只读)打开,就用ofstream来定义;// 如果想以输入/输出方式来打开,就用fstream来定义。

if (!file.good())

{

return false;

}

//step2:定义 trtmodelstream

char* trtModelStream = nullptr;

//step3:获取文件大小

size_t size = 0;

file.seekg(0, file.end);

size = file.tellg();

//step4:回到文件的开头

file.seekg(0, file.beg);

//step5:trtModelStream被定义为一个[“引擎文件大小”]大小的字符

trtModelStream = new char[size];

assert(trtModelStream);

//step6:rtrModelStream读取文件的内容

file.read(trtModelStream, size);

//step7:关闭文件

file.close();

//step8:获取模型文件内容

std::vector<std::pair<char*, size_t>> FileContent;

FileContent.push_back(std::make_pair(nullptr, 0));

FileContent[0] = std::make_pair(trtModelStream, size);

assert(FileContent != nullptr);

//step9:创建runtime

runtimeCathodeHead = createInferRuntime(gLogger);

assert(runtime != nullptr);

//step10:反序列化创建engine

engineCathodeHead = runtimeCathodeHead->deserializeCudaEngine(FileContent[0].first, FileContent[0].second, nullptr);

assert(engine != nullptr);

//step11:创建context,创建一些空间来存储中间激活值

contextCathodeHead = engineCathodeHead->createExecutionContext();

assert(context != nullptr);

return true;

}

bool MODELDLL::batteryCathodeHeadPredict(const Mat& src, Mat& dst, const int& channel, const int& classe, const int& width, const int& height)

{

cudaSetDevice(DEVICE);

if (src.empty())

return false;

//参数初始化

float *data = new float[channel * width * height];

float* prob = new float[classe * width * height];

cv::Mat primg = src.clone();

cv::resize(primg, primg, cv::Size( width, height));

normalizeImg(primg, data);

//step1:使用这些索引,创建buffers指向 GPU 上输入和输出缓冲区

const ICudaEngine& engine = (* contextCathodeHead).getEngine();

assert(engine.getNbBindings() == 2);

//step2:分配buffers空间,为输入输出开辟GPU显存。Allocate GPU memory for Input / Output data

void* buffers[2];

const int inputIndex = engine.getBindingIndex(INPUT_BLOB_NAME);

const int outputIndex = engine.getBindingIndex(OUTPUT_BLOB_NAME);

CHECK(cudaMalloc(&buffers[inputIndex], channel * width * height*sizeof(float)));

CHECK(cudaMalloc(&buffers[outputIndex], classe * width * height * sizeof(float)));

step3:创建cuda流

cudaStream_t stream;

CHECK(cudaStreamCreate(&stream));

step4:从CPU到GPU----拷贝input数据

CHECK(cudaMemcpyAsync(buffers[inputIndex], data, channel * width * height * sizeof(float), cudaMemcpyHostToDevice, stream));

step5: 启动cuda核计算

(*contextCathodeHead).enqueue(1, buffers, stream, nullptr);

step6:从GPU到CPU----拷贝output数据

CHECK(cudaMemcpyAsync(prob, buffers[outputIndex], classe *width * height * sizeof(float), cudaMemcpyDeviceToHost, stream));

cudaStreamSynchronize(stream);

step7:获取Mat格式的输出结果,并调整尺寸

float* mask = prob;

dst = cv::Mat(height, width, CV_8UC1);

uchar* ptmp = NULL;

for (int i = 0; i < height; i++)

{

ptmp = dst.ptr<uchar>(i);

for (int j = 0; j < width; j++)

{

float* pixcel = mask + i * width + j;

if (*pixcel > CONF_THRESH)

{

ptmp[j] = 255;

}

else

{

ptmp[j] = 0;

}

}

}

cv::resize(dst, dst, src.size(), INTER_NEAREST);

step8:释放资源

cudaStreamDestroy(stream);

CHECK(cudaFree(buffers[inputIndex]));

CHECK(cudaFree(buffers[outputIndex]));

delete prob;

delete data;

}

4. 输出结果