Abstract

In this paper, we systematically review Transformer schemes for time series modeling by highlighting their strengths as well as limitations.

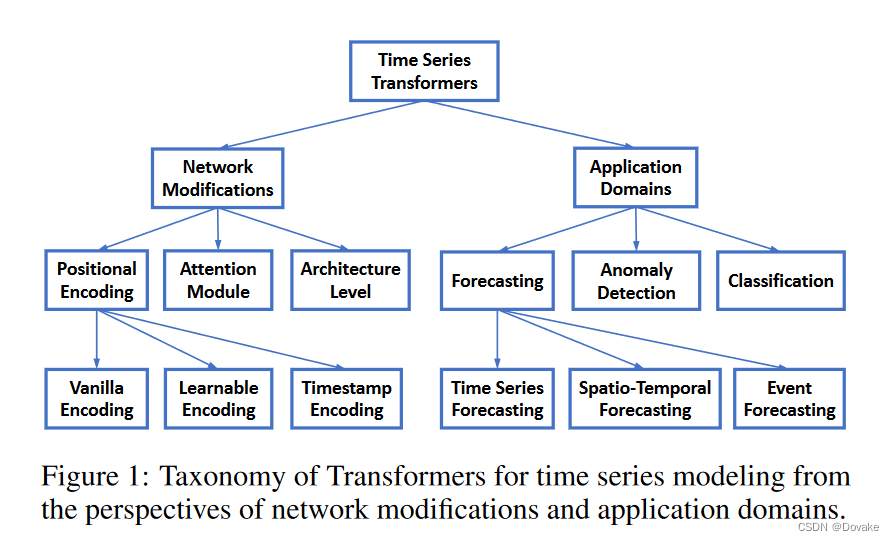

In particular, we examine the development of time series Transformers from two perspectives.

Network structure: we summarize the adaptations and modifications that have been made to Transformers in order to accommodate the challenges of time series tasks.

Applications: we categorize time series Transformers based on common tasks, including forecasting, anomaly detection, and classification.

GitHub: time-series-transformers-review

Introduction

Seasonality or periodicity is an important feature of time series. How to effectively model long-range and short-range temporal dependencies and capture seasonality simultaneously remains a challenge.

Preliminaries of the Transformer

Vanilla Transformer

Input Encoding and Positional Encoding

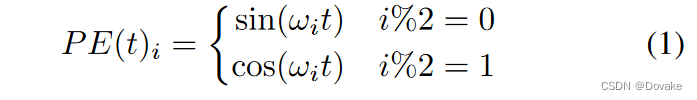

Unlike LSTM or RNN, the vanilla Transformer has no recurrence. Instead, it uses positional encodings added to the input embeddings to model sequence order. We summarize some positional encodings below:

Absolute Positional Encoding

In the vanilla Transformer, for each position index $t$, the encoding vector is given by

$$
PE(t)_i = \begin{cases} \sin(\omega_i t), & i \bmod 2 = 0 \\ \cos(\omega_i t), & i \bmod 2 = 1 \end{cases}
$$

where $\omega_i$ is the hand-crafted frequency for each dimension. Another way is to learn a set of positional embeddings for each position, which is more flexible.
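As a concrete illustration of the hand-crafted absolute encoding, the sketch below computes the encoding vector for one position, using sine on even dimensions and cosine on odd dimensions. The specific frequency schedule (`base = 10000`, one frequency shared per sine/cosine pair) is an assumption taken from the original Transformer design and is not stated in these notes; the function name `sinusoidal_encoding` is hypothetical.

```python
import math


def sinusoidal_encoding(t, d_model, base=10000.0):
    """Return the d_model-dimensional encoding vector for position index t.

    Assumption: the frequency for dimension i is base ** (-2k / d_model)
    with k = i // 2, so each sin/cos pair shares one frequency.
    """
    pe = []
    for i in range(d_model):
        omega = base ** (-(2 * (i // 2)) / d_model)
        # Even dimensions use sine, odd dimensions use cosine.
        pe.append(math.sin(omega * t) if i % 2 == 0 else math.cos(omega * t))
    return pe


# Encoding vectors for the first three positions of a toy 8-dimensional model.
encodings = [sinusoidal_encoding(t, d_model=8) for t in range(3)]
```

Because the frequencies are fixed in advance, nothing here is fitted to the data, which is what distinguishes this variant from learned positional embeddings.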

Relative Positional Encoding

Multi-head Attention

Taxonomy of Transformers in Time Series

Network Modifications for Time Series

Positional Encoding

As the ordering of time series matters, it is of great importance to encode the positions of the input time series into Transformers. A common design is to first encode positional information as vectors and then inject them into the model as an additional input together with the input time series. How these vectors are obtained when modeling time series with Transformers can be divided into three main categories.

Vanilla Positional Encoding: unable to fully exploit the important features of time series data.

Learnable Positional Encoding: as the vanilla positional encoding is hand-crafted and less expressive and adaptive, several studies found that learning appropriate positional embeddings from time series data can be much more effective. Compared to the fixed vanilla positional encoding, learned embeddings are more flexible and can adapt to specific tasks.

Timestamp Encoding
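To make the learnable positional encoding idea more concrete, here is a minimal pure-Python sketch: one trainable vector per position is added to the input embeddings and updated by gradient descent. The class name `LearnablePositionalEmbedding`, the initialization scale, the toy data, and the squared-error objective are all assumptions chosen for illustration; they do not come from any specific model covered by the survey.

```python
import random


class LearnablePositionalEmbedding:
    """One trainable vector per position, added to the input embeddings."""

    def __init__(self, max_len, d_model, seed=0):
        rng = random.Random(seed)
        # Small random initialization; the values are then learned from data.
        self.table = [[rng.gauss(0.0, 0.02) for _ in range(d_model)]
                      for _ in range(max_len)]

    def forward(self, x):
        # x: list of time steps, each a list of d_model floats.
        return [[xi + pi for xi, pi in zip(step, self.table[t])]
                for t, step in enumerate(x)]

    def sgd_step(self, grad_out, lr):
        # The output is x[t] + table[t], so the gradient with respect to
        # table[t] equals the gradient with respect to output[t].
        for t, g in enumerate(grad_out):
            self.table[t] = [p - lr * gi for p, gi in zip(self.table[t], g)]


# Toy fit: learn position vectors so that x + position vector matches a target.
x = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
target = [[1.0, -1.0], [2.0, -2.0], [3.0, -3.0]]
pos = LearnablePositionalEmbedding(max_len=3, d_model=2)
for _ in range(30):
    out = pos.forward(x)
    # Gradient of 0.5 * squared error with respect to the output.
    grad = [[o - y for o, y in zip(o_t, y_t)] for o_t, y_t in zip(out, target)]
    pos.sgd_step(grad, lr=0.5)
final = pos.forward(x)
```

In practice the same idea is usually expressed as an embedding layer in a deep learning framework and trained jointly with the rest of the model; the hand-written update above only shows that the position vectors are ordinary parameters shaped by the task.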