First version of the code

import torch
from torch import nn
from torch import optim
import torchvision
from matplotlib import pyplot as plt
from torch.utils.data import DataLoader


def one_hot(label, depth=10):
    # Convert integer labels of shape [b] into one-hot vectors of shape [b, depth]
    out = torch.zeros(label.size(0), depth)
    idx = label.long().view(-1, 1)
    out.scatter_(dim=1, index=idx, value=1)
    return out


def plot_curve(data):
    # Plot a sequence of values (e.g. the loss) against the step index
    fig = plt.figure()
    plt.plot(range(len(data)), data, color='blue')
    plt.legend(['value'], loc='upper right')
    plt.xlabel('step')
    plt.ylabel('value')
    plt.show()


def plot_image(img, label, name):
    # Show the first six images of a batch together with their labels
    fig = plt.figure()
    for i in range(6):
        plt.subplot(2, 3, i + 1)
        plt.tight_layout()
        # Undo the normalization before displaying
        plt.imshow(img[i][0] * 0.3081 + 0.1307, cmap='gray', interpolation='none')
        plt.title("{}:{}".format(name, label[i].item()))
        plt.xticks([])
        plt.yticks([])
    plt.show()


batch_size = 512

train_loader = DataLoader(
    torchvision.datasets.MNIST(
        './dataset', train=True, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,))
        ])),
    batch_size=batch_size, shuffle=True)

test_loader = DataLoader(
    torchvision.datasets.MNIST(
        './dataset', train=False, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,))
        ])),
    batch_size=batch_size, shuffle=False)

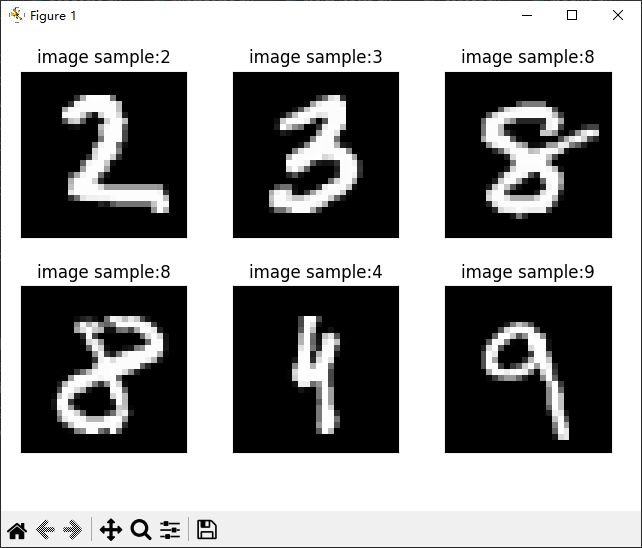

# Fetch one batch and inspect its shape and value range
x, y = next(iter(train_loader))
print(x.shape, y.shape, x.min(), x.max())
plot_image(x, y, 'image sample')

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Equivalent layer-by-layer definition:
        # self.fc1 = nn.Linear(28*28, 256)
        # self.fc2 = nn.Linear(256, 64)
        # self.fc3 = nn.Linear(64, 10)
        self.model = nn.Sequential(
            nn.Linear(28*28, 256),
            nn.ReLU(inplace=True),
            nn.Linear(256, 64),
            nn.ReLU(inplace=True),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        # x: [b, 784], flattened from [b, 1, 28, 28] by the caller
        # h1: relu(xw1+b1)
        # x = F.relu(self.fc1(x))
        # h2: relu(h1w2+b2)
        # x = F.relu(self.fc2(x))
        # h3 = h2w3+b3
        # x = self.fc3(x)
        x = self.model(x)
        return x


net = Net()
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)
loss_fn = nn.MSELoss()
train_loss = []

for epoch in range(3):
    for batch_idx, (x, y) in enumerate(train_loader):
        # Flatten [b, 1, 28, 28] -> [b, 784]
        x = x.view(x.size(0), 28*28)
        output = net(x)
        # MSE needs one-hot targets with the same shape as the output
        y_onehot = one_hot(y)
        loss = loss_fn(output, y_onehot)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        train_loss.append(loss.item())
        if batch_idx % 10 == 0:
            print(epoch, batch_idx, loss.item())

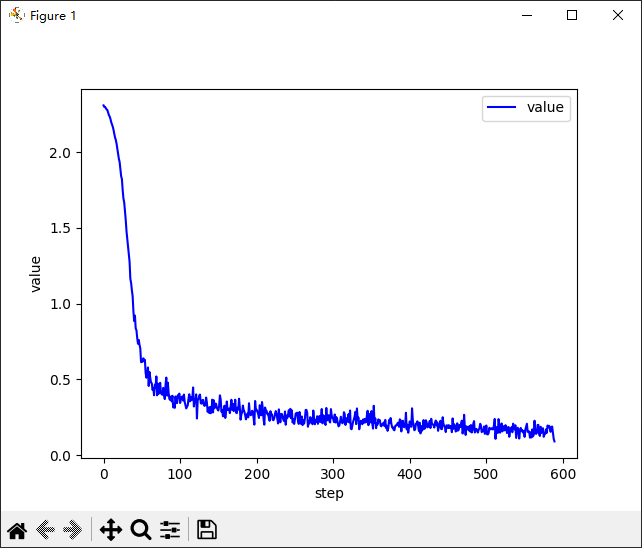

plot_curve(train_loss)

total_correct = 0
for x, y in test_loader:
    x = x.view(x.size(0), 28*28)
    out = net(x)
    pred = out.argmax(dim=1)
    correct = pred.eq(y).sum().float().item()
    total_correct += correct

total_num = len(test_loader.dataset)
acc = total_correct / total_num
print(acc)

# Plot a few test images with their predicted labels
x, y = next(iter(test_loader))
out = net(x.view(x.size(0), 28*28))
pred = out.argmax(dim=1)

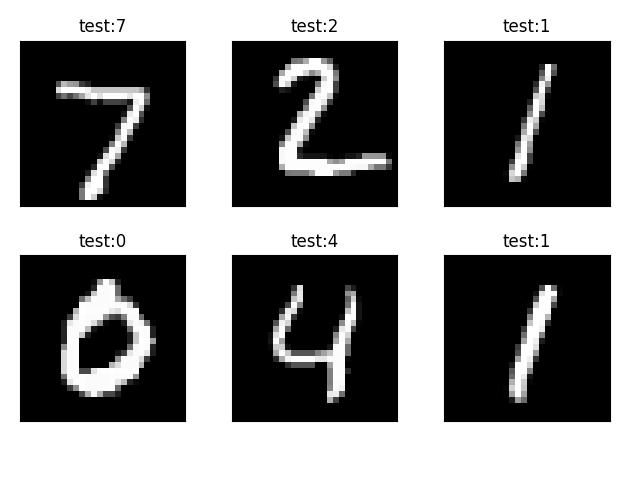

plot_image(x,pred,"test")

Inspecting a batch of training samples:

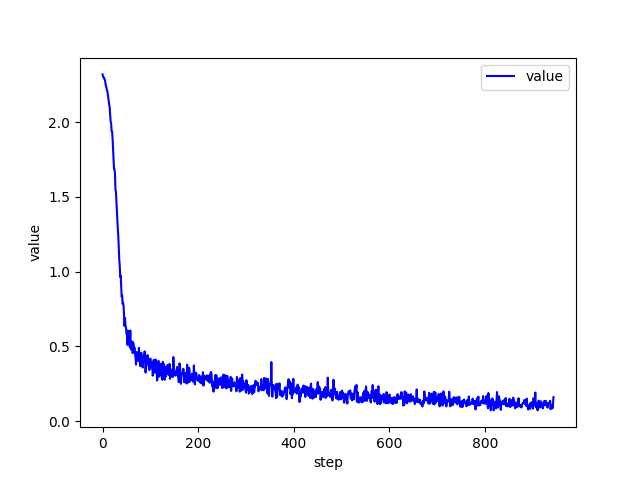
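The `x.min()` and `x.max()` values printed for a batch follow from the `Normalize((0.1307,), (0.3081,))` transform: `ToTensor` scales pixels to [0, 1], and normalization then maps each pixel p to (p - mean) / std. Below is a small sketch of that arithmetic and of the inverse that `plot_image` applies before display. The helper names `normalize` and `denormalize` are hypothetical and only serve the illustration.

```python
MEAN, STD = 0.1307, 0.3081  # the constants passed to Normalize in this post

def normalize(p):
    """Map a pixel value in [0, 1] to its normalized value."""
    return (p - MEAN) / STD

def denormalize(z):
    """Invert the normalization, as plot_image does before imshow."""
    return z * STD + MEAN

lo, hi = normalize(0.0), normalize(1.0)
print(round(lo, 4), round(hi, 4))  # roughly -0.4242 and 2.8215
```

A batch that contains both pure black and pure white pixels should therefore report a minimum near -0.42 and a maximum near 2.82.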

Training loss at each step:

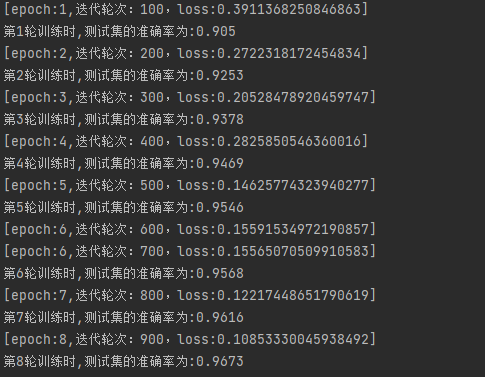

Test-set accuracy after the final epoch:

Predictions on the test set:

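Further optimization: the loss function was replaced (`nn.MSELoss` with `nn.CrossEntropyLoss`), and the number of training epochs was increased from 3 to 5.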
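The practical difference between the two losses can be seen on a toy batch. The sketch below is illustrative only, and the logits are made-up numbers. `nn.MSELoss` compares raw outputs against one-hot targets, whereas `nn.CrossEntropyLoss` takes raw logits and integer class labels directly and applies log-softmax internally, so no one-hot encoding is needed.

```python
import torch
from torch import nn
import torch.nn.functional as F

# Made-up logits for a batch of 2 samples and 3 classes (illustration only).
logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0]])
labels = torch.tensor([0, 2])  # integer class indices

# MSE needs one-hot float targets with the same shape as the output.
one_hot_targets = F.one_hot(labels, num_classes=3).float()
mse = nn.MSELoss()(logits, one_hot_targets)

# Cross-entropy takes the integer labels directly.
ce = nn.CrossEntropyLoss()(logits, labels)

# Equivalent manual computation: mean negative log-softmax of the true class.
manual_ce = -F.log_softmax(logits, dim=1)[torch.arange(2), labels].mean()

print(mse.item(), ce.item(), manual_ce.item())
```

This is why a training loop that uses cross-entropy can pass the labels from the data loader straight to the loss function.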

import torch
from torch import nn
from torch import optim
import torchvision
from matplotlib import pyplot as plt
from torch.utils.data import DataLoader


# Helper functions: one-hot encoding and plotting
def one_hot(label, depth=10):
    out = torch.zeros(label.size(0), depth)
    idx = label.long().view(-1, 1)
    out.scatter_(dim=1, index=idx, value=1)
    return out


def plot_curve(data):
    fig = plt.figure()
    plt.plot(range(len(data)), data, color='blue')
    plt.legend(['value'], loc='upper right')
    plt.xlabel('step')
    plt.ylabel('value')
    plt.show()


def plot_image(img, label, name):
    fig = plt.figure()
    for i in range(6):
        plt.subplot(2, 3, i + 1)
        plt.tight_layout()
        plt.imshow(img[i][0] * 0.3081 + 0.1307, cmap='gray', interpolation='none')
        plt.title("{}:{}".format(name, label[i].item()))
        plt.xticks([])
        plt.yticks([])
    plt.show()


batch_size = 512

train_loader = DataLoader(
    torchvision.datasets.MNIST(
        './dataset', train=True, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,))
        ])),
    batch_size=batch_size, shuffle=True)

test_loader = DataLoader(
    torchvision.datasets.MNIST(
        './dataset', train=False, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,))
        ])),
    batch_size=batch_size, shuffle=False)

# x, y = next(iter(train_loader))
# print(x.shape, y.shape, x.min(), x.max())
# plot_image(x, y, 'image sample')


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.model = nn.Sequential(
            nn.Flatten(),
            nn.Linear(28*28, 256),
            nn.ReLU(inplace=True),
            nn.Linear(256, 64),
            nn.ReLU(inplace=True),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        # x: [b, 1, 28, 28]
        # h1: relu(xw1+b1)
        # x = F.relu(self.fc1(x))
        # h2: relu(h1w2+b2)
        # x = F.relu(self.fc2(x))
        # h3 = h2w3+b3
        # x = self.fc3(x)
        x = self.model(x)
        return x

net = Net()
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)
loss_fn = nn.CrossEntropyLoss()
num_epochs = 5  # renamed from `epoch` so the loop variable does not shadow it
train_loss = []

# Previous version of the loop (MSE with one-hot targets), kept for reference:
# for epoch in range(epoch):
#     for batch_idx, (x, y) in enumerate(train_loader):
#         # x = x.view(x.size(0), 28*28)
#         output = net(x)
#         y_onehot = one_hot(y)
#         loss = loss_fn(output, y_onehot)
#
#         optimizer.zero_grad()
#         loss.backward()
#         optimizer.step()
#         train_loss.append(loss.item())
#         if batch_idx % 10 == 0:
#             print(epoch, batch_idx, loss.item())
# plot_curve(train_loss)
#
# total_correct = 0
# for x, y in test_loader:
#     x = x.view(x.size(0), 28*28)
#     out = net(x)
#     pred = out.argmax(dim=1)
#     correct = pred.eq(y).sum().float().item()
#     total_correct += correct

train_step = 0
for epoch in range(num_epochs):
    for images, labels in train_loader:
        # nn.Flatten inside the model reshapes [b, 1, 28, 28] -> [b, 784]
        output = net(images)
        # CrossEntropyLoss takes integer class labels directly
        loss = loss_fn(output, labels)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        train_loss.append(loss.item())
        train_step += 1
        if train_step % 10 == 0:
            print(epoch, train_step, loss.item())

plot_curve(train_loss)

total_correct = 0
for x, y in test_loader:
    out = net(x)
    pred = out.argmax(dim=1)
    correct = pred.eq(y).sum().float().item()
    total_correct += correct

total_num = len(test_loader.dataset)
acc = total_correct / total_num
print(acc)

# Plot a few test images with their predicted labels
x, y = next(iter(test_loader))
# out = net(x.view(x.size(0), 28*28))
# pred = out.argmax(dim=1)
# plot_image(x, pred, "test")

Training loss at each step:

Test-set accuracy after the final epoch:

Final version:

import torch
from torch import nn
from torch import optim
import torchvision
from matplotlib import pyplot as plt
from torch.utils.data import DataLoader


# Helper functions: one-hot encoding and plotting
def one_hot(label, depth=10):
    out = torch.zeros(label.size(0), depth)
    idx = label.long().view(-1, 1)
    out.scatter_(dim=1, index=idx, value=1)
    return out


def plot_curve(data):
    fig = plt.figure()
    plt.plot(range(len(data)), data, color='blue')
    plt.legend(['value'], loc='upper right')
    plt.xlabel('step')
    plt.ylabel('value')
    plt.show()


def plot_image(img, label, name):
    fig = plt.figure()
    for i in range(6):
        plt.subplot(2, 3, i + 1)
        plt.tight_layout()
        plt.imshow(img[i][0] * 0.3081 + 0.1307, cmap='gray', interpolation='none')
        plt.title("{}:{}".format(name, label[i].item()))
        plt.xticks([])
        plt.yticks([])
    plt.show()


batch_size = 512

train_loader = DataLoader(
    torchvision.datasets.MNIST(
        './dataset', train=True, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,))
        ])),
    batch_size=batch_size, shuffle=True)

test_loader = DataLoader(
    torchvision.datasets.MNIST(
        './dataset', train=False, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.1307,), (0.3081,))
        ])),
    batch_size=batch_size, shuffle=False)

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.model = nn.Sequential(
            nn.Flatten(),
            nn.Linear(28*28, 256),
            nn.ReLU(inplace=True),
            nn.Linear(256, 64),
            nn.ReLU(inplace=True),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x

net = Net()
optimizer = optim.SGD(net.parameters(), lr=0.01, momentum=0.9)
loss_fn = nn.CrossEntropyLoss()
num_epochs = 8  # renamed from `epoch` so the loop variable does not shadow it
train_loss = []
accuracy = []
train_step = 0
total_num = len(test_loader.dataset)

for epoch in range(num_epochs):
    net.train()
    for images, labels in train_loader:
        output = net(images)
        loss = loss_fn(output, labels)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        train_loss.append(loss.item())
        train_step += 1
        if train_step % 100 == 0:
            print("[epoch: {}, step: {}, loss: {}]".format(epoch + 1, train_step, loss.item()))

    # Evaluate on the test set after each epoch
    net.eval()
    with torch.no_grad():
        total_correct = 0
        for images, labels in test_loader:
            out = net(images)
            pred = out.argmax(dim=1)
            correct = pred.eq(labels).sum().float().item()
            total_correct += correct
        acc = total_correct / total_num
        print("Epoch {}: test accuracy = {}".format(epoch + 1, acc))
        accuracy.append(acc)

plot_curve(train_loss)
plot_curve(accuracy)

# Plot a few test images with their predicted labels
x, y = next(iter(test_loader))
with torch.no_grad():
    out = net(x)  # nn.Flatten inside the model handles the reshape
pred = out.argmax(dim=1)
plot_image(x, pred, "test")

Results: prediction accuracy is already fairly high in the early epochs.
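The reported accuracy is the fraction of test samples whose highest-scoring class matches the label, accumulated over all batches. Here is a minimal sketch of that computation on made-up tensors; `count_correct` is a hypothetical helper, not part of the code above.

```python
import torch

def count_correct(logits, labels):
    """Number of samples whose argmax class equals the label."""
    return logits.argmax(dim=1).eq(labels).sum().item()

# Two made-up "batches" of logits over 3 classes (illustration only).
batches = [
    (torch.tensor([[0.1, 0.9, 0.0], [0.8, 0.1, 0.1]]), torch.tensor([1, 0])),
    (torch.tensor([[0.2, 0.3, 0.5], [0.6, 0.3, 0.1]]), torch.tensor([2, 1])),
]
total_correct = sum(count_correct(out, y) for out, y in batches)
total_num = sum(len(y) for _, y in batches)
acc = total_correct / total_num
print(acc)  # 3 of 4 predictions match, so 0.75
```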

Training loss at each iteration:

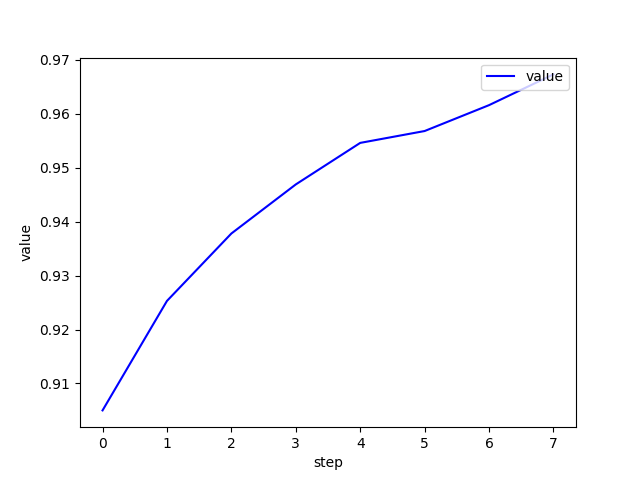
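Per-iteration loss is noisy because each value comes from a single mini-batch. One common way to make the trend easier to read is to plot a moving average of the curve. The sketch below is not part of the original code; the helper name `moving_average` and the window size are assumptions.

```python
def moving_average(values, window=20):
    """Trailing moving average; early points average over what is available."""
    smoothed = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            # Drop the value that just left the window
            running -= values[i - window]
        smoothed.append(running / min(i + 1, window))
    return smoothed

print(moving_average([4.0, 2.0, 3.0, 1.0], window=2))  # [4.0, 3.0, 2.5, 2.0]
```

Calling `plot_curve(moving_average(train_loss))` would then show the smoothed curve.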

Test-set accuracy after each training epoch:

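Judging by the trend, the model has probably not converged yet and accuracy can still improve, so the number of epochs could be increased.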
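Rather than guessing a larger epoch count, one option is to keep training until accuracy stops improving. The check below is a hypothetical sketch: the name `has_plateaued` and the `patience` and `min_delta` thresholds are assumptions, not part of the original code. It inspects a per-epoch history such as the `accuracy` list.

```python
def has_plateaued(history, patience=3, min_delta=1e-3):
    """True if the last `patience` values did not beat the earlier best by min_delta."""
    if len(history) <= patience:
        return False  # not enough epochs to judge
    best_before = max(history[:-patience])
    recent_best = max(history[-patience:])
    return recent_best < best_before + min_delta

print(has_plateaued([0.90, 0.93, 0.95, 0.96]))            # False: still improving
print(has_plateaued([0.90, 0.96, 0.9601, 0.9602, 0.96]))  # True: gains below min_delta
```

Strictly speaking, a held-out validation split is a better basis for this decision than the test set, since stopping based on test accuracy makes the final test number optimistic.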

Predictions of the final model on the test set: