Straight to the code:

import os

import torch

import torch.nn as nn

import pandas as pd

import torch.optim as optim

from torch.utils.data import Dataset, DataLoader

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

batch_size = 256

num_workers = 4

lr = 1e-4

epochs = 20

from torchvision import transforms

image_size = 28

data_transform = transforms.Compose([
    transforms.Resize(image_size),
    transforms.ToTensor()
])

from torchvision import datasets

train_data = datasets.MNIST('./data',train=True,transform=data_transform)

test_data = datasets.MNIST('./data',train=False,transform=data_transform)

train_loader = DataLoader(dataset=train_data, batch_size = batch_size, shuffle = True, num_workers = num_workers, drop_last = True)

test_loader = DataLoader(dataset=test_data, batch_size=batch_size, shuffle=False, num_workers=num_workers)
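The two loaders above differ in `drop_last`, which changes how many batches each epoch yields. A minimal sketch of that arithmetic, assuming the standard MNIST split sizes (60000 training and 10000 test images); the helper `num_batches` is hypothetical and not part of PyTorch:

```python
import math


def num_batches(num_samples: int, batch_size: int, drop_last: bool) -> int:
    """Batches per epoch for a loader with the given settings (hypothetical helper)."""
    if drop_last:
        # An incomplete final batch is discarded.
        return num_samples // batch_size
    # An incomplete final batch is kept.
    return math.ceil(num_samples / batch_size)


train_batches = num_batches(60000, 256, drop_last=True)
test_batches = num_batches(10000, 256, drop_last=False)
dropped = 60000 - train_batches * 256  # training samples skipped per epoch

print(train_batches, test_batches, dropped)
```

Because the training loader shuffles, the skipped samples are a different subset in each epoch.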

import matplotlib.pyplot as plt

image, label = next(iter(train_loader))

print(image.shape, label.shape)

plt.imshow(image[0][0], cmap = 'gray')

print(label[0])

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        # Feature extractor: two conv -> ReLU -> max-pool -> dropout stages
        self.conv = nn.Sequential(
            nn.Conv2d(1, 32, 5),  # in_channels, out_channels, kernel_size
            nn.ReLU(),
            nn.MaxPool2d(2, stride=2),
            nn.Dropout(0.3),
            nn.Conv2d(32, 64, 5),
            nn.ReLU(),
            nn.MaxPool2d(2, stride=2),
            nn.Dropout(0.3)
        )
        # Classifier: flattened 64 x 4 x 4 feature map -> 10 digit classes
        self.fc = nn.Sequential(
            nn.Linear(64 * 4 * 4, 512),
            nn.ReLU(),
            nn.Linear(512, 10)
        )

    def forward(self, x):
        x = self.conv(x)
        x = x.view(-1, 64 * 4 * 4)  # flatten to (batch, 1024)
        x = self.fc(x)
        return x
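The `64 * 4 * 4` input size of the first linear layer follows from the 28 x 28 input and the layer settings above. A small sketch of that calculation, using the usual output-size formula for unpadded convolution and pooling; `conv_out` is a hypothetical helper written for this illustration:

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a conv or pooling layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28                                   # MNIST image height and width
size = conv_out(size, kernel=5)             # Conv2d(1, 32, 5)        -> 24
size = conv_out(size, kernel=2, stride=2)   # MaxPool2d(2, stride=2)  -> 12
size = conv_out(size, kernel=5)             # Conv2d(32, 64, 5)       -> 8
size = conv_out(size, kernel=2, stride=2)   # MaxPool2d(2, stride=2)  -> 4

flat_features = 64 * size * size            # channels * height * width
print(size, flat_features)
```

If `image_size` or a kernel size changes, the value passed to `nn.Linear` and `x.view` has to change with it.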

model = Net()

model = model.cuda()

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=0.001)  # note: the lr = 1e-4 defined above is not used here
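The network ends in a plain `nn.Linear` with no softmax because `nn.CrossEntropyLoss` expects raw logits and applies log-softmax internally. A rough pure-Python sketch of the per-sample quantity it computes; the function name `cross_entropy` and the example logits are made up for illustration:

```python
import math


def cross_entropy(logits, target):
    """Negative log-softmax probability of the target class for one sample."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# All ten classes equally likely: the loss is ln(10).
uniform = cross_entropy([0.0] * 10, target=3)

# A large logit on the correct class drives the loss toward zero.
confident = cross_entropy([10.0 if i == 3 else 0.0 for i in range(10)], target=3)

print(uniform, confident)
```

A loss near ln(10), about 2.30, at the start of training is therefore what an untrained 10-class classifier would be expected to produce.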

train_loss_arr = []

def train(epoch):
    model.train()
    train_loss = 0
    for data, label in train_loader:
        data, label = data.cuda(), label.cuda()
        optimizer.zero_grad()
        output = model(data)
        loss = criterion(output, label)
        loss.backward()
        optimizer.step()
        # loss.item() is the batch mean, so weight it by the batch size (256)
        train_loss += loss.item() * data.size(0)
    # per-sample average over the epoch
    train_loss = train_loss / len(train_loader.dataset)
    train_loss_arr.append(train_loss)
    print('Epoch: {} \tTraining Loss: {:.6f}'.format(epoch, train_loss))
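Multiplying each batch loss by `data.size(0)` before summing gives a per-sample mean rather than a mean of batch means, which matters when batches differ in size. A minimal sketch with made-up numbers; `epoch_mean_loss` is a hypothetical helper, and unlike the function above it divides by the number of samples actually seen:

```python
def epoch_mean_loss(batch_losses, batch_sizes):
    """Per-sample mean loss from per-batch mean losses and batch sizes."""
    total = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical values: two full batches and one short final batch.
batch_losses = [0.5, 0.3, 0.1]
batch_sizes = [256, 256, 16]

weighted = epoch_mean_loss(batch_losses, batch_sizes)
naive = sum(batch_losses) / len(batch_losses)  # over-weights the short batch

print(weighted, naive)
```

Note that with `drop_last = True` the training loop sees slightly fewer samples than `len(train_loader.dataset)`, so dividing by the dataset length gives a marginally smaller value than the mean over the samples actually processed.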

import numpy as np

acc_data = []

def val(epoch):
    model.eval()
    val_loss = 0
    gt_labels = []    # ground-truth labels
    pred_labels = []  # predicted labels
    with torch.no_grad():
        for data, label in test_loader:
            data, label = data.cuda(), label.cuda()
            output = model(data)
            preds = torch.argmax(output, 1)
            gt_labels.append(label.cpu().numpy())
            pred_labels.append(preds.cpu().numpy())
            loss = criterion(output, label)
            val_loss += loss.item() * data.size(0)  # weight by batch size
    val_loss = val_loss / len(test_loader.dataset)
    gt_labels, pred_labels = np.concatenate(gt_labels), np.concatenate(pred_labels)
    acc = np.sum(gt_labels == pred_labels) / len(pred_labels)
    acc_data.append(acc)
    print('Epoch: {} \tValidation Loss: {:.6f}, Accuracy: {:.6f}'.format(epoch, val_loss, acc))
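The accuracy above is computed by collecting one label array per batch, concatenating them, and comparing element-wise. A tiny sketch of that step on made-up labels (the arrays below are illustrative, not real MNIST predictions):

```python
import numpy as np

# Hypothetical per-batch outputs: a batch of 3 followed by a batch of 2.
gt_batches = [np.array([7, 2, 1]), np.array([0, 4])]
pred_batches = [np.array([7, 2, 8]), np.array([0, 4])]

gt = np.concatenate(gt_batches)      # shape (5,)
pred = np.concatenate(pred_batches)  # shape (5,)

# Fraction of positions where prediction and ground truth agree.
acc = np.sum(gt == pred) / len(pred)
print(acc)
```

Concatenating first means batches of different sizes are handled without any extra weighting.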

for epoch in range(1, epochs + 1):
    train(epoch)
    val(epoch)

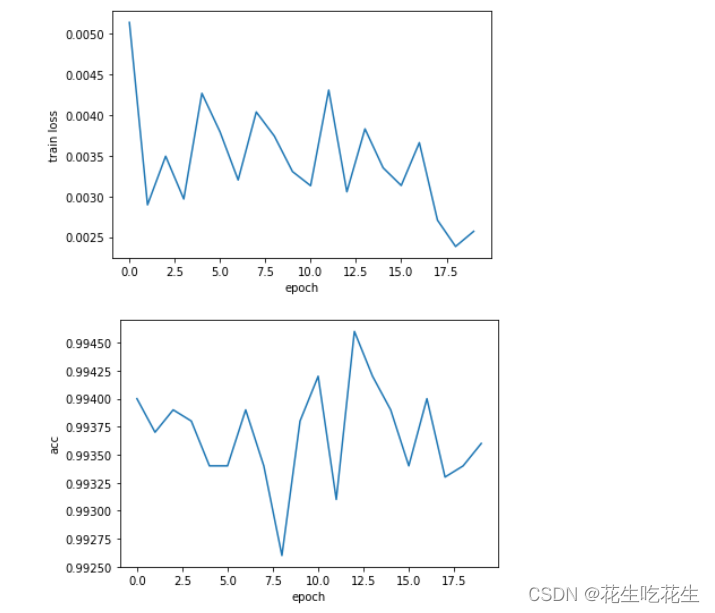

Plotting:

x = list(range(epochs))  # epoch index for the x-axis

plt.plot(x,train_loss_arr)

plt.xlabel('epoch')

plt.ylabel('train loss')

plt.show()

plt.plot(x, acc_data)

plt.xlabel('epoch')

plt.ylabel('acc')

plt.show()