This post uses Paddle as the example; the equivalent operations in torch are much the same.

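Five years ago I called this thing that keeps parameters from growing too large "regularization", and now it turns out people call it weight decay, haha.

I've fallen a bit behind the times. I even thought weight decay meant multiplying the weights by 0.99999 at every iteration so that they decay (that looked like it would decay far too fast, so I assumed that couldn't be it).

In practice, though, it is the same thing as regularization.

Both add an L1/L2 norm of the parameters to the loss and optimize the sum as the loss function.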

$$loss \mathrel{+}= 0.5 * coeff * reduce\_sum(square(x))$$

$coeff$ is the weight decay coefficient, also called the regularization coefficient.
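
To make the link between this penalty and the word "decay" concrete, here is a short derivation. It is only a sketch under assumptions: a plain SGD step with learning rate $\eta$, with $g$ standing for the gradient of the original loss with respect to $x$. Both symbols are introduced here for illustration and do not come from the Paddle docs.

```latex
\begin{aligned}
\nabla_x \Big( 0.5 \cdot coeff \cdot \textstyle\sum x^2 \Big) &= coeff \cdot x \\
x_{new} &= x - \eta \, (g + coeff \cdot x) \\
        &= (1 - \eta \cdot coeff) \, x - \eta \, g
\end{aligned}
```

Under that assumption, the penalty shrinks every weight by a factor of $1 - \eta \cdot coeff$ per step, on top of the usual gradient step. With, say, a learning rate of 0.1 and a coeff of 0.1, that factor is 0.99.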

Below is a small experiment using the L2Decay API:

    import paddle
    from paddle.regularizer import L2Decay

    paddle.seed(1107)  # fix the seed so both experiments start from the same weights
    linear = paddle.nn.Linear(3, 4, bias_attr=False)
    old_linear_weight = linear.weight.detach().clone()
    # print(old_linear_weight.mean())

    inp = paddle.rand(shape=[2, 3], dtype="float32")
    out = linear(inp)

    coeff = 0.1
    loss = paddle.mean(out)
    # loss += 0.5 * coeff * (linear.weight ** 2).sum()

    # Let the optimizer apply the L2 penalty through weight_decay
    momentum = paddle.optimizer.Momentum(
        learning_rate=0.1,
        parameters=linear.parameters(),
        weight_decay=L2Decay(coeff)
    )

    loss.backward()
    momentum.step()

    delta_weight = linear.weight - old_linear_weight
    # print(linear.weight.mean())
    # print(-delta_weight / linear.weight.grad)  # learning rate
    print(delta_weight)

    momentum.clear_grad()

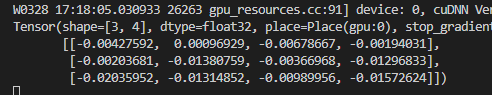

Printed result:

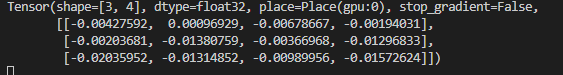

Next, instead of using the L2Decay API, do the same thing by hand with the norm of the weights:

    import paddle
    from paddle.regularizer import L2Decay

    paddle.seed(1107)  # same seed as before, so the initial weights match
    linear = paddle.nn.Linear(3, 4, bias_attr=False)
    old_linear_weight = linear.weight.detach().clone()
    # print(old_linear_weight.mean())

    inp = paddle.rand(shape=[2, 3], dtype="float32")
    out = linear(inp)

    coeff = 0.1
    loss = paddle.mean(out)
    # Add the L2 penalty to the loss by hand
    loss += 0.5 * coeff * (linear.weight ** 2).sum()

    # No weight_decay here: the penalty is already part of the loss
    momentum = paddle.optimizer.Momentum(
        learning_rate=0.1,
        parameters=linear.parameters(),
        # weight_decay=L2Decay(coeff)
    )

    loss.backward()
    momentum.step()

    delta_weight = linear.weight - old_linear_weight
    # print(linear.weight.mean())
    # print(-delta_weight / linear.weight.grad)  # learning rate
    print(delta_weight)

    momentum.clear_grad()
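
As a framework-free cross-check of the two experiments above, here is a minimal pure-Python sketch. It rests on several assumptions of mine: optimizer-side L2 decay amounts to adding `coeff * w` to the gradient, the update is a single plain SGD step with no momentum, and central finite differences stand in for autograd. The helper names (`loss_plain`, `loss_penalized`, `numeric_grad`) are hypothetical and the random values are not the ones Paddle would generate.

```python
import random


def loss_plain(w, x):
    """Mean of all entries of x @ w, with x of shape B x I and w of shape I x O."""
    n_batch, n_in, n_out = len(x), len(w), len(w[0])
    total = 0.0
    for b in range(n_batch):
        for j in range(n_out):
            total += sum(x[b][i] * w[i][j] for i in range(n_in))
    return total / (n_batch * n_out)


def loss_penalized(w, x, coeff):
    """Plain loss plus 0.5 * coeff * sum of squared weights."""
    return loss_plain(w, x) + 0.5 * coeff * sum(v * v for row in w for v in row)


def numeric_grad(f, w, eps=1e-6):
    """Central finite-difference gradient of f with respect to every entry of w."""
    grad = [[0.0] * len(w[0]) for _ in w]
    for i in range(len(w)):
        for j in range(len(w[0])):
            orig = w[i][j]
            w[i][j] = orig + eps
            up = f(w)
            w[i][j] = orig - eps
            down = f(w)
            w[i][j] = orig  # restore the entry before moving on
            grad[i][j] = (up - down) / (2 * eps)
    return grad


random.seed(1107)
lr, coeff = 0.1, 0.1
x = [[random.random() for _ in range(3)] for _ in range(2)]
w = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(3)]

# Route A (assumed optimizer-side decay): gradient of the plain loss plus coeff * w
g_plain = numeric_grad(lambda p: loss_plain(p, x), w)
delta_a = [[-lr * (g_plain[i][j] + coeff * w[i][j]) for j in range(4)]
           for i in range(3)]

# Route B (manual penalty): gradient of the penalized loss, no extra decay term
g_pen = numeric_grad(lambda p: loss_penalized(p, x, coeff), w)
delta_b = [[-lr * g_pen[i][j] for j in range(4)] for i in range(3)]

# Largest element-wise gap between the two weight updates
max_diff = max(abs(delta_a[i][j] - delta_b[i][j])
               for i in range(3) for j in range(4))
print(max_diff)
```

If the assumption about how the decay is applied holds, `max_diff` should be at the level of floating-point noise, which is the same outcome the two Paddle runs are meant to show through `delta_weight`.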

API reference:

In Paddle:

For a trainable parameter, if a regularizer is defined in its ParamAttr, the regularizer set on the optimizer is ignored for that parameter.

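    from paddle import ParamAttr
    from paddle.nn import Conv2D
    from paddle.regularizer import L2Decay

    my_conv2d = Conv2D(
        in_channels=10,
        out_channels=10,
        kernel_size=1,
        stride=1,
        padding=0,
        # <----- the regularizer set here has higher priority than the optimizer's
        weight_attr=ParamAttr(regularizer=L2Decay(coeff=0.01)),
        bias_attr=False)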