Original title: Nvidia H100: Are 550,000 GPUs Enough for This Year?

Author: Doug Eadline

August 17, 2023

The GPU Squeeze continues to place a premium on Nvidia H100 GPUs. In a recent Financial Times article, Nvidia reports that it expects to ship 550,000 of its latest H100 GPUs worldwide in 2023. The appetite for GPUs is obviously coming from the generative AI boom, but the HPC market is also competing for these accelerators. It is not clear if this number includes the throttled China-specific A800 and H800 models.

The bulk of the GPUs will be going to US technology companies, but the Financial Times notes that Saudi Arabia has purchased at least 3,000 Nvidia H100 GPUs and that the UAE has also purchased thousands of Nvidia chips. The UAE has already developed its own open-source large language model, called Falcon, using 384 A100 GPUs at the state-owned Technology Innovation Institute in Masdar City, Abu Dhabi.

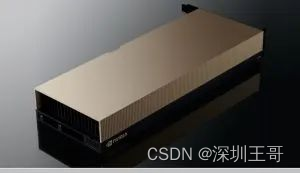

The flagship H100 GPU (14,592 CUDA cores, 80GB of HBM3 capacity, 5,120-bit memory bus), which Nvidia CEO Jensen Huang calls the first chip designed for generative AI, is priced at a hefty $30,000 on average. A Saudi university is building its own GPU-based supercomputer, called Shaheen III, which employs 700 Grace Hopper chips, each combining a Grace CPU and an H100 Tensor Core GPU. Interestingly, the GPUs are being used to create an LLM developed by Chinese researchers who can’t study or work in the US.

Meanwhile, generative AI (GAI) investments continue to fund GPU infrastructure purchases. As reported, in the first 6 months of 2023, funding to GAI start-ups is up more than 5x compared to full-year 2022 and the generative AI infrastructure category has seen over 70% of the funding since Q3’22.

Worth the Wait

The cost of an H100 varies depending on how it is packaged and, presumably, how many you are able to purchase. The current (Aug-2023) retail price for an H100 PCIe card is around $30,000, and lead times can vary as well. At that price, a back-of-the-envelope estimate for 550,000 units gives market spending of $16.5 billion for 2023, a big chunk of which will be going to Nvidia. In a recent social media post, Barron’s senior writer Tae Kim estimates that it costs Nvidia $3,320 to make an H100. Based on the retail price of an Nvidia H100 card, that is a markup of roughly 800% over the estimated manufacturing cost, or about nine times cost.
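The back-of-the-envelope arithmetic can be reproduced with a short Python sketch. It uses only the three figures quoted in the article (550,000 units, a roughly $30,000 retail price, and Tae Kim's $3,320 cost estimate). It assumes every unit sells at the PCIe retail price, which is a simplification, since the article notes that pricing varies with packaging and volume. The function names are illustrative.

```python
# Figures quoted in the article; all of them are estimates, not audited data.
UNITS_SHIPPED_2023 = 550_000   # H100 GPUs Nvidia expects to ship in 2023
RETAIL_PRICE_USD = 30_000      # approximate retail price of an H100 PCIe card
EST_UNIT_COST_USD = 3_320      # Tae Kim's estimate of Nvidia's cost per H100


def market_spend(units: int, unit_price: float) -> float:
    """Total spend if every unit sold at the same price (a simplification)."""
    return units * unit_price


def markup_percent(price: float, cost: float) -> float:
    """Profit expressed as a percentage of cost."""
    return (price - cost) / cost * 100


def price_to_cost_ratio(price: float, cost: float) -> float:
    """How many times the cost the selling price is."""
    return price / cost


if __name__ == "__main__":
    spend = market_spend(UNITS_SHIPPED_2023, RETAIL_PRICE_USD)
    print(f"Estimated 2023 market spend: ${spend / 1e9:.1f} billion")
    print(f"Markup over cost: {markup_percent(RETAIL_PRICE_USD, EST_UNIT_COST_USD):.0f}%")
    print(f"Price / cost: {price_to_cost_ratio(RETAIL_PRICE_USD, EST_UNIT_COST_USD):.1f}x")
```

Separating markup (profit divided by cost) from the price-to-cost ratio keeps the two measures distinct: with these inputs the card sells for about nine times its estimated manufacturing cost, and that estimate does not include R&D or other overhead.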

As often reported, Nvidia’s partner TSMC can barely meet the demand for GPUs. The GPUs require the more complex CoWoS (Chip on Wafer on Substrate) manufacturing process, a “2.5D” packaging technology from TSMC in which multiple active silicon dies, usually GPUs and HBM stacks, are integrated on a passive silicon interposer. Using CoWoS adds a complex, multi-step, high-precision engineering process that slows the rate of GPU production.

This situation was confirmed by Charlie Boyle, VP and GM of Nvidia’s DGX systems. Boyle states that the delays stem not from miscalculated demand or wafer yield issues at TSMC, but from the CoWoS chip-packaging technology.

Original link:

https://www.hpcwire.com/2023/08/17/nvidia-h100-are-550000-gpus-enough-for-this-year/

// Since you have read this far, let us add a few remarks of our own!

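1. Someone asked, “Are large models making money yet?” I don't know how to answer that, but Nvidia is already picking the low-hanging fruit. Its first-mover advantage comes from the CUDA software stack it began building more than a decade ago and from its many years of accumulated work on GPU architecture.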

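2. The thirty-plus accelerator-card companies in China will all be swept into crunch time in 2024. A few predictions:

- Some will race to go public; others will race to launch cards dedicated to large models.
- Small companies, and those that move slowly, will be in real danger next year; being acquired is a reasonable way out.
- Compute centers, the Xinchuang (domestic IT innovation) market, and city-level deployments will all see a fight for market share.
- 2024 will be the breakout moment for companies that build foundational software for compute!
- The Americans will choose their targets and time their strikes with precision. Meddlers and spies are everywhere!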