- Install the JDK on CentOS 8

- Deploy a pseudo-distributed Spark environment

Installing the JDK on CentOS 8

Download the Linux build of the JDK

Download link:

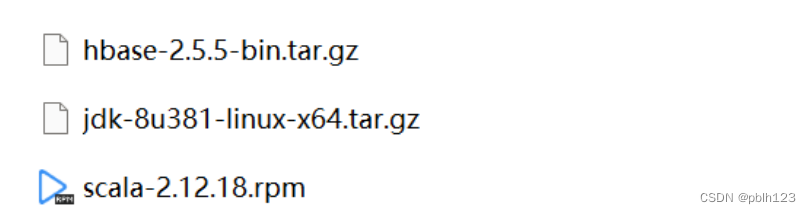

jdk-8u381-linux-x64.tar.gz

Upload this file to the CentOS 8 host

Deploy and configure the JDK (Java 8)

# Extract to the target directory

[lhang@tigerkeen Downloads]$ sudo tar -zxvf jdk-8u381-linux-x64.tar.gz -C /opt/soft_Installed/jdk/

# Configure environment variables for the current user

[lhang@tigerkeen jdk1.8.0_381]$ cat ~/.bashrc

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# User specific environment

if ! [[ "$PATH" =~ "$HOME/.local/bin:$HOME/bin:" ]]

then

PATH="$HOME/.local/bin:$HOME/bin:$PATH"

fi

export PATH

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

# User specific aliases and functions

# Java environment variables for the current user

JAVA_HOME=/opt/soft_Installed/jdk/jdk1.8.0_381

PATH=$PATH:$JAVA_HOME/bin

export PATH JAVA_HOME

# Reload so the environment variables take effect

[lhang@tigerkeen jdk1.8.0_381]$ source ~/.bashrc

# Check that Java was deployed successfully

[lhang@tigerkeen jdk1.8.0_381]$ java -version

java version "1.8.0_381"

Java(TM) SE Runtime Environment (build 1.8.0_381-b09)

Java HotSpot(TM) 64-Bit Server VM (build 25.381-b09, mixed mode)
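A successful `java -version` only shows that `java` is reachable through PATH; it does not show that JAVA_HOME is exported and points at the JDK. The following is a minimal sketch of an extra check. The helper name `is_jdk_home` is hypothetical and not part of any JDK tooling.

```shell
# Succeed only if the given directory looks like a JDK home,
# i.e. it is non-empty and contains an executable bin/java.
# is_jdk_home is a hypothetical helper name used for illustration.
is_jdk_home() {
    [ -n "$1" ] && [ -x "$1/bin/java" ]
}
```

After `source ~/.bashrc`, it could be used as `is_jdk_home "$JAVA_HOME" && echo "JAVA_HOME looks valid"`. If nothing is printed, JAVA_HOME is either unset or points somewhere other than the extracted JDK.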

Deploying a pseudo-distributed Hadoop environment

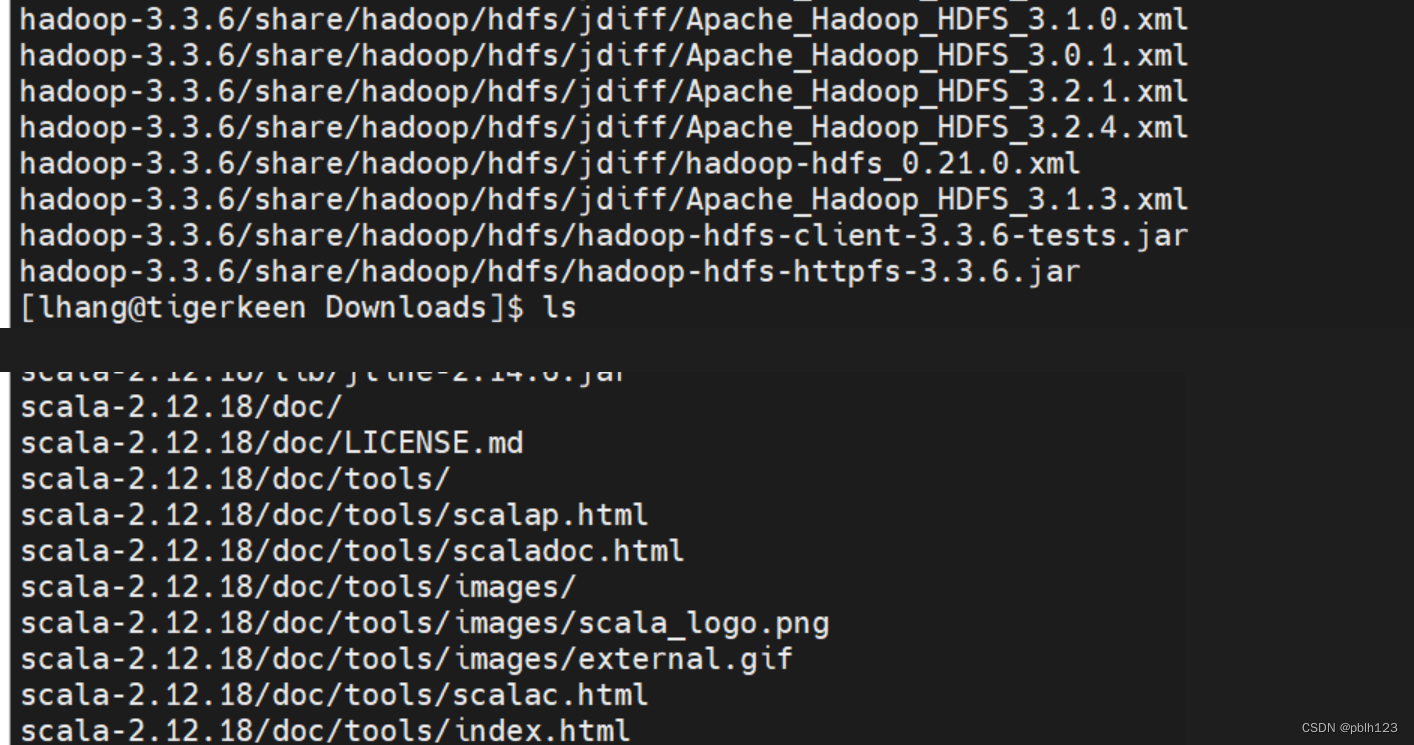

[lhang@tigerkeen Downloads]$ sudo tar -zxvf hadoop-3.3.6.tar.gz -C /opt/soft_Installed/

[lhang@tigerkeen Downloads]$ sudo tar -zxvf scala-2.12.18.tgz -C /opt/soft_Installed/

cd soft_Installed/

sudo mkdir {hadoop,scala}

sudo mv hadoop-3.3.6/ hadoop

sudo mv scala-2.12.18/ scala
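The extract, mkdir, and mv steps above can also be collapsed into one step by extracting straight into the per-product directory. A minimal sketch, assuming the archive is gzip-compressed; the helper name `extract_into` is hypothetical.

```shell
# Extract a .tar.gz/.tgz archive into a destination directory,
# creating the destination first so that `tar -C` does not fail.
# extract_into is a hypothetical helper name used for illustration.
extract_into() {
    archive=$1
    dest=$2
    mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}
```

Because /opt is normally writable only by root, the same two commands would need sudo there, for example `sudo mkdir -p /opt/soft_Installed/hadoop && sudo tar -xzf hadoop-3.3.6.tar.gz -C /opt/soft_Installed/hadoop`. This produces /opt/soft_Installed/hadoop/hadoop-3.3.6 directly, the same layout the mv commands above arrive at.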

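Detailed pseudo-distributed Hadoop configuration

This is not the focus here. If you are interested, see the reference link at the end of this post.

Deploying a pseudo-distributed Spark environment

- Upload Spark to CentOS 8

- Extract Spark to the target directory

- Configure the pseudo-distributed Spark environment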

[lhang@tigerkeen Downloads]$ sudo tar -zxvf spark-3.4.1-bin-hadoop3.gz -C /opt/soft_Installed/

[lhang@tigerkeen soft_Installed]$ sudo mv spark-3.4.1-bin-hadoop3/ spark

[lhang@tigerkeen conf]$ cp spark-env.sh.template spark-env.sh

[lhang@tigerkeen conf]$ vim spark-env.sh

[lhang@tigerkeen conf]$ tail spark-env.sh

# - OPENBLAS_NUM_THREADS=1 Disable multi-threading of OpenBLAS

# Options for beeline

# - SPARK_BEELINE_OPTS, to set config properties only for the beeline cli (e.g. "-Dx=y")

# - SPARK_BEELINE_MEMORY, Memory for beeline (e.g. 1000M, 2G) (Default: 1G)

# Pseudo-distributed Spark settings

export JAVA_HOME=/opt/soft_Installed/jdk/jdk1.8.0_381

export SPARK_MASTER_HOST=tigerkeen

export SPARK_MASTER_PORT=7077
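Editing spark-env.sh in vim works fine for a one-off setup. If the setup is scripted and may run more than once, it helps to avoid appending the same export lines repeatedly. A minimal sketch; the helper name `ensure_export` is hypothetical, and it only checks for an existing `export NAME=` line without updating a changed value.

```shell
# Append "export NAME=VALUE" to a file unless a line that exports NAME
# is already present. An existing line is left untouched even if its
# value differs. ensure_export is a hypothetical helper name.
ensure_export() {
    file=$1
    name=$2
    value=$3
    if ! grep -q "^export ${name}=" "$file" 2>/dev/null; then
        printf 'export %s=%s\n' "$name" "$value" >> "$file"
    fi
}
```

From the conf directory this might be called as `ensure_export spark-env.sh SPARK_MASTER_HOST tigerkeen` and `ensure_export spark-env.sh SPARK_MASTER_PORT 7077`.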

[lhang@tigerkeen conf]$ cp workers.template workers

[lhang@tigerkeen conf]$ vim workers

[lhang@tigerkeen conf]$ tail workers

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# A Spark Worker will be started on each of the machines listed below.

tigerkeen

[lhang@tigerkeen conf]$ ls

[lhang@tigerkeen sbin]$ ./start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/soft_Installed/spark/spark-3.4.1-bin-hadoop3/logs/spark-lhang-org.apache.spark.deploy.master.Master-1-tigerkeen.out

tigerkeen: Warning: Permanently added 'tigerkeen,fe80::20c:29ff:fee0:bc8c%ens160' (ECDSA) to the list of known hosts.

lhang@tigerkeen's password:

tigerkeen: starting org.apache.spark.deploy.worker.Worker, logging to /opt/soft_Installed/spark/spark-3.4.1-bin-hadoop3/logs/spark-lhang-org.apache.spark.deploy.worker.Worker-1-tigerkeen.out

[lhang@tigerkeen sbin]$ jps

4040 Jps

3900 Master

4012 Worker
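The jps listing above is checked by eye. For a scripted check, the output can be filtered on the process name in the second column. A minimal sketch; the helper name `has_jvm_process` is hypothetical.

```shell
# Read `jps` output on stdin and succeed only if some line has the
# given name as its second field (the first field is the PID).
# has_jvm_process is a hypothetical helper name used for illustration.
has_jvm_process() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit (found ? 0 : 1) }'
}
```

A possible use is `jps | has_jvm_process Master && jps | has_jvm_process Worker && echo "standalone cluster is up"`. Matching on the whole field avoids false positives from process names that merely contain "Master" or "Worker".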

Configuring user environment variables

vim ~/.bashrc

# Java environment variables for the current user

JAVA_HOME=/opt/soft_Installed/jdk/jdk1.8.0_381

CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin

export PATH JAVA_HOME CLASSPATH

# Scala environment variables for the current user

SCALA_HOME=/opt/soft_Installed/scala/scala-2.12.18

# Pseudo-distributed Hadoop environment

HADOOP_HOME=/opt/soft_Installed/hadoop/hadoop-3.3.6

HADOOP_CONF_DIR=/opt/soft_Installed/hadoop/hadoop-3.3.6/etc/hadoop

CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH

HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

# Pseudo-distributed Spark environment

SPARK_HOME=/opt/soft_Installed/spark/spark-3.4.1-bin-hadoop3

PATH=$PATH:$SCALA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$SPARK_HOME/bin

export PATH HADOOP_HOME HADOOP_CONF_DIR HADOOP_COMMON_LIB_NATIVE_DIR SPARK_HOME CLASSPATH
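A mistyped path in any of these variables tends to surface only later as a "command not found" error. A minimal sketch of a sanity check that can be run after reloading ~/.bashrc; the helper name `report_missing_dirs` is hypothetical.

```shell
# Print every argument that is not an existing directory and
# return non-zero if at least one was missing.
# report_missing_dirs is a hypothetical helper name.
report_missing_dirs() {
    status=0
    for dir in "$@"; do
        if [ ! -d "$dir" ]; then
            echo "missing: $dir"
            status=1
        fi
    done
    return $status
}
```

For the variables defined above, a call might look like `report_missing_dirs "$JAVA_HOME" "$SCALA_HOME" "$HADOOP_HOME" "$HADOOP_CONF_DIR" "$SPARK_HOME"`. An unset variable expands to an empty string and is reported as missing too.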

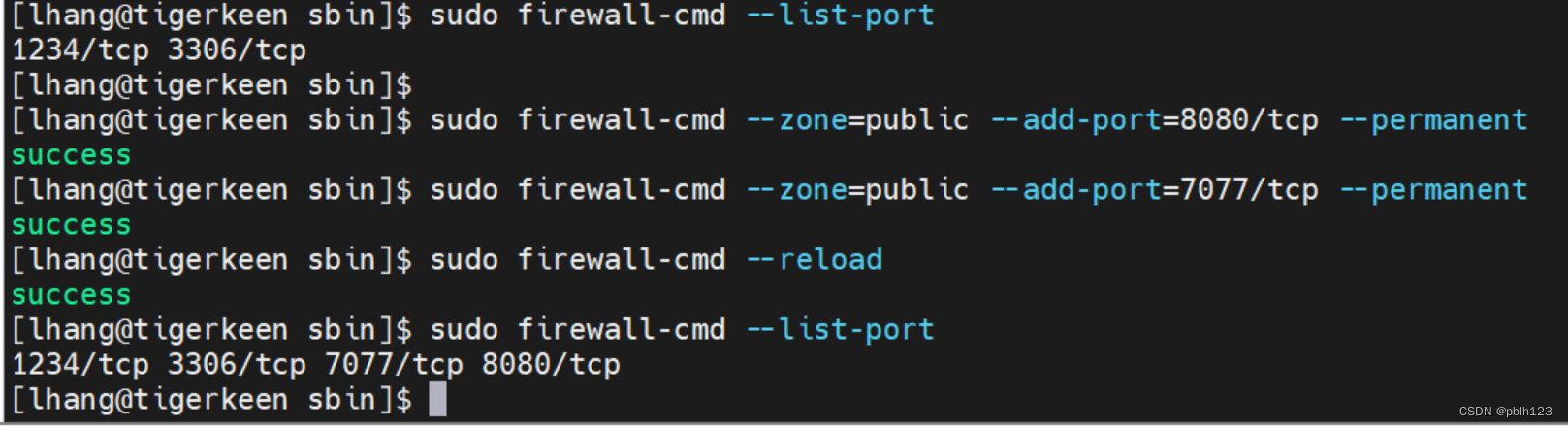

Opening specific ports in the CentOS 8 firewall

Open the required ports in the CentOS 8 firewall:

sudo firewall-cmd --zone=public --add-port=1234/tcp --permanent

sudo firewall-cmd --reload

sudo firewall-cmd --list-port

sudo firewall-cmd --zone=public --add-port=8080/tcp --permanent

sudo firewall-cmd --zone=public --add-port=7077/tcp --permanent

sudo firewall-cmd --reload

sudo firewall-cmd --list-port
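When several ports have to be opened, the repeated commands can be generated from a list. The sketch below only prints the commands so they can be reviewed before anything changes; it does not run firewall-cmd itself. The helper name `print_open_port_commands` is hypothetical, and the zone is assumed to be public as in the commands above.

```shell
# Print one firewall-cmd invocation per TCP port, followed by a single
# reload. Nothing is executed; the output is meant to be reviewed first.
# print_open_port_commands is a hypothetical helper name.
print_open_port_commands() {
    for port in "$@"; do
        echo "sudo firewall-cmd --zone=public --add-port=${port}/tcp --permanent"
    done
    echo "sudo firewall-cmd --reload"
}
```

For the Spark master web UI (8080) and the master port (7077), `print_open_port_commands 8080 7077` prints the two add-port commands and one reload. Once the output looks right it can be piped to `sh`.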

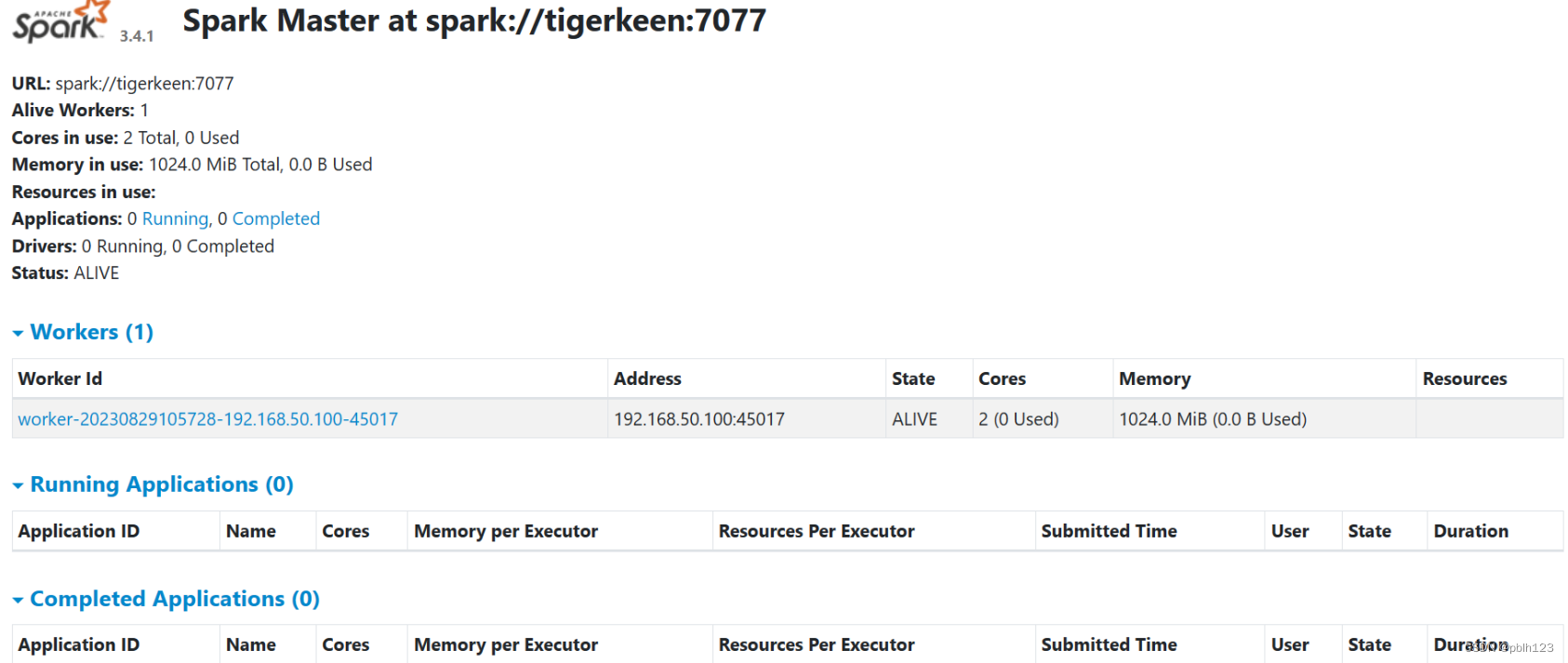

Spark Master at spark://tigerkeen:7077

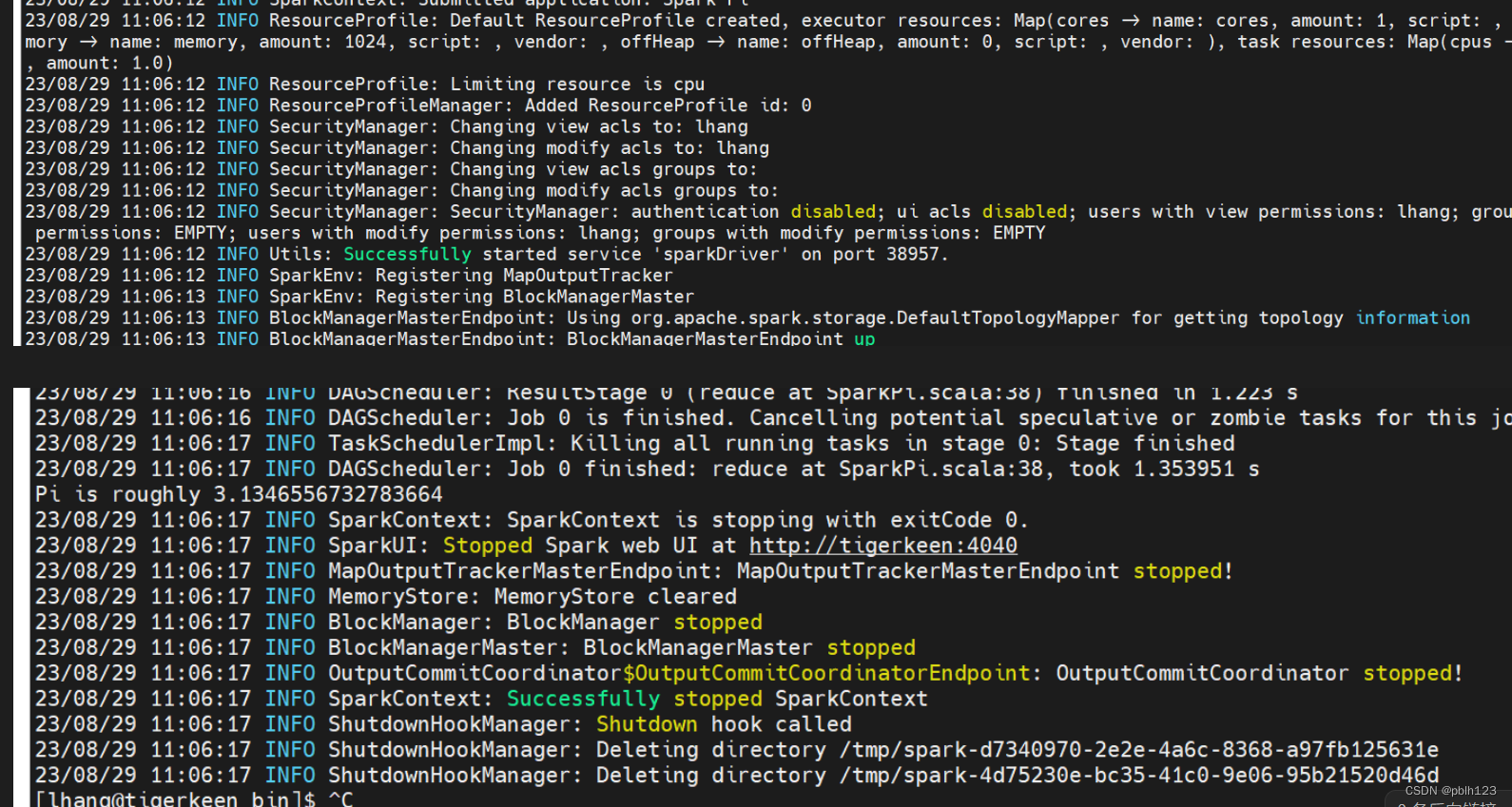

Testing with spark-submit: the Pi calculation example

[lhang@tigerkeen bin]$ ./spark-submit --class org.apache.spark.examples.SparkPi --master local[*] /opt/soft_Installed/spark/spark-3.4.1-bin-hadoop3/examples/jars/spark-examples_2.12-3.4.1.jar
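Note that `--master local[*]` runs the job inside a single local JVM and does not go through the standalone Master and Worker started earlier; passing `--master spark://tigerkeen:7077` instead would exercise that cluster. In either case the SparkPi example prints one result line beginning with "Pi is roughly", which is easy to lose among the log messages. A minimal sketch for picking it out; the helper name `extract_pi_line` is hypothetical.

```shell
# Read spark-submit output on stdin and keep only the SparkPi result
# line, which starts with "Pi is roughly".
# extract_pi_line is a hypothetical helper name used for illustration.
extract_pi_line() {
    grep '^Pi is roughly'
}
```

A possible invocation against the standalone master, assuming the default logging configuration in which log messages go to stderr, is `./spark-submit --class org.apache.spark.examples.SparkPi --master spark://tigerkeen:7077 /opt/soft_Installed/spark/spark-3.4.1-bin-hadoop3/examples/jars/spark-examples_2.12-3.4.1.jar 2>/dev/null | extract_pi_line`.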

Reference link

https://blog.csdn.net/pblh123/article/details/126721139