一般是用C++控制Kinect,用Python还是比较少的,但还是存在用Python控制Kinect相机的需求,毕竟Python更简单些,能进一步降低开发门槛。

开发环境

Windows系统

Python 3.8或更低版本,Python版本不能高于3.8

Open3D 0.13.0

Open3D – A Modern Library for 3D Data Processing

Azure kinect SDK 1.4.1

Azure-Kinect-Sensor-SDK/usage.md at develop · microsoft/Azure-Kinect-Sensor-SDK · GitHub

连接相机

创建记录器对象,需要两个参数——传感器和设备编号;创建传感器对象,需要一个参数——配置信息。

import open3d

config = o3d.io.AzureKinectSensorConfig() # 创建配置对象

sensor = o3d.io.AzureKinectSensor(config) # 创建传感器对象

device = 0 # 设备编号

recorder = o3d.io.AzureKinectRecorder(config, device) # 创建记录器对象通过open3d.io.AzureKinectSensorConfig对象来配置相机参数,上面的示例使用默认参数,具体配置相机参数的方法将在下文介绍;设备编号一般为0,若有多台Kinect与PC相连,则根据需要设定不同的编号。

修改相机配置

读取配置文件,生成配置对象。

import open3d

filename = "./myconfig.json" # 配置文件

myconfig = o3d.io.read_azure_kinect_sensor_config(filename) # 生成配置对象

device = 0 # 设备编号

recorder = o3d.io.AzureKinectRecorder(myconfig, device) # 生成记录器对象通过open3d.io.read_azure_kinect_sensor_config()方法读取配置文件,该方法会返回一个open3d.io.AzureKinectSensorConfig对象。

配置文件是json格式的文件,下面给出默认是配置文件(本文所有示例的相机配置都将采用默认配置):

{

"camera_fps" : "K4A_FRAMES_PER_SECOND_30",

"color_format" : "K4A_IMAGE_FORMAT_COLOR_MJPG",

"color_resolution" : "K4A_COLOR_RESOLUTION_720P",

"depth_delay_off_color_usec" : "0",

"depth_mode" : "K4A_DEPTH_MODE_WFOV_2X2BINNED",

"disable_streaming_indicator" : "false",

"subordinate_delay_off_master_usec" : "0",

"synchronized_images_only" : "false",

"wired_sync_mode" : "K4A_WIRED_SYNC_MODE_STANDALONE"

}具体这些配置是什么含义,可以填什么参数,请参考微软Azure Kinect Sensor SDK文档:Azure Kinect Sensor SDK: Enumerations (microsoft.github.io)

录制视频

使用记录器对象录制视频,需要先进行传感器初始化,然后指定输出路径,再开启记录器。调用记录器的记录帧方法记录下当前的一帧,反复调用该方法则连续记录,形成视频。在视频录制结束后,要关闭记录器。

import open3d

config = o3d.io.AzureKinectSensorConfig() # 创建默认的配置对象

sensor = o3d.io.AzureKinectSensor(config) # 创建传感器对象

device = 0 # 设备编号

recorder = o3d.io.AzureKinectRecorder(config, device) # 创建记录器对象

recorder.init_sensor() # 初始化传感器

recorder.open_record(vedio_path) # 开启记录器

fps = 300

for i in range(fps): # 循环调用记录器的记录帧方法300次

rgbd = recorder.record_frame(enable_record = True,

enable_align_depth_to_color = True)

recorder.close_record() # 关闭记录器上面的示例使用默认的配置,相机的录制帧率是30fps,那么记录300帧就是10秒的视频。

open3d.io.AzureKinectRecorder对象的record_frame()方法有两个参数——enable_record 控制是否将当前帧写入视频中,一般该参数为True; enable_align_depth_to_color控制是否将深度信息与色彩信息对齐,这将在生成点云的操作中起到作用。同时,record_frame()方法的返回值是open3d.geometry.RGBDImage对象,record_frame()方法的返回值将在生成点云的操作中发挥作用。

读取视频

录制好的视频可以由阅读器读取,然后对其每一帧进行处理。

import open3d

reader = open3d.io.AzureKinectMKVReader() # 创建阅读器

path = "./video.mkv"

reader.open(path) # 打开视频文件

while not reader.is_eof(): # 判断视频是否全部读完

rgbd = reader.next_frame() # 获取下一帧

pass # 对这一帧进行处理open3d.io.AzureKinectMKVReader对象读的next_frame()方法的返回值是open3d.geometry.RGBDImage对象,也就是说用open3d.io.AzureKinectMKVReader读取视频的每一帧实际是在读取视频中的每一个open3d.geometry.RGBDImage对象,next_frame()方法的返回值将在生成点云的操作中发挥作用。

生成点云

由记录器记录帧,每记录下一帧就会产生一个open3d.geometry.RGBDImage对象;用阅读器读取视频,每读取一帧也会产生一个open3d.geometry.RGBDImage对象。利用open3d.geometry.RGBDImage对象可以生成点云。

import open3d

ply = open3d.geometry.PointCloud.create_from_rgbd_image(rgbd,

open3d.camera.PinholeCameraIntrinsic(1280, 720,

601.1693115234375, 600.85931396484375,

637.83624267578125, 363.8018798828125))

plypath = "./pointcloud.ply"

open3d.io.write_point_cloud(plypath, ply)用open3d.geometry.PointCloud.create_from_rgbd_image()方法可以从open3d.geometry.RGBDImage对象生成open3d.geometry.PointCloud对象,也就是点云对象。该方法需要四个参数——open3d.geometry.PointCloud对象、open3d.camera.PinholeCameraIntrinsic对象、extrinsic和project_valid_depth_only,前两项参数即RGBDImage对象和相机内参对象,后两项参数有默认值,一般不需要修改。相机内参将在后部分介绍,现在先暂时忽略它。

open3d.geometry.PointCloud.create_from_rgbd_image()方法的返回值是open3d.geometry.PointCloud对象,即点云对象,用open3d.io.write_point_cloud()方法可以在计算机磁盘中生成点云文件,将点云对象保存在其中。open3d.io.write_point_cloud()方法有五个参数,但这里只介绍两个参数——输出文件和open3d.geometry.PointCloud对象,open3d.io.write_point_cloud()方法会自动根据输出文件的扩展名将点云对象保存成相应的格式,open3d.io.write_point_cloud()方法共支持六种点云格式—— xyz、xyzn、xyzrgb、pts、ply和pcd。

相机内参

这个需要自己测定,每台相机的内参都不同,不能直接照搬别人的参数。

这一步需要写C++代码,并且还需要注意一些细节问题;当然了,用VS搭建Azure Kinect开发环境的步骤这里就不再介绍了。

#include <iostream>

#include <fstream>

#include <string>

#include <iomanip>

#include <vector>

#include <k4a/k4a.h>

using namespace std;

static string get_serial(k4a_device_t device)

{

size_t serial_number_length = 0;

if (K4A_BUFFER_RESULT_TOO_SMALL != k4a_device_get_serialnum(device, NULL, &serial_number_length))

{

cout << "Failed to get serial number length" << endl;

k4a_device_close(device);

exit(-1);

}

char* serial_number = new (std::nothrow) char[serial_number_length];

if (serial_number == NULL)

{

cout << "Failed to allocate memory for serial number (" << serial_number_length << " bytes)" << endl;

k4a_device_close(device);

exit(-1);

}

if (K4A_BUFFER_RESULT_SUCCEEDED != k4a_device_get_serialnum(device, serial_number, &serial_number_length))

{

cout << "Failed to get serial number" << endl;

delete[] serial_number;

serial_number = NULL;

k4a_device_close(device);

exit(-1);

}

string s(serial_number);

delete[] serial_number;

serial_number = NULL;

return s;

}

static void print_calibration()

{

uint32_t device_count = k4a_device_get_installed_count();

cout << "Found " << device_count << " connected devices:" << endl;

cout << fixed << setprecision(6);

for (uint8_t deviceIndex = 0; deviceIndex < device_count; deviceIndex++)

{

k4a_device_t device = NULL;

if (K4A_RESULT_SUCCEEDED != k4a_device_open(deviceIndex, &device))

{

cout << deviceIndex << ": Failed to open device" << endl;

exit(-1);

}

k4a_calibration_t calibration;

k4a_device_configuration_t deviceConfig = K4A_DEVICE_CONFIG_INIT_DISABLE_ALL;

deviceConfig.color_format = K4A_IMAGE_FORMAT_COLOR_MJPG;

deviceConfig.color_resolution = K4A_COLOR_RESOLUTION_720P;

deviceConfig.depth_mode = K4A_DEPTH_MODE_WFOV_2X2BINNED;

deviceConfig.camera_fps = K4A_FRAMES_PER_SECOND_30;

deviceConfig.wired_sync_mode = K4A_WIRED_SYNC_MODE_STANDALONE;

deviceConfig.synchronized_images_only = true;

// get calibration

if (K4A_RESULT_SUCCEEDED !=

k4a_device_get_calibration(device, deviceConfig.depth_mode, deviceConfig.color_resolution, &calibration))

{

cout << "Failed to get calibration" << endl;

exit(-1);

}

// auto calib = calibration.depth_camera_calibration; // 校准深度相机

auto calib = calibration.color_camera_calibration; // 校准彩色相机

cout << "\n===== Device " << (int)deviceIndex << ": " << get_serial(device) << " =====\n";

cout << "resolution width: " << calib.resolution_width << endl;

cout << "resolution height: " << calib.resolution_height << endl;

cout << "principal point x: " << calib.intrinsics.parameters.param.cx << endl;

cout << "principal point y: " << calib.intrinsics.parameters.param.cy << endl;

cout << "focal length x: " << calib.intrinsics.parameters.param.fx << endl;

cout << "focal length y: " << calib.intrinsics.parameters.param.fy << endl;

cout << "radial distortion coefficients:" << endl;

cout << "k1: " << calib.intrinsics.parameters.param.k1 << endl;

cout << "k2: " << calib.intrinsics.parameters.param.k2 << endl;

cout << "k3: " << calib.intrinsics.parameters.param.k3 << endl;

cout << "k4: " << calib.intrinsics.parameters.param.k4 << endl;

cout << "k5: " << calib.intrinsics.parameters.param.k5 << endl;

cout << "k6: " << calib.intrinsics.parameters.param.k6 << endl;

cout << "center of distortion in Z=1 plane, x: " << calib.intrinsics.parameters.param.codx << endl;

cout << "center of distortion in Z=1 plane, y: " << calib.intrinsics.parameters.param.cody << endl;

cout << "tangential distortion coefficient x: " << calib.intrinsics.parameters.param.p1 << endl;

cout << "tangential distortion coefficient y: " << calib.intrinsics.parameters.param.p2 << endl;

cout << "metric radius: " << calib.intrinsics.parameters.param.metric_radius << endl;

k4a_device_close(device);

}

}

static void calibration_blob(uint8_t deviceIndex = 0, string filename = "calibration.json")

{

k4a_device_t device = NULL;

if (K4A_RESULT_SUCCEEDED != k4a_device_open(deviceIndex, &device))

{

cout << deviceIndex << ": Failed to open device" << endl;

exit(-1);

}

size_t calibration_size = 0;

k4a_buffer_result_t buffer_result = k4a_device_get_raw_calibration(device, NULL, &calibration_size);

if (buffer_result == K4A_BUFFER_RESULT_TOO_SMALL)

{

vector<uint8_t> calibration_buffer = vector<uint8_t>(calibration_size);

buffer_result = k4a_device_get_raw_calibration(device, calibration_buffer.data(), &calibration_size);

if (buffer_result == K4A_BUFFER_RESULT_SUCCEEDED)

{

ofstream file(filename, ofstream::binary);

file.write(reinterpret_cast<const char*>(&calibration_buffer[0]), (long)calibration_size);

file.close();

cout << "Calibration blob for device " << (int)deviceIndex << " (serial no. " << get_serial(device)

<< ") is saved to " << filename << endl;

}

else

{

cout << "Failed to get calibration blob" << endl;

exit(-1);

}

}

else

{

cout << "Failed to get calibration blob size" << endl;

exit(-1);

}

}

static void print_usage()

{

cout << "Usage: calibration_info [device_id] [output_file]" << endl;

cout << "Using calibration_info.exe without any command line arguments will display" << endl

<< "calibration info of all connected devices in stdout. If a device_id is given" << endl

<< "(0 for default device), the calibration.json file of that device will be" << endl

<< "saved to the current directory." << endl;

}

int main(int argc, char** argv)

{

if (argc == 1)

{

print_calibration();

}

else if (argc == 2)

{

calibration_blob((uint8_t)atoi(argv[1]), "calibration.json");

}

else if (argc == 3)

{

calibration_blob((uint8_t)atoi(argv[1]), argv[2]);

}

else

{

print_usage();

}

cout << "按任意键退出..." << endl;

cin;

return 0;

}上面给出了完整代码,注意这两行,它将决定校准深度相机还是彩色相机。

// auto calib = calibration.depth_camera_calibration; // 校准深度相机

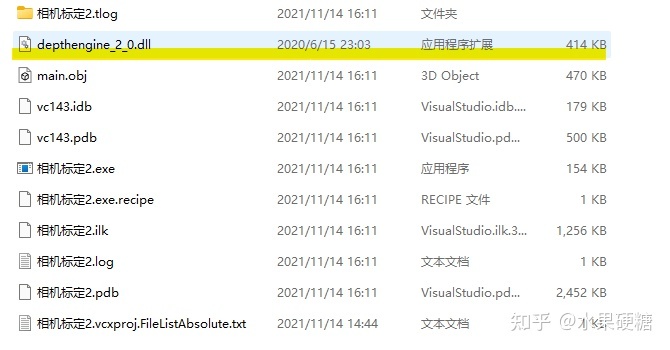

auto calib = calibration.color_camera_calibration; // 校准彩色相机在Azure Kinect SDK v1.4.1\sdk\windows-desktop目录下有两个文件夹,分别是amd64和x86,如果你的系统是64位就复制amd64\release\bin下的depthengine_2_0.dll文件至VS的Debug目录中; 如果你的系统是32位就复制x86\release\bin下的depthengine_2_0.dll文件至VS的Debug目录中。

还要注意将depthengine_2_0.dll文件与VS生成的exe文件放在同一目录下,就像下图那样: