
1 Genetic Algorithm

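The genetic algorithm (GA) is an optimization algorithm that imitates natural evolution. It searches for an optimal or near-optimal solution by simulating biological inheritance, crossover and mutation.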

The basic steps of a genetic algorithm are:

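1. Initialize the population: randomly generate a set of initial solutions.

2. Evaluate fitness: compute the fitness of each individual using the problem-specific evaluation function.

3. Selection: based on fitness, choose some individuals as parents for the next generation.

4. Crossover: recombine the selected parents to produce new individuals.

5. Mutation: randomly flip genes of the new individuals to introduce new genetic material.

6. Replacement: replace the old individuals with the new ones to form the next population.

7. Termination check: if the termination condition (for example, a maximum number of generations) is met, stop; otherwise return to step 2 so that the new population is evaluated before the next selection.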
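The seven steps above can be sketched as a small, self-contained Python program. This is an illustration under stated assumptions, not a port of GA.m: the toy fitness `onemax` (count of 1-bits) and all parameter values are hypothetical, selection is roulette-wheel (GA.m instead uses a deterministic proportional-copy scheme), the sketch maximises fitness directly (GA.m minimises a cost J and uses 1/J as fitness), and termination is a fixed number of generations, as in GA.m.

```python
import random


def onemax(bits):
    """Hypothetical toy fitness: the number of 1-bits (to be maximised)."""
    return sum(bits)


def run_ga(fitness, n_bits=20, size=30, generations=40, pc=0.6, pm=0.01, seed=0):
    """Minimal binary GA; returns (best chromosome, its fitness, best fitness per generation)."""
    rng = random.Random(seed)
    # Step 1: random initial population of binary chromosomes
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(size)]
    history = []
    for _ in range(generations):
        # Step 2: evaluate every individual
        scores = [fitness(ind) for ind in pop]
        best_idx = max(range(size), key=lambda i: scores[i])
        best = pop[best_idx][:]
        history.append(scores[best_idx])
        # Step 3: roulette-wheel selection (tiny offset keeps all weights positive;
        # assumes fitness values are non-negative)
        weights = [s + 1e-9 for s in scores]
        parents = [ind[:] for ind in rng.choices(pop, weights=weights, k=size)]
        # Step 4: single-point crossover on consecutive pairs
        for i in range(0, size - 1, 2):
            if rng.random() < pc:
                cut = rng.randrange(1, n_bits)
                parents[i][cut:], parents[i + 1][cut:] = parents[i + 1][cut:], parents[i][cut:]
        # Step 5: bit-flip mutation
        for ind in parents:
            for j in range(n_bits):
                if rng.random() < pm:
                    ind[j] = 1 - ind[j]
        # Step 6: replacement with elitism (last slot keeps the unmutated best)
        parents[-1] = best
        pop = parents
        # Step 7: termination is simply a fixed number of generations here
    scores = [fitness(ind) for ind in pop]
    best_idx = max(range(size), key=lambda i: scores[i])
    return pop[best_idx], scores[best_idx], history
```

Usage: `best, score, history = run_ga(onemax)`. Because the best individual is copied unchanged into the last slot (the same elitism idea GA.m uses with `TempE(Size,:) = BestS`), the best fitness recorded in `history` never decreases from one generation to the next.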

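The strengths of GAs are that they can find good solutions in large search spaces and apply to many kinds of optimization problems. However, because they are driven by randomness, they may converge prematurely to a local optimum, and their convergence is relatively slow. For complex problems the parameters (population size, crossover and mutation probabilities, number of generations) should therefore be chosen carefully, and the algorithm is usually run several times to obtain better results.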

2 RBF Neural Network

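An RBF (radial basis function) neural network is a three-layer network consisting of an input layer, a hidden layer and an output layer. The mapping from the input space to the hidden-layer space is nonlinear, while the mapping from the hidden layer to the output layer is linear. Its structure is shown in the diagram below: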

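The basic idea of the RBF network is to use radial basis functions as the "basis" of the hidden units, which span the hidden-layer space. The input vector is therefore mapped to the hidden space directly, without weighted connections, and once the RBF centres (and widths) are fixed, this mapping is fixed as well. The mapping from the hidden layer to the output is linear: the network output is a weighted sum of the hidden-unit outputs, and these weights are the adjustable parameters of the network. The hidden layer maps the input from a low-dimensional space (dimension p) to a higher-dimensional space (dimension h, the number of hidden nodes), so that a problem that is not linearly separable in the low-dimensional space can become linearly separable in the high-dimensional one. This is essentially the idea behind kernel functions.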
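For the Gaussian basis used in RBF.m and Test.m below, the two layers compute the following, where c_j is the centre of hidden node j (column j of the matrix c in the code), b_j its width and w_j its output weight; in the code there are m = 3 hidden nodes and the input is x = [u(k), y(k)]^T:

$$h_j = \exp\left(-\frac{\lVert \mathbf{x} - \mathbf{c}_j \rVert^2}{2 b_j^2}\right), \qquad j = 1, \dots, m$$

$$y_m = \sum_{j=1}^{m} w_j h_j = \mathbf{w}^\top \mathbf{h}$$

The first equation is nonlinear in x, c_j and b_j; the second is linear in w.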

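As a result, the input-to-output mapping of the network is nonlinear, while the network output is linear in the adjustable weights. If the centres and widths are fixed, the output weights can be obtained directly by solving a system of linear equations (in the least-squares sense), which greatly speeds up learning and avoids the local-minimum problem. In the code below the centres and widths are also trained by gradient descent, so this property holds only for the output weights.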
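A minimal sketch of this linear step, assuming the centres and widths are already fixed: the output weights are the least-squares solution of H w = y, obtained here from the normal equations H^T H w = H^T y, where row k of H holds the hidden outputs for sample k. The helper names (`gaussian_hidden`, `solve_linear`, `fit_output_weights`) and the optional `ridge` term are hypothetical, and pure Python is used only to keep the example dependency-free; RBF.m itself trains w, b and c by gradient descent instead.

```python
import math


def gaussian_hidden(x, centres, widths):
    """Hidden-layer outputs h_j = exp(-||x - c_j||^2 / (2 b_j^2))."""
    return [math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2 * b * b))
            for c, b in zip(centres, widths)]


def solve_linear(A, rhs):
    """Gaussian elimination with partial pivoting for a small dense system A x = rhs."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= factor * M[col][k]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        sol[r] = (M[r][n] - sum(M[r][k] * sol[k] for k in range(r + 1, n))) / M[r][r]
    return sol


def fit_output_weights(samples, targets, centres, widths, ridge=0.0):
    """Least-squares output weights: solve (H^T H + ridge*I) w = H^T y."""
    H = [gaussian_hidden(x, centres, widths) for x in samples]
    m = len(centres)
    HtH = [[sum(h[i] * h[j] for h in H) + (ridge if i == j else 0.0) for j in range(m)]
           for i in range(m)]
    Hty = [sum(h[i] * y for h, y in zip(H, targets)) for i in range(m)]
    return solve_linear(HtH, Hty)
```

Calling `fit_output_weights(samples, targets, centres, widths)` on data produced by a network with known weights recovers those weights up to rounding error, in one linear solve and without iterations. For larger problems a QR-based least-squares routine is preferable to forming H^T H explicitly, because the normal equations square the condition number.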

3 MATLAB Implementation

GA.m

clear all

close all

G = 15;

Size = 30;

CodeL = 10;

% Parameter bounds: 1-3 are the widths b, 4-9 the centres c, 10-12 the output weights w

for i = 1:3

MinX(i) = 0.1;

MaxX(i) = 3;

end

for i = 4:1:9

MinX(i) = -3;

MaxX(i) = 3;

end

for i = 10:1:12

MinX(i) = -1;

MaxX(i) = 1;

end

E = round(rand(Size,12*CodeL)); % random initial population: Size chromosomes of 12 genes x CodeL bits

BsJ = 0;

for kg = 1:1:G

time(kg) = kg;

for s = 1:1:Size

m = E(s,:);

% Decode each CodeL-bit gene (least-significant bit first) into a real value in [MinX(j), MaxX(j)]

for j = 1:1:12

y(j) = 0;

mj = m((j-1)*CodeL + 1:1:j*CodeL);

for i = 1:1:CodeL

y(j) = y(j) + mj(i)*2^(i-1);

end

f(s,j) = (MaxX(j) - MinX(j))*y(j)/1023 + MinX(j);

end

% ************Step 1:Evaluate BestJ *******************

p = f(s,:);

[p,BsJ] = RBF(p,BsJ);

BsJi(s) = BsJ;

end

[OderJi,IndexJi] = sort(BsJi);

BestJ(kg) = OderJi(1);

BJ = BestJ(kg);

Ji = BsJi+1e-10;

fi = 1./Ji;

[Oderfi,Indexfi] = sort(fi);

Bestfi = Oderfi(Size);

BestS = E(Indexfi(Size),:);

% ***************Step 2:Selection and Reproduction*********

fi_sum = sum(fi);

fi_Size = (Oderfi/fi_sum)*Size;

fi_S = floor(fi_Size);

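kk = 1;

for i = 1:1:Size

for j = 1:1:fi_S(i)

TempE(kk,:) = E(Indexfi(i),:);

kk = kk + 1;

end

end

% floor() can leave fewer than Size copies; fill the remaining rows with the best individual

TempE(kk:Size,:) = repmat(BestS,Size-kk+1,1);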

% ****************Step 3:Crossover Operation*******************

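pc = 0.60;   % crossover probability

for i = 1:2:(Size - 1)

if pc > rand

% Single-point crossover over the whole chromosome: swap the tails of two selected parents

n = ceil(12*CodeL*rand);

tail = TempE(i,n:end);

TempE(i,n:end) = TempE(i+1,n:end);

TempE(i+1,n:end) = tail;

end

end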

TempE(Size,:) = BestS;

E = TempE;

%*****************Step 4:Mutation Operation*********************

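% Row-dependent mutation probability; it must be indexed as pm(i), because "if pm>temp" on the whole vector is never all-true (pm(Size) = 0)

pm = 0.001 - [1:1:Size]*(0.001)/Size;

for i = 1:1:Size

for j = 1:1:12*CodeL

temp = rand;

if pm(i)>temp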

if TempE(i,j) == 0

TempE(i,j) = 1;

else

TempE(i,j) = 0;

end

end

end

end

% Elitism: make sure TempE(Size,:) holds the best individual

TempE(Size,:) = BestS;

E = TempE;

%********************************************************************

end

Bestfi

BestS

fi

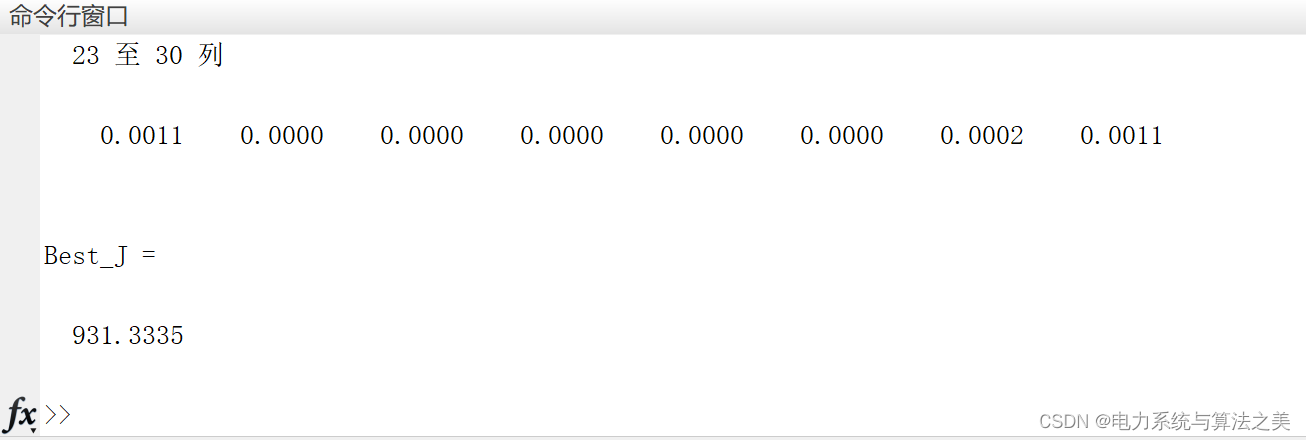

Best_J = BestJ(G)

figure(1);

plot(time,BestJ);

xlabel('Times');ylabel('BestJ');

p = f(Indexfi(Size),:);   % decoded parameters of the best individual in the last generation

save pfile p;
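The decoding loop in GA.m (bit i weighted by 2^(i-1), least-significant bit first, then scaled by 1023 = 2^CodeL - 1) can be written as the following Python sketch. `decode_gene`, `decode_chromosome` and `BOUNDS` are illustrative names; the bounds are copied from MinX/MaxX in GA.m.

```python
def decode_gene(bits, lo, hi):
    """Map a binary gene to a real value in [lo, hi].

    bits[i] carries weight 2**i (least-significant bit first), as in GA.m.
    """
    n = len(bits)
    value = sum(bit << i for i, bit in enumerate(bits))
    return lo + (hi - lo) * value / (2 ** n - 1)


def decode_chromosome(chrom, bounds, code_len=10):
    """Split a chromosome into genes of code_len bits and decode each one."""
    return [decode_gene(chrom[k * code_len:(k + 1) * code_len], lo, hi)
            for k, (lo, hi) in enumerate(bounds)]


# Bounds used in GA.m: 3 widths b, 6 centres c, 3 output weights w
BOUNDS = [(0.1, 3.0)] * 3 + [(-3.0, 3.0)] * 6 + [(-1.0, 1.0)] * 3
```

An all-zero gene decodes to the lower bound and an all-one gene to the upper bound. With CodeL = 10 the resolution of each parameter is (MaxX - MinX)/1023, for example about 0.0059 for the centres in [-3, 3].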

RBF.m

function [p,BsJ] = RBF(p,BsJ)

ts = 0.001;     % sampling time (s)

alfa = 0.05;    % momentum factor

xite = 0.85;    % learning rate

x = [0,0]';     % network input [u(k); y(k)]

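% Unpack the 12 GA parameters: widths b (3x1), centres c (2x3, one column per hidden node), weights w (3x1)

b = [p(1);p(2);p(3)];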

c = [p(4) p(5) p(6);

p(7) p(8) p(9)];

w = [p(10);p(11);p(12)];

w_1 = w;w_2 = w_1;

c_1 = c;c_2 = c_1;

b_1 = b;b_2 = b_1;

y_1 = 0;

for k = 1:500

timef(k) = k*ts;

u(k) = sin(5*2*pi*k*ts);   % 5 Hz sinusoidal excitation

y(k) = u(k)^3 + y_1/(1 + y_1^2);   % nonlinear plant to be identified

x(1) = u(k);

x(2) = y(k);

for j = 1:1:3

h(j) = exp(-norm(x - c(:,j))^2/(2*b(j)*b(j)));

end

ym(k) = w_1'*h';

e(k) = y(k) - ym(k);

d_w = 0*w;d_b = 0*b;d_c = 0*c;

for j = 1:1:3

d_w(j) = xite*e(k)*h(j);

d_b(j) = xite*e(k)*w(j)*h(j)*(b(j)^-3)*norm(x-c(:,j))^2;

for i = 1:1:2

d_c(i,j) = xite*e(k)*w(j)*h(j)*(x(i)-c(i,j))*(b(j)^-2);

end

end

w = w_1 + d_w + alfa*(w_1 - w_2);

b = b_1 + d_b + alfa*(b_1 - b_2);

c = c_1 + d_c + alfa*(c_1 - c_2);

y_1 = y(k);

w_2 = w_1;

w_1 = w;

c_2 = c_1;

c_1 = c;

b_2 = b_1;

b_1 = b;

end

% Cost returned to the GA: 100 times the sum of absolute identification errors

B = 0;

for i = 1:500

Ji(i) = abs(e(i));

B = B + 100*Ji(i);

end

BsJ = B;
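The increments d_w, d_b and d_c in RBF.m are the learning rate xite times the negative gradient of the instantaneous cost E = e(k)^2/2 with respect to w, b and c. The Python sketch below reproduces these formulas for a single sample so that they can be checked against finite differences. The function names and the layout of `c` (a list of per-node centre vectors, i.e. the transpose of the 2x3 MATLAB matrix) are assumptions made for illustration.

```python
import math


def forward(x, w, b, c):
    """Gaussian RBF forward pass; c[j] is the centre vector of hidden node j."""
    h = [math.exp(-sum((xi - cij) ** 2 for xi, cij in zip(x, cj)) / (2 * bj * bj))
         for cj, bj in zip(c, b)]
    ym = sum(wj * hj for wj, hj in zip(w, h))
    return h, ym


def loss(x, y, w, b, c):
    """Instantaneous squared-error cost E = e^2 / 2 with e = y - ym."""
    _, ym = forward(x, w, b, c)
    return 0.5 * (y - ym) ** 2


def updates(x, y, w, b, c, xite=0.85):
    """Increments d_w, d_b, d_c with the same formulas as RBF.m (xite * -dE/dparam)."""
    h, ym = forward(x, w, b, c)
    e = y - ym
    d_w = [xite * e * hj for hj in h]
    d_b = [xite * e * wj * hj * sum((xi - cij) ** 2 for xi, cij in zip(x, cj)) / bj ** 3
           for wj, hj, bj, cj in zip(w, h, b, c)]
    d_c = [[xite * e * wj * hj * (xi - cij) / bj ** 2 for xi, cij in zip(x, cj)]
           for wj, hj, bj, cj in zip(w, h, b, c)]
    return d_w, d_b, d_c
```

Perturbing any single parameter by a small eps and comparing the central difference of `loss` with the corresponding increment (divided by -xite) confirms the update rules. RBF.m then adds the momentum term alfa*(w_1 - w_2), and likewise for b and c, on top of these increments.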

Test.m

clear all;

close all;

load pfile;

alfa = 0.05;

xite = 0.85;

x = [0,0]';

% M = 1: start from the GA-optimized parameters in pfile.mat; M = 2: start from random parameters

M = 2;

if M == 1

b = [p(1);p(2);p(3)];

c = [p(4) p(5) p(6);

p(7) p(8) p(9)];

w = [p(10);p(11);p(12)];

elseif M == 2

b = 3*rand(3,1);

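c = 3*(2*rand(2,3) - 1);   % uniform in [-3,3]; same as 3*rands(2,3) without the Deep Learning Toolbox

w = 2*rand(3,1) - 1;       % uniform in [-1,1]; same as rands(3,1)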

end

w_1 = w;w_2 = w_1;

c_1 = c;c_2 = c_1;

b_1 = b;b_2 = b_1;

y_1 = 0;

ts = 0.001;

for k = 1:1500

time(k) = k*ts;

u(k) = sin(5*2*pi*k*ts);

y(k) = u(k)^3 + y_1/(1 + y_1^2);

x(1) = u(k);

x(2) = y(k);

for j = 1:3

h(j) = exp(-norm(x-c(:,j))^2/(2*b(j)*b(j)));

end

ym(k) = w_1'*h';

e(k) = y(k) - ym(k);

d_w = 0*w;d_b = 0*b;d_c=0*c;

for j = 1:1:3

d_w(j) = xite*e(k)*h(j);

d_b(j) = xite*e(k)*w(j)*h(j)*(b(j)^-3)*norm(x-c(:,j))^2;

for i = 1:1:2

d_c(i,j) = xite*e(k)*w(j)*h(j)*(x(i) - c(i,j))*(b(j)^-2);

end

end

w = w_1 + d_w + alfa*(w_1 - w_2);

b = b_1 + d_b + alfa*(b_1 - b_2);

c = c_1 + d_c + alfa*(c_1 - c_2);

y_1 = y(k);

w_2 = w_1;

w_1 = w;

c_2 = c_1;

c_1 = c;

b_2 = b_1;

b_1 = b;

end

figure(1);

plot(time,ym,'r',time,y,'b');

xlabel('times(s)');ylabel('y and ym');

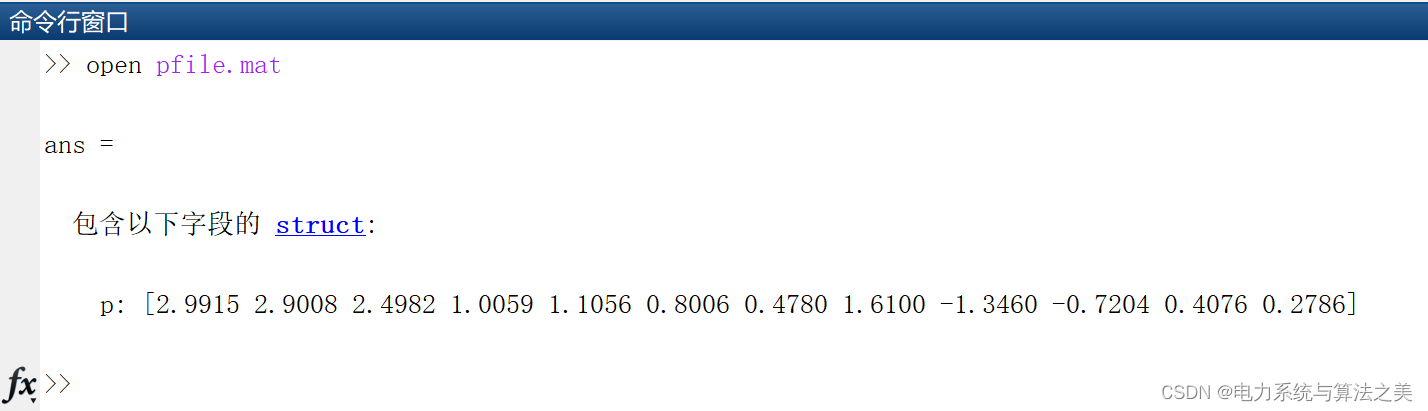

pfile.mat

p: [2.9915 2.9008 2.4982 1.0059 1.1056 0.8006 0.4780 1.6100 -1.3460 -0.7204 0.4076 0.2786]

4 Results