【AI实战】快速搭建中文 33B 大模型 Chinese-Alpaca-33B

中文 33B 大模型 Chinese-Alpaca-33B

-

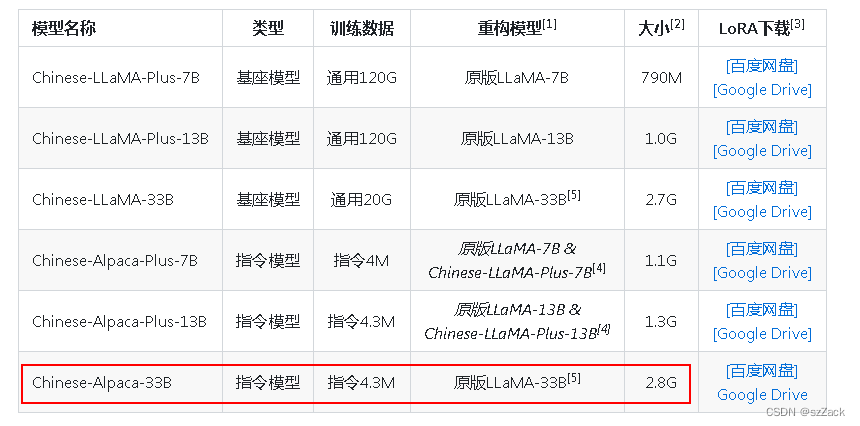

介绍

Chinese-Alpaca-33B 大模型在原版 LLaMA-33B 的基础上扩充了中文词表并使用了中文数据进行二次预训练,进一步提升了中文基础语义理解能力。同时,中文Alpaca模型进一步使用了中文指令数据进行精调,显著提升了模型对指令的理解和执行能力。

官网:https://github.com/ymcui/Chinese-LLaMA-Alpaca

LLaMA模型禁止商用

-

训练数据

-

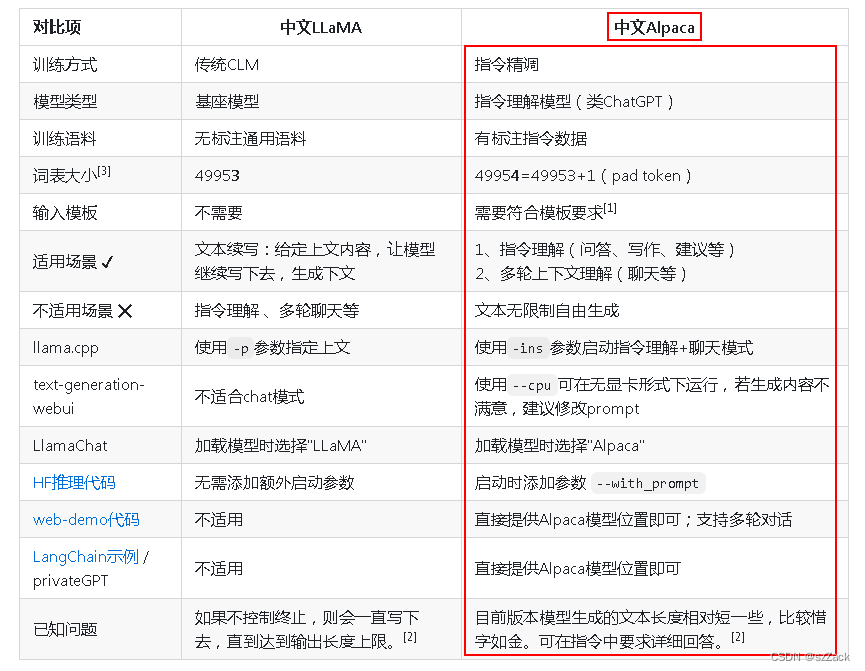

中文LLaMA VS 中文Alpaca

-

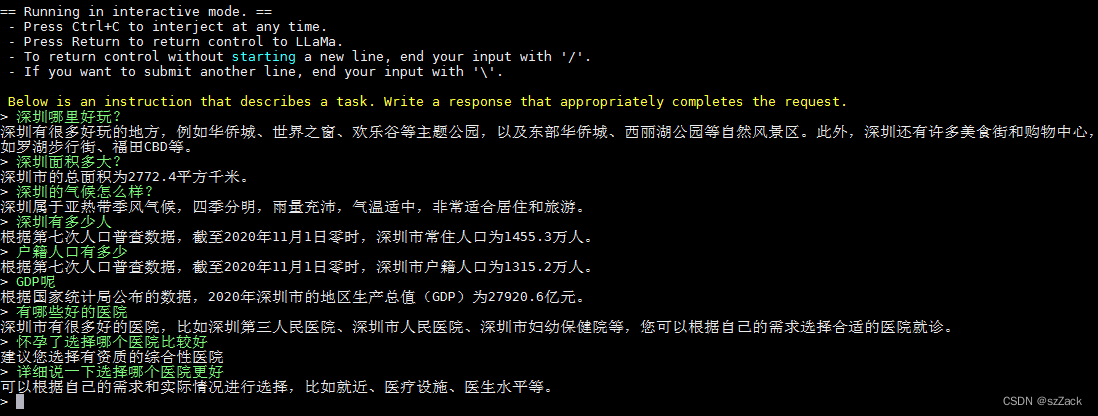

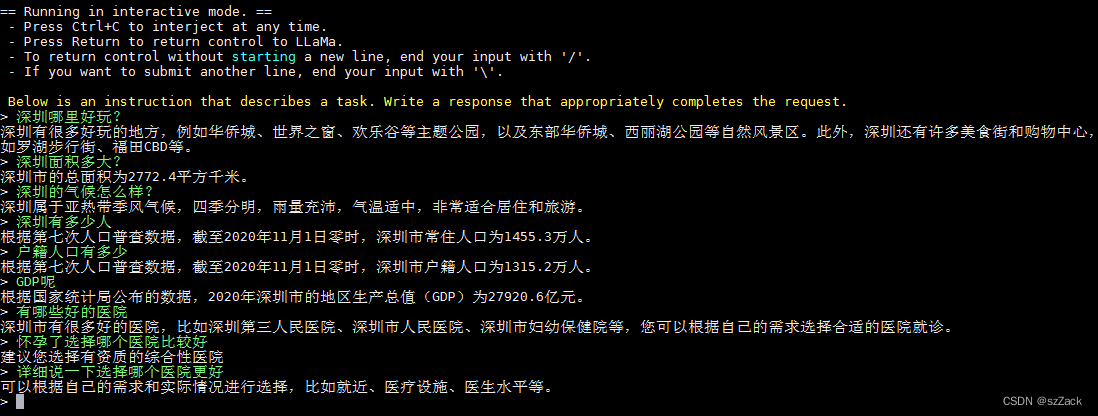

测试截图

从测试结果来看,还可以吧,但是距离chatGPT有距离啊!

环境配置

环境配置过程详情参考我的这篇文章;

【AI实战】从零开始搭建中文 LLaMA-33B 语言模型 Chinese-LLaMA-Alpaca-33B

llama-33B 模型下载、合并方法也是参考这篇文章:

【AI实战】从零开始搭建中文 LLaMA-33B 语言模型 Chinese-LLaMA-Alpaca-33B

得到的模型保存路径:“./Chinese-LLaMA-33B”

llama.cpp 量化部署 llama-33B参考这篇文章:

【AI实战】llama.cpp 量化部署 llama-33B

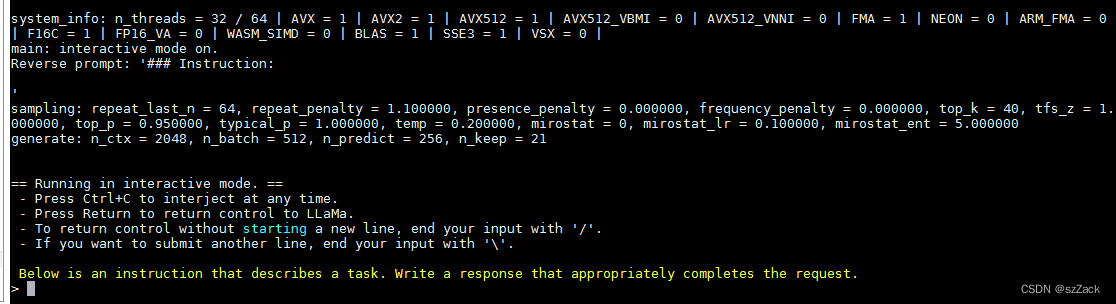

搭建过程

首先按照上面的步骤已经获得:

./llama-30b-hf – llama-30b 原始模型

llama.cpp 已经编译好

1.拉取 chinese-alpaca-lora-33b

执行:

cd /notebooks

git clone https://huggingface.co/ziqingyang/chinese-alpaca-lora-33b

【】可能拉取失败,耐心尝试多次就会成功!!!

比如错误信息:

Cloning into 'chinese-alpaca-lora-33b'...

fatal: unable to access 'https://huggingface.co/ziqingyang/chinese-alpaca-lora-33b/': gnutls_handshake() failed: Error in the pull function.

也可能拉取到的文件太小:

# git clone https://huggingface.co/ziqingyang/chinese-alpaca-lora-33b

Cloning into 'chinese-alpaca-lora-33b'...

remote: Enumerating objects: 19, done.

remote: Total 19 (delta 0), reused 0 (delta 0), pack-reused 19

Unpacking objects: 100% (19/19), 2.58 KiB | 440.00 KiB/s, done.

# du -sh chinese-alpaca-lora-33b/

344K chinese-alpaca-lora-33b/

文件夹 chinese-alpaca-lora-33b/ 大小才 344K

执行:

rm -rf chinese-alpaca-lora-33b/

再拉取,执行:

git clone https://huggingface.co/ziqingyang/chinese-alpaca-lora-33b

正确拉取到的文件大小:

# du -sh chinese-llama-lora-33b/

2.8G chinese-llama-lora-33b/

2.合并lora权重

合并脚本:

merge_chinese-alpaca-33b.sh

cd /notebooks/Chinese-LLaMA-Alpaca

mkdir ./chinese-alpaca-33b-pth

python scripts/merge_llama_with_chinese_lora.py \

--base_model ../llama-30b-hf/ \

--lora_model ../chinese-alpaca-lora-33b/ \

--output_type pth \

--output_dir ./chinese-alpaca-33b-pth

执行合并:

sh merge_chinese-alpaca-33b.sh

输出结果到路径:./chinese-alpaca-33b-pth

输出信息:

# sh merge_chinese-alpaca-33b.sh

Base model: ../llama-30b-hf/

LoRA model(s) ['../chinese-alpaca-lora-33b/']:

Loading checkpoint shards: 100%|██████████████████████████████████████████████████████████████████████████| 61/61 [01:32<00:00, 1.51s/it]

Peft version: 0.3.0

Loading LoRA for 33B model

Loading LoRA ../chinese-alpaca-lora-33b/...

base_model vocab size: 32000

tokenizer vocab size: 49954

Extended vocabulary size to 49954

Loading LoRA weights

Merging with merge_and_unload...

Saving to pth format...

Processing tok_embeddings.weight

Processing layers.0.attention.wq.weight

Processing layers.0.attention.wk.weight

Processing layers.0.attention.wv.weight

Processing layers.0.attention.wo.weight

Processing layers.0.feed_forward.w1.weight

Processing layers.0.feed_forward.w2.weight

Processing layers.0.feed_forward.w3.weight

Processing layers.0.attention_norm.weight

Processing layers.0.ffn_norm.weight

Processing layers.1.attention.wq.weight

Processing layers.1.attention.wk.weight

Processing layers.1.attention.wv.weight

Processing layers.1.attention.wo.weight

Processing layers.1.feed_forward.w1.weight

Processing layers.1.feed_forward.w2.weight

Processing layers.1.feed_forward.w3.weight

Processing layers.1.attention_norm.weight

Processing layers.1.ffn_norm.weight

Processing layers.2.attention.wq.weight

Processing layers.2.attention.wk.weight

Processing layers.2.attention.wv.weight

Processing layers.2.attention.wo.weight

Processing layers.2.feed_forward.w1.weight

Processing layers.2.feed_forward.w2.weight

Processing layers.2.feed_forward.w3.weight

Processing layers.2.attention_norm.weight

Processing layers.2.ffn_norm.weight

Processing layers.3.attention.wq.weight

Processing layers.3.attention.wk.weight

Processing layers.3.attention.wv.weight

Processing layers.3.attention.wo.weight

Processing layers.3.feed_forward.w1.weight

Processing layers.3.feed_forward.w2.weight

Processing layers.3.feed_forward.w3.weight

Processing layers.3.attention_norm.weight

Processing layers.3.ffn_norm.weight

Processing layers.4.attention.wq.weight

Processing layers.4.attention.wk.weight

Processing layers.4.attention.wv.weight

Processing layers.4.attention.wo.weight

Processing layers.4.feed_forward.w1.weight

Processing layers.4.feed_forward.w2.weight

Processing layers.4.feed_forward.w3.weight

Processing layers.4.attention_norm.weight

Processing layers.4.ffn_norm.weight

Processing layers.5.attention.wq.weight

Processing layers.5.attention.wk.weight

Processing layers.5.attention.wv.weight

Processing layers.5.attention.wo.weight

Processing layers.5.feed_forward.w1.weight

Processing layers.5.feed_forward.w2.weight

Processing layers.5.feed_forward.w3.weight

Processing layers.5.attention_norm.weight

Processing layers.5.ffn_norm.weight

Processing layers.6.attention.wq.weight

Processing layers.6.attention.wk.weight

Processing layers.6.attention.wv.weight

Processing layers.6.attention.wo.weight

Processing layers.6.feed_forward.w1.weight

Processing layers.6.feed_forward.w2.weight

Processing layers.6.feed_forward.w3.weight

Processing layers.6.attention_norm.weight

Processing layers.6.ffn_norm.weight

Processing layers.7.attention.wq.weight

Processing layers.7.attention.wk.weight

Processing layers.7.attention.wv.weight

Processing layers.7.attention.wo.weight

Processing layers.7.feed_forward.w1.weight

Processing layers.7.feed_forward.w2.weight

Processing layers.7.feed_forward.w3.weight

Processing layers.7.attention_norm.weight

Processing layers.7.ffn_norm.weight

Processing layers.8.attention.wq.weight

Processing layers.8.attention.wk.weight

Processing layers.8.attention.wv.weight

Processing layers.8.attention.wo.weight

Processing layers.8.feed_forward.w1.weight

Processing layers.8.feed_forward.w2.weight

Processing layers.8.feed_forward.w3.weight

Processing layers.8.attention_norm.weight

Processing layers.8.ffn_norm.weight

Processing layers.9.attention.wq.weight

Processing layers.9.attention.wk.weight

Processing layers.9.attention.wv.weight

Processing layers.9.attention.wo.weight

Processing layers.9.feed_forward.w1.weight

Processing layers.9.feed_forward.w2.weight

Processing layers.9.feed_forward.w3.weight

Processing layers.9.attention_norm.weight

Processing layers.9.ffn_norm.weight

Processing layers.10.attention.wq.weight

Processing layers.10.attention.wk.weight

Processing layers.10.attention.wv.weight

Processing layers.10.attention.wo.weight

Processing layers.10.feed_forward.w1.weight

Processing layers.10.feed_forward.w2.weight

Processing layers.10.feed_forward.w3.weight

Processing layers.10.attention_norm.weight

Processing layers.10.ffn_norm.weight

Processing layers.11.attention.wq.weight

Processing layers.11.attention.wk.weight

Processing layers.11.attention.wv.weight

Processing layers.11.attention.wo.weight

Processing layers.11.feed_forward.w1.weight

Processing layers.11.feed_forward.w2.weight

Processing layers.11.feed_forward.w3.weight

Processing layers.11.attention_norm.weight

Processing layers.11.ffn_norm.weight

Processing layers.12.attention.wq.weight

Processing layers.12.attention.wk.weight

Processing layers.12.attention.wv.weight

Processing layers.12.attention.wo.weight

Processing layers.12.feed_forward.w1.weight

Processing layers.12.feed_forward.w2.weight

Processing layers.12.feed_forward.w3.weight

Processing layers.12.attention_norm.weight

Processing layers.12.ffn_norm.weight

Processing layers.13.attention.wq.weight

Processing layers.13.attention.wk.weight

Processing layers.13.attention.wv.weight

Processing layers.13.attention.wo.weight

Processing layers.13.feed_forward.w1.weight

Processing layers.13.feed_forward.w2.weight

Processing layers.13.feed_forward.w3.weight

Processing layers.13.attention_norm.weight

Processing layers.13.ffn_norm.weight

Processing layers.14.attention.wq.weight

Processing layers.14.attention.wk.weight

Processing layers.14.attention.wv.weight

Processing layers.14.attention.wo.weight

Processing layers.14.feed_forward.w1.weight

Processing layers.14.feed_forward.w2.weight

Processing layers.14.feed_forward.w3.weight

Processing layers.14.attention_norm.weight

Processing layers.14.ffn_norm.weight

Processing layers.15.attention.wq.weight

Processing layers.15.attention.wk.weight

Processing layers.15.attention.wv.weight

Processing layers.15.attention.wo.weight

Processing layers.15.feed_forward.w1.weight

Processing layers.15.feed_forward.w2.weight

Processing layers.15.feed_forward.w3.weight

Processing layers.15.attention_norm.weight

Processing layers.15.ffn_norm.weight

Processing layers.16.attention.wq.weight

Processing layers.16.attention.wk.weight

Processing layers.16.attention.wv.weight

Processing layers.16.attention.wo.weight

Processing layers.16.feed_forward.w1.weight

Processing layers.16.feed_forward.w2.weight

Processing layers.16.feed_forward.w3.weight

Processing layers.16.attention_norm.weight

Processing layers.16.ffn_norm.weight

Processing layers.17.attention.wq.weight

Processing layers.17.attention.wk.weight

Processing layers.17.attention.wv.weight

Processing layers.17.attention.wo.weight

Processing layers.17.feed_forward.w1.weight

Processing layers.17.feed_forward.w2.weight

Processing layers.17.feed_forward.w3.weight

Processing layers.17.attention_norm.weight

Processing layers.17.ffn_norm.weight

Processing layers.18.attention.wq.weight

Processing layers.18.attention.wk.weight

Processing layers.18.attention.wv.weight

Processing layers.18.attention.wo.weight

Processing layers.18.feed_forward.w1.weight

Processing layers.18.feed_forward.w2.weight

Processing layers.18.feed_forward.w3.weight

Processing layers.18.attention_norm.weight

Processing layers.18.ffn_norm.weight

Processing layers.19.attention.wq.weight

Processing layers.19.attention.wk.weight

Processing layers.19.attention.wv.weight

Processing layers.19.attention.wo.weight

Processing layers.19.feed_forward.w1.weight

Processing layers.19.feed_forward.w2.weight

Processing layers.19.feed_forward.w3.weight

Processing layers.19.attention_norm.weight

Processing layers.19.ffn_norm.weight

Processing layers.20.attention.wq.weight

Processing layers.20.attention.wk.weight

Processing layers.20.attention.wv.weight

Processing layers.20.attention.wo.weight

Processing layers.20.feed_forward.w1.weight

Processing layers.20.feed_forward.w2.weight

Processing layers.20.feed_forward.w3.weight

Processing layers.20.attention_norm.weight

Processing layers.20.ffn_norm.weight

Processing layers.21.attention.wq.weight

Processing layers.21.attention.wk.weight

Processing layers.21.attention.wv.weight

Processing layers.21.attention.wo.weight

Processing layers.21.feed_forward.w1.weight

Processing layers.21.feed_forward.w2.weight

Processing layers.21.feed_forward.w3.weight

Processing layers.21.attention_norm.weight

Processing layers.21.ffn_norm.weight

Processing layers.22.attention.wq.weight

Processing layers.22.attention.wk.weight

Processing layers.22.attention.wv.weight

Processing layers.22.attention.wo.weight

Processing layers.22.feed_forward.w1.weight

Processing layers.22.feed_forward.w2.weight

Processing layers.22.feed_forward.w3.weight

Processing layers.22.attention_norm.weight

Processing layers.22.ffn_norm.weight

Processing layers.23.attention.wq.weight

Processing layers.23.attention.wk.weight

Processing layers.23.attention.wv.weight

Processing layers.23.attention.wo.weight

Processing layers.23.feed_forward.w1.weight

Processing layers.23.feed_forward.w2.weight

Processing layers.23.feed_forward.w3.weight

Processing layers.23.attention_norm.weight

Processing layers.23.ffn_norm.weight

Processing layers.24.attention.wq.weight

Processing layers.24.attention.wk.weight

Processing layers.24.attention.wv.weight

Processing layers.24.attention.wo.weight

Processing layers.24.feed_forward.w1.weight

Processing layers.24.feed_forward.w2.weight

Processing layers.24.feed_forward.w3.weight

Processing layers.24.attention_norm.weight

Processing layers.24.ffn_norm.weight

Processing layers.25.attention.wq.weight

Processing layers.25.attention.wk.weight

Processing layers.25.attention.wv.weight

Processing layers.25.attention.wo.weight

Processing layers.25.feed_forward.w1.weight

Processing layers.25.feed_forward.w2.weight

Processing layers.25.feed_forward.w3.weight

Processing layers.25.attention_norm.weight

Processing layers.25.ffn_norm.weight

Processing layers.26.attention.wq.weight

Processing layers.26.attention.wk.weight

Processing layers.26.attention.wv.weight

Processing layers.26.attention.wo.weight

Processing layers.26.feed_forward.w1.weight

Processing layers.26.feed_forward.w2.weight

Processing layers.26.feed_forward.w3.weight

Processing layers.26.attention_norm.weight

Processing layers.26.ffn_norm.weight

Processing layers.27.attention.wq.weight

Processing layers.27.attention.wk.weight

Processing layers.27.attention.wv.weight

Processing layers.27.attention.wo.weight

Processing layers.27.feed_forward.w1.weight

Processing layers.27.feed_forward.w2.weight

Processing layers.27.feed_forward.w3.weight

Processing layers.27.attention_norm.weight

Processing layers.27.ffn_norm.weight

Processing layers.28.attention.wq.weight

Processing layers.28.attention.wk.weight

Processing layers.28.attention.wv.weight

Processing layers.28.attention.wo.weight

Processing layers.28.feed_forward.w1.weight

Processing layers.28.feed_forward.w2.weight

Processing layers.28.feed_forward.w3.weight

Processing layers.28.attention_norm.weight

Processing layers.28.ffn_norm.weight

Processing layers.29.attention.wq.weight

Processing layers.29.attention.wk.weight

Processing layers.29.attention.wv.weight

Processing layers.29.attention.wo.weight

Processing layers.29.feed_forward.w1.weight

Processing layers.29.feed_forward.w2.weight

Processing layers.29.feed_forward.w3.weight

Processing layers.29.attention_norm.weight

Processing layers.29.ffn_norm.weight

Processing layers.30.attention.wq.weight

Processing layers.30.attention.wk.weight

Processing layers.30.attention.wv.weight

Processing layers.30.attention.wo.weight

Processing layers.30.feed_forward.w1.weight

Processing layers.30.feed_forward.w2.weight

Processing layers.30.feed_forward.w3.weight

Processing layers.30.attention_norm.weight

Processing layers.30.ffn_norm.weight

Processing layers.31.attention.wq.weight

Processing layers.31.attention.wk.weight

Processing layers.31.attention.wv.weight

Processing layers.31.attention.wo.weight

Processing layers.31.feed_forward.w1.weight

Processing layers.31.feed_forward.w2.weight

Processing layers.31.feed_forward.w3.weight

Processing layers.31.attention_norm.weight

Processing layers.31.ffn_norm.weight

Processing layers.32.attention.wq.weight

Processing layers.32.attention.wk.weight

Processing layers.32.attention.wv.weight

Processing layers.32.attention.wo.weight

Processing layers.32.feed_forward.w1.weight

Processing layers.32.feed_forward.w2.weight

Processing layers.32.feed_forward.w3.weight

Processing layers.32.attention_norm.weight

Processing layers.32.ffn_norm.weight

Processing layers.33.attention.wq.weight

Processing layers.33.attention.wk.weight

Processing layers.33.attention.wv.weight

Processing layers.33.attention.wo.weight

Processing layers.33.feed_forward.w1.weight

Processing layers.33.feed_forward.w2.weight

Processing layers.33.feed_forward.w3.weight

Processing layers.33.attention_norm.weight

Processing layers.33.ffn_norm.weight

Processing layers.34.attention.wq.weight

Processing layers.34.attention.wk.weight

Processing layers.34.attention.wv.weight

Processing layers.34.attention.wo.weight

Processing layers.34.feed_forward.w1.weight

Processing layers.34.feed_forward.w2.weight

Processing layers.34.feed_forward.w3.weight

Processing layers.34.attention_norm.weight

Processing layers.34.ffn_norm.weight

Processing layers.35.attention.wq.weight

Processing layers.35.attention.wk.weight

Processing layers.35.attention.wv.weight

Processing layers.35.attention.wo.weight

Processing layers.35.feed_forward.w1.weight

Processing layers.35.feed_forward.w2.weight

Processing layers.35.feed_forward.w3.weight

Processing layers.35.attention_norm.weight

Processing layers.35.ffn_norm.weight

Processing layers.36.attention.wq.weight

Processing layers.36.attention.wk.weight

Processing layers.36.attention.wv.weight

Processing layers.36.attention.wo.weight

Processing layers.36.feed_forward.w1.weight

Processing layers.36.feed_forward.w2.weight

Processing layers.36.feed_forward.w3.weight

Processing layers.36.attention_norm.weight

Processing layers.36.ffn_norm.weight

Processing layers.37.attention.wq.weight

Processing layers.37.attention.wk.weight

Processing layers.37.attention.wv.weight

Processing layers.37.attention.wo.weight

Processing layers.37.feed_forward.w1.weight

Processing layers.37.feed_forward.w2.weight

Processing layers.37.feed_forward.w3.weight

Processing layers.37.attention_norm.weight

Processing layers.37.ffn_norm.weight

Processing layers.38.attention.wq.weight

Processing layers.38.attention.wk.weight

Processing layers.38.attention.wv.weight

Processing layers.38.attention.wo.weight

Processing layers.38.feed_forward.w1.weight

Processing layers.38.feed_forward.w2.weight

Processing layers.38.feed_forward.w3.weight

Processing layers.38.attention_norm.weight

Processing layers.38.ffn_norm.weight

Processing layers.39.attention.wq.weight

Processing layers.39.attention.wk.weight

Processing layers.39.attention.wv.weight

Processing layers.39.attention.wo.weight

Processing layers.39.feed_forward.w1.weight

Processing layers.39.feed_forward.w2.weight

Processing layers.39.feed_forward.w3.weight

Processing layers.39.attention_norm.weight

Processing layers.39.ffn_norm.weight

Processing layers.40.attention.wq.weight

Processing layers.40.attention.wk.weight

Processing layers.40.attention.wv.weight

Processing layers.40.attention.wo.weight

Processing layers.40.feed_forward.w1.weight

Processing layers.40.feed_forward.w2.weight

Processing layers.40.feed_forward.w3.weight

Processing layers.40.attention_norm.weight

Processing layers.40.ffn_norm.weight

Processing layers.41.attention.wq.weight

Processing layers.41.attention.wk.weight

Processing layers.41.attention.wv.weight

Processing layers.41.attention.wo.weight

Processing layers.41.feed_forward.w1.weight

Processing layers.41.feed_forward.w2.weight

Processing layers.41.feed_forward.w3.weight

Processing layers.41.attention_norm.weight

Processing layers.41.ffn_norm.weight

Processing layers.42.attention.wq.weight

Processing layers.42.attention.wk.weight

Processing layers.42.attention.wv.weight

Processing layers.42.attention.wo.weight

Processing layers.42.feed_forward.w1.weight

Processing layers.42.feed_forward.w2.weight

Processing layers.42.feed_forward.w3.weight

Processing layers.42.attention_norm.weight

Processing layers.42.ffn_norm.weight

Processing layers.43.attention.wq.weight

Processing layers.43.attention.wk.weight

Processing layers.43.attention.wv.weight

Processing layers.43.attention.wo.weight

Processing layers.43.feed_forward.w1.weight

Processing layers.43.feed_forward.w2.weight

Processing layers.43.feed_forward.w3.weight

Processing layers.43.attention_norm.weight

Processing layers.43.ffn_norm.weight

Processing layers.44.attention.wq.weight

Processing layers.44.attention.wk.weight

Processing layers.44.attention.wv.weight

Processing layers.44.attention.wo.weight

Processing layers.44.feed_forward.w1.weight

Processing layers.44.feed_forward.w2.weight

Processing layers.44.feed_forward.w3.weight

Processing layers.44.attention_norm.weight

Processing layers.44.ffn_norm.weight

Processing layers.45.attention.wq.weight

Processing layers.45.attention.wk.weight

Processing layers.45.attention.wv.weight

Processing layers.45.attention.wo.weight

Processing layers.45.feed_forward.w1.weight

Processing layers.45.feed_forward.w2.weight

Processing layers.45.feed_forward.w3.weight

Processing layers.45.attention_norm.weight

Processing layers.45.ffn_norm.weight

Processing layers.46.attention.wq.weight

Processing layers.46.attention.wk.weight

Processing layers.46.attention.wv.weight

Processing layers.46.attention.wo.weight

Processing layers.46.feed_forward.w1.weight

Processing layers.46.feed_forward.w2.weight

Processing layers.46.feed_forward.w3.weight

Processing layers.46.attention_norm.weight

Processing layers.46.ffn_norm.weight

Processing layers.47.attention.wq.weight

Processing layers.47.attention.wk.weight

Processing layers.47.attention.wv.weight

Processing layers.47.attention.wo.weight

Processing layers.47.feed_forward.w1.weight

Processing layers.47.feed_forward.w2.weight

Processing layers.47.feed_forward.w3.weight

Processing layers.47.attention_norm.weight

Processing layers.47.ffn_norm.weight

Processing layers.48.attention.wq.weight

Processing layers.48.attention.wk.weight

Processing layers.48.attention.wv.weight

Processing layers.48.attention.wo.weight

Processing layers.48.feed_forward.w1.weight

Processing layers.48.feed_forward.w2.weight

Processing layers.48.feed_forward.w3.weight

Processing layers.48.attention_norm.weight

Processing layers.48.ffn_norm.weight

Processing layers.49.attention.wq.weight

Processing layers.49.attention.wk.weight

Processing layers.49.attention.wv.weight

Processing layers.49.attention.wo.weight

Processing layers.49.feed_forward.w1.weight

Processing layers.49.feed_forward.w2.weight

Processing layers.49.feed_forward.w3.weight

Processing layers.49.attention_norm.weight

Processing layers.49.ffn_norm.weight

Processing layers.50.attention.wq.weight

Processing layers.50.attention.wk.weight

Processing layers.50.attention.wv.weight

Processing layers.50.attention.wo.weight

Processing layers.50.feed_forward.w1.weight

Processing layers.50.feed_forward.w2.weight

Processing layers.50.feed_forward.w3.weight

Processing layers.50.attention_norm.weight

Processing layers.50.ffn_norm.weight

Processing layers.51.attention.wq.weight

Processing layers.51.attention.wk.weight

Processing layers.51.attention.wv.weight

Processing layers.51.attention.wo.weight

Processing layers.51.feed_forward.w1.weight

Processing layers.51.feed_forward.w2.weight

Processing layers.51.feed_forward.w3.weight

Processing layers.51.attention_norm.weight

Processing layers.51.ffn_norm.weight

Processing layers.52.attention.wq.weight

Processing layers.52.attention.wk.weight

Processing layers.52.attention.wv.weight

Processing layers.52.attention.wo.weight

Processing layers.52.feed_forward.w1.weight

Processing layers.52.feed_forward.w2.weight

Processing layers.52.feed_forward.w3.weight

Processing layers.52.attention_norm.weight

Processing layers.52.ffn_norm.weight

Processing layers.53.attention.wq.weight

Processing layers.53.attention.wk.weight

Processing layers.53.attention.wv.weight

Processing layers.53.attention.wo.weight

Processing layers.53.feed_forward.w1.weight

Processing layers.53.feed_forward.w2.weight

Processing layers.53.feed_forward.w3.weight

Processing layers.53.attention_norm.weight

Processing layers.53.ffn_norm.weight

Processing layers.54.attention.wq.weight

Processing layers.54.attention.wk.weight

Processing layers.54.attention.wv.weight

Processing layers.54.attention.wo.weight

Processing layers.54.feed_forward.w1.weight

Processing layers.54.feed_forward.w2.weight

Processing layers.54.feed_forward.w3.weight

Processing layers.54.attention_norm.weight

Processing layers.54.ffn_norm.weight

Processing layers.55.attention.wq.weight

Processing layers.55.attention.wk.weight

Processing layers.55.attention.wv.weight

Processing layers.55.attention.wo.weight

Processing layers.55.feed_forward.w1.weight

Processing layers.55.feed_forward.w2.weight

Processing layers.55.feed_forward.w3.weight

Processing layers.55.attention_norm.weight

Processing layers.55.ffn_norm.weight

Processing layers.56.attention.wq.weight

Processing layers.56.attention.wk.weight

Processing layers.56.attention.wv.weight

Processing layers.56.attention.wo.weight

Processing layers.56.feed_forward.w1.weight

Processing layers.56.feed_forward.w2.weight

Processing layers.56.feed_forward.w3.weight

Processing layers.56.attention_norm.weight

Processing layers.56.ffn_norm.weight

Processing layers.57.attention.wq.weight

Processing layers.57.attention.wk.weight

Processing layers.57.attention.wv.weight

Processing layers.57.attention.wo.weight

Processing layers.57.feed_forward.w1.weight

Processing layers.57.feed_forward.w2.weight

Processing layers.57.feed_forward.w3.weight

Processing layers.57.attention_norm.weight

Processing layers.57.ffn_norm.weight

Processing layers.58.attention.wq.weight

Processing layers.58.attention.wk.weight

Processing layers.58.attention.wv.weight

Processing layers.58.attention.wo.weight

Processing layers.58.feed_forward.w1.weight

Processing layers.58.feed_forward.w2.weight

Processing layers.58.feed_forward.w3.weight

Processing layers.58.attention_norm.weight

Processing layers.58.ffn_norm.weight

Processing layers.59.attention.wq.weight

Processing layers.59.attention.wk.weight

Processing layers.59.attention.wv.weight

Processing layers.59.attention.wo.weight

Processing layers.59.feed_forward.w1.weight

Processing layers.59.feed_forward.w2.weight

Processing layers.59.feed_forward.w3.weight

Processing layers.59.attention_norm.weight

Processing layers.59.ffn_norm.weight

Processing norm.weight

Processing output.weight

Saving shard 1 of 4 into ./chinese-alpaca-33b-pth/consolidated.00.pth

Saving shard 2 of 4 into ./chinese-alpaca-33b-pth/consolidated.01.pth

Saving shard 3 of 4 into ./chinese-alpaca-33b-pth/consolidated.02.pth

Saving shard 4 of 4 into ./chinese-alpaca-33b-pth/consolidated.03.pth

Saving params.json into ./chinese-alpaca-33b-pth/params.json

3.llaa.cpp量化

模型准备

cd /notebooks/llama.cpp

mkdir zh-models/

cp /notebooks/Chinese-LLaMA-Alpaca/chinese-alpaca-33b-pth/tokenizer.model zh-models

mkdir zh-models/33B

cp /notebooks/Chinese-LLaMA-Alpaca/chinese-alpaca-33b-pth/consolidated.0* zh-models/33B/

cp /notebooks/Chinese-LLaMA-Alpaca/Chinese-alpaca-33B-pth/params.json zh-models/33B/

模型权重转换为ggml的FP16格式

执行:

python convert.py zh-models/33B/

输出信息:

# python convert.py zh-models/33B/

Loading model file zh-models/33B/consolidated.00.pth

Loading model file zh-models/33B/consolidated.01.pth

Loading model file zh-models/33B/consolidated.02.pth

Loading model file zh-models/33B/consolidated.03.pth

Loading vocab file zh-models/tokenizer.model

params: n_vocab:49954 n_embd:6656 n_mult:256 n_head:52 n_layer:60

Writing vocab...

[ 1/543] Writing tensor tok_embeddings.weight | size 49954 x 6656 | type UnquantizedDataType(name='F16')

[ 2/543] Writing tensor norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 3/543] Writing tensor output.weight | size 49954 x 6656 | type UnquantizedDataType(name='F16')

[ 4/543] Writing tensor layers.0.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 5/543] Writing tensor layers.0.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 6/543] Writing tensor layers.0.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 7/543] Writing tensor layers.0.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 8/543] Writing tensor layers.0.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 9/543] Writing tensor layers.0.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 10/543] Writing tensor layers.0.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 11/543] Writing tensor layers.0.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 12/543] Writing tensor layers.0.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 13/543] Writing tensor layers.1.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 14/543] Writing tensor layers.1.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 15/543] Writing tensor layers.1.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 16/543] Writing tensor layers.1.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 17/543] Writing tensor layers.1.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 18/543] Writing tensor layers.1.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 19/543] Writing tensor layers.1.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 20/543] Writing tensor layers.1.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 21/543] Writing tensor layers.1.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 22/543] Writing tensor layers.2.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 23/543] Writing tensor layers.2.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 24/543] Writing tensor layers.2.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 25/543] Writing tensor layers.2.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 26/543] Writing tensor layers.2.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 27/543] Writing tensor layers.2.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 28/543] Writing tensor layers.2.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 29/543] Writing tensor layers.2.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 30/543] Writing tensor layers.2.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 31/543] Writing tensor layers.3.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 32/543] Writing tensor layers.3.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 33/543] Writing tensor layers.3.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 34/543] Writing tensor layers.3.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 35/543] Writing tensor layers.3.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 36/543] Writing tensor layers.3.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 37/543] Writing tensor layers.3.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 38/543] Writing tensor layers.3.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 39/543] Writing tensor layers.3.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 40/543] Writing tensor layers.4.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 41/543] Writing tensor layers.4.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 42/543] Writing tensor layers.4.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 43/543] Writing tensor layers.4.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 44/543] Writing tensor layers.4.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 45/543] Writing tensor layers.4.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 46/543] Writing tensor layers.4.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 47/543] Writing tensor layers.4.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 48/543] Writing tensor layers.4.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 49/543] Writing tensor layers.5.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 50/543] Writing tensor layers.5.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 51/543] Writing tensor layers.5.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 52/543] Writing tensor layers.5.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 53/543] Writing tensor layers.5.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 54/543] Writing tensor layers.5.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 55/543] Writing tensor layers.5.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 56/543] Writing tensor layers.5.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 57/543] Writing tensor layers.5.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 58/543] Writing tensor layers.6.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 59/543] Writing tensor layers.6.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 60/543] Writing tensor layers.6.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 61/543] Writing tensor layers.6.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 62/543] Writing tensor layers.6.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 63/543] Writing tensor layers.6.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 64/543] Writing tensor layers.6.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 65/543] Writing tensor layers.6.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 66/543] Writing tensor layers.6.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 67/543] Writing tensor layers.7.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 68/543] Writing tensor layers.7.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 69/543] Writing tensor layers.7.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 70/543] Writing tensor layers.7.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 71/543] Writing tensor layers.7.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 72/543] Writing tensor layers.7.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 73/543] Writing tensor layers.7.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 74/543] Writing tensor layers.7.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 75/543] Writing tensor layers.7.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 76/543] Writing tensor layers.8.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 77/543] Writing tensor layers.8.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 78/543] Writing tensor layers.8.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 79/543] Writing tensor layers.8.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 80/543] Writing tensor layers.8.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 81/543] Writing tensor layers.8.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 82/543] Writing tensor layers.8.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 83/543] Writing tensor layers.8.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 84/543] Writing tensor layers.8.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 85/543] Writing tensor layers.9.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 86/543] Writing tensor layers.9.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 87/543] Writing tensor layers.9.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 88/543] Writing tensor layers.9.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 89/543] Writing tensor layers.9.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 90/543] Writing tensor layers.9.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 91/543] Writing tensor layers.9.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[ 92/543] Writing tensor layers.9.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[ 93/543] Writing tensor layers.9.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 94/543] Writing tensor layers.10.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 95/543] Writing tensor layers.10.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 96/543] Writing tensor layers.10.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 97/543] Writing tensor layers.10.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[ 98/543] Writing tensor layers.10.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[ 99/543] Writing tensor layers.10.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[100/543] Writing tensor layers.10.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[101/543] Writing tensor layers.10.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[102/543] Writing tensor layers.10.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[103/543] Writing tensor layers.11.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[104/543] Writing tensor layers.11.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[105/543] Writing tensor layers.11.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[106/543] Writing tensor layers.11.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[107/543] Writing tensor layers.11.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[108/543] Writing tensor layers.11.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[109/543] Writing tensor layers.11.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[110/543] Writing tensor layers.11.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[111/543] Writing tensor layers.11.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[112/543] Writing tensor layers.12.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[113/543] Writing tensor layers.12.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[114/543] Writing tensor layers.12.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[115/543] Writing tensor layers.12.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[116/543] Writing tensor layers.12.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[117/543] Writing tensor layers.12.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[118/543] Writing tensor layers.12.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[119/543] Writing tensor layers.12.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[120/543] Writing tensor layers.12.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[121/543] Writing tensor layers.13.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[122/543] Writing tensor layers.13.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[123/543] Writing tensor layers.13.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[124/543] Writing tensor layers.13.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[125/543] Writing tensor layers.13.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[126/543] Writing tensor layers.13.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[127/543] Writing tensor layers.13.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[128/543] Writing tensor layers.13.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[129/543] Writing tensor layers.13.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[130/543] Writing tensor layers.14.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[131/543] Writing tensor layers.14.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[132/543] Writing tensor layers.14.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[133/543] Writing tensor layers.14.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[134/543] Writing tensor layers.14.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[135/543] Writing tensor layers.14.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[136/543] Writing tensor layers.14.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[137/543] Writing tensor layers.14.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[138/543] Writing tensor layers.14.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[139/543] Writing tensor layers.15.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[140/543] Writing tensor layers.15.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[141/543] Writing tensor layers.15.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[142/543] Writing tensor layers.15.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[143/543] Writing tensor layers.15.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[144/543] Writing tensor layers.15.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[145/543] Writing tensor layers.15.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[146/543] Writing tensor layers.15.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[147/543] Writing tensor layers.15.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[148/543] Writing tensor layers.16.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[149/543] Writing tensor layers.16.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[150/543] Writing tensor layers.16.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[151/543] Writing tensor layers.16.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[152/543] Writing tensor layers.16.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[153/543] Writing tensor layers.16.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[154/543] Writing tensor layers.16.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[155/543] Writing tensor layers.16.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[156/543] Writing tensor layers.16.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[157/543] Writing tensor layers.17.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[158/543] Writing tensor layers.17.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[159/543] Writing tensor layers.17.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[160/543] Writing tensor layers.17.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[161/543] Writing tensor layers.17.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[162/543] Writing tensor layers.17.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[163/543] Writing tensor layers.17.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[164/543] Writing tensor layers.17.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[165/543] Writing tensor layers.17.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[166/543] Writing tensor layers.18.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[167/543] Writing tensor layers.18.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[168/543] Writing tensor layers.18.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[169/543] Writing tensor layers.18.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[170/543] Writing tensor layers.18.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[171/543] Writing tensor layers.18.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[172/543] Writing tensor layers.18.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[173/543] Writing tensor layers.18.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[174/543] Writing tensor layers.18.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[175/543] Writing tensor layers.19.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[176/543] Writing tensor layers.19.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[177/543] Writing tensor layers.19.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[178/543] Writing tensor layers.19.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[179/543] Writing tensor layers.19.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[180/543] Writing tensor layers.19.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[181/543] Writing tensor layers.19.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[182/543] Writing tensor layers.19.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[183/543] Writing tensor layers.19.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[184/543] Writing tensor layers.20.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[185/543] Writing tensor layers.20.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[186/543] Writing tensor layers.20.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[187/543] Writing tensor layers.20.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[188/543] Writing tensor layers.20.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[189/543] Writing tensor layers.20.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[190/543] Writing tensor layers.20.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[191/543] Writing tensor layers.20.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[192/543] Writing tensor layers.20.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[193/543] Writing tensor layers.21.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[194/543] Writing tensor layers.21.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[195/543] Writing tensor layers.21.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[196/543] Writing tensor layers.21.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[197/543] Writing tensor layers.21.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[198/543] Writing tensor layers.21.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[199/543] Writing tensor layers.21.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[200/543] Writing tensor layers.21.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[201/543] Writing tensor layers.21.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[202/543] Writing tensor layers.22.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[203/543] Writing tensor layers.22.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[204/543] Writing tensor layers.22.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[205/543] Writing tensor layers.22.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[206/543] Writing tensor layers.22.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[207/543] Writing tensor layers.22.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[208/543] Writing tensor layers.22.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[209/543] Writing tensor layers.22.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[210/543] Writing tensor layers.22.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[211/543] Writing tensor layers.23.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[212/543] Writing tensor layers.23.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[213/543] Writing tensor layers.23.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[214/543] Writing tensor layers.23.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[215/543] Writing tensor layers.23.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[216/543] Writing tensor layers.23.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[217/543] Writing tensor layers.23.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[218/543] Writing tensor layers.23.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[219/543] Writing tensor layers.23.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[220/543] Writing tensor layers.24.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[221/543] Writing tensor layers.24.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[222/543] Writing tensor layers.24.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[223/543] Writing tensor layers.24.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[224/543] Writing tensor layers.24.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[225/543] Writing tensor layers.24.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[226/543] Writing tensor layers.24.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[227/543] Writing tensor layers.24.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[228/543] Writing tensor layers.24.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[229/543] Writing tensor layers.25.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[230/543] Writing tensor layers.25.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[231/543] Writing tensor layers.25.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[232/543] Writing tensor layers.25.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[233/543] Writing tensor layers.25.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[234/543] Writing tensor layers.25.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[235/543] Writing tensor layers.25.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[236/543] Writing tensor layers.25.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[237/543] Writing tensor layers.25.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[238/543] Writing tensor layers.26.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[239/543] Writing tensor layers.26.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[240/543] Writing tensor layers.26.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[241/543] Writing tensor layers.26.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[242/543] Writing tensor layers.26.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[243/543] Writing tensor layers.26.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[244/543] Writing tensor layers.26.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[245/543] Writing tensor layers.26.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[246/543] Writing tensor layers.26.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[247/543] Writing tensor layers.27.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[248/543] Writing tensor layers.27.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[249/543] Writing tensor layers.27.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[250/543] Writing tensor layers.27.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[251/543] Writing tensor layers.27.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[252/543] Writing tensor layers.27.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[253/543] Writing tensor layers.27.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[254/543] Writing tensor layers.27.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[255/543] Writing tensor layers.27.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[256/543] Writing tensor layers.28.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[257/543] Writing tensor layers.28.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[258/543] Writing tensor layers.28.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[259/543] Writing tensor layers.28.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[260/543] Writing tensor layers.28.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[261/543] Writing tensor layers.28.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[262/543] Writing tensor layers.28.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[263/543] Writing tensor layers.28.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[264/543] Writing tensor layers.28.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[265/543] Writing tensor layers.29.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[266/543] Writing tensor layers.29.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[267/543] Writing tensor layers.29.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[268/543] Writing tensor layers.29.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[269/543] Writing tensor layers.29.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[270/543] Writing tensor layers.29.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[271/543] Writing tensor layers.29.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[272/543] Writing tensor layers.29.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[273/543] Writing tensor layers.29.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[274/543] Writing tensor layers.30.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[275/543] Writing tensor layers.30.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[276/543] Writing tensor layers.30.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[277/543] Writing tensor layers.30.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[278/543] Writing tensor layers.30.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[279/543] Writing tensor layers.30.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[280/543] Writing tensor layers.30.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[281/543] Writing tensor layers.30.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[282/543] Writing tensor layers.30.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[283/543] Writing tensor layers.31.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[284/543] Writing tensor layers.31.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[285/543] Writing tensor layers.31.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[286/543] Writing tensor layers.31.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[287/543] Writing tensor layers.31.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[288/543] Writing tensor layers.31.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[289/543] Writing tensor layers.31.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[290/543] Writing tensor layers.31.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[291/543] Writing tensor layers.31.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[292/543] Writing tensor layers.32.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[293/543] Writing tensor layers.32.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[294/543] Writing tensor layers.32.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[295/543] Writing tensor layers.32.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[296/543] Writing tensor layers.32.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[297/543] Writing tensor layers.32.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[298/543] Writing tensor layers.32.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[299/543] Writing tensor layers.32.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[300/543] Writing tensor layers.32.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[301/543] Writing tensor layers.33.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[302/543] Writing tensor layers.33.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[303/543] Writing tensor layers.33.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[304/543] Writing tensor layers.33.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[305/543] Writing tensor layers.33.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[306/543] Writing tensor layers.33.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[307/543] Writing tensor layers.33.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[308/543] Writing tensor layers.33.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[309/543] Writing tensor layers.33.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[310/543] Writing tensor layers.34.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[311/543] Writing tensor layers.34.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[312/543] Writing tensor layers.34.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[313/543] Writing tensor layers.34.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[314/543] Writing tensor layers.34.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[315/543] Writing tensor layers.34.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[316/543] Writing tensor layers.34.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[317/543] Writing tensor layers.34.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[318/543] Writing tensor layers.34.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[319/543] Writing tensor layers.35.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[320/543] Writing tensor layers.35.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[321/543] Writing tensor layers.35.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[322/543] Writing tensor layers.35.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[323/543] Writing tensor layers.35.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[324/543] Writing tensor layers.35.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[325/543] Writing tensor layers.35.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[326/543] Writing tensor layers.35.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[327/543] Writing tensor layers.35.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[328/543] Writing tensor layers.36.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[329/543] Writing tensor layers.36.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[330/543] Writing tensor layers.36.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[331/543] Writing tensor layers.36.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[332/543] Writing tensor layers.36.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[333/543] Writing tensor layers.36.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[334/543] Writing tensor layers.36.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[335/543] Writing tensor layers.36.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[336/543] Writing tensor layers.36.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[337/543] Writing tensor layers.37.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[338/543] Writing tensor layers.37.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[339/543] Writing tensor layers.37.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[340/543] Writing tensor layers.37.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[341/543] Writing tensor layers.37.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[342/543] Writing tensor layers.37.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[343/543] Writing tensor layers.37.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[344/543] Writing tensor layers.37.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[345/543] Writing tensor layers.37.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[346/543] Writing tensor layers.38.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[347/543] Writing tensor layers.38.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[348/543] Writing tensor layers.38.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[349/543] Writing tensor layers.38.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[350/543] Writing tensor layers.38.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[351/543] Writing tensor layers.38.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[352/543] Writing tensor layers.38.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[353/543] Writing tensor layers.38.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[354/543] Writing tensor layers.38.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[355/543] Writing tensor layers.39.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[356/543] Writing tensor layers.39.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[357/543] Writing tensor layers.39.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[358/543] Writing tensor layers.39.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[359/543] Writing tensor layers.39.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[360/543] Writing tensor layers.39.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[361/543] Writing tensor layers.39.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[362/543] Writing tensor layers.39.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[363/543] Writing tensor layers.39.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[364/543] Writing tensor layers.40.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[365/543] Writing tensor layers.40.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[366/543] Writing tensor layers.40.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[367/543] Writing tensor layers.40.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[368/543] Writing tensor layers.40.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[369/543] Writing tensor layers.40.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[370/543] Writing tensor layers.40.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[371/543] Writing tensor layers.40.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[372/543] Writing tensor layers.40.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[373/543] Writing tensor layers.41.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[374/543] Writing tensor layers.41.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[375/543] Writing tensor layers.41.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[376/543] Writing tensor layers.41.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[377/543] Writing tensor layers.41.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[378/543] Writing tensor layers.41.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[379/543] Writing tensor layers.41.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[380/543] Writing tensor layers.41.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[381/543] Writing tensor layers.41.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[382/543] Writing tensor layers.42.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[383/543] Writing tensor layers.42.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[384/543] Writing tensor layers.42.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[385/543] Writing tensor layers.42.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[386/543] Writing tensor layers.42.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[387/543] Writing tensor layers.42.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[388/543] Writing tensor layers.42.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[389/543] Writing tensor layers.42.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[390/543] Writing tensor layers.42.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[391/543] Writing tensor layers.43.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[392/543] Writing tensor layers.43.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[393/543] Writing tensor layers.43.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[394/543] Writing tensor layers.43.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[395/543] Writing tensor layers.43.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[396/543] Writing tensor layers.43.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[397/543] Writing tensor layers.43.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[398/543] Writing tensor layers.43.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[399/543] Writing tensor layers.43.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[400/543] Writing tensor layers.44.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[401/543] Writing tensor layers.44.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[402/543] Writing tensor layers.44.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[403/543] Writing tensor layers.44.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[404/543] Writing tensor layers.44.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[405/543] Writing tensor layers.44.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[406/543] Writing tensor layers.44.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[407/543] Writing tensor layers.44.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[408/543] Writing tensor layers.44.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[409/543] Writing tensor layers.45.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[410/543] Writing tensor layers.45.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[411/543] Writing tensor layers.45.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[412/543] Writing tensor layers.45.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[413/543] Writing tensor layers.45.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[414/543] Writing tensor layers.45.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[415/543] Writing tensor layers.45.feed_forward.w2.weight | size 6656 x 17920 | type UnquantizedDataType(name='F16')

[416/543] Writing tensor layers.45.feed_forward.w3.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')

[417/543] Writing tensor layers.45.ffn_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[418/543] Writing tensor layers.46.attention.wq.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[419/543] Writing tensor layers.46.attention.wk.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[420/543] Writing tensor layers.46.attention.wv.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[421/543] Writing tensor layers.46.attention.wo.weight | size 6656 x 6656 | type UnquantizedDataType(name='F16')

[422/543] Writing tensor layers.46.attention_norm.weight | size 6656 | type UnquantizedDataType(name='F32')

[423/543] Writing tensor layers.46.feed_forward.w1.weight | size 17920 x 6656 | type UnquantizedDataType(name='F16')