Introduction

In this article we show how to build a deep learning model with PaddlePaddle, using ResNet as the example.

The ResNet model

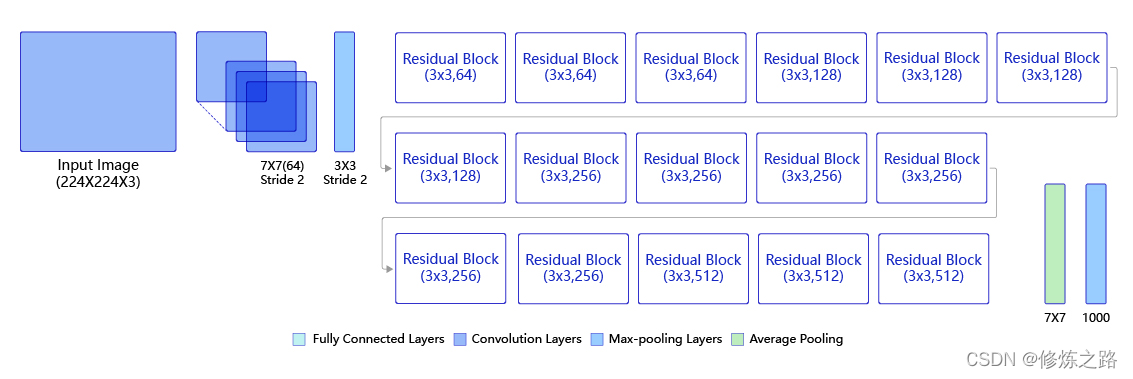

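ResNet was introduced by Kaiming He et al. in the paper "Deep Residual Learning for Image Recognition" (CVPR 2016), and it is still widely used today. Let's look at its architecture.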

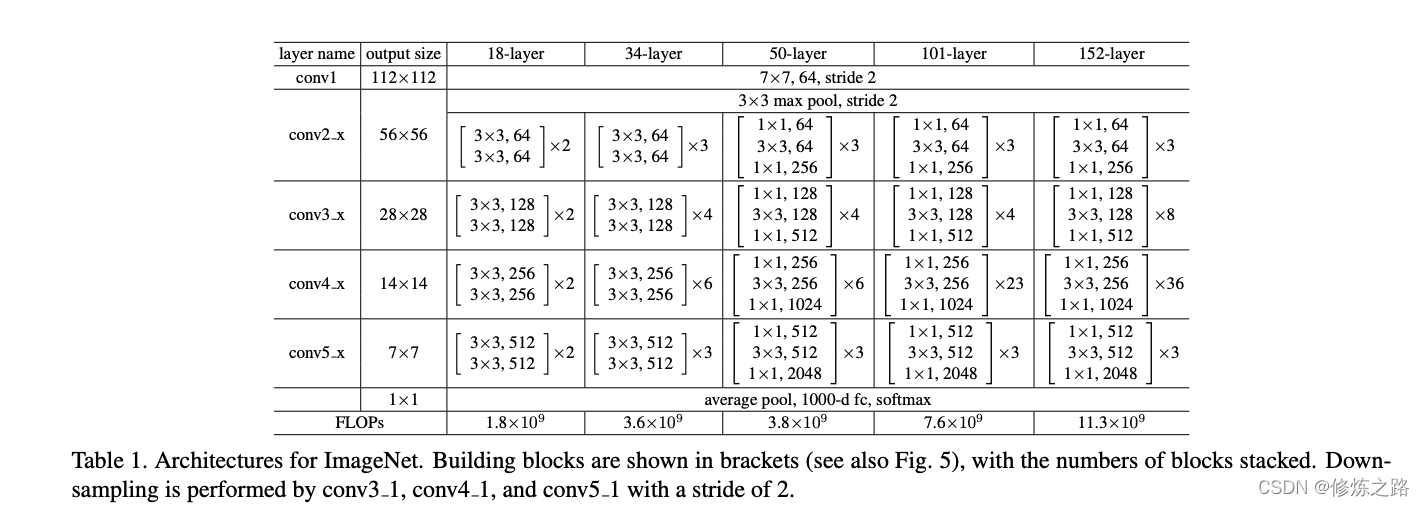

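The paper describes five variants: ResNet18, ResNet34, ResNet50, ResNet101 and ResNet152. The same design can be extended much further; the paper also trains a network with more than 1,000 layers on CIFAR-10. This works because the core component of ResNet, the residual block, keeps very deep networks trainable by mitigating vanishing gradients and the degradation problem.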
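The number in each model's name counts its weighted layers: conv1, every convolution inside the residual blocks, and the final fully connected layer. Pooling, BatchNorm and the 1x1 shortcut projections are conventionally not counted. The minimal sketch below checks this arithmetic. It assumes that ResNet18/34 use two-convolution blocks and ResNet50/101/152 use three-convolution blocks. The per-stage block counts are the same `layer_cfg` values used by the ResNet code later in this article, and `weighted_depth` is a hypothetical helper.

```python
# Blocks per stage (conv2 .. conv5), same values as layer_cfg in the ResNet class
LAYER_CFG = {
    18: [2, 2, 2, 2],
    34: [3, 4, 6, 3],
    50: [3, 4, 6, 3],
    101: [3, 4, 23, 3],
    152: [3, 8, 36, 3],
}


def weighted_depth(depth: int) -> int:
    """conv1 + all convolutions inside the blocks + the final fc layer."""
    convs_per_block = 2 if depth < 50 else 3  # BasicBlock: 2, BottleneckBlock: 3
    return 1 + convs_per_block * sum(LAYER_CFG[depth]) + 1


for d in LAYER_CFG:
    print(d, weighted_depth(d))
```

For example, ResNet50 has 1 + 3 × (3 + 4 + 6 + 3) + 1 = 50 weighted layers.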

As the table above shows, ResNet consists of five convolutional stages followed by a fully connected layer. Let's break them down one by one:

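- conv1: a single 7x7 convolution with 64 output channels and stride 2. It maps a 224x224x3 input image to 112x112x64.

- conv2: a 3x3 max pooling layer with stride 2, followed by a stack of blocks. Output size: 56x56.

- conv3: a stack of blocks. Output size: 28x28.

- conv4: a stack of blocks. Output size: 14x14.

- conv5: a stack of blocks. Output size: 7x7.

- Average pooling and softmax: global average pooling reduces the 7x7 feature map to 1x1. A fully connected layer then produces a 1x1x1000 output, where 1000 is the number of classes (labels). Softmax normalizes these outputs into [0, 1] so that they sum to 1 and can be read as per-class probabilities.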

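Note: a 3x3 convolution with stride 2 also performs downsampling. conv3, conv4 and conv5 have no pooling layer; each of them halves the spatial size this way in its first block.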
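The sizes in the list above follow from the standard output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1. The small sketch below reproduces them. It assumes the kernel, stride and padding values used in this article's code, and `conv_out` is a hypothetical helper, not a Paddle API.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Side length after a conv/pool layer: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1: 7x7, stride 2
print("conv1:", size)
size = conv_out(size, kernel=3, stride=2, padding=1)  # max pool at the start of conv2
print("conv2:", size)
for stage in ["conv3", "conv4", "conv5"]:
    # first block of the stage: stride-2 3x3 conv with padding 1
    size = conv_out(size, kernel=3, stride=2, padding=1)
    print(stage + ":", size)
```

This prints 112, 56, 28, 14 and 7, matching the list. A stride-1 3x3 convolution with padding 1 leaves the size unchanged, so only the first block of each stage affects the spatial size.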

ResNet network architecture diagram

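ResNet can be stacked to hundreds or even thousands of layers mainly because of its residual structure, which effectively mitigates the vanishing-gradient problem. The final output of a residual block is the sum of two parts: the output of its convolutional layers and the block's input (the shortcut connection).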
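The equations below use the notation of the ResNet paper. Let x be the block input and F the mapping computed by the stacked convolutions with weights W_i. The block computes the first line. When the shapes of F(x) and x differ, the shortcut applies a projection W_s instead, which corresponds to the 1x1 `downsample` convolution in the code. The last line sketches why the identity shortcut helps gradients flow: the block's Jacobian always contains an identity term. This sketch covers the identity-shortcut case and ignores the ReLU that the code applies after the addition.

```latex
\begin{aligned}
\mathbf{y} &= \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x} \\
\mathbf{y} &= \mathcal{F}(\mathbf{x}, \{W_i\}) + W_s\,\mathbf{x} \\
\frac{\partial \mathbf{y}}{\partial \mathbf{x}} &= \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I
\end{aligned}
```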

Building the model

ResNet is built from two kinds of blocks. ResNet18 and ResNet34 use BasicBlock, while ResNet50, ResNet101 and ResNet152 use BottleneckBlock.

- BasicBlock

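```python
import paddle
from paddle import nn


class BasicBlock(nn.Layer):
    # The last conv outputs `channels` channels (no expansion)
    expansion = 1

    def __init__(self, inchannels, channels, stride=1, downsample=None,
                 groups=1, base_width=64, dilation=1, norm_layer=None):
        """Block used by ResNet18 and ResNet34.

        :param inchannels: number of input channels of the block
        :param channels: number of output channels of the block
        :param stride: stride of the first convolution
        :param downsample: optional layer applied to the shortcut so its shape matches the main path
        :param groups: unused by BasicBlock; kept so both block types share one signature
        :param base_width: unused by BasicBlock; kept so both block types share one signature
        :param dilation: must be 1
        :param norm_layer: normalization layer, defaults to nn.BatchNorm2D
        """
        super(BasicBlock, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2D
        if dilation > 1:
            raise NotImplementedError("BasicBlock does not support dilation > 1")

        # bias_attr=False: the conv has no bias term (the following BatchNorm supplies a shift)
        self.conv1 = nn.Conv2D(inchannels, channels, 3, padding=1, stride=stride, bias_attr=False)
        self.bn1 = norm_layer(channels)
        # stride defaults to 1; kernel_size 3 with padding 1 is a "same" convolution
        self.conv2 = nn.Conv2D(channels, channels, 3, padding=1, bias_attr=False)
        self.bn2 = norm_layer(channels)
        self.relu = nn.ReLU()
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x

        # first conv layer of the block
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        # second conv layer of the block
        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        # residual connection: add the shortcut to the conv output
        out += identity
        out = self.relu(out)
        return out
```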

BasicBlock has a simple structure with three parts: two 3x3 convolutional layers and a residual (shortcut) connection.
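The two branches are added element-wise, so the main path and the shortcut must end up with the same shape. The minimal shape-tracing sketch below tracks only channels and side length, with no real tensors, and `basic_block_shapes` is a hypothetical helper. It shows two cases. A stride-1 block with unchanged channels can use the identity shortcut. A stride-2 block that doubles the channels needs the 1x1 projection with the same stride, which the ResNet class's `_make_layer` builds as `downsample`.

```python
def conv_out(size, kernel, stride, padding):
    """Side length after a conv layer: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def basic_block_shapes(in_ch, out_ch, size, stride):
    """Return (main path shape, shortcut shape, whether a projection was needed)."""
    # main path: 3x3 conv (given stride, padding 1), then 3x3 conv (stride 1, padding 1)
    main = (out_ch, conv_out(conv_out(size, 3, stride, 1), 3, 1, 1))
    shortcut = (in_ch, size)  # identity shortcut leaves the input unchanged
    needs_projection = main != shortcut
    if needs_projection:
        # 1x1 conv with the same stride, like the downsample built in _make_layer
        shortcut = (out_ch, conv_out(size, 1, stride, 0))
    return main, shortcut, needs_projection


print(basic_block_shapes(64, 64, 56, 1))   # first block of conv2 (BasicBlock)
print(basic_block_shapes(64, 128, 56, 2))  # first block of conv3
```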

- BottleneckBlock

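```python
class BottleneckBlock(nn.Layer):
    # The last 1x1 conv outputs channels * 4 channels
    expansion = 4

    def __init__(self, inchannels, channels, stride=1, downsample=None,
                 groups=1, base_width=64, dilation=1, norm_layer=None):
        """Block used by ResNet50, ResNet101 and ResNet152.

        :param inchannels: number of input channels of the block
        :param channels: bottleneck width; the block outputs channels * expansion channels
        :param stride: stride of the 3x3 convolution
        :param downsample: optional layer applied to the shortcut so its shape matches the main path
        :param groups: number of groups of the 3x3 convolution
        :param base_width: base width used to compute the bottleneck width
        :param dilation: dilation of the 3x3 convolution
        :param norm_layer: normalization layer, defaults to nn.BatchNorm2D
        """
        super(BottleneckBlock, self).__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2D
        # width of the inner layers; equals `channels` when groups=1 and base_width=64
        width = int(channels * (base_width / 64)) * groups

        # 1x1 conv: reduce the number of channels
        self.conv1 = nn.Conv2D(inchannels, width, 1, bias_attr=False)
        self.bn1 = norm_layer(width)
        # 3x3 conv: carries the stride (downsampling) and the optional dilation/groups
        self.conv2 = nn.Conv2D(width, width, 3,
                               padding=dilation,
                               stride=stride,
                               dilation=dilation,
                               groups=groups,
                               bias_attr=False)
        self.bn2 = norm_layer(width)
        # 1x1 conv: expand back to channels * expansion
        self.conv3 = nn.Conv2D(width, channels * self.expansion, 1, bias_attr=False)
        self.bn3 = norm_layer(channels * self.expansion)
        self.relu = nn.ReLU()
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        identity = x

        # first conv layer (1x1)
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        # second conv layer (3x3)
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        # third conv layer (1x1)
        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            identity = self.downsample(x)

        # residual connection: add the shortcut to the conv output
        out += identity
        out = self.relu(out)
        return out
```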

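Compared with BasicBlock, BottleneckBlock replaces the two 3x3 convolutions with three convolutions. A 1x1 convolution first reduces the number of channels, a 3x3 convolution follows (64 channels in conv2), and a final 1x1 convolution expands the channels again. Because `expansion = 4`, the last convolution outputs 4 times as many channels as a BasicBlock at the same stage; in conv2, for example, it outputs 256 channels instead of 64.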
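The bottleneck design keeps the cost of a block close to that of a BasicBlock even though its input and output are 4 times wider. The rough sketch below compares convolution weight counts in conv2. It assumes bias-free convolutions (bias_attr=False, as in the code above) and ignores BatchNorm parameters; `conv_weights` is a hypothetical helper.

```python
def conv_weights(cin, cout, k):
    """Weight count of a bias-free k x k convolution."""
    return cin * cout * k * k


# BasicBlock in conv2: 64 -> 64 -> 64, two 3x3 convolutions
basic = conv_weights(64, 64, 3) + conv_weights(64, 64, 3)

# BottleneckBlock in conv2: 256 -> 64 (1x1) -> 64 (3x3) -> 256 (1x1)
bottleneck = (conv_weights(256, 64, 1)
              + conv_weights(64, 64, 3)
              + conv_weights(64, 256, 1))

print(basic, bottleneck)  # 73728 69632
```

Both blocks come to roughly 70K weights, but the bottleneck version operates on 256-channel feature maps instead of 64-channel ones.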

- The ResNet network

```python
class ResNet(nn.Layer):
    def __init__(self, block, depth, num_classes=1000, with_pool=True):
        super(ResNet, self).__init__()
        # number of blocks in conv2 .. conv5 for each depth
        layer_cfg = {
            18: [2, 2, 2, 2],
            34: [3, 4, 6, 3],
            50: [3, 4, 6, 3],
            101: [3, 4, 23, 3],
            152: [3, 8, 36, 3]
        }
        layers = layer_cfg[depth]
        self.num_classes = num_classes
        self.with_pool = with_pool
        self._norm_layer = nn.BatchNorm2D

        self.inchannels = 64
        self.dilation = 1

        # conv1: 7x7 conv, stride 2 -> 112x112x64
        self.conv1 = nn.Conv2D(3, self.inchannels, kernel_size=7,
                               stride=2, padding=3, bias_attr=False)
        self.bn1 = self._norm_layer(self.inchannels)
        self.relu = nn.ReLU()
        # 3x3 max pool, stride 2 -> 56x56
        self.maxpool = nn.MaxPool2D(kernel_size=3, stride=2, padding=1)
        # conv2 .. conv5; stages 3-5 halve the spatial size in their first block
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

        if with_pool:
            # global average pooling -> 1x1
            self.avgpool = nn.AdaptiveAvgPool2D((1, 1))
        if num_classes > 0:
            self.fc = nn.Linear(512 * block.expansion, num_classes)

    def _make_layer(self, block, channels, blocks, stride=1, dilate=False):
        norm_layer = self._norm_layer
        downsample = None
        previous_dilation = self.dilation
        if dilate:
            self.dilation *= stride
            stride = 1
        # the shortcut needs a 1x1 projection when the spatial size or channel count changes
        if stride != 1 or self.inchannels != channels * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2D(
                    self.inchannels,
                    channels * block.expansion,
                    kernel_size=1,
                    stride=stride,
                    bias_attr=False
                ),
                norm_layer(channels * block.expansion)
            )

        layers = []
        # only the first block of a stage changes the stride / channel count
        layers.append(block(self.inchannels, channels, stride, downsample, 1, 64,
                            previous_dilation, norm_layer))
        self.inchannels = channels * block.expansion
        for _ in range(1, blocks):
            layers.append(block(self.inchannels, channels, norm_layer=norm_layer))
        return nn.Sequential(*layers)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)

        if self.with_pool:
            x = self.avgpool(x)
        if self.num_classes > 0:
            x = paddle.flatten(x, 1)
            x = self.fc(x)
        return x
```
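The downsample condition in `_make_layer` (stride != 1, or inchannels != channels * expansion) can be traced stage by stage without building the model. The small pure-Python sketch below mirrors only that channel bookkeeping; `stage_plan` is a hypothetical helper.

```python
def stage_plan(expansion):
    """For each stage, return (input channels, output channels, needs downsample)."""
    inchannels = 64  # channels after conv1 and the max pool
    plan = []
    for channels, stride in [(64, 1), (128, 2), (256, 2), (512, 2)]:
        out_channels = channels * expansion
        needs_downsample = stride != 1 or inchannels != out_channels
        plan.append((inchannels, out_channels, needs_downsample))
        inchannels = out_channels  # later blocks in the stage see out_channels
    return plan


print(stage_plan(1))  # BasicBlock
print(stage_plan(4))  # BottleneckBlock
```

With BasicBlock, conv2 keeps 64 channels and stride 1, so its first block uses the identity shortcut. With BottleneckBlock, even conv2 needs a projection, because 64 input channels must become 256. In both cases the final channel count, 512 * expansion, is the input size of `self.fc`.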

- Inspecting the ResNet architecture

```python
from paddle import summary

resnet18 = ResNet(BasicBlock, 18)
summary(resnet18, (1, 3, 224, 224))
```
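As a rough cross-check of the summary output, the trainable parameters can also be counted by hand. The sketch below makes these assumptions:

- Convolutions are bias-free (bias_attr=False, as in the code).
- Each BatchNorm layer contributes a trainable scale and shift; its running mean and variance buffers are excluded.
- The fc layer has a weight and a bias.

`resnet_params` is a hypothetical helper. `paddle.summary` may report the BatchNorm statistics differently, so compare against its trainable-parameter figure with that in mind.

```python
# Blocks per stage (conv2 .. conv5), same values as layer_cfg in the ResNet class
LAYER_CFG = {18: [2, 2, 2, 2], 34: [3, 4, 6, 3], 50: [3, 4, 6, 3],
             101: [3, 4, 23, 3], 152: [3, 8, 36, 3]}


def conv(cin, cout, k):
    """Weights of a bias-free k x k convolution."""
    return cin * cout * k * k


def bn(c):
    """Trainable BatchNorm parameters: scale and shift (running stats excluded)."""
    return 2 * c


def resnet_params(depth, num_classes=1000):
    bottleneck = depth >= 50          # ResNet50/101/152 use BottleneckBlock
    expansion = 4 if bottleneck else 1
    total = conv(3, 64, 7) + bn(64)   # conv1 + bn1
    inch = 64
    stages = zip([64, 128, 256, 512], LAYER_CFG[depth])
    for stage, (ch, blocks) in enumerate(stages):
        stride = 1 if stage == 0 else 2
        out = ch * expansion
        for i in range(blocks):
            if bottleneck:
                # 1x1 reduce -> 3x3 -> 1x1 expand (width == ch for groups=1, base_width=64)
                total += conv(inch, ch, 1) + bn(ch)
                total += conv(ch, ch, 3) + bn(ch)
                total += conv(ch, out, 1) + bn(out)
            else:
                # two 3x3 convolutions
                total += conv(inch, ch, 3) + bn(ch)
                total += conv(ch, ch, 3) + bn(ch)
            if i == 0 and (stride != 1 or inch != out):
                total += conv(inch, out, 1) + bn(out)  # 1x1 downsample on the shortcut
            inch = out
    total += 512 * expansion * num_classes + num_classes  # fc weight + bias
    return total


for d in (18, 34, 50):
    print(f"ResNet{d}: {resnet_params(d):,}")
```

For ResNet18 this gives 11,689,512, in line with the commonly quoted figure of about 11.7M parameters.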