参考:mmpose/projects/rtmpose at main · open-mmlab/mmpose · GitHub

MMDeploy 提供了一系列工具,帮助我们更轻松的将 OpenMMLab 下的算法部署到各种设备与平台上。目前,MMDeploy 可以把 PyTorch 模型转换为 ONNX、TorchScript 等和设备无关的 IR 模型。也可以将 ONNX 模型转换为推理后端模型。两者相结合,可实现端到端的模型转换,也就是从训练端到生产端的一键式部署。

一、模型转换

We provide both Python and C++ inference API with MMDeploy SDK.To use SDK, you need to dump the required info during converting the model. Just add --dump-info to the model conversion command.(MMDeploy sdk提供了python和c++的推理api,在使用api进行推理前需要在pc上进行相应文件的转换,如pth->onnx,pth->ncnn...)

将rtm中的hand_detection模型转为onnx输出,输出后文件夹如下:因为我没装tensorrt,故没有end2end.engine文件,做ncnn部署。

|----{work-dir}

|----end2end.onnx # ONNX model

|----end2end.bin # ncnn model

|----end2end.param # ncnn model

|----pipeline.json #

|----deploy.json # json files for the SDK

|----detail.json #

二、模型SDK推理

1、定义模型位置和输入

DEFINE_string(det_model, "../model/rtm/rtmdet", "Decection model directory");

DEFINE_string(image, "../images/test.bmp", "Input image path");

DEFINE_string(device, "cpu", R"(Device name, e.g. "cpu", "cuda")");

DEFINE_double(det_thr, .5, "Detection score threshold");

2、进行推理,得到预测置信度并截取bbox中的手掌作为后续关键点检测的输入。

// hand detection

mmdeploy::Detector detector(mmdeploy::Model{FLAGS_det_model}, mmdeploy::Device{FLAGS_device});

mmdeploy::Detector::Result dets = detector.Apply(img);

// get the max socre detection

mmdeploy_detection_t target_det = dets[0];

std::cout << "detection max score: " << target_det.score << std::endl;

if (target_det.score < FLAGS_det_thr) {

std::cout << "failed detect hand." << std::endl;

return 0;

}

std::cout << "successfully detect hand." << std::endl;

// crop detection result

cv::Point2f tl(target_det.bbox.left, target_det.bbox.top);

cv::Point2f br(target_det.bbox.right, target_det.bbox.bottom);

cv::Mat det_crop = img(cv::Rect(tl, br)).clone();

cv::imwrite("../images/det_crop.bmp", det_crop);

3、可视化bbox

// visualize detection

utils::Visualize v;

auto det_sess = v.get_session(img);

det_sess.add_det(target_det.bbox, target_det.label_id, target_det.score, target_det.mask, 0);

cv::imwrite("../images/det_out.bmp", det_sess.get());

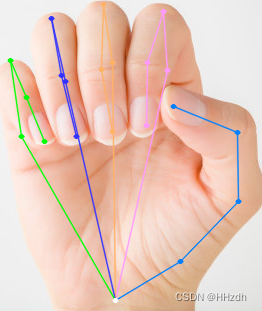

4、做手部关键点预测,同理将crop得到的手部图片作为rtm-pose的输入即可。

// pose detection

mmdeploy::PoseDetector pose_detector(mmdeploy::Model{FLAGS_pose_model}, mmdeploy::Device{FLAGS_device});

mmdeploy::PoseDetector::Result pose_dets = pose_detector.Apply(det_crop);

mmdeploy_pose_detection_t target_pose = pose_dets[0];可视化:

v.set_skeleton(utils::Skeleton::get("coco-wholebody-hand"));

auto pose_sess = v.get_session(det_crop);

pose_sess.add_pose(target_pose.point, target_pose.score, target_pose.length, 0.);

cv::imwrite("../images/pose_out.bmp", pose_sess.get());