Application background

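To improve the model's robustness and recognition accuracy, we work on the visual representation. The YOLOv8 backbone is first pre-trained with self-supervised learning on unlabeled images of the target categories, and is then fine-tuned on the object detection task.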

Image crawler

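Crawl images of your target categories: the more, the better. The script queries Baidu Image Search and saves the returned thumbnails to ./images.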

import os
import re
import uuid

import requests
from fake_useragent import UserAgent

headers = {
    "User-Agent": UserAgent().random,  # random browser User-Agent string (not a proxy)
    "Accept-Encoding": "gzip, deflate, br",
    "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6",
    "Connection": "keep-alive",
}

img_re = re.compile('"thumbURL":"(.*?)"')  # thumbnail URLs in the JSON response
SAVE_DIR = "./images"


def file_op(img):
    """Save raw image bytes under a random UUID file name."""
    os.makedirs(SAVE_DIR, exist_ok=True)
    tmp_file_name = os.path.join(SAVE_DIR, "%s.jpeg" % uuid.uuid4().hex)
    with open(tmp_file_name, mode="wb") as file:
        file.write(img)


def xhr_url(url_xhr, start_num=0, page=5):
    """Fetch `page` result pages (30 images each), starting from 0-based page `start_num`."""
    for page_num in range(start_num * 30, (start_num + page) * 30, 30):  # pn is an image offset
        resp = requests.get(url=url_xhr + str(page_num), headers=headers, timeout=10)
        if resp.status_code != 200:
            break
        img_url_list = img_re.findall(resp.text)  # list of image URLs
        for img_url in img_url_list:
            try:
                img_rsp = requests.get(url=img_url, headers=headers, timeout=10)
                img_rsp.raise_for_status()
                file_op(img=img_rsp.content)
            except requests.RequestException:
                continue  # skip images that fail to download
    print("All content has been crawled")


if __name__ == "__main__":
    org_url = "https://image.baidu.com/search/acjson?tn=resultjson_com&word={text}&pn=".format(
        text=input("Search keyword: "))
    xhr_url(url_xhr=org_url, start_num=int(input("Start page (0-based): ")),
            page=int(input("Number of pages to crawl: ")))
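Crawled results often contain duplicates. They can also contain files that are not images at all, such as an HTML error page saved with a .jpeg name, and these can break dataset loading later.

The following is a minimal cleanup sketch that uses only the standard library. The function names `looks_like_image` and `clean_image_dir` and the list of file signatures are our own assumptions and are not part of the original pipeline.

```python
import hashlib
import os

# Leading "magic" bytes of common image formats (assumed list; extend as needed).
IMAGE_SIGNATURES = (
    b"\xff\xd8\xff",        # JPEG
    b"\x89PNG\r\n\x1a\n",   # PNG
    b"GIF87a", b"GIF89a",   # GIF
)


def looks_like_image(data: bytes) -> bool:
    """Return True if the bytes start with a known image signature."""
    if data.startswith(IMAGE_SIGNATURES):
        return True
    # WebP: "RIFF" + 4-byte size + "WEBP"
    return data[:4] == b"RIFF" and data[8:12] == b"WEBP"


def clean_image_dir(folder: str) -> dict:
    """Delete exact duplicates (same SHA-256) and files that are not images."""
    seen = set()
    stats = {"kept": 0, "duplicate": 0, "invalid": 0}
    for name in sorted(os.listdir(folder)):
        path = os.path.join(folder, name)
        if not os.path.isfile(path):
            continue
        with open(path, "rb") as f:
            data = f.read()
        digest = hashlib.sha256(data).hexdigest()
        if not looks_like_image(data):
            os.remove(path)
            stats["invalid"] += 1
        elif digest in seen:
            os.remove(path)
            stats["duplicate"] += 1
        else:
            seen.add(digest)
            stats["kept"] += 1
    return stats
```

Run `clean_image_dir("./images")` after the crawler finishes. It returns the number of kept, duplicate and invalid files. Byte-level hashing only catches exact duplicates. Near-duplicates, such as resized copies, would need perceptual hashing.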

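YOLOv8 backbone

The backbone used for pre-training is the YOLOv8 detection model truncated after layer 8, followed by global average pooling.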

# This class is meant to live in ultralytics/nn/tasks.py (the later scripts import it from there),
# where BaseModel, parse_model, yaml_model_load, initialize_weights, LOGGER, deepcopy and nn are available.
class YoloBackbone(BaseModel):
    """YOLOv8 backbone (layers 0-8) used as a feature extractor for self-supervised pre-training."""

    def __init__(self, cfg='yolov8n.yaml', ch=3, nc=None, verbose=True):  # model, input channels, number of classes
        super().__init__()
        self.yaml = cfg if isinstance(cfg, dict) else yaml_model_load(cfg)  # cfg dict

        # Define model
        ch = self.yaml['ch'] = self.yaml.get('ch', ch)  # input channels
        if nc and nc != self.yaml['nc']:
            LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")
            self.yaml['nc'] = nc  # override yaml value
        self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose)  # model, savelist
        self.names = {i: f'{i}' for i in range(self.yaml['nc'])}  # default names dict
        self.inplace = self.yaml.get('inplace', True)

        # Keep only backbone layers 0-8 (SPPF at index 9 and the Detect head are dropped)
        self.model = self.model[:9]
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))  # global average pooling -> (B, C, 1, 1)
        # self.fc = nn.Linear(512, nc)

        # Init weights, biases
        initialize_weights(self)
        if verbose:
            self.info()
            LOGGER.info('')

    def forward(self, x, augment=False, profile=False, visualize=False):
        # Layers 0-8 each take the previous layer's output (from=-1), so a plain loop suffices
        for m in self.model:
            x = m(x)  # run
        return self.avgpool(x)  # pooled feature of shape (B, C, 1, 1)
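`self.model[:9]` keeps layers 0-8 of the backbone, so the size of the pooled feature depends on the model scale. The sketch below recomputes the channel widths the way we understand ultralytics' `parse_model` scales them: `make_divisible(min(c, max_channels) * width, 8)`. It uses the backbone channels and scale table from `yolov8.yaml` as we recall them, so treat the constants as assumptions to check against your copy of the YAML. The helper names are hypothetical.

```python
import math

# Output channels of YOLOv8 backbone layers 0-9 as written in yolov8.yaml (before scaling).
BACKBONE_CHANNELS = [64, 128, 128, 256, 256, 512, 512, 1024, 1024, 1024]
# Cumulative stride after each layer (layers 0, 1, 3, 5 and 7 are stride-2 convolutions).
BACKBONE_STRIDES = [2, 4, 4, 8, 8, 16, 16, 32, 32, 32]
# (depth_multiple, width_multiple, max_channels) per model scale.
SCALES = {
    "n": (0.33, 0.25, 1024),
    "s": (0.33, 0.50, 1024),
    "m": (0.67, 0.75, 768),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.25, 512),
}


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def backbone_out_channels(scale: str) -> list:
    """Scaled output channels of each backbone layer for one model scale."""
    _, width, max_channels = SCALES[scale]
    return [make_divisible(min(c, max_channels) * width) for c in BACKBONE_CHANNELS]


def feature_shape(scale: str, layer: int, img_size: int = 640) -> tuple:
    """(channels, height, width) of a backbone layer's output for a square input."""
    side = img_size // BACKBONE_STRIDES[layer]
    return (backbone_out_channels(scale)[layer], side, side)
```

For yolov8s the layer-8 output has 512 channels. That is why the pre-training script builds the projection head as `TiCoProjectionHead(512, 1024, 256)`. With yolov8n (256), yolov8m (576) or yolov8x (640), the first argument would have to change.

The same helper gives the spatial sizes. At the 32x32 input used during pre-training, layer 8 is already reduced to 1x1.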

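Self-supervised pre-training of the model

The backbone is pre-trained with TiCo from the lightly library, using a momentum (EMA) copy of the network as the second branch.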

# Note: The model and training settings do not follow the reference settings
# from the paper. The settings are chosen such that the example can easily be
# run on a small dataset with a single GPU.
import argparse
import copy

import torch
import torchvision
from torch import nn

from lightly.data import LightlyDataset
from lightly.data.multi_view_collate import MultiViewCollate
from lightly.loss.tico_loss import TiCoLoss
from lightly.models.modules.heads import TiCoProjectionHead
from lightly.models.utils import deactivate_requires_grad, update_momentum
from lightly.transforms.simclr_transform import SimCLRTransform
from lightly.utils.scheduler import cosine_schedule


class TiCo(nn.Module):
    def __init__(self, backbone):
        super().__init__()
        self.backbone = backbone
        # 512 = output channels of the yolov8s backbone (layer 8); hidden dim 1024, output dim 256
        self.projection_head = TiCoProjectionHead(512, 1024, 256)
        # Momentum (EMA) copies of backbone and head; the optimizer never updates them
        self.backbone_momentum = copy.deepcopy(self.backbone)
        self.projection_head_momentum = copy.deepcopy(self.projection_head)
        deactivate_requires_grad(self.backbone_momentum)
        deactivate_requires_grad(self.projection_head_momentum)

    def forward(self, x):
        y = self.backbone(x).flatten(start_dim=1)
        z = self.projection_head(y)
        return z

    def forward_momentum(self, x):
        y = self.backbone_momentum(x).flatten(start_dim=1)
        z = self.projection_head_momentum(y)
        z = z.detach()
        return z


if __name__ == "__main__":
    from ultralytics.nn.tasks import YoloBackbone

    parser = argparse.ArgumentParser()
    parser.add_argument('--cfg', type=str, default='yolov8s.yaml', help='model.yaml')
    parser.add_argument('--batch-size', type=int, default=1, help='total batch size for all GPUs')
    parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')
    parser.add_argument('--profile', action='store_true', help='profile model speed')
    parser.add_argument('--line-profile', action='store_true', help='profile model speed layer by layer')
    parser.add_argument('--test', action='store_true', help='test all yolo*.yaml')
    opt = parser.parse_args()

    # Create model
    backbone = YoloBackbone(opt.cfg, ch=3, nc=3)
    model = TiCo(backbone)
    device = "cuda" if torch.cuda.is_available() else "cpu"
    model.to(device)

    transform = SimCLRTransform(input_size=32)
    # To train on CIFAR-10 instead:
    # cifar10 = torchvision.datasets.CIFAR10("datasets/cifar10", download=True)
    # dataset = LightlyDataset.from_torch_dataset(cifar10, transform=transform)
    # or create a dataset from a folder containing images or videos (e.g. the crawled images):
    dataset = LightlyDataset("/data/ssl", transform=transform)
    collate_fn = MultiViewCollate()
    dataloader = torch.utils.data.DataLoader(
        dataset,
        batch_size=256,
        collate_fn=collate_fn,
        shuffle=True,
        drop_last=True,
        num_workers=8,
    )

    criterion = TiCoLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.06)
    epochs = 10
    best_avg_loss = float("inf")  # lowest average epoch loss seen so far

    print("Starting Training")
    for epoch in range(epochs):
        total_loss = 0.0
        momentum_val = cosine_schedule(epoch, epochs, 0.996, 1)
        for (x0, x1), _, _ in dataloader:
            # EMA update of the momentum branch
            update_momentum(model.backbone, model.backbone_momentum, m=momentum_val)
            update_momentum(model.projection_head, model.projection_head_momentum, m=momentum_val)
            x0 = x0.to(device)
            x1 = x1.to(device)
            z0 = model(x0)                   # online branch
            z1 = model.forward_momentum(x1)  # momentum branch (no gradient)
            loss = criterion(z0, z1)
            total_loss += loss.item()
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()
        avg_loss = total_loss / len(dataloader)
        print(f"epoch: {epoch:>02}, loss: {avg_loss:.5f}")
        # Save only the backbone weights whenever the average loss improves
        if avg_loss < best_avg_loss:
            torch.save(model.backbone.state_dict(), "best_yoloV8_Backbone.pth")
            print(f"Finding optimal model params. Loss is dropping from {best_avg_loss:.4f} to {avg_loss:.4f}")
            best_avg_loss = avg_loss
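For reference, TiCo (Transformation Invariance and Covariance Contrast) combines two parts:

- an invariance term between the two views;
- a covariance-contrast penalty computed from a running covariance matrix.

The equations below follow our understanding of the formulation and of lightly's `TiCoLoss`, and are not copied from its source. N is the batch size, z^a_i is the online embedding (`z0`) and z^b_i is the momentum embedding (`z1`).

```latex
\hat z^{a}_i = \frac{z^{a}_i}{\lVert z^{a}_i \rVert_2}, \qquad
\hat z^{b}_i = \frac{z^{b}_i}{\lVert z^{b}_i \rVert_2}

C_t = \beta\, C_{t-1} + (1-\beta)\,\frac{1}{N}\sum_{i=1}^{N} \hat z^{a}_i \,(\hat z^{a}_i)^{\top}

\mathcal{L}_{\mathrm{TiCo}} = -\frac{1}{N}\sum_{i=1}^{N} (\hat z^{a}_i)^{\top}\hat z^{b}_i
  + \frac{\rho}{N}\sum_{i=1}^{N} (\hat z^{a}_i)^{\top} C_t\, \hat z^{a}_i
```

Here β controls how quickly the covariance estimate C_t follows the current batch, and ρ weights the covariance term. Check the lightly documentation for their default values.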

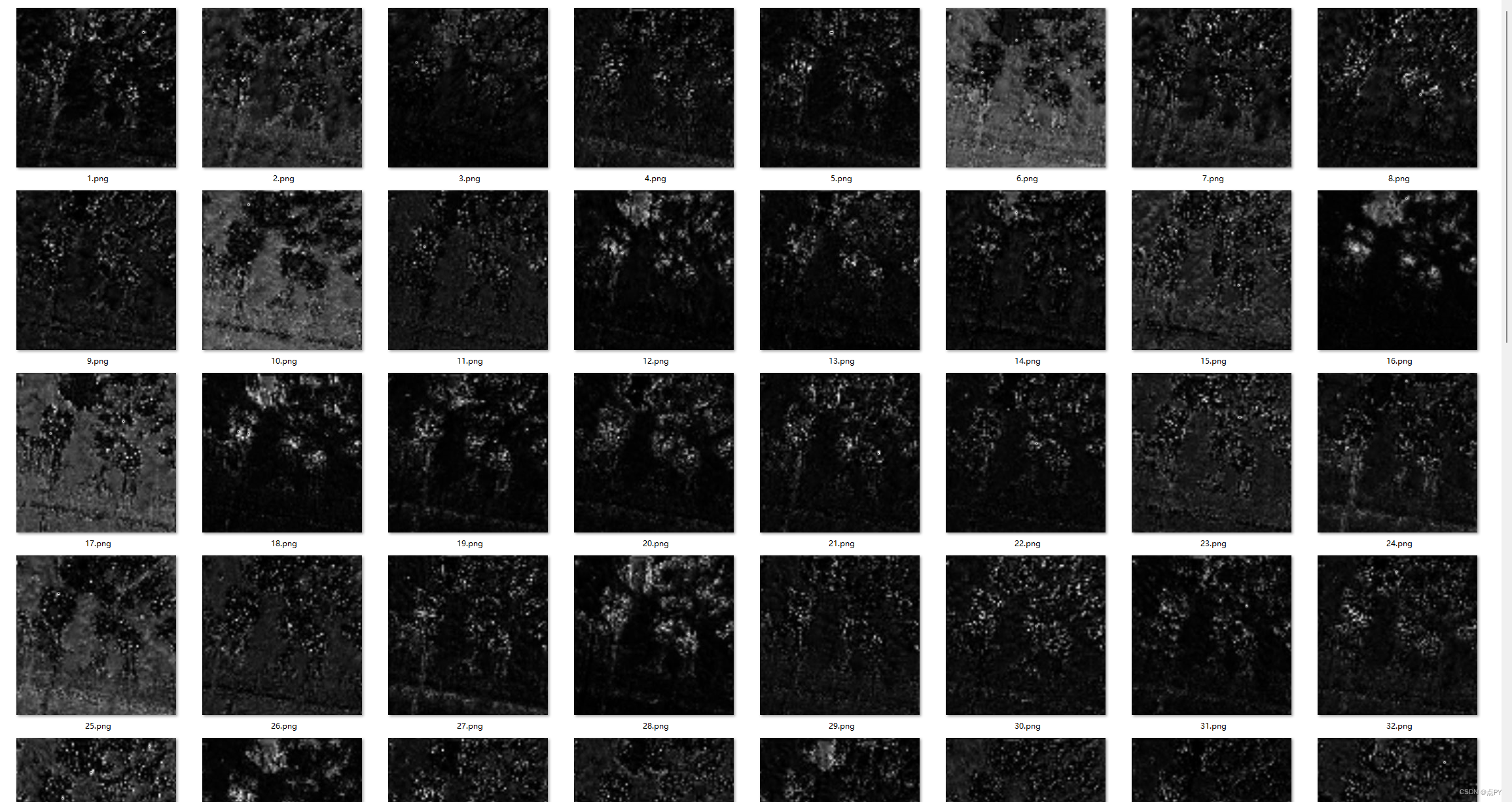

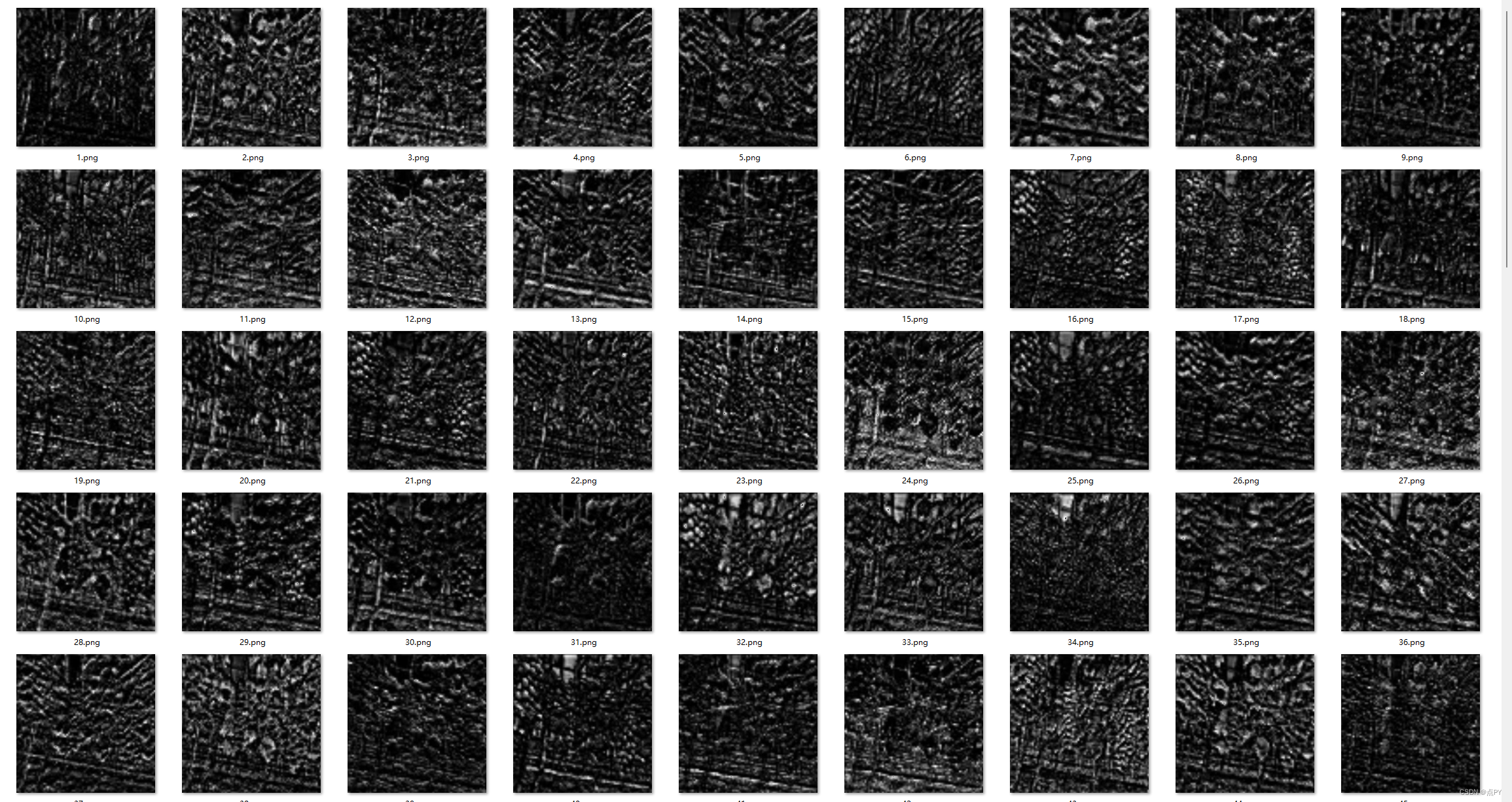
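The momentum branch is an exponential moving average (EMA) of the online branch. Its momentum `m` follows `cosine_schedule(epoch, epochs, 0.996, 1)`.

The plain-Python sketch below reproduces that behaviour under two assumptions:

- lightly's `cosine_schedule` ramps along a half cosine, from the start value at step 0 to the end value at step `max_steps - 1`;
- `update_momentum` applies `m * theta_momentum + (1 - m) * theta_online`.

`cosine_momentum` and `ema_update` are hypothetical names, not lightly functions.

```python
import math


def cosine_momentum(step: int, max_steps: int, start: float = 0.996, end: float = 1.0) -> float:
    """Half-cosine ramp from `start` (step 0) to `end` (step max_steps - 1)."""
    if max_steps == 1:
        return end
    return end - (end - start) * (math.cos(math.pi * step / (max_steps - 1)) + 1) / 2


def ema_update(online: list, momentum: list, m: float) -> list:
    """EMA update of the momentum parameters: theta_m <- m * theta_m + (1 - m) * theta_online."""
    return [m * tm + (1 - m) * to for to, tm in zip(online, momentum)]


epochs = 10
schedule = [round(cosine_momentum(e, epochs), 6) for e in range(epochs)]
print(schedule[0], schedule[-1])  # 0.996 1.0
```

With `epochs = 10` the momentum rises from 0.996 to exactly 1.0 in the last epoch, so the momentum branch no longer changes during that epoch. Because the training script evaluates the schedule once per epoch rather than once per iteration, the momentum changes in only 10 coarse steps.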

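Visualizing intermediate feature maps

Target image

Feature maps of the randomly initialized backbone

Feature maps of the self-supervised pre-trained backbone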

import os

import cv2
import numpy as np
import torch
import torchvision
from PIL import Image

# 1. Inspect the model
# print(model)  # list the layers of the network
# model_features = list(model.children())
# print(model_features[0][3])  # the 4th layer of the first Sequential()
# for index, layer in enumerate(model_features[0]):
#     print(layer)


# 2. Load the image
# Open the image in RGB mode. The PyTorch DataLoader works with PIL images, so reading
# images this way is recommended; convert('RGB') also turns grayscale images into 3 channels.
def get_image_info(image_dir):
    image_info = Image.open(image_dir).convert('RGB')  # a single PIL image
    # Preprocessing
    image_transform = torchvision.transforms.Compose([
        torchvision.transforms.Resize((640, 640)),
        torchvision.transforms.ToTensor(),
        # torchvision.transforms.Normalize(
        #     mean=lightly.data.collate.imagenet_normalize['mean'],
        #     std=lightly.data.collate.imagenet_normalize['std'],
        # )
    ])
    image_info = image_transform(image_info)  # torch.Size([3, 640, 640])
    image_info = image_info.unsqueeze(0)  # torch.Size([1, 3, 640, 640]); the model expects a 4-D batch
    return image_info  # tensor


# 3. Get the feature map produced by layer k
def get_k_layer_feature_map(model_layer, k, x):
    """
    args:
        model_layer: an nn.Sequential of layers (here the YOLOv8 backbone)
        k: index of the layer whose output feature map is returned
        x: image tensor of shape (1, 3, H, W)
    """
    with torch.no_grad():
        for index, layer in enumerate(model_layer):  # run the layers one by one
            x = layer(x)
            if k == index:
                return x


# 4. Visualize the feature map: save every channel as a grayscale PNG
def show_feature_map(feature_map, outRoot):  # feature_map: torch.Size([1, C, H, W])
    # Resize to 256x256 with bilinear interpolation so every layer is saved at the same size
    upsample = torch.nn.UpsamplingBilinear2d(size=(256, 256))
    feature_map = upsample(feature_map).squeeze(0)  # (C, 256, 256)
    feature_map_num = feature_map.shape[0]  # number of channels
    for index in range(1, feature_map_num + 1):  # save each channel separately
        feature_mask = feature_map[index - 1].numpy()
        # Min-max normalize to [0, 255]; the epsilon avoids division by zero for constant channels
        feature_mask = (feature_mask - feature_mask.min()) / (feature_mask.max() - feature_mask.min() + 1e-8)
        feature_mask = (feature_mask * 255).astype(np.uint8)
        cv2.imwrite(os.path.join(outRoot, f"{index}.png"), feature_mask)


if __name__ == '__main__':
    from ultralytics.nn.tasks import YoloBackbone

    image_dir = r"D:\projects\yolo_ssl\ultralytics-main\res\grape_356.jpg"
    SSLModelPath = r"D:\projects\yolo_ssl\ultralytics-main\res\best_yoloV8_Backbone.pth"

    image_info = get_image_info(image_dir)
    model = YoloBackbone('yolov8s.yaml', ch=3, nc=3)
    # Comment out the next two lines to get the feature maps of the randomly initialized backbone
    state = torch.load(SSLModelPath, map_location="cpu")
    model.load_state_dict(state, strict=False)
    model.eval()  # use BatchNorm running statistics instead of single-image batch statistics
    model_layer = model.model

    # Layers whose feature maps are extracted
    for k in range(1, 5):
        outRoot = f"feature_map_yolov8_ssl3_layer{k}"
        os.makedirs(outRoot, exist_ok=True)
        feature_map = get_k_layer_feature_map(model_layer, k, image_info)
        show_feature_map(feature_map, outRoot=outRoot)
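The script writes one PNG per channel. When comparing the randomly initialized backbone with the pre-trained one, it is often easier to look at all channels of a layer in a single image.

Here is a small NumPy sketch that tiles a (C, H, W) feature map into a ceil(sqrt(C)) x ceil(sqrt(C)) grid, with per-channel min-max scaling. `tile_feature_maps` is a hypothetical helper and is not part of the original script.

```python
import math

import numpy as np


def tile_feature_maps(fmap: np.ndarray, pad: int = 1) -> np.ndarray:
    """Arrange a (C, H, W) feature map into one uint8 grid image, ceil(sqrt(C)) tiles per side."""
    c, h, w = fmap.shape
    n = math.ceil(math.sqrt(c))  # grid side length in tiles
    grid = np.zeros((n * (h + pad), n * (w + pad)), dtype=np.uint8)  # padding stays black
    for i in range(c):
        ch = fmap[i].astype(np.float64)
        ch = (ch - ch.min()) / (ch.max() - ch.min() + 1e-8) * 255.0  # per-channel scaling to [0, 255]
        r, col = divmod(i, n)  # tile position in the grid
        top, left = r * (h + pad), col * (w + pad)
        grid[top:top + h, left:left + w] = np.clip(np.round(ch), 0, 255).astype(np.uint8)
    return grid
```

For example, inside the loop above you could add `cv2.imwrite(os.path.join(outRoot, "grid.png"), tile_feature_maps(feature_map.squeeze(0).numpy()))`. For yolov8s, layer 4 has 128 channels, which gives a 12x12 grid with some empty tiles.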

Fine-tuning on the object detection task

import torch
from ultralytics import YOLO


class FinetuneYolo(YOLO):
    def load_backbone(self, ckptPath):
        """
        Load the self-supervised backbone weights from `ckptPath` into the detection model.
        Parameters are matched by name (strict=False); a shape mismatch still raises an error.
        """
        backboneWeights = torch.load(ckptPath, map_location="cpu")
        result = self.model.load_state_dict(backboneWeights, strict=False)
        print(f"Loaded {len(backboneWeights) - len(result.unexpected_keys)} tensors; "
              f"{len(result.missing_keys)} model tensors keep their initial values")
        return self

    def freeze_backbone(self, freeze):
        # Freeze the parameters of layers 0 .. freeze-1
        freeze = [f'model.{x}.' for x in range(freeze)]  # layers to freeze
        for k, v in self.model.named_parameters():
            v.requires_grad = True  # train all layers
            # v.register_hook(lambda x: torch.nan_to_num(x))  # NaN to 0 (commented for erratic training results)
            if any(x in k for x in freeze):
                v.requires_grad = False
        return self

    def unfreeze_backbone(self):
        # Unfreeze all parameters
        for k, v in self.model.named_parameters():
            v.requires_grad = True  # train all layers
        return self


if __name__ == "__main__":
    import argparse

    parser = argparse.ArgumentParser()
    parser.add_argument('--cfg', type=str, default='yolov8s.yaml', help='model.yaml')
    parser.add_argument('--ckptPath', type=str, default="./best_yoloV8_Backbone.pth", help='The path of checkpoints')
    opt = parser.parse_args()

    # Build from YAML and transfer the self-supervised backbone weights
    model = FinetuneYolo(opt.cfg).load_backbone(opt.ckptPath)
    # model = FinetuneYolo(opt.cfg)  # baseline: build from YAML without pre-trained weights

    # NOTE (verify for your ultralytics version): YOLO.train() creates its own trainer, which may
    # rebuild the model from the YAML and reset requires_grad, overriding load_backbone() and
    # freeze_backbone(). Check the training log; the built-in `freeze` training argument is an
    # alternative way to freeze layers.

    # Stage 1: freeze the backbone (layers 0-8) and fine-tune the remaining layers
    model.freeze_backbone(9)
    model.train(data='coco128.yaml', epochs=5, imgsz=640)

    # Stage 2: unfreeze the backbone and fine-tune all parameters
    model.unfreeze_backbone()
    model.train(data='coco128.yaml', epochs=5, imgsz=640)
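`freeze_backbone(9)` matches parameter names against the prefixes `model.0.` to `model.8.`. These are exactly the layers kept by `YoloBackbone` and pre-trained with TiCo. SPPF (layer 9) and the head stay trainable.

The trailing dot in each prefix matters. Without it, `model.1` would also match `model.12`. The pure-Python sketch below mirrors that matching logic outside ultralytics. `frozen_names` is a hypothetical helper, and the parameter names are illustrative examples in the usual `model.<index>.` format.

```python
def frozen_names(param_names, n_layers: int = 9) -> list:
    """Return the names matched by the 'model.{i}.' prefixes used in freeze_backbone()."""
    prefixes = [f"model.{i}." for i in range(n_layers)]
    return [name for name in param_names if any(p in name for p in prefixes)]


names = [
    "model.0.conv.weight",
    "model.8.m.0.cv1.conv.weight",
    "model.9.cv1.conv.weight",
    "model.12.cv1.conv.weight",
    "model.22.dfl.conv.weight",
]
print(frozen_names(names))  # ['model.0.conv.weight', 'model.8.m.0.cv1.conv.weight']
```

The checkpoint saved by the pre-training script stores keys such as `model.0.conv.weight` because `YoloBackbone.model` is the sliced `nn.Sequential`. For the same reason, `load_backbone()` can match them by name against the detection model's keys.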