DataStream概述

DataStream API 的名字来源于一个特殊的DataStream类,该类用于表示 Flink 程序中的数据集合。可以将它们视为可以包含重复项的不可变数据集合。这些数据可以是有限的也可以是无限的。

Flink 中的 DataStream 是对数据流进行转换(例如过滤、更新状态、定义窗口、聚合)的常规程序。数据流最初是从各种来源(例如,消息队列、套接字流、文件)创建的。结果通过接收器返回,例如可以将数据写入文件或标准输出(例如命令行终端)。Flink 程序可以在各种上下文中运行,可以独立运行,也可以嵌入到其他程序中。执行可以在本地 JVM 中执行。也可以在集群上执行。

Flink 程序基础架构:

- 获得一个执行上下文execution environment

- 加载初始数据

- 数据流转换

- 计算结果存储

- 触发程序执行

其中数据流转换(transformations)中包含多种转换数据流的算子,也是整个flink程序最核心的东西。接下来依次介绍:

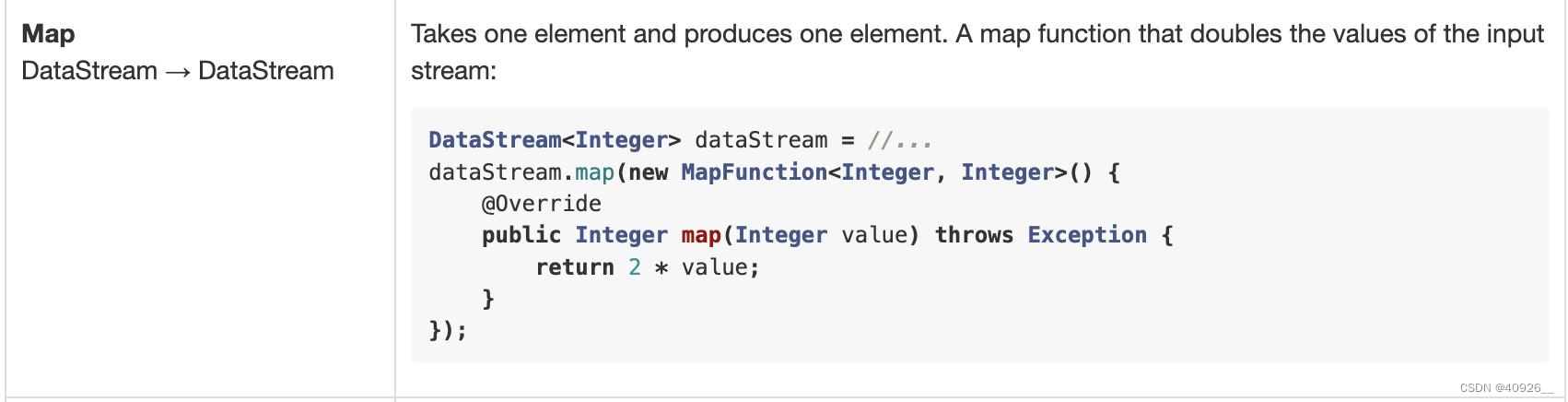

- map

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.ArrayList;

/**

* map算子,作用在流上每一个数据元素上

* 例如:(1,2,3)--> (1*2,2*2,3*2)

*/

public class TestMap {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

CollectMap(env);

env.execute("com.mapTest.test");

}

private static void CollectMap(StreamExecutionEnvironment env){

ArrayList<Integer> list = new ArrayList<>();

list.add(1);

list.add(2);

list.add(3);

DataStreamSource<Integer> source = env.fromCollection(list);

source.map(new MapFunction<Integer, Integer>() {

@Override

public Integer map(Integer value) throws Exception {

return value * 2;

}

}).print();

}

}

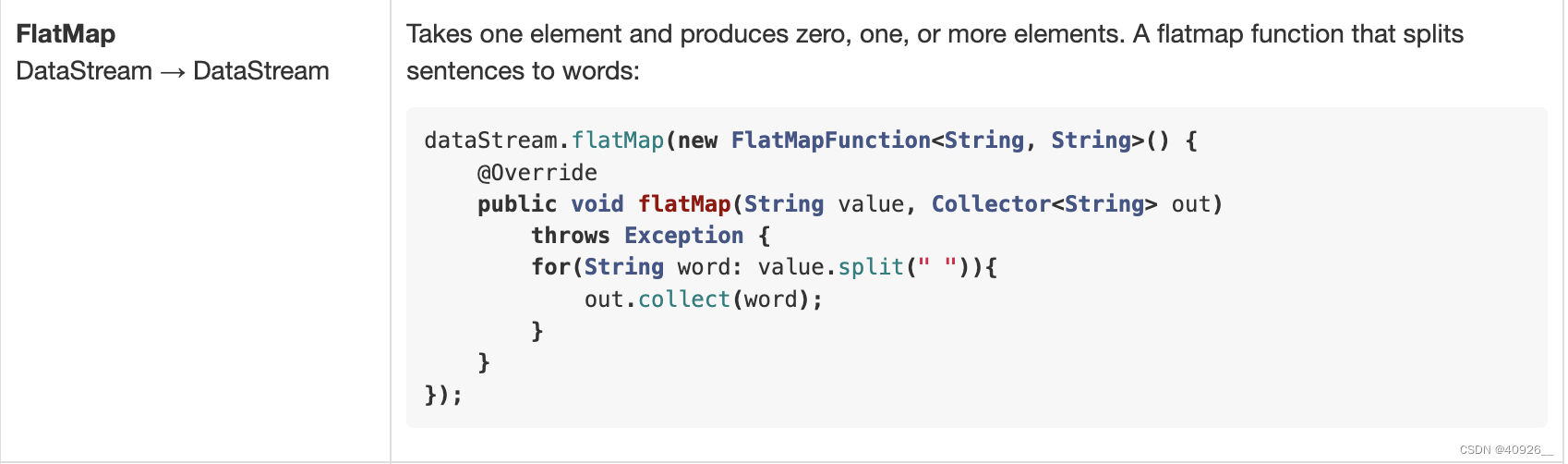

- FlatMap

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

/**

* 将数据扁平化处理

* 例如:(flink,spark,hadoop) --> (flink) (spark) (hadoop)

*/

public class TestFlatMap {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<String> source = env.socketTextStream("localhost", 9630);

source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String s, Collector<String> collector) throws Exception {

String[] value = s.split(",");

for (String split:value){

collector.collect(split);

}

}

}).filter(new FilterFunction<String>() {

@Override

public boolean filter(String s) throws Exception {

return !"sd".equals(s);

}

}).print();

env.execute("TestFlatMap");

}

}

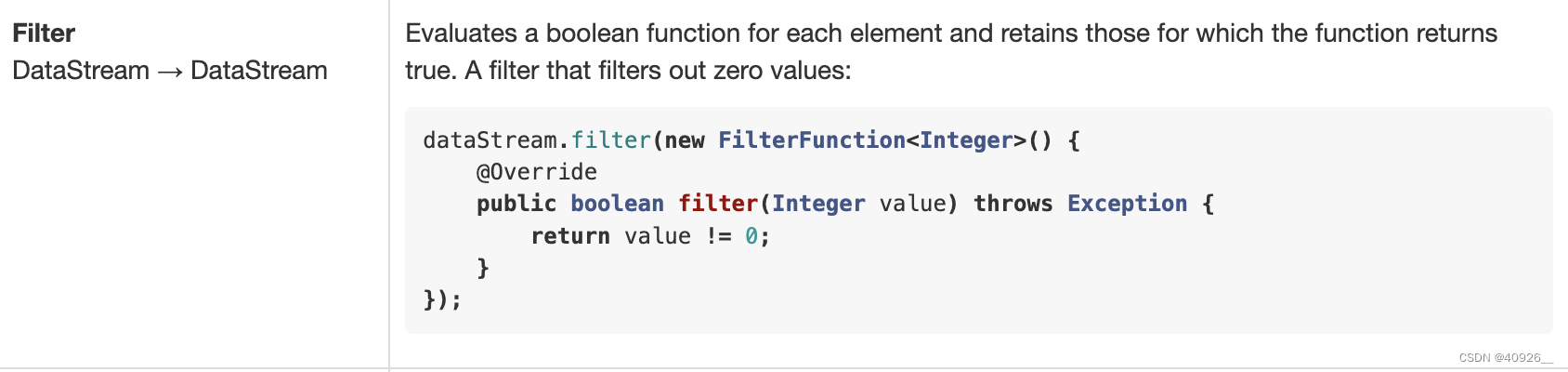

- Filter

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**

* 过滤器,将流中无关元素过滤掉

* 例如:(1,2,3,4,5) ----> (3,4,5)

*/

public class TestFilter {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

//ReadFileMap(env);

FilterTest(env);

env.execute("com.FilterTest.test");

}

private static void FilterTest(StreamExecutionEnvironment env){

DataStreamSource<Integer> source = env.fromElements(1, 2, 3, 4, 5);

source.filter(new FilterFunction<Integer>() {

@Override

public boolean filter(Integer value) throws Exception {

return value >= 3;

}

}).print();

}

}

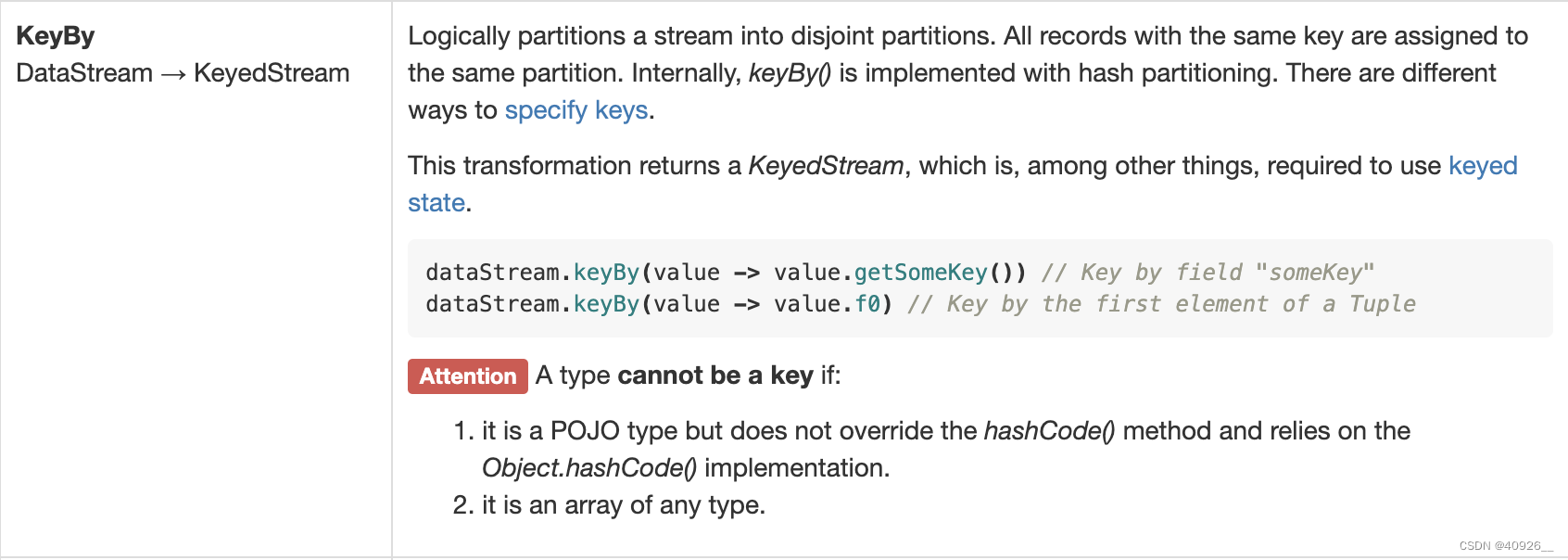

- KeyBy

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.java.functions.KeySelector;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

/**

* keyby算子将数据流转换成一个keyedStream,但这里需要注意的是有两种类型无法转换:

* 一个是没有实现hashcode方法的pojo类型,另一个是数组

* 例如:(flink,spark)---> (flink,1),(spark,1)

*/

public class TestKeyBy {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

KeyByTest1(env);

env.execute();

}

private static void KeyByTest1(StreamExecutionEnvironment env){

DataStreamSource<String> source = env.fromElements("flink","spark","flink");

source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String s, Collector<String> out) throws Exception {

String[] split = s.split(",");

for (String value : split) {

out.collect(value);

}

}

})

.map(new MapFunction<String, Tuple2<String,Integer>>() {

@Override

public Tuple2<String, Integer> map(String value) throws Exception {

return Tuple2.of(value,1);

}

})

//过时方法

//.keyBy("f0").print();

//简写方法

//.keyBy(x -> x.f0).print();

.keyBy(new KeySelector<Tuple2<String, Integer>, String>() {

@Override

public String getKey(Tuple2<String, Integer> value) throws Exception {

return value.f0;

}

}).print();

}

}

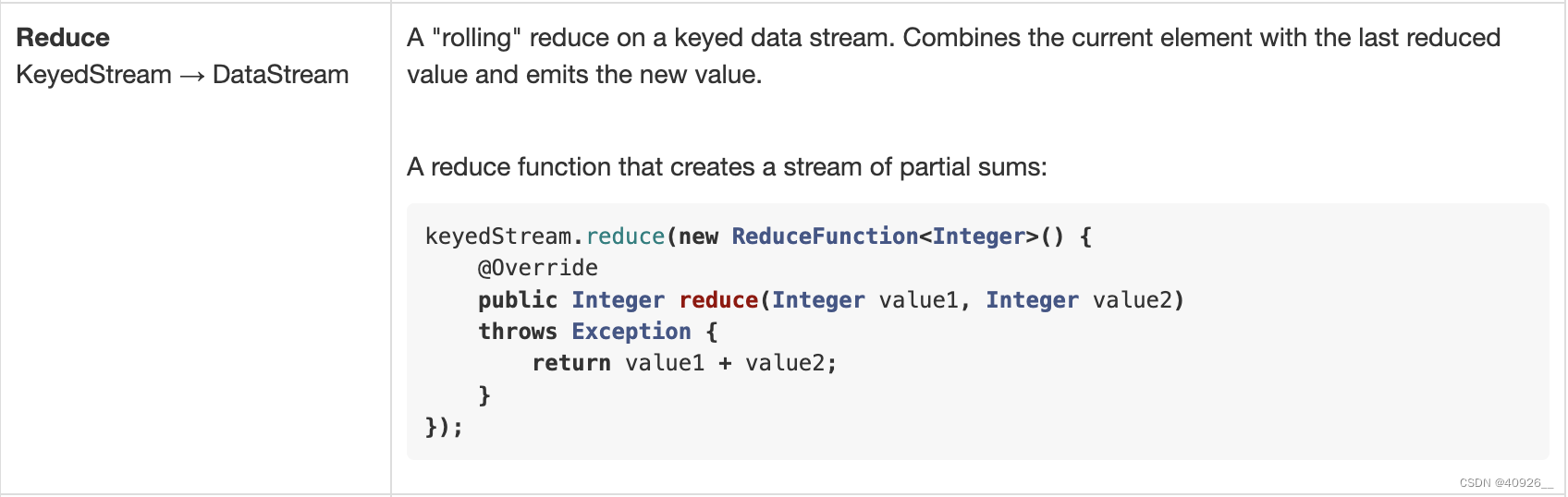

- Reduce

mport org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.functions.MapFunction;

import org.apache.flink.api.common.functions.ReduceFunction;

import org.apache.flink.api.java.tuple.Tuple2;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

/**

* 1、对传过来的数据按照" ,"进行分割

* 2、为每个出现的单词进行赋值

* 3、按照单词进行keyby

* 4、分组求和

*/

public class TestReduce {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

DataStreamSource<String> source = env.socketTextStream("localhost", 9630);

source.flatMap(new FlatMapFunction<String, String>() {

@Override

public void flatMap(String s, Collector<String> collector) throws Exception {

String[] value = s.split(",");

for (String split:value){

collector.collect(split);

}

}

}).map(new MapFunction<String, Tuple2<String,Integer>>() {

@Override

public Tuple2<String,Integer> map(String s) throws Exception {

return Tuple2.of(s,1);

}

}).keyBy(x ->x.f0)

.reduce(new ReduceFunction<Tuple2<String, Integer>>() {

@Override

public Tuple2<String, Integer> reduce(Tuple2<String, Integer> value1, Tuple2<String, Integer> value2) throws Exception {

return Tuple2.of(value1.f0, value1.f1+value2.f1);

}

}).print();

env.execute();

}

}

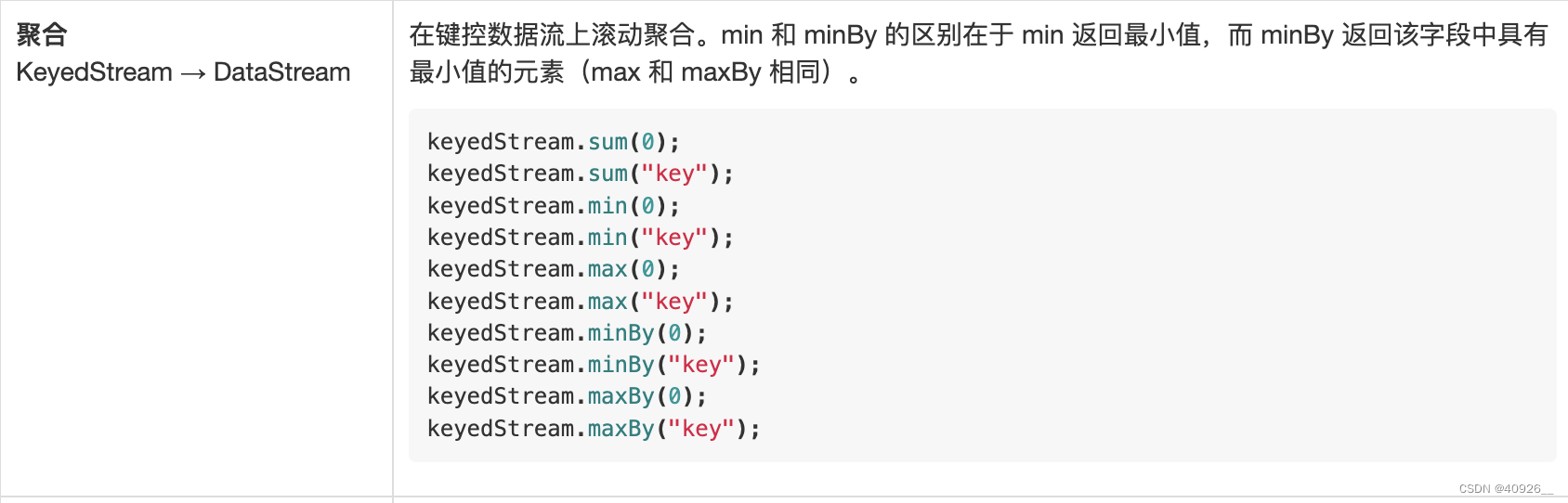

聚合函数,通常跟在keydeStream流后面使用