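I recently ran into a problem where a spring-kafka consumer thread stopped consuming, so I read through how its consumer threads are started. This post summarizes what I found.

Lifecycle and SmartLifecycle

To follow the startup process, you first need to know these two interfaces:

Lifecycle is the most basic lifecycle interface in Spring. It defines the methods for starting and stopping a component: start(), stop(), and isRunning().

SmartLifecycle extends Lifecycle and adds the following:

- Its start() is called back automatically when the context is refreshed (as long as isAutoStartup() returns true); the container's start() does not have to be called explicitly.

- When the container holds several SmartLifecycle instances, their start order can be controlled easily through the phase value.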
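To make the two points above concrete, here is a minimal sketch of a refresh-time start pass. It does not use Spring: DemoLifecycle, DemoSmartLifecycle, and PhaseOrderDemo are hypothetical stand-ins I made up so the example compiles on its own. It only illustrates the idea that auto-startup beans are picked and started in ascending phase order.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-ins for Spring's interfaces, so the sketch compiles without Spring.
interface DemoLifecycle {
    void start();
}

interface DemoSmartLifecycle extends DemoLifecycle {
    boolean isAutoStartup();
    int getPhase();
}

class PhaseOrderDemo {

    // Picks only auto-startup smart beans, groups them by phase,
    // and starts the groups from the lowest phase to the highest.
    static void startOnRefresh(List<DemoLifecycle> beans) {
        Map<Integer, List<DemoLifecycle>> phases = new TreeMap<>();
        for (DemoLifecycle bean : beans) {
            if (bean instanceof DemoSmartLifecycle && ((DemoSmartLifecycle) bean).isAutoStartup()) {
                int phase = ((DemoSmartLifecycle) bean).getPhase();
                phases.computeIfAbsent(phase, k -> new ArrayList<>()).add(bean);
            }
        }
        for (List<DemoLifecycle> group : phases.values()) {
            for (DemoLifecycle bean : group) {
                bean.start();
            }
        }
    }

    // Builds a smart bean that records its name when started.
    static DemoSmartLifecycle smart(String name, int phase, boolean auto, List<String> log) {
        return new DemoSmartLifecycle() {
            public void start() { log.add(name); }
            public boolean isAutoStartup() { return auto; }
            public int getPhase() { return phase; }
        };
    }

    static List<String> demo() {
        List<String> log = new ArrayList<>();
        List<DemoLifecycle> beans = new ArrayList<>();
        beans.add(smart("late", 10, true, log));
        beans.add(() -> log.add("plain"));         // plain lifecycle bean: skipped
        beans.add(smart("early", -5, true, log));
        beans.add(smart("manual", 0, false, log)); // autoStartup is false: skipped
        startOnRefresh(beans);
        return log;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [early, late]
    }
}
```

The TreeMap keeps the phases sorted, which plays the same role as sorting the phase keys before starting each group.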

spring-kafka version

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
        <version>1.3.5.RELEASE</version>
    </dependency>

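Startup process analysis

Once Spring has loaded all beans (instantiation + initialization), the last step of refreshing the container is org.springframework.context.support.AbstractApplicationContext#finishRefresh: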

    protected void finishRefresh() {
        // Initialize lifecycle processor for this context.
        initLifecycleProcessor();
        // Propagate refresh to lifecycle processor first.
        getLifecycleProcessor().onRefresh();
        // Publish the final event.
        publishEvent(new ContextRefreshedEvent(this));
        // Participate in LiveBeansView MBean, if active.
        LiveBeansView.registerApplicationContext(this);
    }

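Step one, initLifecycleProcessor(), sets up the lifecycle processor; by default this is DefaultLifecycleProcessor.

Step two is getLifecycleProcessor().onRefresh();

It starts the Lifecycle beans that are eligible for automatic startup, that is, SmartLifecycle beans whose isAutoStartup() returns true. On refresh, autoStartupOnly is true, so plain Lifecycle beans are skipped. The work is done by the following method:

org.springframework.context.support.DefaultLifecycleProcessor#startBeans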

    private void startBeans(boolean autoStartupOnly) {
        Map<String, Lifecycle> lifecycleBeans = getLifecycleBeans();
        Map<Integer, LifecycleGroup> phases = new HashMap<Integer, LifecycleGroup>();
        for (Map.Entry<String, ? extends Lifecycle> entry : lifecycleBeans.entrySet()) {
            Lifecycle bean = entry.getValue();
            if (!autoStartupOnly || (bean instanceof SmartLifecycle && ((SmartLifecycle) bean).isAutoStartup())) {
                int phase = getPhase(bean);
                LifecycleGroup group = phases.get(phase);
                if (group == null) {
                    group = new LifecycleGroup(phase, this.timeoutPerShutdownPhase, lifecycleBeans, autoStartupOnly);
                    phases.put(phase, group);
                }
                group.add(entry.getKey(), bean);
            }
        }
        if (!phases.isEmpty()) {
            List<Integer> keys = new ArrayList<Integer>(phases.keySet());
            Collections.sort(keys);
            for (Integer key : keys) {
                phases.get(key).start();
            }
        }
    }

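The phases are sorted in ascending order, so a smaller phase value starts earlier, and each group's start method is then called in turn: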

    public void start() {
        if (this.members.isEmpty()) {
            return;
        }
        if (logger.isInfoEnabled()) {
            logger.info("Starting beans in phase " + this.phase);
        }
        Collections.sort(this.members);
        for (LifecycleGroupMember member : this.members) {
            if (this.lifecycleBeans.containsKey(member.name)) {
                doStart(this.lifecycleBeans, member.name, this.autoStartupOnly);
            }
        }
    }

Eventually it reaches:

    private void doStart(Map<String, ? extends Lifecycle> lifecycleBeans, String beanName, boolean autoStartupOnly) {
        Lifecycle bean = lifecycleBeans.remove(beanName);
        if (bean != null && bean != this) {
            String[] dependenciesForBean = this.beanFactory.getDependenciesForBean(beanName);
            for (String dependency : dependenciesForBean) {
                doStart(lifecycleBeans, dependency, autoStartupOnly);
            }
            if (!bean.isRunning() &&
                    (!autoStartupOnly || !(bean instanceof SmartLifecycle) || ((SmartLifecycle) bean).isAutoStartup())) {
                if (logger.isDebugEnabled()) {
                    logger.debug("Starting bean '" + beanName + "' of type [" + bean.getClass() + "]");
                }
                try {
                    bean.start();
                }
                catch (Throwable ex) {
                    throw new ApplicationContextException("Failed to start bean '" + beanName + "'", ex);
                }
                if (logger.isDebugEnabled()) {
                    logger.debug("Successfully started bean '" + beanName + "'");
                }
            }
        }
    }

The line to look at is bean.start(). A key class in spring-kafka, KafkaListenerEndpointRegistry, implements SmartLifecycle, so the call lands there. Here is its start method:

    @Override
    public void start() {
        for (MessageListenerContainer listenerContainer : getListenerContainers()) {
            startIfNecessary(listenerContainer);
        }
    }

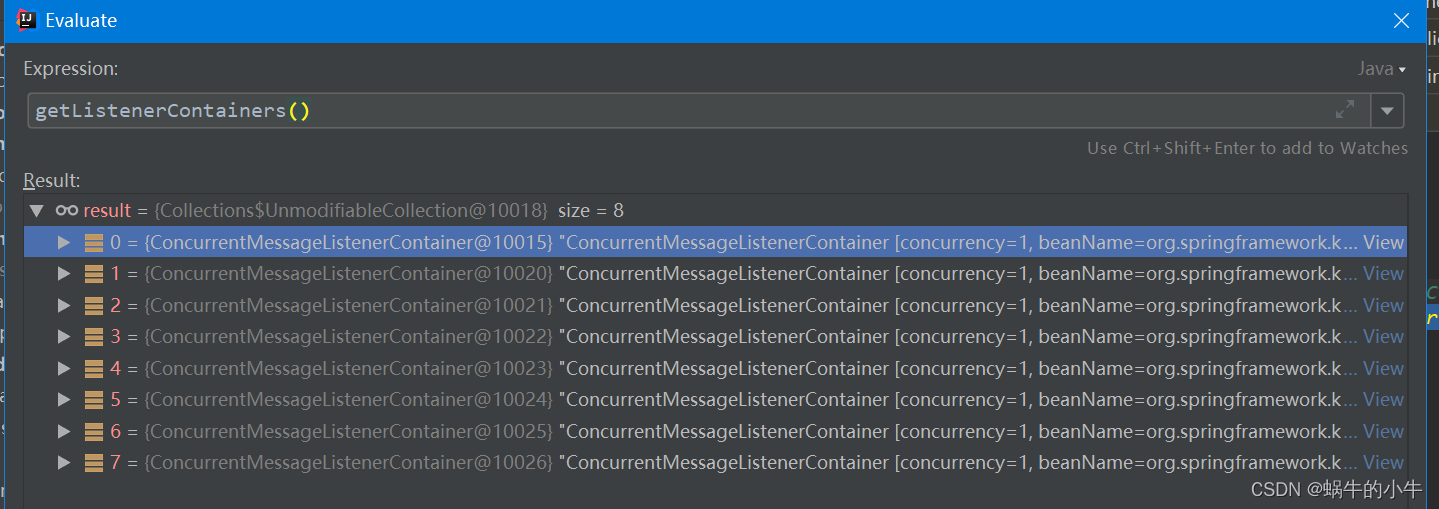

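What does getListenerContainers() return? I printed it out here (the screenshot above).

It holds ConcurrentMessageListenerContainer objects, which look like the classes that start the consumers. (A spoiler: their number matches the number of **@KafkaListener** annotations in our code; every method that uses the annotation gets one such object.) So when were they put in? With that question in mind, let's find the code that populates the collection: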

    public void registerListenerContainer(KafkaListenerEndpoint endpoint, KafkaListenerContainerFactory<?> factory,
            boolean startImmediately) {
        Assert.notNull(endpoint, "Endpoint must not be null");
        Assert.notNull(factory, "Factory must not be null");
        String id = endpoint.getId();
        Assert.hasText(id, "Endpoint id must not be empty");
        synchronized (this.listenerContainers) {
            Assert.state(!this.listenerContainers.containsKey(id),
                    "Another endpoint is already registered with id '" + id + "'");
            MessageListenerContainer container = createListenerContainer(endpoint, factory);
            this.listenerContainers.put(id, container);
            // part of the code omitted

So who calls registerListenerContainer? Tracing backwards, both registerEndpoint and registerAllEndpoints in KafkaListenerEndpointRegistrar call it. Which one is used in our case? Let's look at registerEndpoint first:

    public void registerEndpoint(KafkaListenerEndpoint endpoint, KafkaListenerContainerFactory<?> factory) {
        Assert.notNull(endpoint, "Endpoint must be set");
        Assert.hasText(endpoint.getId(), "Endpoint id must be set");
        // Factory may be null, we defer the resolution right before actually creating the container
        KafkaListenerEndpointDescriptor descriptor = new KafkaListenerEndpointDescriptor(endpoint, factory);
        synchronized (this.endpointDescriptors) {
            if (this.startImmediately) {
                // Register and start immediately
                this.endpointRegistry.registerListenerContainer(descriptor.endpoint,
                        resolveContainerFactory(descriptor), true);
            }
            else {
                this.endpointDescriptors.add(descriptor);
            }
        }
    }

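startImmediately is false at first, so this is not the path that registers the container; that leaves registerAllEndpoints. registerAllEndpoints reads the this.endpointDescriptors collection, so something must have filled it beforehand,

and KafkaListenerEndpointRegistrar#registerEndpoint does exactly that: because if (this.startImmediately) is not satisfied, it stores the descriptor with this.endpointDescriptors.add(descriptor);

When registerAllEndpoints is called later (from the registrar's afterPropertiesSet()), the descriptors are there to be picked up.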
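The "collect first, register later" behavior described above can be sketched in a few lines. This is a simplified model, not the spring-kafka source: DeferredRegistrarDemo is a hypothetical class, endpoint ids stand in for endpoint descriptors, and clearing the pending list is a choice of this sketch.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified model; endpoint ids stand in for endpoint descriptors.
class DeferredRegistrarDemo {
    private final List<String> pending = new ArrayList<>();    // role of endpointDescriptors
    private final List<String> registered = new ArrayList<>(); // role of the endpoint registry
    private boolean startImmediately = false;

    // While startImmediately is false, endpoints are only remembered.
    synchronized void registerEndpoint(String endpointId) {
        if (startImmediately) {
            registered.add(endpointId);
        } else {
            pending.add(endpointId);
        }
    }

    // Registers everything collected so far; endpoints added later register directly.
    synchronized void registerAllEndpoints() {
        registered.addAll(pending);
        pending.clear();          // a choice of this sketch
        startImmediately = true;
    }

    synchronized List<String> registered() {
        return new ArrayList<>(registered);
    }

    public static void main(String[] args) {
        DeferredRegistrarDemo registrar = new DeferredRegistrarDemo();
        registrar.registerEndpoint("listener-0");
        registrar.registerEndpoint("listener-1");
        System.out.println("before: " + registrar.registered());
        registrar.registerAllEndpoints();
        registrar.registerEndpoint("listener-2");
        System.out.println("after: " + registrar.registered());
    }
}
```

The point of the flag is that endpoints discovered during bean initialization wait until everything is ready, while endpoints added afterwards can be registered on the spot.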

So who calls

KafkaListenerEndpointRegistrar#registerEndpoint ?

One of the callers is KafkaListenerAnnotationBeanPostProcessor. As the name says, it is a BeanPostProcessor, which is invoked during bean initialization. Let's look at its method:

    public Object postProcessAfterInitialization(final Object bean, final String beanName) throws BeansException {
        if (!this.nonAnnotatedClasses.contains(bean.getClass())) {
            Class<?> targetClass = AopUtils.getTargetClass(bean);
            Collection<KafkaListener> classLevelListeners = findListenerAnnotations(targetClass);
            final boolean hasClassLevelListeners = classLevelListeners.size() > 0;
            final List<Method> multiMethods = new ArrayList<Method>();
            Map<Method, Set<KafkaListener>> annotatedMethods = MethodIntrospector.selectMethods(targetClass,
                    new MethodIntrospector.MetadataLookup<Set<KafkaListener>>() {
                        @Override
                        public Set<KafkaListener> inspect(Method method) {
                            Set<KafkaListener> listenerMethods = findListenerAnnotations(method);
                            return (!listenerMethods.isEmpty() ? listenerMethods : null);
                        }
                    });
            if (hasClassLevelListeners) {
                Set<Method> methodsWithHandler = MethodIntrospector.selectMethods(targetClass,
                        new MethodFilter() {
                            @Override
                            public boolean matches(Method method) {
                                return AnnotationUtils.findAnnotation(method, KafkaHandler.class) != null;
                            }
                        });
                multiMethods.addAll(methodsWithHandler);
            }
            if (annotatedMethods.isEmpty()) {
                this.nonAnnotatedClasses.add(bean.getClass());
                if (this.logger.isTraceEnabled()) {
                    this.logger.trace("No @KafkaListener annotations found on bean type: " + bean.getClass());
                }
            }
            else {
                // Non-empty set of methods
                for (Map.Entry<Method, Set<KafkaListener>> entry : annotatedMethods.entrySet()) {
                    Method method = entry.getKey();
                    for (KafkaListener listener : entry.getValue()) {
                        processKafkaListener(listener, method, bean, beanName);
                    }
                }
                if (this.logger.isDebugEnabled()) {
                    this.logger.debug(annotatedMethods.size() + " @KafkaListener methods processed on bean '"
                            + beanName + "': " + annotatedMethods);
                }
            }
            if (hasClassLevelListeners) {
                processMultiMethodListeners(classLevelListeners, multiMethods, bean, beanName);
            }
        }
        return bean;
    }

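It is a bit long, but the familiar @KafkaListener annotation shows up here. This is what makes the @KafkaListener annotation on our methods take effect: the logic finds every method of the current bean that carries the annotation. Follow this call:

    processKafkaListener(listener, method, bean, beanName);

It builds a MethodKafkaListenerEndpoint for the method and hands it to processListener: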

    protected void processListener(MethodKafkaListenerEndpoint<?, ?> endpoint, KafkaListener kafkaListener, Object bean,
            Object adminTarget, String beanName) {
        endpoint.setBean(bean);
        endpoint.setMessageHandlerMethodFactory(this.messageHandlerMethodFactory);
        endpoint.setId(getEndpointId(kafkaListener));
        endpoint.setGroupId(getEndpointGroupId(kafkaListener, endpoint.getId()));
        endpoint.setTopicPartitions(resolveTopicPartitions(kafkaListener));
        endpoint.setTopics(resolveTopics(kafkaListener));
        endpoint.setTopicPattern(resolvePattern(kafkaListener));
        String group = kafkaListener.containerGroup();
        // part of the code omitted
        this.registrar.registerEndpoint(endpoint, factory);
    }

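It first resolves the annotation's attributes into an endpoint object and finally calls this.registrar.registerEndpoint(endpoint, factory), which answers the question left open above about who calls that method. At this point the whole chain is connected.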
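The scanning idea behind the post processor can be tried out with plain reflection. The sketch below is only an illustration under my own assumptions: @DemoListener, OrderHandlers, and ListenerScanDemo are hypothetical names, and the real post processor does considerably more (proxy handling, class-level listeners, attribute resolution).

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical annotation standing in for @KafkaListener.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface DemoListener {
    String topic();
}

// Hypothetical bean with two annotated methods and one ordinary method.
class OrderHandlers {
    @DemoListener(topic = "orders")
    public void onOrder(String payload) { }

    @DemoListener(topic = "refunds")
    public void onRefund(String payload) { }

    public void helper() { }
}

class ListenerScanDemo {

    // Returns topic -> method name for every annotated method declared on the bean's class.
    static Map<String, String> scan(Object bean) {
        Map<String, String> endpoints = new TreeMap<>();
        for (Method method : bean.getClass().getDeclaredMethods()) {
            DemoListener listener = method.getAnnotation(DemoListener.class);
            if (listener != null) {
                endpoints.put(listener.topic(), method.getName());
            }
        }
        return endpoints;
    }

    public static void main(String[] args) {
        System.out.println(scan(new OrderHandlers())); // prints {orders=onOrder, refunds=onRefund}
    }
}
```

Each entry in the returned map corresponds to what the article calls an endpoint: one per annotated method.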

Let's pick up where we started, at KafkaListenerEndpointRegistry#start:

    @Override
    public void start() {
        for (MessageListenerContainer listenerContainer : getListenerContainers()) {
            startIfNecessary(listenerContainer);
        }
    }

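From the analysis above we know that listenerContainer is a ConcurrentMessageListenerContainer, so the call ends up in its start() method, which it inherits from AbstractMessageListenerContainer: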

    @Override
    public final void start() {
        synchronized (this.lifecycleMonitor) {
            if (!isRunning()) {
                Assert.isTrue(
                        this.containerProperties.getMessageListener() instanceof KafkaDataListener,
                        "A " + GenericMessageListener.class.getName() + " implementation must be provided");
                doStart();
            }
        }
    }

doStart() turns out to be an abstract method, and ConcurrentMessageListenerContainer implements it:

    @Override
    protected void doStart() {
        if (!isRunning()) {
            ContainerProperties containerProperties = getContainerProperties();
            TopicPartitionInitialOffset[] topicPartitions = containerProperties.getTopicPartitions();
            if (topicPartitions != null
                    && this.concurrency > topicPartitions.length) {
                this.logger.warn("When specific partitions are provided, the concurrency must be less than or "
                        + "equal to the number of partitions; reduced from " + this.concurrency + " to "
                        + topicPartitions.length);
                this.concurrency = topicPartitions.length;
            }
            setRunning(true);
            for (int i = 0; i < this.concurrency; i++) {
                KafkaMessageListenerContainer<K, V> container;
                if (topicPartitions == null) {
                    container = new KafkaMessageListenerContainer<>(this.consumerFactory, containerProperties);
                }
                else {
                    container = new KafkaMessageListenerContainer<>(this.consumerFactory, containerProperties,
                            partitionSubset(containerProperties, i));
                }
                String beanName = getBeanName();
                container.setBeanName((beanName != null ? beanName : "consumer") + "-" + i);
                if (getApplicationEventPublisher() != null) {
                    container.setApplicationEventPublisher(getApplicationEventPublisher());
                }
                container.setClientIdSuffix("-" + i);
                container.start();
                this.containers.add(container);
            }
        }
    }

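This is straightforward: it creates one KafkaMessageListenerContainer per unit of concurrency. Like ConcurrentMessageListenerContainer, this class also implements the MessageListenerContainer interface.

Notice container.start(): start is called again, this time on KafkaMessageListenerContainer. Following it leads to the doStart method: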

    @Override
    protected void doStart() {
        if (isRunning()) {
            return;
        }
        ContainerProperties containerProperties = getContainerProperties();
        if (!this.consumerFactory.isAutoCommit()) {
            AckMode ackMode = containerProperties.getAckMode();
            if (ackMode.equals(AckMode.COUNT) || ackMode.equals(AckMode.COUNT_TIME)) {
                Assert.state(containerProperties.getAckCount() > 0, "'ackCount' must be > 0");
            }
            if ((ackMode.equals(AckMode.TIME) || ackMode.equals(AckMode.COUNT_TIME))
                    && containerProperties.getAckTime() == 0) {
                containerProperties.setAckTime(5000);
            }
        }
        Object messageListener = containerProperties.getMessageListener();
        Assert.state(messageListener != null, "A MessageListener is required");
        if (messageListener instanceof GenericAcknowledgingMessageListener) {
            this.acknowledgingMessageListener = (GenericAcknowledgingMessageListener<?>) messageListener;
        }
        else if (messageListener instanceof GenericMessageListener) {
            this.listener = (GenericMessageListener<?>) messageListener;
        }
        else {
            throw new IllegalStateException("messageListener must be 'MessageListener' "
                    + "or 'AcknowledgingMessageListener', not " + messageListener.getClass().getName());
        }
        if (containerProperties.getConsumerTaskExecutor() == null) {
            SimpleAsyncTaskExecutor consumerExecutor = new SimpleAsyncTaskExecutor(
                    (getBeanName() == null ? "" : getBeanName()) + "-C-");
            containerProperties.setConsumerTaskExecutor(consumerExecutor);
        }
        this.listenerConsumer = new ListenerConsumer(this.listener, this.acknowledgingMessageListener);
        setRunning(true);
        this.listenerConsumerFuture = containerProperties
                .getConsumerTaskExecutor()
                .submitListenable(this.listenerConsumer);
    }

This creates a ListenerConsumer object, which is the listening consumer task itself, and it is a Runnable. Next comes:

    this.listenerConsumerFuture = containerProperties
            .getConsumerTaskExecutor()
            .submitListenable(this.listenerConsumer);

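submitListenable submits the task here. getConsumerTaskExecutor returns a SimpleAsyncTaskExecutor by default, which wraps the ListenerConsumer in a ListenableFutureTask. That is a FutureTask, and this point matters a lot!

Put simply, this code submits a task and then starts a thread to process it. The thread ends up in a run method, and because the task is a FutureTask, that is FutureTask's run method.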
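One general property of FutureTask is worth keeping in mind when a task like this runs on its own thread: an exception that escapes the wrapped Runnable is captured by the future instead of reaching the thread's uncaught exception handler. The sketch below shows this with the JDK alone. FutureTaskThreadDemo and its method names are hypothetical, and it says nothing about how ListenerConsumer handles its own errors internally.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.FutureTask;
import java.util.concurrent.atomic.AtomicBoolean;

class FutureTaskThreadDemo {

    // Wraps the runnable in a FutureTask and runs it on a brand-new thread,
    // roughly what a thread-per-task executor does when a task is submitted.
    static FutureTask<Void> submit(Runnable work, AtomicBoolean uncaughtSeen) {
        FutureTask<Void> task = new FutureTask<>(work, null);
        Thread thread = new Thread(task, "demo-C-1");
        thread.setUncaughtExceptionHandler((t, e) -> uncaughtSeen.set(true));
        thread.start();
        return task;
    }

    // Returns the failure message captured by the future, or null if the task succeeded.
    static String failureOf(FutureTask<Void> task) {
        try {
            task.get();
            return null;
        } catch (ExecutionException ex) {
            return ex.getCause().getMessage();
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(ex);
        }
    }

    public static void main(String[] args) {
        AtomicBoolean uncaughtSeen = new AtomicBoolean(false);
        FutureTask<Void> task = submit(() -> {
            throw new IllegalStateException("poll failed");
        }, uncaughtSeen);
        System.out.println("captured by future: " + failureOf(task));
        System.out.println("reached uncaught handler: " + uncaughtSeen.get());
    }
}
```

Unless someone calls get() on the future or registers a callback, a failure stored this way stays invisible, so a thread that stopped unexpectedly may leave no stack trace behind.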

The Runnable wrapped inside the ListenableFutureTask is the ListenerConsumer, so the run method that finally executes is its own, namely org.springframework.kafka.listener.KafkaMessageListenerContainer.ListenerConsumer#run

    @Override
    public void run() {
        this.consumerThread = Thread.currentThread();
        if (this.theListener instanceof ConsumerSeekAware) {
            ((ConsumerSeekAware) this.theListener).registerSeekCallback(this);
        }
        if (this.transactionManager != null) {
            ProducerFactoryUtils.setConsumerGroupId(this.consumerGroupId);
        }
        this.count = 0;
        this.last = System.currentTimeMillis();
        if (isRunning() && this.definedPartitions != null) {
            initPartitionsIfNeeded();
        }
        long lastReceive = System.currentTimeMillis();
        long lastAlertAt = lastReceive;
        while (isRunning()) {
            try {
                if (!this.autoCommit && !this.isRecordAck) {
                    processCommits();
                }
                processSeeks();
                ConsumerRecords<K, V> records = this.consumer.poll(this.containerProperties.getPollTimeout());
                this.lastPoll = System.currentTimeMillis();
                if (records != null && this.logger.isDebugEnabled()) {
                    this.logger.debug("Received: " + records.count() + " records");
                }
                if (records != null && records.count() > 0) {
                    // part of the code omitted

ConsumerRecords<K, V> records = this.consumer.poll(this.containerProperties.getPollTimeout());

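This line pulls messages from the Kafka broker. It sits inside a while (isRunning()) loop, so the thread does not exit and keeps polling for messages until the container is stopped.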
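The shape of such a poll loop is easy to reproduce without Kafka. In the sketch below a BlockingQueue plays the broker and a volatile flag plays isRunning(). PollLoopDemo and everything in it are hypothetical; the real ListenerConsumer also deals with commits, seeks, and error handling, which are left out here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

class PollLoopDemo implements Runnable {
    private final BlockingQueue<String> broker;   // hypothetical stand-in for the Kafka broker
    private final List<String> consumed = new ArrayList<>();
    private volatile boolean running = true;      // plays the role of isRunning()

    PollLoopDemo(BlockingQueue<String> broker) {
        this.broker = broker;
    }

    void stop() {
        running = false;
    }

    synchronized List<String> consumed() {
        return new ArrayList<>(consumed);
    }

    @Override
    public void run() {
        // The loop keeps the thread alive; it ends only when the flag is cleared.
        while (running) {
            try {
                String record = broker.poll(50, TimeUnit.MILLISECONDS);
                if (record != null) {
                    synchronized (this) {
                        consumed.add(record);
                    }
                }
            } catch (InterruptedException ex) {
                Thread.currentThread().interrupt();
                running = false;
            }
        }
    }

    // Starts a consumer thread, waits for two records, then stops the loop.
    static List<String> demo() {
        BlockingQueue<String> broker = new LinkedBlockingQueue<>();
        broker.add("a");
        broker.add("b");
        PollLoopDemo consumer = new PollLoopDemo(broker);
        Thread thread = new Thread(consumer, "demo-consumer");
        thread.start();
        try {
            while (consumer.consumed().size() < 2) {
                Thread.sleep(10);
            }
            consumer.stop();
            thread.join();
        } catch (InterruptedException ex) {
            Thread.currentThread().interrupt();
        }
        return consumer.consumed();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [a, b]
    }
}
```

Because the poll has a timeout, the loop re-checks the flag regularly, so stop() takes effect within one timeout even when no records arrive.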

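Summary:

After the refresh method has loaded all beans, it finishes the refresh (finishRefresh). The lifecycle processor, DefaultLifecycleProcessor, calls start on the auto-startup SmartLifecycle beans in phase order (smaller first), which brings us into spring-kafka's KafkaListenerEndpointRegistry#start. That method iterates over the listenerContainers collection, a set of ConcurrentMessageListenerContainer objects. The entries originate from KafkaListenerAnnotationBeanPostProcessor, whose postProcessAfterInitialization collects an endpoint for every @KafkaListener method during initialization; registerAllEndpoints later turns those endpoints into containers. Why is postProcessAfterInitialization called? Anyone familiar with bean creation knows that once a bean has been initialized, each BeanPostProcessor's postProcessAfterInitialization is invoked.

Iterating over listenerContainers leads into ConcurrentMessageListenerContainer#doStart, which creates the KafkaMessageListenerContainer instances; the configured concurrency is applied here. Then KafkaMessageListenerContainer#doStart creates the task that does the real work, ListenerConsumer, which implements Runnable. It is wrapped in a ListenableFutureTask (a FutureTask) and submitted, a Thread is created, and ListenerConsumer's run method finally executes, polling the Kafka broker for messages to consume in a while (isRunning()) loop that keeps the thread alive.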