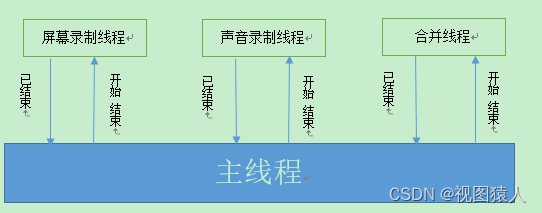

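Overall architecture:

Screen capture, audio capture, and audio/video muxing each run in their own child thread, and the main program controls when recording starts and stops. The control flow, shown in the figure, is as follows:

Main thread: click the Start button → open the audio device, video device, and output file, and start the child threads → send the start-recording signal;

Child threads: receive the start signal → begin recording;

Main thread: click the Stop button → send the stop signal;

Child threads: receive the stop signal → send back a finished signal;

Main thread: once the finished signals from all child threads have arrived → finalize the output and release resources.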
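The start/stop handshake above can be sketched in plain standard C++. This is a minimal illustration only: it swaps Qt signals and `QThread` for `std::thread`, a mutex, and a condition variable, and the names `RecordControl`, `workerLoop`, and `runHandshake` are hypothetical, not taken from the original project.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <thread>
#include <vector>

// Shared state standing in for the signals exchanged between the threads.
struct RecordControl {
    std::mutex mutex;
    std::condition_variable changed;
    bool started = false;        // "start recording" signal from the main thread
    bool stopRequested = false;  // "stop" signal from the main thread
    int finishedWorkers = 0;     // "finished" signals sent back by the workers
};

// Body of one worker (screen capture, audio capture, or muxer in the article).
void workerLoop(RecordControl &control)
{
    {
        // Wait for the start signal before doing any work.
        std::unique_lock<std::mutex> lock(control.mutex);
        control.changed.wait(lock, [&control] { return control.started; });
    }
    for (;;) {
        {
            std::lock_guard<std::mutex> lock(control.mutex);
            if (control.stopRequested) {
                // Acknowledge the stop request so the main thread may clean up.
                ++control.finishedWorkers;
                control.changed.notify_all();
                return;
            }
        }
        std::this_thread::yield();  // placeholder for capturing one frame
    }
}

// Runs the whole handshake and returns how many workers reported "finished".
int runHandshake(int workerCount)
{
    assert(workerCount > 0);
    RecordControl control;
    std::vector<std::thread> workers;
    for (int i = 0; i < workerCount; ++i)
        workers.emplace_back(workerLoop, std::ref(control));

    {   // Main thread: send the start signal.
        std::lock_guard<std::mutex> lock(control.mutex);
        control.started = true;
    }
    control.changed.notify_all();

    {   // Main thread: send the stop signal.
        std::lock_guard<std::mutex> lock(control.mutex);
        control.stopRequested = true;
    }

    int finished = 0;
    {   // Main thread: clean up only after every worker has answered.
        std::unique_lock<std::mutex> lock(control.mutex);
        control.changed.wait(lock, [&] { return control.finishedWorkers == workerCount; });
        finished = control.finishedWorkers;
    }
    for (std::thread &worker : workers)
        worker.join();
    return finished;
}
```

Calling `runHandshake(3)` models the three workers of the article. The point of the design is that the main thread releases shared resources only after every worker has confirmed that it stopped touching them.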

Main code:

Main thread: screenrecord.h

/**

* Main controller class for screen recording

*/

#ifndef SCREENRECORD_H

#define SCREENRECORD_H

/**QT header**/

#include <QFont>

#include <QLabel>

#include <QMutex>

#include <QDebug>

#include <QWidget>

#include <QThread>

#include <QLineEdit>

#include <QFileInfo>

#include <QPaintEvent>

#include <QPushButton>

#include <QCameraInfo>

#include <QMessageBox>

#include <QStandardPaths>

#include <QAudioDeviceInfo>

#include "myglobals.h"

#include "muxerprocess.h"

#include "screencaptureprocess.h"

#include "audiocaptureprocess.h"

class ScreenRecord : public QWidget

{

Q_OBJECT

public:

explicit ScreenRecord(QWidget *parent = nullptr);

~ScreenRecord();

public:

QMutex mutex;

/* State flags */

bool screenCapturing;

bool audioCapturing;

bool muxing;

/* Thread and worker that mux audio and video */

QThread muxerThread;

MuxerProcess *muxerProcess;

/* Thread and worker that capture the screen */

QThread screenCaptureThread;

ScreenCaptureProcess *screenCaptureProcess;

/* Thread and worker that record audio */

QThread audioCaptureThread;

AudioCaptureProcess *audioCaptureProcess;

/* Button that starts and stops recording */

QPushButton *startButton;

/* Size of the converted video frame (set by the user; the width must be a multiple of 4) */

int outputWidth;

int outputHeight;

/* Output directory, file name, and file type of the video */

QString outputDirectory;

QString outputFilename;

QString outputFileType;

/********* Widgets ***********/

// Video output directory

QLabel *outputDirLabel;

QLineEdit *outputDirEdit;

QPushButton *outputDirButton;

/********* Widgets ***********/

public slots:

void replyButtonPressed();

void replyScreenCapturingFinished();

void replyAudioCapturingFinished();

void replyMuxingFinished();

signals:

void startRecord(); // signal: start recording

void stopRecord(); // signal: stop recording

public:

/**

* @brief Open the screen-capture device (gdigrab)

* @return

* 0 on success, otherwise an error code

*

*/

int openScreenCaptureDevice();

/**

* @brief Open the audio-capture device (dshow)

* @return

* 0 on success, otherwise an error code

*

*/

int openAudioCaptureDevice();

/* Open the output video file */

int openOutput();

/* Get the name of the computer's built-in audio device */

QString getSpeakerDeviceName();

/* Release global resources */

void releaseGlobals();

/* Stop all child threads */

void stopChildThreads();

/* Start all child threads */

void startChildThreads();

protected:

void paintEvent(QPaintEvent *event);

};

#endif // SCREENRECORD_H
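The header comment on `outputWidth` requires the width to be a multiple of 4. One way a user-supplied value could be normalized before it is stored is sketched below; `alignWidthDown` is a hypothetical helper that does not appear in the original project, and rounding down (rather than up) is an assumption made so the result never exceeds the requested size.

```cpp
#include <cassert>

// Rounds a requested frame width down to the nearest multiple of 4.
// Hypothetical helper: the original code only states the constraint.
int alignWidthDown(int width)
{
    assert(width >= 4);
    return width - (width % 4);
}
```

For example, a requested width of 1366 would become 1364, while 1920 is already aligned and stays unchanged.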

Child thread (screen capture): screencaptureprocess.cpp

#include "screencaptureprocess.h"

ScreenCaptureProcess::ScreenCaptureProcess(QObject *parent)

: QObject{parent}

{

capturing = false;

videoFrame = nullptr;

videoYUVFrame = nullptr;

videoYUVBuffer = nullptr;

}

void ScreenCaptureProcess::start()

{

qDebug()<<"Screen-capture child thread started";

capturing = true;

captureScreen();

}

void ScreenCaptureProcess::stop()

{

qDebug()<<"ScreenCapture received the stop signal";

capturing = false;

}

void ScreenCaptureProcess::release()

{

if (videoFrame != nullptr)

av_frame_free(&videoFrame);

if (videoYUVFrame != nullptr)

av_frame_free(&videoYUVFrame);

if (videoYUVBuffer != nullptr)

av_freep(&videoYUVBuffer);

}

void ScreenCaptureProcess::captureScreen()

{

int ret = 0;

int number = 0;

int _lineSize = 0;

if (videoFrame == nullptr)

videoFrame = av_frame_alloc();

if (videoYUVFrame == nullptr)

videoYUVFrame = av_frame_alloc();

if (videoYUVBuffer == nullptr)

videoYUVBuffer = (uint8_t *)av_malloc(videoFrameSize);

// The output frame uses the same size as the input

av_image_fill_arrays(videoYUVFrame->data,videoYUVFrame->linesize,videoYUVBuffer,AV_PIX_FMT_YUV420P, sourceWidth, sourceHeight,1);

_lineSize = sourceWidth * sourceHeight;

AVPacket packet,outPacket;

av_init_packet(&packet);

av_init_packet(&outPacket);

while (1){

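/* On receiving the stop signal from the main program: if the global recording flag is still true, emit the capture-finished signal.

* The main program may release the allocated resources only after it receives this signal.

*/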

if (!capturing){

if (recording)

emit(capturingfinished());

break;

}

av_packet_unref(&packet);

av_packet_unref(&outPacket);

if (av_read_frame(videoSourceFormatContext,&packet) < 0)

continue;

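ret = avcodec_send_packet(videoSourceCodecContext,&packet);

if (ret < 0)

continue;

ret = avcodec_receive_frame(videoSourceCodecContext,videoFrame);

// FFmpeg returns negative error codes, so compare against AVERROR(EAGAIN), not EAGAIN

if (ret == AVERROR(EAGAIN))

continue;

else if (ret == AVERROR_EOF)

break;

else if (ret < 0){

qDebug()<<"Error during decoding";

continue; // do not scale a frame that failed to decode

}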

/* Convert the pixel format to AV_PIX_FMT_YUV420P; the size stays the same as the source */

sws_scale(videoSwsContext, (const uint8_t* const*)videoFrame->data, videoFrame->linesize, 0, sourceHeight,videoYUVFrame->data,videoYUVFrame->linesize);

mutex.lock();

/* Write the frame to the FIFO */

if (av_fifo_space(videoFifoBuffer) > videoFrameSize){

av_fifo_generic_write(videoFifoBuffer, videoYUVFrame->data[0], _lineSize, NULL);

av_fifo_generic_write(videoFifoBuffer, videoYUVFrame->data[1], _lineSize / 4, NULL);

av_fifo_generic_write(videoFifoBuffer, videoYUVFrame->data[2], _lineSize / 4, NULL);

}

mutex.unlock();

number ++;

QCoreApplication::processEvents();

}

}
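The three FIFO writes in the capture loop rely on the YUV420P layout: the Y plane holds width × height bytes, and the U and V planes each hold a quarter of that, so one frame occupies width × height × 3 / 2 bytes. The sketch below illustrates that arithmetic and a mutex-guarded byte FIFO in plain C++. It is an assumption-laden stand-in: `Yuv420pLayout`, `layoutFor`, and `FrameFifo` are hypothetical names, a `std::deque` replaces FFmpeg's `AVFifoBuffer`, and tightly packed planes (line size equal to width) are assumed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <vector>

// Plane sizes of one tightly packed YUV420P frame.
struct Yuv420pLayout {
    std::size_t ySize;       // luma plane: width * height bytes
    std::size_t chromaSize;  // each chroma plane: a quarter of the luma plane
    std::size_t total() const { return ySize + 2 * chromaSize; }
};

Yuv420pLayout layoutFor(int width, int height)
{
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    const std::size_t y = static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    return {y, y / 4};
}

// Byte FIFO shared by a capture thread (producer) and a muxer thread (consumer).
class FrameFifo {
public:
    explicit FrameFifo(std::size_t capacity) : capacity_(capacity) {}

    // Appends Y, U, V in order; drops the frame if it does not fit entirely.
    bool pushFrame(const std::uint8_t *y, const std::uint8_t *u,
                   const std::uint8_t *v, const Yuv420pLayout &layout)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        if (capacity_ - bytes_.size() < layout.total())
            return false;
        bytes_.insert(bytes_.end(), y, y + layout.ySize);
        bytes_.insert(bytes_.end(), u, u + layout.chromaSize);
        bytes_.insert(bytes_.end(), v, v + layout.chromaSize);
        return true;
    }

    // Removes exactly one frame; fails if less than a full frame is buffered.
    bool popFrame(std::vector<std::uint8_t> &out, std::size_t frameSize)
    {
        std::lock_guard<std::mutex> lock(mutex_);
        if (bytes_.size() < frameSize)
            return false;
        const auto end = bytes_.begin() + static_cast<std::ptrdiff_t>(frameSize);
        out.assign(bytes_.begin(), end);
        bytes_.erase(bytes_.begin(), end);
        return true;
    }

private:
    std::mutex mutex_;
    std::size_t capacity_;
    std::deque<std::uint8_t> bytes_;
};
```

Checking the free space and writing all three planes under one lock keeps a frame from being split in the buffer, which is the same reason the capture loop holds the mutex around its three writes.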

Audio capture function: void captureAudio();

void AudioCaptureProcess::captureAudio()

{

int ret = -1;

// Frame size of the encoder

int _sampleNumber = audioEncodeFrameSize;

if (_sampleNumber == 0)

_sampleNumber = 1024;

int dstNbSamples, maxDstNbSamples;

maxDstNbSamples = dstNbSamples = av_rescale_rnd(_sampleNumber,

audioEncodeCodecContext->sample_rate,

audioSourceCodecContext->sample_rate,

AV_ROUND_UP);

AVPacket packet;

av_init_packet(&packet);

AVFrame *_rawFrame = av_frame_alloc();

AVFrame *_newFrame = AllocAudioFrame(audioEncodeCodecContext, _sampleNumber);

while (1){

if (av_read_frame(audioSourceFormatContext,&packet) < 0){

av_packet_unref(&packet);

qDebug()<<"Cannot read the audio signal: av_read_frame";

continue;

}

if (packet.stream_index != 0){

av_packet_unref(&packet);

qDebug()<<"The packet read is not from the expected audio stream: av_read_frame";

continue;

}

ret = avcodec_send_packet(audioSourceCodecContext,&packet);

if (ret != 0){

av_packet_unref(&packet);

continue;

}

ret = avcodec_receive_frame(audioSourceCodecContext,_rawFrame);

if (ret != 0){

av_packet_unref(&packet);

continue;

}

dstNbSamples = av_rescale_rnd(swr_get_delay(audioSwrContext, audioSourceCodecContext->sample_rate) + _rawFrame->nb_samples,

audioEncodeCodecContext->sample_rate, audioSourceCodecContext->sample_rate, AV_ROUND_UP);

if (dstNbSamples > maxDstNbSamples)

{

av_freep(&_newFrame->data[0]);

//nb_samples*nb_channels*Bytes_sample_fmt

ret = av_samples_alloc(_newFrame->data, _newFrame->linesize, audioEncodeCodecContext->channels,

dstNbSamples, audioEncodeCodecContext->sample_fmt, 1);

if (ret < 0){

qDebug() << "av_samples_alloc failed";

return;

}

maxDstNbSamples = dstNbSamples;

audioEncodeCodecContext->frame_size = dstNbSamples;

audioEncodeFrameSize = _newFrame->nb_samples;

}

// Allocate the audio FIFO (the audio has not been converted yet)

if (audioFifoBuffer == nullptr)

audioFifoBuffer = av_audio_fifo_alloc(audioEncodeCodecContext->sample_fmt,

audioEncodeCodecContext->channels,

30 * _sampleNumber);

_newFrame->nb_samples = swr_convert(audioSwrContext, _newFrame->data, dstNbSamples,

(const uint8_t **)_rawFrame->data,

_rawFrame->nb_samples);

if (_newFrame->nb_samples < 0){

qDebug() << "audio swr_convert failed";

return;

}

int _space = av_audio_fifo_space(audioFifoBuffer);

if (_space >= _newFrame->nb_samples){

ret = av_audio_fifo_write(audioFifoBuffer, (void **)_newFrame->data, _newFrame->nb_samples);

if (ret < _newFrame->nb_samples){

qDebug() << "av_audio_fifo_write error!";

return;

}

}

av_packet_unref(&packet);

QCoreApplication::processEvents();

}

av_frame_free(&_rawFrame);

av_frame_free(&_newFrame);

}
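The `dstNbSamples` computation above scales a sample count from the source sample rate to the encoder sample rate and rounds up, so the destination buffer is never too small. The arithmetic can be sketched without FFmpeg as follows; `rescaleRoundUp` and `outputSamples` are hypothetical names, and the helper only reproduces the round-up case for non-negative inputs, not the full behavior of `av_rescale_rnd`.

```cpp
#include <cassert>
#include <cstdint>

// Computes ceil(a * b / c) for non-negative a and positive b, c.
// Assumes a * b fits in 64 bits, which holds for realistic sample counts.
std::int64_t rescaleRoundUp(std::int64_t a, std::int64_t b, std::int64_t c)
{
    assert(a >= 0 && b > 0 && c > 0);
    return (a * b + c - 1) / c;
}

// Upper bound on the samples a resampler may produce for one input frame,
// including samples still buffered inside the resampler (the "delay").
std::int64_t outputSamples(std::int64_t bufferedDelay, std::int64_t inputSamples,
                           int inputRate, int outputRate)
{
    return rescaleRoundUp(bufferedDelay + inputSamples, outputRate, inputRate);
}
```

With 1024 input samples and no buffered delay, converting 44100 Hz to 48000 Hz yields an upper bound of 1115 samples, while equal rates leave the count at 1024.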

Screen capture function: void captureScreen();

void captureScreen()

{

int ret = 0;

int number = 0;

int _lineSize = 0;

if (videoFrame == nullptr)

videoFrame = av_frame_alloc();

if (videoYUVFrame == nullptr)

videoYUVFrame = av_frame_alloc();

if (videoYUVBuffer == nullptr)

videoYUVBuffer = (uint8_t *)av_malloc(videoFrameSize);

// The output frame uses the same size as the input

av_image_fill_arrays(videoYUVFrame->data,videoYUVFrame->linesize,videoYUVBuffer,AV_PIX_FMT_YUV420P, sourceWidth, sourceHeight,1);

_lineSize = sourceWidth * sourceHeight;

AVPacket packet,outPacket;

av_init_packet(&packet);

av_init_packet(&outPacket);

while (1){

av_packet_unref(&packet);

av_packet_unref(&outPacket);

if (av_read_frame(videoSourceFormatContext,&packet) < 0)

continue;

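ret = avcodec_send_packet(videoSourceCodecContext,&packet);

if (ret < 0)

continue;

ret = avcodec_receive_frame(videoSourceCodecContext,videoFrame);

// FFmpeg returns negative error codes, so compare against AVERROR(EAGAIN), not EAGAIN

if (ret == AVERROR(EAGAIN))

continue;

else if (ret == AVERROR_EOF)

break;

else if (ret < 0){

qDebug()<<"Error during decoding";

continue; // do not scale a frame that failed to decode

}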

/* Convert the pixel format to AV_PIX_FMT_YUV420P; the size stays the same as the source */

sws_scale(videoSwsContext, (const uint8_t* const*)videoFrame->data, videoFrame->linesize, 0, sourceHeight,videoYUVFrame->data,videoYUVFrame->linesize);

/* Write the frame to the FIFO */

if (av_fifo_space(videoFifoBuffer) > videoFrameSize){

av_fifo_generic_write(videoFifoBuffer, videoYUVFrame->data[0], _lineSize, NULL);

av_fifo_generic_write(videoFifoBuffer, videoYUVFrame->data[1], _lineSize / 4, NULL);

av_fifo_generic_write(videoFifoBuffer, videoYUVFrame->data[2], _lineSize / 4, NULL);

}

number ++;

}

}
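A detail worth spelling out for the decode loop: FFmpeg reports "no frame available yet, send more input" as the negative value `AVERROR(EAGAIN)`, not as the raw errno constant `EAGAIN`. The sketch below shows the distinction in plain C++. `toAvError`, `DecodeAction`, and `classifyReceiveResult` are hypothetical names, and `toAvError` mirrors the `AVERROR()` macro only for platforms where errno constants are positive, which is the common case.

```cpp
#include <cassert>
#include <cerrno>

// Stand-in for FFmpeg's AVERROR() on platforms with positive errno values:
// the library negates the errno constant before returning it.
constexpr int toAvError(int errnoValue) { return -errnoValue; }

static_assert(toAvError(EAGAIN) < 0, "FFmpeg-style error codes are negative");

enum class DecodeAction { UseFrame, NeedMoreInput, Fail };

// Decides what a decode loop should do with a receive-frame return value.
DecodeAction classifyReceiveResult(int ret)
{
    if (ret == 0)
        return DecodeAction::UseFrame;       // a decoded frame is ready
    if (ret == toAvError(EAGAIN))
        return DecodeAction::NeedMoreInput;  // feed another packet first
    return DecodeAction::Fail;               // any other value is an error here
}
```

A comparison against the positive `EAGAIN` can never match a value returned by the decoder, so the "need more input" case would silently fall through to the code that consumes the frame.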

Audio/video muxing function: void mux();

void MuxerProcess::mux()

{

int _cts = -1;

AVFrame *_yuvFrame = av_frame_alloc();

uint8_t *_yuvBuffer = (uint8_t *)av_malloc(videoFrameSize);

av_image_fill_arrays(_yuvFrame->data, _yuvFrame->linesize, _yuvBuffer, AV_PIX_FMT_YUV420P, sourceWidth, sourceHeight, 1);

AVPacket packet;

av_init_packet(&packet);

while(1){

/* The audio FIFO is only created after capture has started */

if (audioFifoBuffer == nullptr || videoFifoBuffer == nullptr){

qDebug()<<"Audio or video FIFO not allocated yet:"<<audioFifoBuffer<<videoFifoBuffer;

continue;

}

// Compare the presentation times of the audio and video streams

_cts = av_compare_ts(videoCurrentPTS,outputFormatContext->streams[videoOutputStreamIndex]->time_base,

audioCurrentPTS,outputFormatContext->streams[audioOutputStreamIndex]->time_base);

/* If the video presentation time is less than or equal to the audio presentation time */

if (_cts <= 0){

if (av_fifo_size(videoFifoBuffer) >= videoFrameSize){

mutex.lock();

// Read one frame from the FIFO

av_fifo_generic_read(videoFifoBuffer, _yuvBuffer, videoFrameSize, NULL);

mutex.unlock();

// Compute the timestamps

packet.pts = videoFrameIndex;

packet.dts = videoFrameIndex;

av_packet_rescale_ts(&packet, videoSourceCodecContext->time_base, outputFormatContext->streams[0]->time_base);

// Set the frame parameters

_yuvFrame->pts = packet.pts;

_yuvFrame->pkt_dts = packet.pts;

_yuvFrame->width = sourceWidth;

_yuvFrame->height = sourceHeight;

_yuvFrame->format = AV_PIX_FMT_YUV420P;

videoCurrentPTS = packet.pts;

// Release the packet

av_packet_unref(&packet);

avcodec_send_frame(videoEncodeCodecContext,_yuvFrame);

avcodec_receive_packet(videoEncodeCodecContext, &packet);

av_interleaved_write_frame(outputFormatContext, &packet);

avio_flush(outputFormatContext->pb);

videoFrameIndex ++;

}

}

else{

if (av_audio_fifo_size(audioFifoBuffer) >= audioEncodeFrameSize ){

AVFrame *frame_mic = av_frame_alloc();

frame_mic->nb_samples = audioEncodeFrameSize;

frame_mic->channel_layout = audioEncodeCodecContext->channel_layout;

frame_mic->format = audioEncodeCodecContext->sample_fmt;

frame_mic->sample_rate = audioEncodeCodecContext->sample_rate;

frame_mic->pts = audioFrameIndex * audioEncodeFrameSize;

audioFrameIndex ++;

int ret = av_frame_get_buffer(frame_mic, 0);

if (ret < 0){

qDebug()<<"av_frame_get_buffer failed!"<<getErrorMessage(ret);

break;

}

mutex.lock();

ret = av_audio_fifo_read(audioFifoBuffer, (void **)frame_mic->data,audioEncodeFrameSize);

mutex.unlock();

if (ret < 0){

qDebug()<<"Cannot read data from the audio FIFO:"<<getErrorMessage(ret)<<ret;

break;

}

AVPacket pkt_out_mic;

av_init_packet(&pkt_out_mic);

ret = avcodec_send_frame(audioEncodeCodecContext, frame_mic);

if (ret != 0){

av_frame_free(&frame_mic);

av_packet_unref(&pkt_out_mic);

continue;

}

ret = avcodec_receive_packet(audioEncodeCodecContext, &pkt_out_mic);

if (ret != 0 ){

av_frame_free(&frame_mic);

av_packet_unref(&pkt_out_mic);

continue;

}

pkt_out_mic.stream_index = audioOutputStreamIndex;

audioCurrentPTS = pkt_out_mic.pts;

ret = av_interleaved_write_frame(outputFormatContext, &pkt_out_mic);

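if (ret < 0){

qDebug()<<"Audio write error! av_interleaved_write_frame";

av_frame_free(&frame_mic); // avoid leaking the frame when skipping to the next iteration

continue;

}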

av_frame_free(&frame_mic);

av_packet_unref(&pkt_out_mic);

}

}

}

av_frame_free(&_yuvFrame);

av_freep(&_yuvBuffer); // the buffer was allocated separately with av_malloc

}
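The muxer decides which stream to write next by comparing the current video and audio timestamps, each expressed in its own time base. The comparison can be sketched with integer cross-multiplication, as below. This is an illustration under stated assumptions rather than FFmpeg's implementation: `TimeBase`, `compareTimestamps`, and `videoGoesNext` are hypothetical names, and the products are assumed to fit in 64 bits, whereas `av_compare_ts` also guards against overflow.

```cpp
#include <cassert>
#include <cstdint>

// A time base is the duration of one timestamp tick, as the fraction num/den seconds.
struct TimeBase {
    int num;
    int den;
};

// Returns -1, 0, or 1 when timestamp A is earlier than, equal to, or later than B.
// Compares tsA * numA / denA with tsB * numB / denB without dividing.
int compareTimestamps(std::int64_t tsA, TimeBase tbA, std::int64_t tsB, TimeBase tbB)
{
    assert(tbA.num > 0 && tbA.den > 0 && tbB.num > 0 && tbB.den > 0);
    const std::int64_t a = tsA * tbA.num * tbB.den;
    const std::int64_t b = tsB * tbB.num * tbA.den;
    return (a < b) ? -1 : (a > b) ? 1 : 0;
}

// Same rule as the muxing loop: video is written while it is not ahead of audio.
bool videoGoesNext(std::int64_t videoPts, TimeBase videoTb,
                   std::int64_t audioPts, TimeBase audioTb)
{
    return compareTimestamps(videoPts, videoTb, audioPts, audioTb) <= 0;
}
```

For instance, video timestamp 30 in a 1/30 time base and audio timestamp 44100 in a 1/44100 time base both denote one second, so the comparison returns 0 and a video frame is written next. Alternating on this comparison keeps the two streams interleaved in presentation order in the output file.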

Full source code download: