1. Project structure

====================== The program needs to be debugged step by step =====================

I: Step 1, KafkaSpout and the driver class

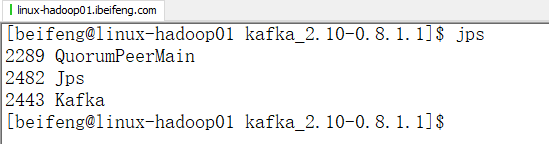

1. Services running at this point

2. Main driver class

    package com.jun.it2;

    import backtype.storm.Config;
    import backtype.storm.LocalCluster;
    import backtype.storm.StormSubmitter;
    import backtype.storm.generated.AlreadyAliveException;
    import backtype.storm.generated.InvalidTopologyException;
    import backtype.storm.generated.StormTopology;
    import backtype.storm.spout.SchemeAsMultiScheme;
    import backtype.storm.topology.IRichSpout;
    import backtype.storm.topology.TopologyBuilder;
    import storm.kafka.*;

    import java.util.UUID;

    public class WebLogStatictis {
        /**
         * Entry point.
         * @param args empty for local mode; otherwise args[0] is the topology name on the cluster
         */
        public static void main(String[] args) {
            WebLogStatictis webLogStatictis = new WebLogStatictis();
            StormTopology stormTopology = webLogStatictis.createTopology();
            Config config = new Config();
            // Cluster or local mode
            // config.setNumAckers(4);
            if (args == null || args.length == 0) {
                // Run locally
                LocalCluster localCluster = new LocalCluster();
                localCluster.submitTopology("webloganalyse", config, stormTopology);
            } else {
                // Submit to the cluster
                config.setNumWorkers(4); // number of worker processes for this topology
                try {
                    StormSubmitter.submitTopology(args[0], config, stormTopology);
                } catch (AlreadyAliveException e) {
                    e.printStackTrace();
                } catch (InvalidTopologyException e) {
                    e.printStackTrace();
                }
            }
        }

        /**
         * Builds the KafkaSpout.
         * @return the configured spout
         */
        private IRichSpout generateSpout() {
            BrokerHosts hosts = new ZkHosts("linux-hadoop01.ibeifeng.com:2181");
            String topic = "nginxlog";
            String zkRoot = "/" + topic;
            String id = UUID.randomUUID().toString();
            SpoutConfig spoutConf = new SpoutConfig(hosts, topic, zkRoot, id);
            spoutConf.scheme = new SchemeAsMultiScheme(new StringScheme()); // decode messages as strings
            spoutConf.forceFromStart = true; // read the topic from the beginning
            KafkaSpout kafkaSpout = new KafkaSpout(spoutConf);
            return kafkaSpout;
        }

        public StormTopology createTopology() {
            TopologyBuilder topologyBuilder = new TopologyBuilder();
            // Register the spout
            topologyBuilder.setSpout(WebLogConstants.KAFKA_SPOUT_ID, generateSpout());
            // Register the parser bolt, fed by the spout
            topologyBuilder.setBolt(WebLogConstants.WEB_LOG_PARSER_BOLT, new WebLogParserBolt())
                    .shuffleGrouping(WebLogConstants.KAFKA_SPOUT_ID);

            return topologyBuilder.createTopology();
        }
    }

3.WebLogParserBolt

At this stage the bolt only prints what the KafkaSpout emits, so that we can check whether the data arrives correctly.

    package com.jun.it2;

    import backtype.storm.task.OutputCollector;
    import backtype.storm.task.TopologyContext;
    import backtype.storm.topology.IRichBolt;
    import backtype.storm.topology.OutputFieldsDeclarer;
    import backtype.storm.tuple.Tuple;

    import java.util.Map;

    public class WebLogParserBolt implements IRichBolt {
        @Override
        public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {

        }

        @Override
        public void execute(Tuple tuple) {
            // StringScheme emits each Kafka message as a single field named "str"
            String webLog = tuple.getStringByField("str");
            System.out.println(webLog);
        }

        @Override
        public void cleanup() {

        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {

        }

        @Override
        public Map<String, Object> getComponentConfiguration() {
            return null;
        }
    }

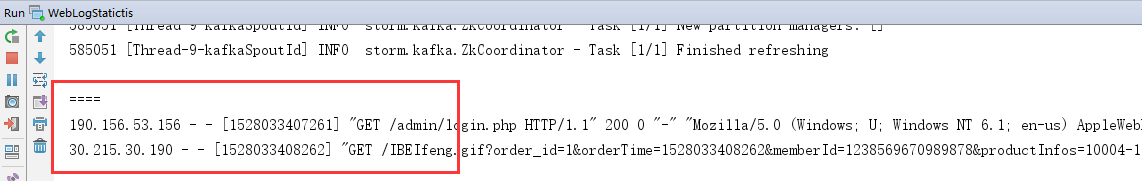

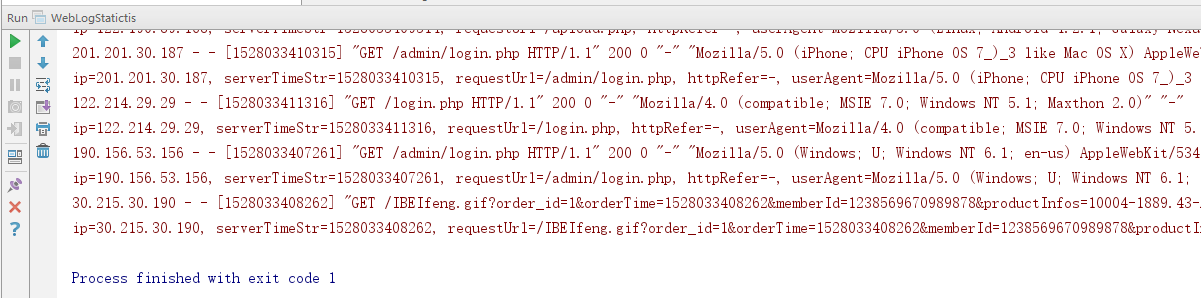

4. Run Main

The topology first consumes the data that is already in the topic.

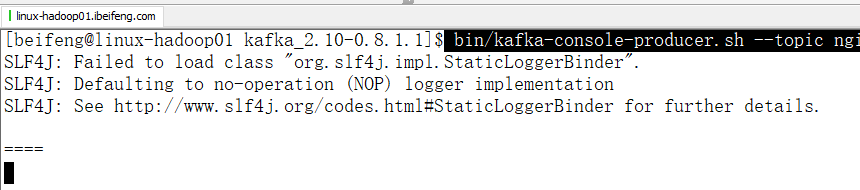

5. Run the Kafka producer

bin/kafka-console-producer.sh --topic nginxlog --broker-list linux-hadoop01.ibeifeng.com:9092

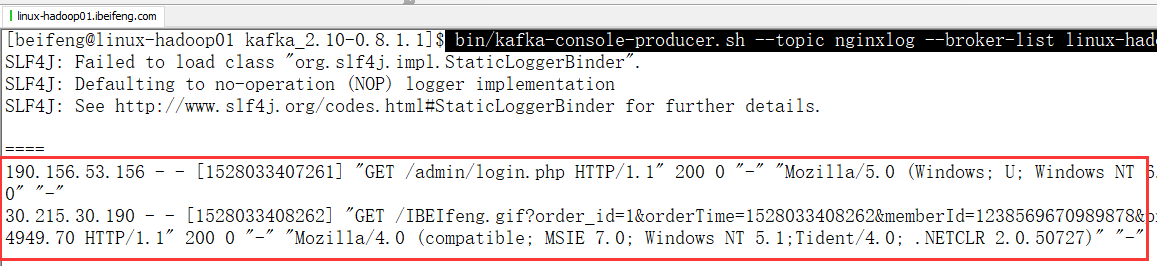

6. Paste data into the Kafka producer console
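The data pasted here is not reproduced in this post. If no real nginx log is at hand, test lines can be generated. The following is only a sketch under assumptions: the class `SampleLogLines` and the line layout are hypothetical, inferred from the regular expression used by `WebLogParserBolt` in Step 2 (an epoch-millisecond timestamp in square brackets, followed by the quoted request, status, size and three quoted fields). Adjust it to the real nginx `log_format`.

```java
import java.util.Locale;

// Hypothetical helper, not part of the original project.
class SampleLogLines {

    // Assumed layout: ip - - [epochMillis] "GET url HTTP/1.1" 200 1024 "referer" "userAgent" "-"
    static String line(String ip, long epochMillis, String url, String referer, String userAgent) {
        return String.format(Locale.ROOT,
                "%s - - [%d] \"GET %s HTTP/1.1\" 200 1024 \"%s\" \"%s\" \"-\"",
                ip, epochMillis, url, referer, userAgent);
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        // Print a few lines that can be pasted into the producer console
        System.out.println(line("192.168.1.10", now, "/index.html", "http://example.com/", "Mozilla/5.0"));
        System.out.println(line("192.168.1.11", now + 1000, "/about.html", "-", "curl/7.50.0"));
    }
}
```

Each printed line is one Kafka message; paste them one per line into the console started in the previous step.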

7. Output in the Main console

II: Step 2, parsing the log

1.WebLogParserBolt

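To verify the parsing on its own, remove two parts and uncomment one:

Remove the stream splitting.

Remove the emit calls.

Uncomment the print statements.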

2. Result

This can be verified simply by running the Main function.
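The pattern can also be exercised outside Storm before the topology is started. The following is a minimal sketch, not part of the original project: the class `WebLogPatternCheck` and the sample line are hypothetical, and the line is built only from what the pattern in `WebLogParserBolt` implies. The regular expression itself is copied unchanged from the bolt.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-alone check, not part of the original project.
class WebLogPatternCheck {

    // Same pattern as in WebLogParserBolt.prepare()
    static final Pattern PATTERN = Pattern.compile(
            "([^ ]*) [^ ]* [^ ]* \\[([\\d+]*)\\] \\\"[^ ]* ([^ ]*) [^ ]*\\\" \\d{3} \\d+ \\\"([^\"]*)\\\" \\\"([^\"]*)\\\" \\\"[^ ]*\\\"");

    // Returns {ip, serverTimeStr, requestUrl, httpRefer, userAgent}, or null if the line does not match
    static String[] parse(String line) {
        Matcher m = PATTERN.matcher(line);
        if (!m.find()) {
            return null;
        }
        return new String[] { m.group(1), m.group(2), m.group(3), m.group(4), m.group(5) };
    }

    public static void main(String[] args) {
        // Assumed sample line; replace it with a line from the real log
        String sample = "192.168.1.10 - - [1482810000000] \"GET /index.html HTTP/1.1\" 200 1024 "
                + "\"http://example.com/\" \"Mozilla/5.0 (X11; Linux x86_64)\" \"-\"";
        String[] fields = parse(sample);
        System.out.println(fields == null ? "no match" : String.join(" | ", fields));
    }
}
```

If a real log line prints `no match`, the nginx `log_format` and the pattern disagree, and the bolt would silently skip that line.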

3.WebLogParserBolt

    package com.jun.it2;

    import backtype.storm.task.OutputCollector;
    import backtype.storm.task.TopologyContext;
    import backtype.storm.topology.IRichBolt;
    import backtype.storm.topology.OutputFieldsDeclarer;
    import backtype.storm.tuple.Tuple;
    import backtype.storm.tuple.Values;

    import java.text.DateFormat;
    import java.text.SimpleDateFormat;
    import java.util.Date;
    import java.util.Map;
    import java.util.regex.Matcher;
    import java.util.regex.Pattern;

    public class WebLogParserBolt implements IRichBolt {
        private Pattern pattern;

        private OutputCollector outputCollector;

        @Override
        public void prepare(Map map, TopologyContext topologyContext, OutputCollector outputCollector) {
            // Groups: 1 = ip, 2 = server time (epoch millis), 3 = request URL, 4 = referer, 5 = user agent
            pattern = Pattern.compile("([^ ]*) [^ ]* [^ ]* \\[([\\d+]*)\\] \\\"[^ ]* ([^ ]*) [^ ]*\\\" \\d{3} \\d+ \\\"([^\"]*)\\\" \\\"([^\"]*)\\\" \\\"[^ ]*\\\"");
            this.outputCollector = outputCollector;
        }

        @Override
        public void execute(Tuple tuple) {
            String webLog = tuple.getStringByField("str");
            // Both conditions must hold; the original "||" would throw a NullPointerException on null input
            if (webLog != null && !"".equals(webLog)) {

                Matcher matcher = pattern.matcher(webLog);
                if (matcher.find()) {
                    String ip = matcher.group(1);
                    String serverTimeStr = matcher.group(2);

                    // Convert the timestamp into day / hour / minute keys
                    long timestamp = Long.parseLong(serverTimeStr);
                    Date date = new Date(timestamp);

                    DateFormat df = new SimpleDateFormat("yyyyMMddHHmm");
                    String dateStr = df.format(date);
                    String day = dateStr.substring(0, 8);
                    String hour = dateStr.substring(0, 10);
                    String minute = dateStr;

                    String requestUrl = matcher.group(3);
                    String httpRefer = matcher.group(4);
                    String userAgent = matcher.group(5);

                    // Uncomment to verify that the pattern matches correctly
                    // System.err.println(webLog);
                    // System.err.println(
                    //         "ip=" + ip
                    //         + ", serverTimeStr=" + serverTimeStr
                    //         + ", requestUrl=" + requestUrl
                    //         + ", httpRefer=" + httpRefer
                    //         + ", userAgent=" + userAgent
                    // );

                    // Split into one stream per metric
                    this.outputCollector.emit(WebLogConstants.IP_COUNT_STREAM, tuple, new Values(day, hour, minute, ip));
                    this.outputCollector.emit(WebLogConstants.URL_PARSER_STREAM, tuple, new Values(day, hour, minute, requestUrl));
                    this.outputCollector.emit(WebLogConstants.HTTPREFER_PARSER_STREAM, tuple, new Values(day, hour, minute, httpRefer));
                    this.outputCollector.emit(WebLogConstants.USERAGENT_PARSER_STREAM, tuple, new Values(day, hour, minute, userAgent));
                }
            }
            this.outputCollector.ack(tuple);
        }

        @Override
        public void cleanup() {

        }

        @Override
        public void declareOutputFields(OutputFieldsDeclarer outputFieldsDeclarer) {
            // Still empty at this step: the named streams emitted above must be declared
            // here with declareStream(...) before the emit calls can run in a topology.
        }

        @Override
        public Map<String, Object> getComponentConfiguration() {
            return null;
        }
    }
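The time handling in `execute` formats the timestamp once as `yyyyMMddHHmm` and takes prefixes of that string as the day, hour and minute keys. The following sketch isolates that step so it can be checked by hand. The class `TimeBuckets` is hypothetical, and it pins the time zone to UTC to make the output reproducible, which is an assumption: the bolt itself uses the JVM default time zone.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

// Hypothetical helper, not part of the original project.
class TimeBuckets {

    // Returns {day, hour, minute} keys, e.g. {"yyyyMMdd", "yyyyMMddHH", "yyyyMMddHHmm"}
    static String[] buckets(long epochMillis, TimeZone zone) {
        SimpleDateFormat df = new SimpleDateFormat("yyyyMMddHHmm", Locale.ROOT);
        df.setTimeZone(zone);
        String minute = df.format(new Date(epochMillis));
        // The day and hour keys are prefixes of the minute key
        return new String[] { minute.substring(0, 8), minute.substring(0, 10), minute };
    }

    public static void main(String[] args) {
        String[] b = buckets(1482810000000L, TimeZone.getTimeZone("UTC"));
        // prints: 20161227 2016122703 201612270340
        System.out.println(b[0] + " " + b[1] + " " + b[2]);
    }
}
```

Because every key shares the same prefix, a counter keyed by `hour` or `day` aggregates exactly the minutes that fall inside it.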

III: Step 3, a generic counter

1.CountKpiBolt