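1. Ensemble learning

In supervised learning we usually build a single model to make predictions. Ensemble learning instead combines several models (often weak learners) into one strong model (a strong learner) in order to get better predictions.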
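To see why combining models can help, here is a minimal simulation using only the standard library. It is a sketch under a strong assumption: each "weak learner" is just the true value plus independent Gaussian noise. The constants `TRUE_VALUE`, `N_LEARNERS`, and `N_TRIALS` are made up for the demo. Real learners make correlated errors, so the gain is usually smaller than in this idealized case.

```python
import random
import statistics

random.seed(0)

TRUE_VALUE = 10.0   # quantity every learner tries to predict (made up for this demo)
N_LEARNERS = 25     # size of the ensemble
N_TRIALS = 2000     # number of repeated experiments


def weak_prediction():
    # A stand-in "weak learner": the true value plus independent Gaussian noise.
    return TRUE_VALUE + random.gauss(0.0, 2.0)


single_sq_errors = []
ensemble_sq_errors = []
for _ in range(N_TRIALS):
    preds = [weak_prediction() for _ in range(N_LEARNERS)]
    # Squared error of one learner on its own
    single_sq_errors.append((preds[0] - TRUE_VALUE) ** 2)
    # Squared error of the averaged ensemble
    ensemble_sq_errors.append((statistics.mean(preds) - TRUE_VALUE) ** 2)

single_mse = statistics.mean(single_sq_errors)
ensemble_mse = statistics.mean(ensemble_sq_errors)
print("single learner MSE:", round(single_mse, 3))
print("ensemble MSE:", round(ensemble_mse, 3))
```

Plain averaging is the simplest way to combine models. Stacking replaces the fixed average with a second model that learns how to weight the first-layer predictions.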

2. Stacking

Stacking is one form of ensemble learning.

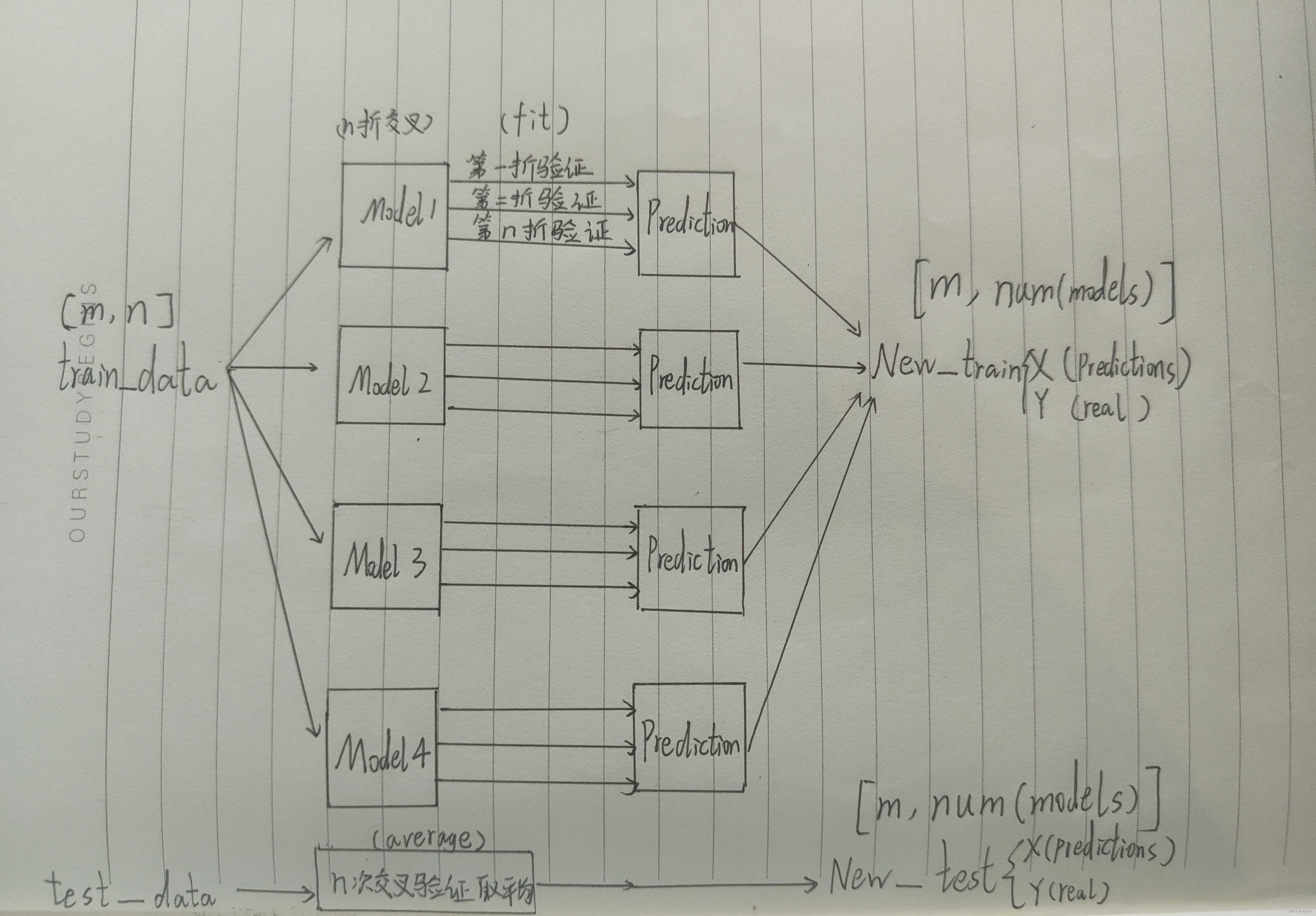

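The training set is fed to several models, and their predictions are assembled into a new dataset with one column per model.

After that, all that remains is to train a new learner on this new dataset. See the example for the details.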
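The two steps just described can be sketched with NumPy alone. Everything here is an assumption for illustration: the toy data is synthetic, and the first-layer "models" are polynomial fits of different degrees wrapped by a hypothetical helper `fit_poly`. The key point is that each row of the new dataset is predicted by a model that never saw that row during training.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data (synthetic, for illustration only)
X = rng.uniform(-3, 3, size=(200, 1))
y = 2.0 * X[:, 0] + np.sin(3 * X[:, 0]) + rng.normal(0, 0.3, size=200)


def fit_poly(degree):
    """Return a (fit, predict) pair for a polynomial of the given degree."""
    def fit(X_tr, y_tr):
        return np.polyfit(X_tr[:, 0], y_tr, degree)

    def predict(coefs, X_new):
        return np.polyval(coefs, X_new[:, 0])

    return fit, predict


base_models = [fit_poly(1), fit_poly(3), fit_poly(5)]  # hypothetical first-layer models

n_folds = 5
folds = np.array_split(rng.permutation(len(X)), n_folds)

# One column per base model; each row is filled in by a model trained without that row.
X_stack = np.zeros((len(X), len(base_models)))
for i, (fit, predict) in enumerate(base_models):
    for held_out in folds:
        train_idx = np.setdiff1d(np.arange(len(X)), held_out)
        coefs = fit(X[train_idx], y[train_idx])
        X_stack[held_out, i] = predict(coefs, X[held_out])

# Second layer: ordinary least squares (with intercept) on the stacked predictions
A = np.column_stack([X_stack, np.ones(len(X))])
weights, *_ = np.linalg.lstsq(A, y, rcond=None)
stacked_pred = A @ weights

print("X_stack shape:", X_stack.shape)
print("second-layer weights:", weights)
```

Using held-out predictions matters: if the first-layer models predicted rows they were trained on, the second layer would learn from overly optimistic inputs.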

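3. Python example

Taking the boston dataset as an example, the following shows how to use stacking on a regression problem.

Stacking can be seen as building a two-layer model; refer to the code for how the layers fit together.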

from sklearn import datasets
from sklearn.model_selection import KFold
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.ensemble import GradientBoostingRegressor as GBDT
from sklearn.ensemble import ExtraTreesRegressor as ET
from sklearn.ensemble import RandomForestRegressor as RF
from sklearn.ensemble import AdaBoostRegressor as ADA
from sklearn.metrics import r2_score
import pandas as pd
import numpy as np

# Note: load_boston was removed in scikit-learn 1.2, so this call needs an older release
boston = datasets.load_boston()
X = boston.data
Y = boston.target
df = pd.DataFrame(X, columns=boston.feature_names)
df.head()

# Split the dataset
X_train, X_test, Y_train, Y_test = train_test_split(X, Y, random_state=123)

# Standardize: fit the scaler on the training set only, then apply it to the test set
transfer = StandardScaler()
X_train = transfer.fit_transform(X_train)
X_test = transfer.transform(X_test)

print("Number of training examples: " + str(X_train.shape[0]))
print("Number of testing examples: " + str(X_test.shape[0]))
print("X_train shape: " + str(X_train.shape))
print("Y_train shape: " + str(Y_train.shape))

Define the first-layer models and train them

model_num = 4
models = [GBDT(n_estimators=100),
          RF(n_estimators=100),
          ET(n_estimators=100),
          ADA(n_estimators=100)]

# Training and test datasets for the second-layer model.
# For each first-layer model, its cross-validated (out-of-fold) predictions on the
# training set become training data, and the mean of its predictions on the test set
# across folds becomes test data.
X_train_stack = np.zeros((X_train.shape[0], len(models)))
X_test_stack = np.zeros((X_test.shape[0], len(models)))

# First-layer training
# 10-fold stacking
n_folds = 10
kf = KFold(n_splits=n_folds)

# kf.split returns the row indices of each train/validation split
for i, model in enumerate(models):
    # Shape: (number of test samples, one column per fold)
    X_stack_test_n = np.zeros((X_test.shape[0], n_folds))
    for j, (train_index, test_index) in enumerate(kf.split(X_train)):
        tr_x = X_train[train_index]
        tr_y = Y_train[train_index]
        model.fit(tr_x, tr_y)
        # Build the stacking training set from the held-out fold
        X_train_stack[test_index, i] = model.predict(X_train[test_index])
        X_stack_test_n[:, j] = model.predict(X_test)
    # Build the stacking test set by averaging over the folds
    X_test_stack[:, i] = X_stack_test_n.mean(axis=1)

# Inspect the newly built datasets
print("X_train_stack shape: " + str(X_train_stack.shape))
print("X_test_stack shape: " + str(X_test_stack.shape))

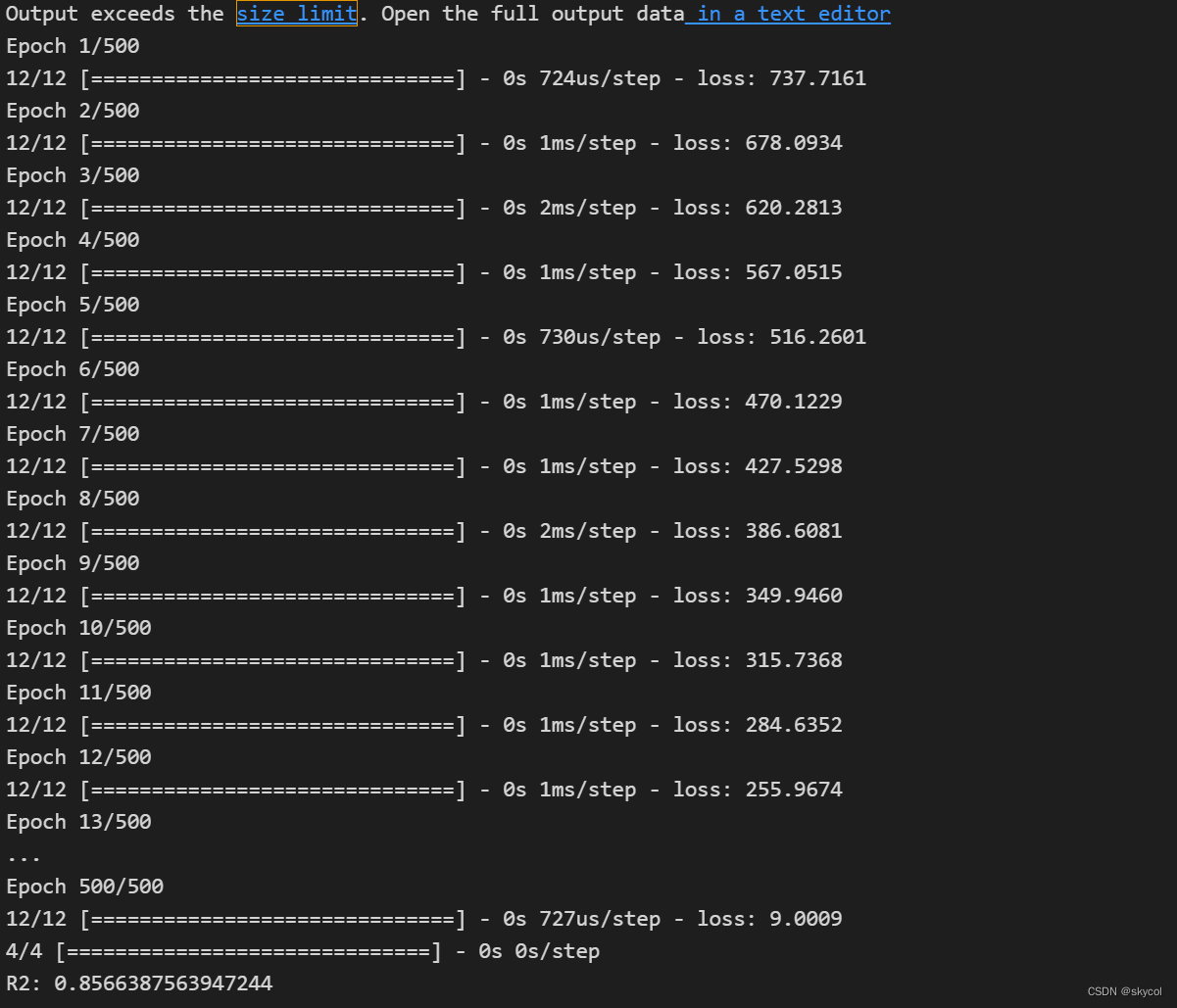

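The new dataset is now built, so we move on to the second-layer model.

The second layer is a plain linear model (Keras is used here so that the model can later be saved as an h5 file).

To guard against overfitting, this model should be kept simple.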

# Second-layer training
from keras.models import Sequential
from keras.layers import Dense

model_second = Sequential()
model_second.add(Dense(units=1, input_dim=X_train_stack.shape[1]))
model_second.compile(loss='mean_squared_error', optimizer='adam')
model_second.fit(X_train_stack, Y_train, epochs=500)
# Keras returns shape (n_samples, 1); flatten to 1-D so each pred[i] is a scalar
pred = model_second.predict(X_test_stack).ravel()
print("R2:", r2_score(Y_test, pred))

# Model evaluation
from sklearn.metrics import mean_absolute_error
Y_test = np.array(Y_test)
print("MAE: %f" % mean_absolute_error(Y_test, pred))
for i in range(len(Y_test)):
    print("Real:%f,Predict:%f" % (Y_test[i], pred[i]))

Alternatively, use the linear regression from sklearn directly:

from sklearn.linear_model import LinearRegression

model_second = LinearRegression()
model_second.fit(X_train_stack, Y_train)
pred = model_second.predict(X_test_stack)
print("R2:", r2_score(Y_test, pred))

# Model evaluation
from sklearn.metrics import mean_absolute_error
Y_test = np.array(Y_test)
print("MAE: %f" % mean_absolute_error(Y_test, pred))
for i in range(len(Y_test)):
    print("Real:%f,Predict:%f" % (Y_test[i], pred[i]))
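For comparison, scikit-learn (0.22 and later) ships a built-in `StackingRegressor` that wraps the same two-layer idea. The sketch below is not the code used above, only a rough equivalent under stated assumptions: synthetic data from `make_regression` stands in for the boston dataset, and only two first-layer models are used to keep it fast. One real difference: `StackingRegressor` refits each first-layer model on the full training set for test-time predictions, whereas the manual loop above averages the test predictions of the per-fold models.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import (GradientBoostingRegressor, RandomForestRegressor,
                              StackingRegressor)
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Synthetic data stands in for the boston dataset (an assumption of this sketch)
X, y = make_regression(n_samples=300, n_features=8, noise=10.0, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, random_state=123)

stack = StackingRegressor(
    estimators=[
        ("gbdt", GradientBoostingRegressor(n_estimators=50, random_state=0)),
        ("rf", RandomForestRegressor(n_estimators=50, random_state=0)),
    ],
    final_estimator=LinearRegression(),  # simple second layer, as in the text
    cv=5,  # folds used to produce the out-of-fold training predictions
)
stack.fit(X_tr, y_tr)

# transform() exposes the first-layer predictions that feed the second layer
first_layer_te = stack.transform(X_te)
r2 = r2_score(y_te, stack.predict(X_te))
print("first-layer output shape:", first_layer_te.shape)
print("R2:", r2)
```

Writing the loop by hand, as in the article, keeps every intermediate array visible. The built-in class is shorter and plugs into pipelines and grid search.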