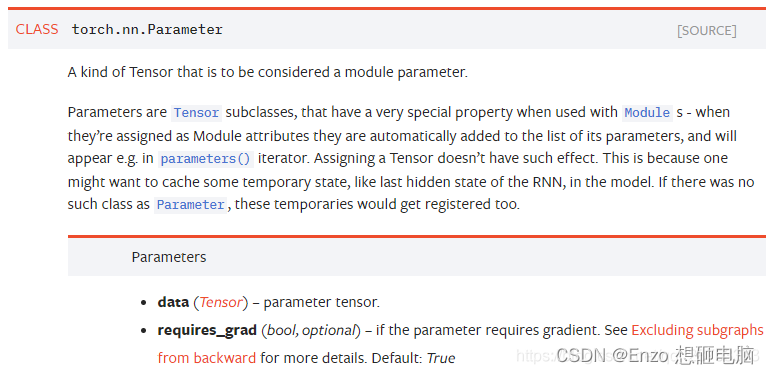

torch.nn.parameter 是 PyTorch 中的一种特殊类型的 tensor,它主要用于存储神经网络中的参数。这些参数可以被自动求导和被优化器自动更新。使用 torch.nn.Parameter 定义的tensor 会被自动添加到模型的参数列表中。

\quad

torch.nn.Parameter 是继承自 torch.Tensor 的子类,其主要作用是作为 nn.Module 中的可训练参数使用。它与 torch.Tensor 的区别就是 nn.Parameter 生成的 tensor 自动被认为是 module 的可训练参数,即加入到 parameter() 这个迭代器中去;而 module 中 非 nn.Parameter() 的普通tensor是不在 parameter 中的。

\quad

注意到,nn.Parameter 的对象的 requires_grad 属性的默认值是 True,即是可被训练的,这与 torth.Tensor 对象的默认值相反。

\quad

在 nn.Module 类中,也是使用 nn.Parameter 来对每一个 module 的参数进行初始化的。以nn.Linear为例:

import torch.nn.Parameter as Parameter

class Linear(Module):

r"""Applies a linear transformation to the incoming data: :math:`y = xA^T + b`

Args:

in_features: size of each input sample

out_features: size of each output sample

bias: If set to ``False``, the layer will not learn an additive bias.

Default: ``True``

Shape:

- Input: :math:`(N, *, H_{in})` where :math:`*` means any number of

additional dimensions and :math:`H_{in} = \text{in\_features}`

- Output: :math:`(N, *, H_{out})` where all but the last dimension

are the same shape as the input and :math:`H_{out} = \text{out\_features}`.

Attributes:

weight: the learnable weights of the module of shape

:math:`(\text{out\_features}, \text{in\_features})`. The values are

initialized from :math:`\mathcal{U}(-\sqrt{k}, \sqrt{k})`, where

:math:`k = \frac{1}{\text{in\_features}}`

bias: the learnable bias of the module of shape :math:`(\text{out\_features})`.

If :attr:`bias` is ``True``, the values are initialized from

:math:`\mathcal{U}(-\sqrt{k}, \sqrt{k})` where

:math:`k = \frac{1}{\text{in\_features}}`

Examples::

>>> m = nn.Linear(20, 30)

>>> input = torch.randn(128, 20)

>>> output = m(input)

>>> print(output.size())

torch.Size([128, 30])

"""

__constants__ = ['in_features', 'out_features']

def __init__(self, in_features, out_features, bias=True):

super(Linear, self).__init__()

self.in_features = in_features

self.out_features = out_features

self.weight = Parameter(torch.Tensor(out_features, in_features))

if bias:

self.bias = Parameter(torch.Tensor(out_features))

else:

self.register_parameter('bias', None)

self.reset_parameters()

def reset_parameters(self):

init.kaiming_uniform_(self.weight, a=math.sqrt(5))

if self.bias is not None:

fan_in, _ = init._calculate_fan_in_and_fan_out(self.weight)

bound = 1 / math.sqrt(fan_in)

init.uniform_(self.bias, -bound, bound)

def forward(self, input):

return F.linear(input, self.weight, self.bias)

def extra_repr(self):

return 'in_features={}, out_features={}, bias={}'.format(

self.in_features, self.out_features, self.bias is not None

)

可以看到, Linear 在初始化时,weights 和 bias 都是使用 torch.nn.Parameter() 来生成的:

self.weight = torch.nn.Parameter(torch.Tensor(out_features, in_features))

self.bias = torch.nn.Parameter(torch.Tensor(out_features))