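Series index

Hands-On Data Lake with Iceberg, Lesson 1: Getting started

Hands-On Data Lake with Iceberg, Lesson 2: Iceberg's underlying data format on Hadoop

Hands-On Data Lake with Iceberg, Lesson 3: Reading data from Kafka into Iceberg with SQL in the SQL client

Hands-On Data Lake with Iceberg, Lesson 4: Reading data from Kafka into Iceberg with SQL in the SQL client (upgraded to Flink 1.12.7)

Hands-On Data Lake with Iceberg, Lesson 5: Characteristics of the Hive catalog

Hands-On Data Lake with Iceberg, Lesson 6: Fixing failed writes from Kafka to Iceberg

Hands-On Data Lake with Iceberg, Lesson 7: Real-time writes to Iceberg

Hands-On Data Lake with Iceberg, Lesson 8: Integrating Hive with Iceberg

Hands-On Data Lake with Iceberg, Lesson 9: Compacting small files

Hands-On Data Lake with Iceberg, Lesson 10: Deleting snapshots

Hands-On Data Lake with Iceberg, Lesson 11: Full partitioned-table test workflow (generating data, creating tables, compaction, deleting snapshots)

Hands-On Data Lake with Iceberg, Lesson 12: What a catalog is

Hands-On Data Lake with Iceberg, Lesson 13: Metadata many times larger than the data files

Hands-On Data Lake with Iceberg, Lesson 14: Metadata compaction (solving metadata bloat over time)

Hands-On Data Lake with Iceberg, Lesson 15: Installing Spark and integrating Iceberg (jersey package conflict)

Hands-On Data Lake with Iceberg, Lesson 16: Opening the door to understanding Iceberg with Spark 3

Hands-On Data Lake with Iceberg, Lesson 17: Configuring Iceberg on Hadoop 2.7 with Spark 3 on YARN

Hands-On Data Lake with Iceberg, Lesson 18: Startup commands for the various clients that interact with Iceberg (common commands)

Hands-On Data Lake with Iceberg, Lesson 19: Flink count on Iceberg returns no result

Hands-On Data Lake with Iceberg, Lesson 20: Flink + Iceberg CDC scenario (version problems, test failed)

Hands-On Data Lake with Iceberg, Lesson 21: Flink 1.13.5 + Iceberg 0.13.1 CDC (INSERT succeeded, change operations failed)

Hands-On Data Lake with Iceberg, Lesson 22: Flink 1.13.5 + Iceberg 0.13.1 CDC (CRUD test succeeded)

Hands-On Data Lake with Iceberg, Lesson 23: Restarting Flink SQL from a checkpoint

Hands-On Data Lake with Iceberg, Lesson 24: Iceberg metadata in detail

Hands-On Data Lake with Iceberg, Lesson 25: Effects of inserts, updates, and deletes with Flink SQL running in the background

Hands-On Data Lake with Iceberg, Lesson 26: How to configure checkpoints

Hands-On Data Lake with Iceberg, Lesson 27: Restarting the Flink CDC test program after a failure: it resumes from the last checkpoint

Hands-On Data Lake with Iceberg, Lesson 28: Deploying packages missing from public repositories to the local repository

Hands-On Data Lake with Iceberg, Lesson 29: Getting the Flink jobId elegantly and efficiently

Hands-On Data Lake with Iceberg, Lesson 30: MySQL -> Iceberg: time zone issues across different clients

Hands-On Data Lake with Iceberg, Lesson 31: Using the flink-streaming-platform-web tool from GitHub to manage Flink jobs and test CDC restart scenarios

Hands-On Data Lake with Iceberg, Lesson 32: Persisting DDL statements through the Hive catalog

Hands-On Data Lake with Iceberg, Lesson 33: Upgrading Flink to 1.14 for built-in JSON function support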


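Preface

I need Flink to support something like Hive's get_json_object, but I do not want to write a custom function. What are the options?

I am currently on Flink 1.13.5, and according to the official documentation none of its built-in functions cover this. The newer Flink 1.14 does provide JSON functions, hence the urge to upgrade.

1. Flink supports JSON functions starting with 1.14

1.1 Official Flink 1.14 documentation on JSON functions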

https://nightlies.apache.org/flink/flink-docs-release-1.14/docs/dev/table/functions/systemfunctions/#json-functions

-- TRUE

SELECT JSON_EXISTS('{"a": true}', '$.a');

-- FALSE

SELECT JSON_EXISTS('{"a": true}', '$.b');

-- TRUE

SELECT JSON_EXISTS('{"a": [{ "b": 1 }]}', '$.a[0].b');

-- TRUE

SELECT JSON_EXISTS('{"a": true}', 'strict $.b' TRUE ON ERROR);

-- FALSE

SELECT JSON_EXISTS('{"a": true}', 'strict $.b' FALSE ON ERROR);

1.2 Flink 1.13 has no JSON function support

https://nightlies.apache.org/flink/flink-docs-release-1.13/docs/dev/table/functions/systemfunctions/

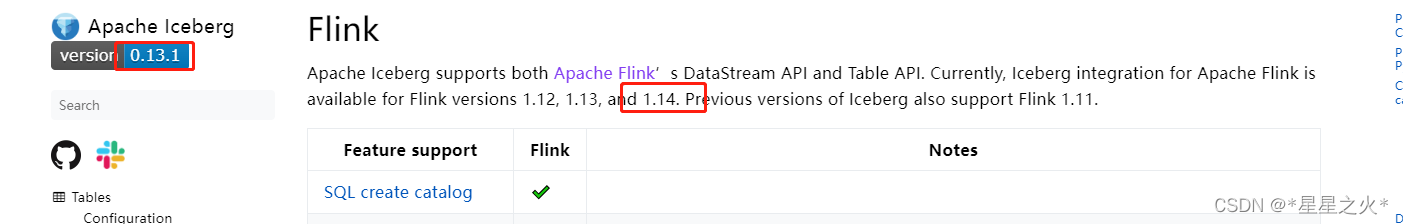

2. Does Iceberg support Flink 1.14?

2.1 Check the Iceberg website: https://iceberg.apache.org/docs/latest/flink/

Yes, Flink 1.14 is supported.

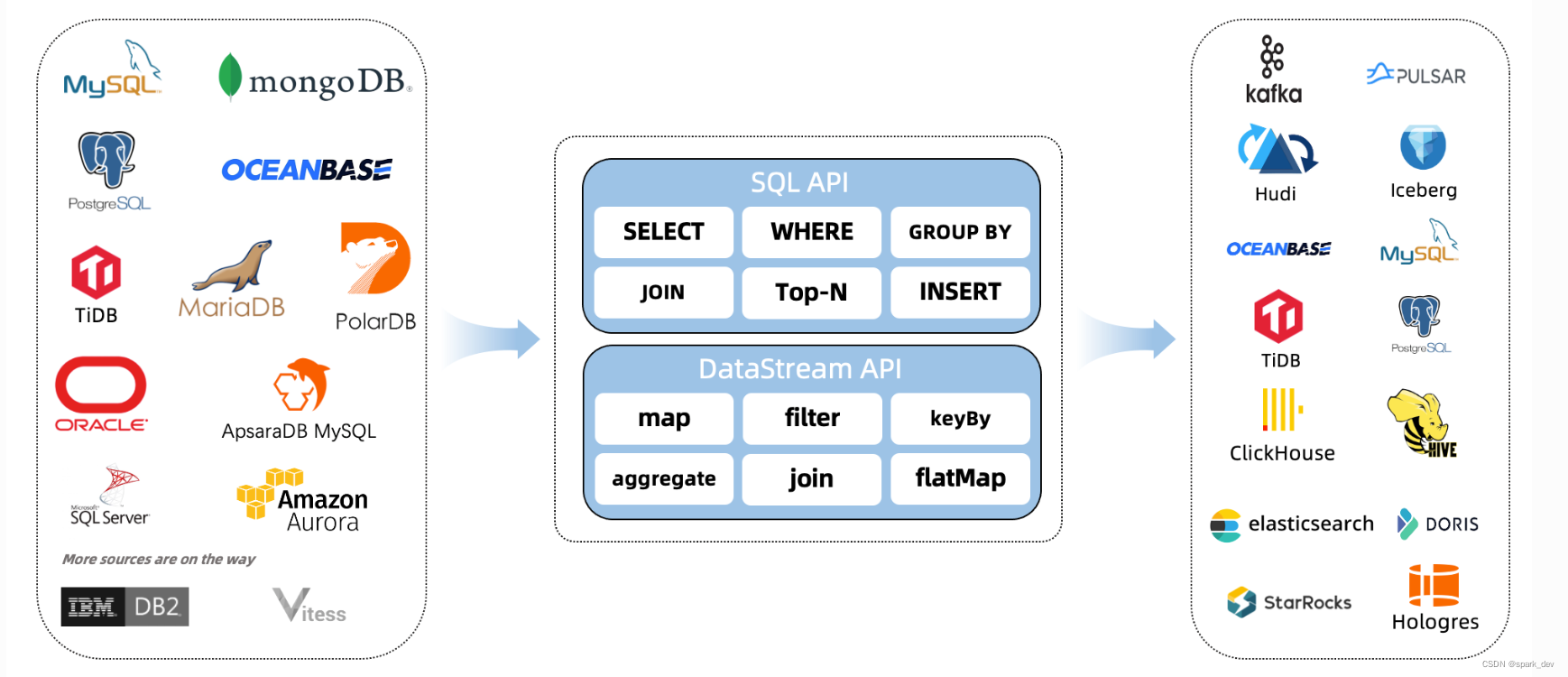


3. Flink CDC version

3.1 Official statement

Website: https://ververica.github.io/flink-cdc-connectors/master/content/about.html

3.2 Trying a build

Download the source from GitHub: https://github.com/ververica/flink-cdc-connectors

Change the versions in pom.xml:

<flink.version>1.14.4</flink.version>

<scala.binary.version>2.12</scala.binary.version>

Build output:

[INFO] --------------------------------[ jar ]---------------------------------

[WARNING] The POM for org.apache.flink:flink-table-planner-blink_2.12:jar:1.14.4 is missing, no dependency information available

[WARNING] The POM for org.apache.flink:flink-table-runtime-blink_2.12:jar:1.14.4 is missing, no dependency information available

[WARNING] The POM for org.apache.flink:flink-table-planner-blink_2.12:jar:tests:1.14.4 is missing, no dependency information available

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for flink-cdc-connectors 2.2-SNAPSHOT:

[INFO]

[INFO] flink-cdc-connectors ............................... SUCCESS [ 2.171 s]

[INFO] flink-connector-debezium ........................... SUCCESS [ 3.874 s]

[INFO] flink-cdc-base ..................................... FAILURE [ 0.085 s]

[INFO] flink-connector-test-util .......................... SKIPPED

[INFO] flink-connector-mysql-cdc .......................... SKIPPED

[INFO] flink-connector-postgres-cdc ....................... SKIPPED

[INFO] flink-connector-oracle-cdc ......................... SKIPPED

[INFO] flink-connector-mongodb-cdc ........................ SKIPPED

[INFO] flink-connector-oceanbase-cdc ...................... SKIPPED

[INFO] flink-connector-sqlserver-cdc ...................... SKIPPED

[INFO] flink-connector-tidb-cdc ........................... SKIPPED

[INFO] flink-sql-connector-mysql-cdc ...................... SKIPPED

[INFO] flink-sql-connector-postgres-cdc ................... SKIPPED

[INFO] flink-sql-connector-mongodb-cdc .................... SKIPPED

[INFO] flink-sql-connector-oracle-cdc ..................... SKIPPED

[INFO] flink-sql-connector-oceanbase-cdc .................. SKIPPED

[INFO] flink-sql-connector-sqlserver-cdc .................. SKIPPED

[INFO] flink-sql-connector-tidb-cdc ....................... SKIPPED

[INFO] flink-cdc-e2e-tests ................................ SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 6.293 s

[INFO] Finished at: 2022-05-09T16:40:18+08:00

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal on project flink-cdc-base: Could not resolve dependencies for project com.ververica:flink-cdc-base:jar:2.2-SNAPSHOT: The following artifacts could not be resolved: org.apache.flink:flink-table-planner-blink_2.12:jar:1.14.4, org.apache.flink:flink-table-runtime-blink_2.12:jar:1.14.4, org.apache.flink:flink-table-planner-blink_2.12:jar:tests:1.14.4: org.apache.flink:flink-table-planner-blink_2.12:jar:1.14.4 was not found in https://repo.maven.apache.org/maven2 during a previous attempt. This failure was cached in the local repository and resolution is not reattempted until the update interval of central has elapsed or updates are forced -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <args> -rf :flink-cdc-base

Cause:

The Table API/SQL Stack modules have been renamed in Flink 1.14. This means that flink-table-planner-blink and flink-table-runtime-blink have been renamed to flink-table-planner and flink-table-runtime. Support for the legacy flink-table-planner already ended since 1.12.

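From Flink 1.14 on, the former Blink planner is Flink's only planner, and the corresponding dependency names changed accordingly: flink-table-planner-blink -> flink-table-planner, flink-table-runtime-blink -> flink-table-runtime.

So the artifact names flink-table-planner-blink and flink-table-runtime-blink in the pom files have to be changed to the new names.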

https://www.cnblogs.com/Springmoon-venn/p/15951496.html
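The rename can be pictured as a plain text substitution over the pom.xml content. The following is only a minimal sketch and not part of the flink-cdc-connectors build: the class name `BlinkArtifactRename` and the sample `<artifactId>` line are made up for illustration, and only the two old/new artifact names come from the release note quoted above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: rewrites the pre-1.14 Blink artifact names in pom.xml text.
final class BlinkArtifactRename {
    // Old artifact name -> new artifact name, per the Flink 1.14 release note.
    private static final Map<String, String> RENAMES = new LinkedHashMap<>();
    static {
        RENAMES.put("flink-table-planner-blink", "flink-table-planner");
        RENAMES.put("flink-table-runtime-blink", "flink-table-runtime");
    }

    // Returns the pom text with every old artifact name replaced by the new one.
    static String rename(String pomText) {
        String result = pomText;
        for (Map.Entry<String, String> entry : RENAMES.entrySet()) {
            result = result.replace(entry.getKey(), entry.getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample line is an assumption about how the dependency is declared.
        String before = "<artifactId>flink-table-planner-blink_${scala.binary.version}</artifactId>";
        System.out.println(rename(before));
    }
}
```

In practice the same change is usually made by hand in the affected pom.xml files; the sketch only shows which strings change and that the Scala suffix is left untouched.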

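After the artifact rename, the build gets past flink-cdc-base and then fails while compiling the tests of flink-connector-mysql-cdc (the beginning of this output was cut off):

ct and does not override abstract method getRestoredCheckpointId() in org.apache.flink.runtime.state.ManagedInitializationContext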

[ERROR] /root/opensource/flink-cdc-connectors/flink-connector-mysql-cdc/src/test/java/com/ververica/cdc/connectors/mysql/source/reader/MySqlSourceReaderTest.java:[334,20] com.ververica.cdc.connectors.mysql.source.reader.MySqlSourceReaderTest.SimpleReaderOutput is not abstract and does not override abstract method markActive() in org.apache.flink.api.common.eventtime.WatermarkOutput

[INFO] 2 errors

[INFO] -------------------------------------------------------------

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for flink-cdc-connectors 2.2-SNAPSHOT:

[INFO]

[INFO] flink-cdc-connectors ............................... SUCCESS [ 2.092 s]

[INFO] flink-connector-debezium ........................... SUCCESS [ 3.783 s]

[INFO] flink-cdc-base ..................................... SUCCESS [ 3.000 s]

[INFO] flink-connector-test-util .......................... SUCCESS [ 0.972 s]

[INFO] flink-connector-mysql-cdc .......................... FAILURE [ 4.430 s]

[INFO] flink-sql-connector-mysql-cdc ...................... SKIPPED

[INFO] flink-cdc-e2e-tests ................................ SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 14.397 s

[INFO] Finished at: 2022-05-09T17:06:02+08:00

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.7.0:testCompile (default-testCompile) on project flink-connector-mysql-cdc: Compilation failure: Compilation failure:

[ERROR] /root/opensource/flink-cdc-connectors/flink-connector-mysql-cdc/src/test/java/com/ververica/cdc/connectors/mysql/MySqlTestUtils.java:[193,20] com.ververica.cdc.connectors.mysql.MySqlTestUtils.MockFunctionInitializationContext is not abstract and does not override abstract method getRestoredCheckpointId() in org.apache.flink.runtime.state.ManagedInitializationContext

[ERROR] /root/opensource/flink-cdc-connectors/flink-connector-mysql-cdc/src/test/java/com/ververica/cdc/connectors/mysql/source/reader/MySqlSourceReaderTest.java:[334,20] com.ververica.cdc.connectors.mysql.source.reader.MySqlSourceReaderTest.SimpleReaderOutput is not abstract and does not override abstract method markActive() in org.apache.flink.api.common.eventtime.WatermarkOutput

[ERROR] -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoFailureException

[ERROR]

Open the project in IDEA and add an empty implementation:

@Override

public void markActive() {

}

Build result:

[INFO] -------------------------------------------------------------

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for flink-cdc-connectors 2.2-SNAPSHOT:

[INFO]

[INFO] flink-cdc-connectors ............................... SUCCESS [ 2.113 s]

[INFO] flink-connector-debezium ........................... SUCCESS [ 3.916 s]

[INFO] flink-cdc-base ..................................... SUCCESS [ 3.044 s]

[INFO] flink-connector-test-util .......................... SUCCESS [ 0.962 s]

[INFO] flink-connector-mysql-cdc .......................... FAILURE [ 3.659 s]

[INFO] flink-sql-connector-mysql-cdc ...................... SKIPPED

[INFO] flink-cdc-e2e-tests ................................ SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 13.815 s

[INFO] Finished at: 2022-05-09T17:59:50+08:00

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.7.0:testCompile (default-testCompile) on project flink-connector-mysql-cdc: Compilation failure

[ERROR] /root/opensource/flink-cdc-connectors/flink-connector-mysql-cdc/src/test/java/com/ververica/cdc/connectors/mysql/MySqlTestUtils.java:[193,20] com.ververica.cdc.connectors.mysql.MySqlTestUtils.MockFunctionInitializationContext is not abstract and does not override abstract method getRestoredCheckpointId() in org.apache.flink.runtime.state.ManagedInitializationContext

[ERROR]

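For the error above, import the source into IDEA, follow IDEA's hint, and generate the unimplemented method; that is all it takes.

There are several places like this. After fixing them all, the build finally passed. Since I only need the MySQL connector, I ignored the errors in the other modules.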
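Why an empty method is enough can be shown without Flink on the classpath. The sketch below uses hypothetical stand-in interfaces (`WatermarkOutputStandIn`, `InitializationContextStandIn`) in place of the real `WatermarkOutput` and `ManagedInitializationContext`; the `OptionalLong` return type of `getRestoredCheckpointId()` is an assumption that should be checked against the Flink 1.14 source. The point is only that a concrete class must implement every abstract method of the interface version it is compiled against, and that a no-op body satisfies the compiler.

```java
import java.util.OptionalLong;

// Demo entry point; declared first so the file can be run directly.
final class StubDemo {
    public static void main(String[] args) {
        new StubOutput().markActive(); // compiles and does nothing
        System.out.println(new StubContext().getRestoredCheckpointId().isPresent());
    }
}

// Hypothetical stand-in for the 1.14 WatermarkOutput; the real interface has more methods.
interface WatermarkOutputStandIn {
    void markIdle();
    void markActive(); // the method the older test class did not implement
}

// Hypothetical stand-in for ManagedInitializationContext; return type is an assumption.
interface InitializationContextStandIn {
    OptionalLong getRestoredCheckpointId();
}

// Test double in the style of SimpleReaderOutput.
final class StubOutput implements WatermarkOutputStandIn {
    @Override
    public void markIdle() {
    }

    @Override
    public void markActive() {
        // Empty on purpose: the test never relies on idleness tracking.
    }
}

// Test double in the style of MockFunctionInitializationContext.
final class StubContext implements InitializationContextStandIn {
    @Override
    public OptionalLong getRestoredCheckpointId() {
        return OptionalLong.empty(); // models "not restored from a checkpoint"
    }
}
```

Removing either overridden method from the stubs reproduces the "is not abstract and does not override abstract method" error seen in the Maven output. Empty stubs are acceptable here because the affected classes are test helpers; production code would need real behavior.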

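4. Decision: upgrade

Build Flink from source, changing the Scala version and setting the Hadoop and Hive versions to the ones that match your own environment.

Summary

Rebuilding every component is tedious and full of pitfalls.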